Cortex Agents Go GA: Snowflake Turns BI Into Action

Snowflake made Cortex Agents generally available on November 4, 2025, shifting business intelligence from static dashboards to outcomes. See why running agents in the data plane matters and how to pilot in 30 days with confidence.

Breaking: the data warehouse becomes an agent runtime

On November 4, 2025, Snowflake announced general availability for Cortex Agents. In practical terms, the place where your governed data lives can now host autonomous assistants that plan tasks, call tools, and execute work without shipping sensitive data to a separate runtime. See the official note in the Cortex Agents GA announcement.

If you have spent years publishing dashboards to executives, this moment changes the job description. Traditional business intelligence stops at “what happened.” Agents move on to “what should happen next.” An assistant that sits next to your governed tables can read intent, plan a multi‑step approach, choose between structured and unstructured sources, generate SQL, reflect on whether the result is sound, and either present a conclusion or prepare a safe action for approval. The pattern is no longer ask and visualize; it is ask and do.

Alongside this launch, the broader ecosystem has been maturing similar ideas. We recently examined how AWS AgentCore, as an enterprise runtime, makes agent operations first‑class in cloud infrastructure, how OpenAI AgentKit turns prototypes into deployable production services, and how Atlas Agent Mode brings agent execution to the browser. Cortex Agents extend that arc by relocating agent execution directly to the data plane that already holds identity, policy, and lineage.

Why now

Three shifts converged to make this feasible and useful.

- Models learned to plan and use tools. LLMs moved from single‑shot text prediction to iterative planning, with structured tool calls and reflection. That means an agent can choose a search tool over documents, drop to SQL for facts, and ask clarifying questions when prompts are ambiguous.

- Warehouse governance matured. Column masking, row access policies, object tagging for sensitive fields, and account‑wide access history are no longer lab features. They are routine controls that analytics teams rely on every day.

- Teams are done duplicating stacks. Shipping copies of tables into a bespoke agent service requires rebuilding security, lineage, and cost management. Every duplicate pipeline becomes a compliance and accounting headache.

Cortex Agents meet that moment by running where your data already lives, so the agent’s plan, tool invocations, and outputs stay under the same identity, policy, and lineage umbrella.

From dashboards to action

Dashboards answer what happened. Agents answer what should happen next. A few concrete scenarios make the difference clear.

- Churn intervention: A customer‑success lead in Microsoft Teams asks, “Which enterprise accounts are likely to churn in the next 30 days, and who should we call?” An agent queries a risk view, searches support transcripts, ranks urgency with clear reasoning, composes a call list with suggested talking points, and posts it back to the channel. There are no spreadsheet exports and no screenshots of charts.

- Revenue close: Finance asks, “Can we close the quarter today, and what reconciliations are still open?” The agent checks ledger tables, flags three unresolved revenue recognitions, opens tasks for the correct owners, and drafts a summary email. A human approves before anything posts to the system of record.

These examples work because planning, tool use, and governance sit side by side. The agent plans, switches between tools such as Cortex Search over unstructured text and Cortex Analyst over structured data, then reflects on intermediate outputs before returning a result or proposing an action. All of that runs on Snowflake’s data plane, not on a disconnected agent server.

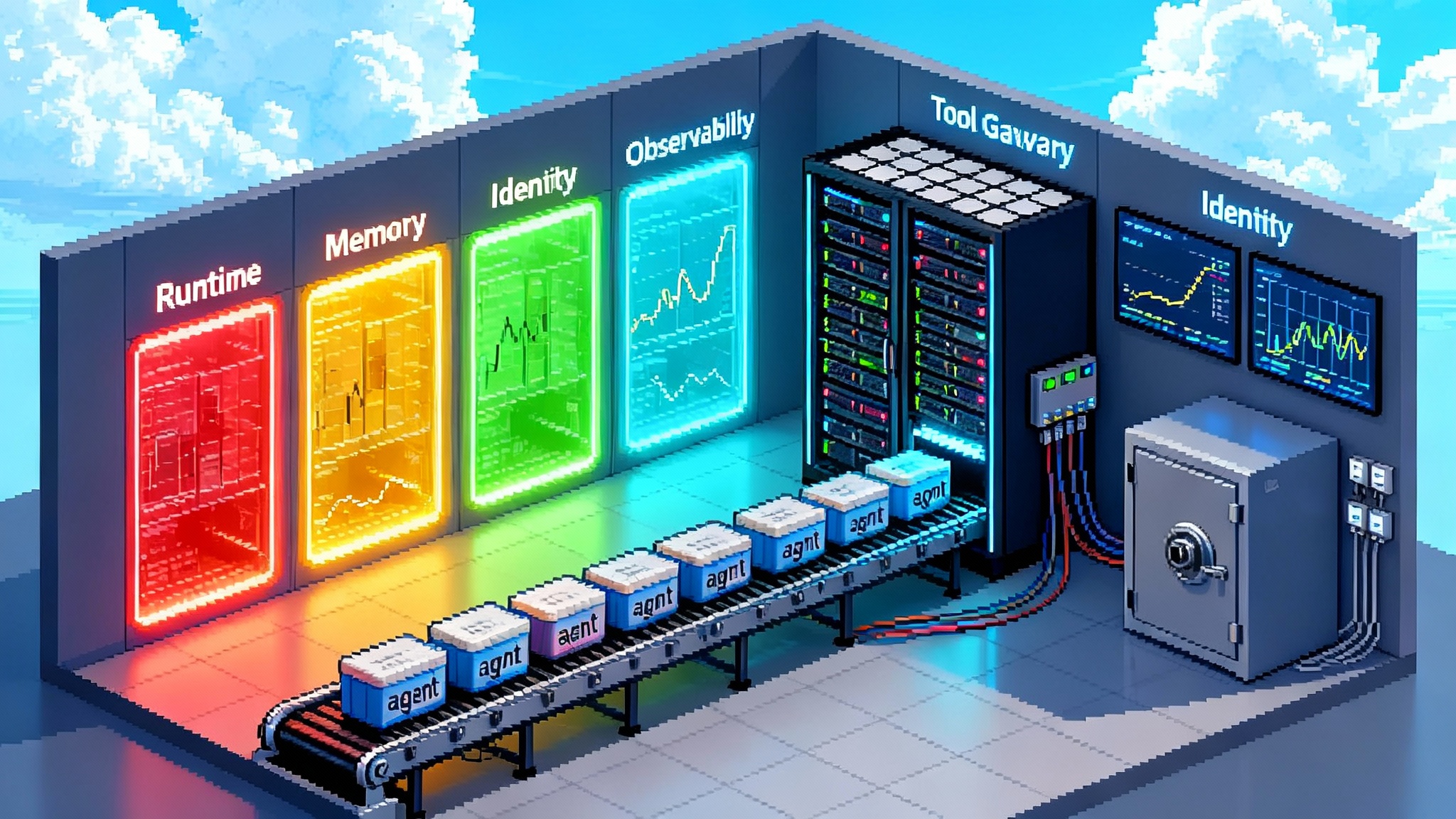

Why the data plane matters for agents

Compliance stays enforceable

When agents run inside the warehouse, they inherit the same role‑based access controls that protect analytics today. A marketing analyst who cannot see raw credit card fields in dashboards will not see them through an agent either. Column masking policies apply at query time, and row access policies ensure that the East region sales manager sees only her territory when she asks about pipeline.

The benefit is operational, not philosophical. Governance teams can map policy once and observe the effect. If a rule changes, agents inherit it immediately. There is no second policy engine to keep in sync and no blind spots created by data extracts living on a separate service.
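To make the inheritance argument concrete, here is a toy Python model of query‑time enforcement. It is a sketch, not Snowflake’s engine: the rows, role names, and policy functions are hypothetical. What it illustrates is that a dashboard and an agent calling under the same role get identical results, because policies are evaluated per query rather than per client.

```python
"""Toy model of query-time policy enforcement (hypothetical data and roles)."""

# Hypothetical sample rows; column names are illustrative only.
ROWS = [
    {"account": "Acme", "region": "East", "card_number": "4111111111111111"},
    {"account": "Globex", "region": "West", "card_number": "5500000000000004"},
]

# Hypothetical role-to-region mapping used by the row access policy.
ROLE_REGIONS = {"SALES_EAST": {"East"}, "FINANCE_ADMIN": {"East", "West"}}
# Hypothetical roles allowed to see unmasked card numbers.
UNMASKED_ROLES = {"FINANCE_ADMIN"}


def mask_card(value: str, role: str) -> str:
    """Masking policy: keep only the last four digits unless the role is privileged."""
    return value if role in UNMASKED_ROLES else "*" * 12 + value[-4:]


def row_visible(row: dict, role: str) -> bool:
    """Row access policy: a role sees only the regions mapped to it."""
    return row["region"] in ROLE_REGIONS.get(role, set())


def run_query(role: str) -> list[dict]:
    """Every caller (dashboard, agent, notebook) goes through this one path."""
    return [
        {**row, "card_number": mask_card(row["card_number"], role)}
        for row in ROWS
        if row_visible(row, role)
    ]


dashboard_view = run_query("SALES_EAST")
agent_view = run_query("SALES_EAST")  # same role, same policies, same rows
print(agent_view)
# [{'account': 'Acme', 'region': 'East', 'card_number': '************1111'}]
```

Changing `ROLE_REGIONS` or `mask_card` changes what both callers see on the next query, which is the “map policy once” property described above.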

Lineage and audit are built in

Agents leave a trail. Query history shows every statement the agent ran, access history shows which base objects were touched, and lineage views let owners trace how data moved through views and dynamic tables. For unstructured inputs, Cortex Search services are first‑class objects that can be inspected like any other asset.

This matters in regulated environments that must document who saw what, when, and why. It also matters for everyday debugging. A chat transcript is not enough. You need the plan, the tools selected, the inputs used, and each intermediate result. Cortex emits those traces in event tables that administrators can query and review in Snowsight.
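A useful habit is to check traces for completeness before relying on them in an audit. The sketch below assumes a hypothetical, simplified span layout (a list of dicts with `kind`, `tool`, `input`, and `output` keys); it is not the schema of Snowflake’s event tables, so map the fields to whatever your event table actually exposes.

```python
"""Flag gaps in an agent trace (hypothetical span layout, not Snowflake's schema)."""

REQUIRED_KINDS = {"plan", "tool_call", "response"}


def missing_trace_parts(spans: list[dict]) -> list[str]:
    """Return human-readable gaps; an empty list means the trace looks complete."""
    problems = []
    kinds = {span.get("kind") for span in spans}
    for kind in sorted(REQUIRED_KINDS - kinds):
        problems.append(f"no {kind} span")
    for i, span in enumerate(spans):
        if span.get("kind") != "tool_call":
            continue
        # Each tool call should record which tool ran, what it received, what it returned.
        for field in ("tool", "input", "output"):
            if field not in span:
                problems.append(f"tool_call span {i} lacks {field}")
    return problems


trace = [
    {"kind": "plan", "text": "query risk view, then search transcripts"},
    {"kind": "tool_call", "tool": "analyst", "input": "churn risk by account", "output": "42 rows"},
    {"kind": "tool_call", "tool": "search", "input": "cancel intent"},
    {"kind": "response", "text": "call list posted"},
]
print(missing_trace_parts(trace))  # ['tool_call span 2 lacks output']
```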

Cost control moves from art to accounting

Running agents on the data plane puts spend where you already govern it: warehouse credits and serverless features. You can meter by warehouse, tag agent workloads by cost center, enforce resource monitors that stop runaway experiments, and rely on auto‑suspend so you are not paying for idle capacity. You also avoid a hidden tax common in pilots, which is duplicate storage and egress from shipping sensitive tables to an external runtime.

The takeaway for finance teams is simple. If your analytics bills are predictable, your agent bills can be too. The same policies, tags, monitors, and dashboards apply.
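Resource monitors work on thresholds: at one percentage of a credit quota they notify, at another they suspend the warehouse. The standalone simulation below shows that idea so a team can agree on the numbers before configuring anything. The quota, thresholds, and usage figures are hypothetical.

```python
"""Simulate threshold-based spend controls for a pilot agent warehouse."""

# (percent of quota, action) pairs, checked from highest threshold to lowest.
TRIGGERS = [(100, "suspend"), (80, "notify")]


def action_for(credits_used: float, credit_quota: float) -> str:
    """Return the strongest action whose threshold has been reached."""
    pct = 100 * credits_used / credit_quota
    for threshold, action in TRIGGERS:
        if pct >= threshold:
            return action
    return "none"


daily_quota = 20.0  # hypothetical daily credit cap
for used in (5.0, 16.0, 21.5):
    print(used, action_for(used, daily_quota))
# 5.0 none
# 16.0 notify
# 21.5 suspend
```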

Early integrations put agents where people work

On November 5, 2025, Snowflake confirmed that the Cortex Agents integration for Microsoft Teams and Microsoft 365 Copilot is generally available. Users can chat with an agent directly in Teams or inside Copilot using their Snowflake security context, with behavior and access controlled by the instructions and tools you define in Snowflake. See the Teams and Copilot GA note for details.

This is not cosmetic. Putting agents into collaboration surfaces raises the odds that a forecast check or a policy lookup happens in time to change a decision. It also reduces the temptation to copy sensitive answers into ungoverned side channels.

What to evaluate before you scale

Treat agents like production workloads from day one. Use this pragmatic framework for a 30‑day pilot.

1) Planning quality

Goal: verify that the agent can break a user goal into steps, choose the right tools, and ask for clarification when needed.

- Benchmark tasks you already know how to solve. Write five end‑to‑end tasks per use case, such as “Find enterprise customers with high churn risk, justify the ranking with three signals, and propose the next action.”

- Measure precision in both plan and answer. Did it choose Cortex Search or Cortex Analyst appropriately, call the correct semantic view or search service, drop to plain SQL when necessary, and match ground truth on the final answer?

- Watch failure modes. Favor graceful recovery like “I cannot answer because the marketing_contact table is not accessible to this role” over confident but wrong responses.

- Test ambiguity handling. Submit short prompts like “top churn accounts” and confirm the agent asks “Which region and time window?” before it charges ahead.
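The checks above reduce to three numbers per use case: how often the plan used the expected tools, how often the final answer matched ground truth, and how often deliberately ambiguous prompts triggered a clarifying question. This is a minimal scoring sketch under assumptions: the `TaskResult` fields and tool labels are hypothetical stand‑ins for whatever your harness extracts from traces, and normalized exact match is a placeholder for human or rubric grading.

```python
"""Score benchmark tasks on plan precision, answer accuracy, and clarification."""
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskResult:
    task_id: str
    expected_tools: list[str]  # tool sequence a correct plan should use
    called_tools: list[str]    # tool sequence the agent actually used
    expected_answer: str = ""
    answer: str = ""
    needs_clarification: bool = False  # task is deliberately ambiguous
    asked_clarification: bool = False  # agent asked before answering


def _rate(hits: int, total: int) -> Optional[float]:
    """Fraction of hits, or None when no task falls in that bucket."""
    return hits / total if total else None


def score(results: list[TaskResult]) -> dict[str, Optional[float]]:
    clear = [r for r in results if not r.needs_clarification]
    ambiguous = [r for r in results if r.needs_clarification]
    plan_ok = sum(r.called_tools == r.expected_tools for r in clear)
    answer_ok = sum(
        r.answer.strip().lower() == r.expected_answer.strip().lower() for r in clear
    )
    clarified = sum(r.asked_clarification for r in ambiguous)
    return {
        "plan_precision": _rate(plan_ok, len(clear)),
        "answer_accuracy": _rate(answer_ok, len(clear)),
        "clarification_rate": _rate(clarified, len(ambiguous)),
    }


results = [
    TaskResult("churn-1", ["analyst", "search"], ["analyst", "search"], "Acme", "acme"),
    TaskResult("churn-2", ["analyst"], ["search"], "Globex", "Initech"),
    TaskResult("ambig-1", [], [], needs_clarification=True, asked_clarification=True),
]
print(score(results))
# {'plan_precision': 0.5, 'answer_accuracy': 0.5, 'clarification_rate': 1.0}
```

Ambiguous tasks are scored separately so that a correct clarifying question does not inflate plan or answer accuracy.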

2) Tool use safety

Goal: prevent agents from reading or writing beyond policy and avoid unintended side effects.

- Start read‑only. Use a warehouse dedicated to agent queries, grant least privilege on views and search services, and block write privileges until you have telemetry.

- Create a write sandbox. When writes are allowed, point the agent at a staging schema or a mock endpoint that records intent without touching production systems. Promote only after human approval.

- Use allowlists for tools. Expose the specific semantic models, search services, and stored procedures the agent needs. Avoid broad function catalogs and ad hoc external calls.

- Simulate policy context. Test under multiple roles. If a role that sees masked columns in dashboards receives clear text through the agent, instructions and policy are out of alignment.
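An allowlist is easiest to reason about when every tool call passes through one gate that also knows whether writes are enabled yet. The sketch below is an application‑side illustration only; the tool names and read/write tags are hypothetical, and in practice the real boundary is the set of objects granted to the agent’s role.

```python
"""Gate tool calls against an explicit allowlist (hypothetical tool names)."""

ALLOWLIST = {
    "sales_semantic_view": "read",
    "support_ticket_search": "read",
    "stage_followup_task": "write",  # writes only to a staging schema
}


class ToolNotAllowed(Exception):
    """Raised when the agent asks for a tool outside policy."""


def authorize(tool: str, writes_enabled: bool) -> None:
    """Raise unless the tool is allowlisted and its mode is permitted right now."""
    mode = ALLOWLIST.get(tool)
    if mode is None:
        raise ToolNotAllowed(f"{tool} is not on the allowlist")
    if mode == "write" and not writes_enabled:
        raise ToolNotAllowed(f"{tool} writes, and writes are disabled in this phase")


authorize("sales_semantic_view", writes_enabled=False)  # passes silently
for tool in ("stage_followup_task", "drop_table"):
    try:
        authorize(tool, writes_enabled=False)
    except ToolNotAllowed as err:
        print(err)
```

Unknown tools fail closed, which matches the advice to avoid broad function catalogs.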

3) Observability and governance

Goal: ensure you can answer who did what, with what data, and at what cost.

- Turn on agent logging and verify that you can query event tables. Confirm you see full traces of plans, tool spans, inputs, and outputs.

- Build an access‑trail query. Find every base object touched by the agent in the last day, grouped by user, and share results with your data protection officer.

- Set up a cost dashboard. Tag the agent’s warehouse and serverless features, then track daily credit burn against a budget. Alert when the burn rate exceeds your planned envelope.

- Collect human feedback. Add a simple thumbs up or down and capture comments. Qualitative signals speed iteration as much as numeric metrics.
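The access‑trail query boils down to filtering to a time window, flattening the objects each query touched, and grouping by user. The Python sketch below shows that shape on in‑memory records. The record layout is a hypothetical simplification, already filtered to the agent’s dedicated warehouse; the `base_objects` list loosely mirrors the nested entries in the base‑objects column of Snowflake’s ACCESS_HISTORY view, so verify the real layout before adapting it.

```python
"""Summarize base objects touched by an agent, grouped by user (hypothetical records)."""
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def access_trail(
    records: list[dict], now: datetime, window: timedelta = timedelta(days=1)
) -> dict[str, list[str]]:
    """Map each user to the sorted set of objects touched within the window."""
    cutoff = now - window
    touched = defaultdict(set)
    for rec in records:
        if rec["query_start"] < cutoff:
            continue  # outside the reporting window
        for obj in rec["base_objects"]:
            touched[rec["user"]].add(obj["objectName"])
    return {user: sorted(objs) for user, objs in sorted(touched.items())}


now = datetime(2025, 11, 10, 12, 0, tzinfo=timezone.utc)
records = [
    {"user": "ANA", "query_start": now - timedelta(hours=2),
     "base_objects": [{"objectName": "CRM.PUBLIC.ACCOUNTS"}]},
    {"user": "ANA", "query_start": now - timedelta(hours=5),
     "base_objects": [{"objectName": "CRM.PUBLIC.ACCOUNTS"},
                      {"objectName": "SUPPORT.PUBLIC.TICKETS"}]},
    {"user": "BO", "query_start": now - timedelta(days=3),
     "base_objects": [{"objectName": "FIN.PUBLIC.LEDGER"}]},
]
print(access_trail(records, now))
# {'ANA': ['CRM.PUBLIC.ACCOUNTS', 'SUPPORT.PUBLIC.TICKETS']}
```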

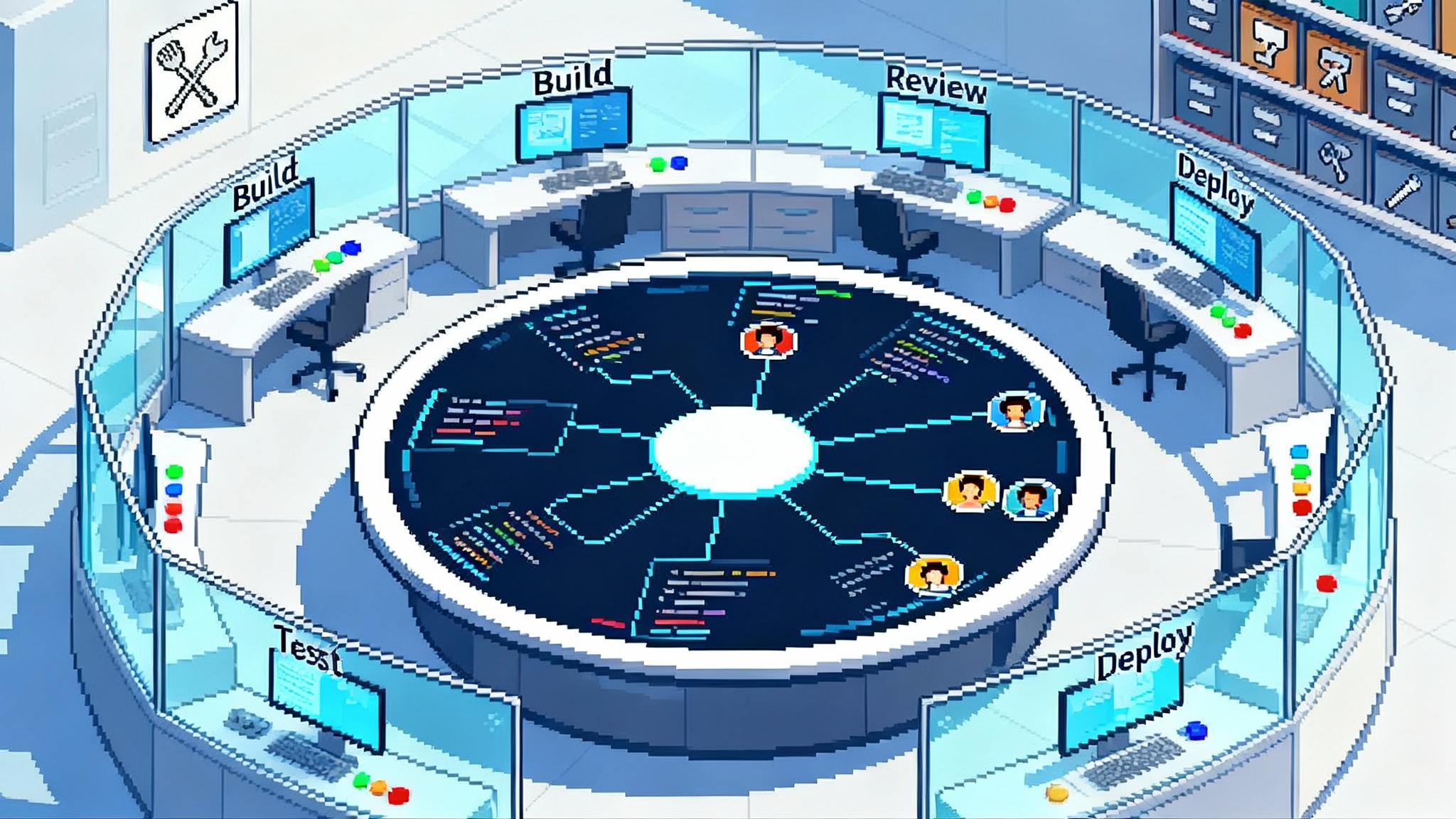

A 30‑day pilot blueprint

This blueprint assumes the data, security, and application teams partner from day one. Prove value on two high‑impact use cases, then decide whether to scale.

Week 1: scope, guardrails, and scaffolding

- Pick two use cases that are valuable but safe. Examples include forecast explanations, churn triage, policy lookup, or invoice discrepancy analysis. Avoid anything that writes to production systems.

- Define success metrics. For each use case, choose three objective measures, such as a correct top‑five list as judged by a human reviewer, an average time to answer under ten seconds, and zero policy violations.

- Prepare data and semantics. Confirm that underlying tables are clean and documented. If you use a semantic model for structured data, define the key entities, measures, and joins the agent should rely on. For documents, define a search service that indexes only sources you want the agent to quote.

- Create a pilot warehouse. Small to medium is enough for most tests. Enable auto‑suspend and tagging, and attach a resource monitor with a hard daily cap.

- Write precise agent instructions. Define tone, formatting, citation behavior, escalation criteria, and which tools to use in which situations. Include a rule that the agent must ask clarifying questions when inputs are ambiguous.

- Set up Teams access. Deploy the agent to Microsoft Teams or Copilot for a small group of pilot users. Map identities so the agent runs with the user’s role and privileges.
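Writing the success metrics down as code keeps the week‑four go or no‑go decision honest. This sketch encodes the three example measures from the list above; the per‑run field names and the `min_correct_rate` parameter are hypothetical, and the reviewer verdict still comes from a human.

```python
"""Check one use case against the example pilot success metrics."""
from statistics import mean


def meets_targets(
    runs: list[dict],
    min_correct_rate: float = 1.0,
    max_avg_seconds: float = 10.0,
) -> dict[str, bool]:
    """Return a pass/fail flag per metric."""
    correct_rate = sum(r["reviewer_says_correct"] for r in runs) / len(runs)
    return {
        "top_five_correct": correct_rate >= min_correct_rate,
        "avg_latency_ok": mean(r["latency_s"] for r in runs) < max_avg_seconds,
        "zero_violations": sum(r["policy_violations"] for r in runs) == 0,
    }


runs = [
    {"reviewer_says_correct": True, "latency_s": 4.0, "policy_violations": 0},
    {"reviewer_says_correct": True, "latency_s": 6.5, "policy_violations": 0},
    {"reviewer_says_correct": False, "latency_s": 12.0, "policy_violations": 0},
]
print(meets_targets(runs))
# {'top_five_correct': False, 'avg_latency_ok': True, 'zero_violations': True}
```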

Week 2: closed‑loop testing and red teaming

- Build a test harness. For each use case, create ten prompts with expected outcomes. Run them daily and record traces, results, and timing.

- Red team with security. Try prompts that fish for sensitive fields, attempt role escalation, or ask the agent to summarize data it should not see. Confirm masking and row rules hold, and that the agent refuses politely when required.

- Stress tool selection. Provide tasks that require switching between structured and unstructured sources. For example, “Summarize the top three churn drivers from support tickets and relate them to last quarter’s churn by segment.”

- Analyze failures. Use traces to identify where plans went wrong. Fix with better semantics, tool configuration, or instruction tuning rather than hoping the model guesses differently next time.
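The daily harness only needs to send each prompt, time the call, compare against the expected outcome, and keep a trace identifier for failure analysis. In this sketch `ask_agent` is a hypothetical callable you supply (a thin wrapper around however your team invokes the agent), `fake_agent` exists only for demonstration, and the substring check is a placeholder for richer grading.

```python
"""Minimal daily regression harness for agent prompts."""
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class CaseResult:
    prompt: str
    passed: bool
    seconds: float
    trace_id: str


def run_suite(
    cases: list[tuple[str, str]], ask_agent: Callable[[str], tuple[str, str]]
) -> list[CaseResult]:
    """Run (prompt, expected substring) cases and record outcome, timing, and trace."""
    results = []
    for prompt, expected in cases:
        start = time.perf_counter()
        answer, trace_id = ask_agent(prompt)
        elapsed = time.perf_counter() - start
        results.append(CaseResult(prompt, expected.lower() in answer.lower(), elapsed, trace_id))
    return results


def fake_agent(prompt: str) -> tuple[str, str]:
    """Hypothetical stand-in agent for demonstration only."""
    if "region" in prompt:
        return "East shows the highest churn risk.", "trace-001"
    return "Which region and time window?", "trace-002"


suite = [("churn risk by region last quarter", "East"), ("top churn accounts", "which region")]
for r in run_suite(suite, fake_agent):
    print(f"{'PASS' if r.passed else 'FAIL'} {r.seconds:.3f}s {r.trace_id} {r.prompt}")
```

Keeping the trace identifier on every result lets a failing case be traced back to the exact plan and tool spans that produced it.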

Week 3: limited production and feedback loops

- Expand to a small real‑user group. Aim for 20 to 50 users across sales, support, and finance who will actually use the agent in their daily flow.

- Keep a human in the loop for any action beyond read‑only. Use a staging schema or a task queue that requires approval for updates, emails, or ticket creation.

- Instrument feedback. Require users to rate answers and collect a short comment when they rate down. Review this daily with the data and app teams.

- Track cost and latency. Right‑size the warehouse. If you scale up to meet latency requirements, record the effect on credits and the step change in response time.
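Right‑sizing is simple arithmetic once you have measurements. The sketch below assumes the standard warehouse credit table, in which each size step doubles credits per hour. The runtimes and latencies are hypothetical placeholders for numbers from your own harness, and billing minimums and idle time before auto‑suspend are ignored.

```python
"""Compare credit cost and latency across warehouse sizes (hypothetical measurements)."""

CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8}


def credits(size: str, busy_seconds: float) -> float:
    """Credits consumed while the warehouse is busy for the given time."""
    return CREDITS_PER_HOUR[size] * busy_seconds / 3600


# Hypothetical: total busy seconds for the daily suite, and median response time.
measured = {"SMALL": (1800, 9.0), "MEDIUM": (1080, 5.4)}

for size, (busy_s, p50_s) in measured.items():
    print(f"{size}: {credits(size, busy_s):.2f} credits, median {p50_s}s")
# SMALL: 1.00 credits, median 9.0s
# MEDIUM: 1.20 credits, median 5.4s
```

In this made‑up example, moving up one size costs 20% more credits for a 40% lower median latency; recording both numbers is what turns the sizing decision into a trade‑off you can defend.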

Week 4: production readiness and go or no go

- Run a decision review. Did the pilot meet its metrics across accuracy, safety, latency, and cost stability? Where did it fall short, and can gaps be closed with configuration rather than research?

- Codify runbooks. Document common failures, handoffs to humans, and escalation paths. Define on‑call responsibilities for the app and data teams.

- Harden privileges. Convert ad hoc grants into a role hierarchy, and replace broad grants with least privilege on specific views, search services, and procedures.

- Plan staged writes. If you move beyond read‑only, decide which actions the agent can take without approval, which require approval, and which are out of scope.

- Set a scale plan. If you proceed, add a second agent for a different domain as a separate deployment, not a mix of intents in one agent. This keeps instructions crisp and access narrow.
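The staged‑writes decision is easier to review when the three tiers are an explicit table with deny‑by‑default behavior. The action names and tier assignments below are hypothetical examples of a policy a pilot team might write down, not a built‑in feature.

```python
"""Route proposed agent actions into autonomy tiers (hypothetical policy)."""
from enum import Enum


class Tier(Enum):
    AUTO = "run without approval"
    APPROVAL = "queue for human approval"
    OUT_OF_SCOPE = "refuse"


POLICY = {
    "create_staging_note": Tier.AUTO,
    "open_ticket": Tier.APPROVAL,
    "send_email": Tier.APPROVAL,
    "post_journal_entry": Tier.OUT_OF_SCOPE,
}


def route(action: str, approval_queue: list[str]) -> str:
    """Return the tier outcome; approval-tier actions are appended to the queue."""
    tier = POLICY.get(action, Tier.OUT_OF_SCOPE)  # unknown actions are refused
    if tier is Tier.APPROVAL:
        approval_queue.append(action)
    return tier.value


queue: list[str] = []
for action in ("create_staging_note", "send_email", "delete_customer"):
    print(action, "->", route(action, queue))
print("awaiting approval:", queue)
# create_staging_note -> run without approval
# send_email -> queue for human approval
# delete_customer -> refuse
# awaiting approval: ['send_email']
```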

Practical design choices that pay off

- Keep tools simple. Prefer a few clear tools over many overlapping ones. The agent should know exactly when to use a semantic view, a search service, or a stored procedure.

- Write for determinism. Give explicit decision rules, for example, “When the prompt includes the phrase ‘churn driver,’ always call search over support tickets before calling Cortex Analyst.”

- Prevent prompt drift. Freeze instructions during the pilot and change them only during scheduled updates. Daily edits erase your ability to measure improvement.

- Separate concerns by schema. Put agent artifacts in a dedicated schema, including search services, semantic views, and procedures. This makes grants, monitoring, and cost tracking simpler.
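One way to make written decision rules checkable is to encode them as a small ordered routing table and use its output as the expected plan in your benchmark. This is an illustration, not how Cortex Agents route internally; the phrases, tool labels, and clarify fallback are hypothetical, with the first rule taken from the example above.

```python
"""Encode decision rules as an ordered, deterministic routing table."""

# First match wins; each rule maps a trigger phrase to an expected tool sequence.
RULES = [
    ("churn driver", ["search", "analyst"]),  # support tickets before structured data
    ("policy", ["search"]),
    ("revenue", ["analyst"]),
]
DEFAULT_PLAN = ["clarify"]  # nothing matched: ask a clarifying question


def plan_tools(prompt: str) -> list[str]:
    """Return the expected tool order for a prompt (a copy, safe to mutate)."""
    text = prompt.lower()
    for phrase, tools in RULES:
        if phrase in text:
            return list(tools)
    return list(DEFAULT_PLAN)


for prompt in ("What are the top churn drivers this quarter?", "top accounts"):
    print(prompt, "->", plan_tools(prompt))
# What are the top churn drivers this quarter? -> ['search', 'analyst']
# top accounts -> ['clarify']
```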

What could go wrong, and how to avoid it

- Overbroad access. Granting a high‑privilege role to speed the pilot yields flattering accuracy numbers that will not hold in production. Start least privilege, accept lower initial accuracy, and improve with better semantics and tools.

- Unobserved writes. Allowing writes without a staging pattern can surprise downstream systems and trigger audit headaches. Use a clear staging schema and approval flow.

- Hidden cost drivers. Agents that query giant, unconstrained views force the warehouse to scan more than needed. Narrow views, add clustering where it helps, and create smaller task‑specific views.

- Vague ownership. If nobody owns the agent’s instructions and tool catalog, quality will drift. Assign a product owner who steers improvements and says no to random changes.

The bottom line

Cortex Agents moving to general availability makes one idea concrete. Analytics is no longer a place you go to look at charts. It is a place where work happens. When agents run in the data plane, compliance is not a promise on a slide; it is enforced at query time. Lineage is not a slide; it is a query you can run. Cost is not a surprise; it is a warehouse monitor and a tag report.

The institutions that win this shift will do three things. They will pick focused use cases that matter, they will treat agents like production services with policy and telemetry, and they will keep the agent inside the data plane so every action is governed and auditable. With a 30‑day pilot that proves value and safety, you can move from dashboards to action with confidence. The warehouse is no longer just storage and compute. It is your agent runtime.