IBM AgentOps makes watsonx Orchestrate the control tower

IBM used TechXchange on October 7 to bring AgentOps into watsonx Orchestrate, turning observability and policy into the advantage for enterprise agents. Here is what changes now and how to build a control tower that scales.

Breaking: observability becomes the moat

Two days in Orlando just reset the enterprise agent playbook. On October 7, IBM used its TechXchange stage to unveil AgentOps inside watsonx Orchestrate and to push agentic orchestration forward with governance-first features and a larger partner ecosystem. The point was sharp and overdue: the next competitive advantage will not come from bigger language models. It will come from shipping agents that are observable, controllable, and interoperable inside messy, hybrid enterprises. IBM’s own framing is explicit in its October 7 announcement about new software and infrastructure to operationalize agentic AI, with AgentOps providing lifecycle transparency and policy controls inside Orchestrate (IBM introduces AgentOps for Orchestrate).

On October 8, IBM and S&P Global followed with a customer deployment that shows the shift from demos to production. S&P Global will embed IBM’s orchestration framework into supply chain offerings and build new agents for the Orchestrate Agent Catalog. That is a second data point in as many days that observability and governance are crossing from slideware into operational reality (S&P Global supply chain deployment).

What AgentOps actually changes

Think of early enterprise agents as talented pilots flying without instruments. They might complete tasks, yet teams could not see why a decision was taken, whether policies were applied, or how tools were used along the way. AgentOps turns that cockpit back on. It inserts telemetry, guardrails, and lifecycle governance into the agent runtime so that builders can answer four practical questions every time an agent acts:

- What did it intend to do, and why?

- What data sources, tools, and prompts did it use?

- Did it stay within policy, budget, and scope?

- How well did it perform, and how do we improve it next time?

In watsonx Orchestrate, the Agent Catalog already includes many prebuilt agents and tools. With AgentOps, each can be wrapped in a standard contract for logging, policy enforcement, identity, and performance evaluation. That contract is the difference between an impressive demo and an auditable system of record.

Telemetry that matters

Most teams already track token counts and latency. Useful, but not enough. Visibility-first agents need a richer set of signals that can be replayed and compared across runs:

- Goal and plan events: every step in the plan, its rationale, and the tool invoked.

- Tool outcomes: success or failure, error type, time to completion, and downstream impact.

- Data lineage: which dataset versions were touched, what filters were applied, and whether sensitive fields were masked.

- Policy checks: which rules were evaluated, pass or fail, and the remediation taken.

- Human interventions: who escalated, what was corrected, and what feedback was captured.

- Cost envelope: predicted cost before execution versus actual spend after execution.

AgentOps-class tooling bakes these signals into a single timeline so teams can replay, debug, and compare runs. That replay is not a nice-to-have. It is how you prove compliance, tune prompts, retire brittle flows, and identify where a human should be in the loop.
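To make the single-timeline idea concrete, here is a minimal sketch of a trace event and a replay helper. The names (`TraceEvent`, `EVENT_KINDS`, `timeline`, `first_divergence`) are hypothetical and chosen for illustration; they are not an IBM or watsonx Orchestrate schema.

```python
from dataclasses import dataclass, field
from itertools import zip_longest
from typing import Any, Optional

# Hypothetical event kinds mirroring the signal list above; not a vendor schema.
EVENT_KINDS = {"plan", "tool", "data", "policy", "human", "cost"}


@dataclass(frozen=True)
class TraceEvent:
    run_id: str
    seq: int   # position within the run, assigned by the emitter
    kind: str  # one of EVENT_KINDS
    payload: dict[str, Any] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.kind not in EVENT_KINDS:
            raise ValueError(f"unknown event kind: {self.kind}")


def timeline(events: list[TraceEvent], run_id: str) -> list[TraceEvent]:
    """Return one run's events in emission order, ready for replay."""
    return sorted((e for e in events if e.run_id == run_id), key=lambda e: e.seq)


def first_divergence(events: list[TraceEvent], run_a: str, run_b: str) -> Optional[int]:
    """Return the first timeline position where two runs differ, or None if they match."""
    pairs = zip_longest(timeline(events, run_a), timeline(events, run_b))
    for position, (a, b) in enumerate(pairs):
        if a is None or b is None or (a.kind, a.payload) != (b.kind, b.payload):
            return position
    return None
```

Comparing two runs by position is the simplest form of replay: it tells a reviewer where a passing run and a failing run first part ways, which is usually the step worth debugging.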

Guardrails you can prove

Policies that only exist in wikis do not count. Builders need policy-as-code that the agent runtime enforces and records. In practical terms:

- Data access policies: column- and row-level rules, masking for personally identifiable information, and automatic denials when business-hours or geographic constraints are violated.

- Tool permissions: allowlists for high-risk tools, typed input and output contracts, and timeboxed leases so an agent cannot hold a dangerous capability forever.

- Spend controls: per task budgets and circuit breakers when cost or error thresholds are exceeded.

- Delegation rules: explicit handoff points to humans for approvals, with secure context packs so reviewers see exactly what the agent saw.

AgentOps turns each of those from documentation into runtime checks and signed logs. That is what auditors and risk teams will accept in 2026.
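As a rough illustration of "runtime checks and signed logs", the sketch below pairs a per-task spend circuit breaker with an HMAC-signed decision record. `SpendBreaker`, `signed_record`, and `verify_record` are hypothetical names, and a shared-secret HMAC is only one assumed way to make a log tamper-evident; the source does not say how AgentOps signs its logs.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass
class SpendBreaker:
    """Per-task budget that trips once cumulative spend exceeds the limit."""
    budget_usd: float
    spent_usd: float = 0.0
    tripped: bool = False

    def charge(self, amount_usd: float) -> bool:
        """Record a charge; return True if the agent may continue."""
        if self.tripped:
            return False
        self.spent_usd += amount_usd
        if self.spent_usd > self.budget_usd:
            self.tripped = True
        return not self.tripped


def signed_record(decision: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 signature so later tampering is detectable."""
    body = json.dumps(decision, sort_keys=True).encode()
    signature = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"decision": decision, "sig": signature}


def verify_record(record: dict, key: bytes) -> bool:
    """Recompute the signature over the stored decision and compare in constant time."""
    body = json.dumps(record["decision"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["sig"])
```

A caller would charge the breaker before each tool call and write one signed record per policy decision, so the audit trail shows both the rule result and proof that the entry was not edited afterward.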

A marketplace that finally fits the enterprise

The expanded Agent Catalog matters because it standardizes how agents are discovered, installed, and governed. Marketplace primitives to expect and design around now:

- Identity and attestation: every agent has a publisher identity and a verifiable build hash.

- Capability descriptors: what the agent can do, what tools it calls, and what data scopes it requires.

- Permissions manifest: a simple, reviewable list of allowed actions and resources.

- Telemetry contract: required metrics and trace events that the agent must emit.

- Policy packs: installable rules for regulated workflows like onboarding, procurement, and claims handling.

- Versioning and channels: stable, beta, and canary lanes with rollbacks that preserve state.

When a buyer knows what an agent claims to do, sees how it behaves, and can enforce policy without bespoke work, adoption accelerates. This is exactly why S&P Global building agents for the catalog is notable. It brings proprietary data and domain logic into a format the rest of the platform can govern and observe consistently. The trend rhymes with what we have seen in other ecosystems, like the way AWS is making agents deployable and how Databricks automates AgentOps pipelines.
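The marketplace primitives listed above can be sketched as a manifest plus an install-time check. This is an assumption-laden illustration: `AgentManifest`, its fields, and `install_blockers` are hypothetical and do not describe the actual Agent Catalog format.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentManifest:
    name: str
    publisher: str
    version: str
    channel: str                     # "stable", "beta", or "canary"
    build_sha256: str                # hash the publisher attests to
    allowed_actions: frozenset[str]  # the permissions manifest
    required_events: frozenset[str]  # the telemetry contract


def verify_build(manifest: AgentManifest, artifact: bytes) -> bool:
    """Check that the delivered artifact matches the attested build hash."""
    return hashlib.sha256(artifact).hexdigest() == manifest.build_sha256


def install_blockers(
    manifest: AgentManifest,
    artifact: bytes,
    emitted_events: set[str],
    granted_actions: set[str],
) -> list[str]:
    """Return human-readable reasons to refuse installation; empty means OK."""
    problems = []
    if not verify_build(manifest, artifact):
        problems.append("build hash mismatch")
    missing = manifest.required_events - emitted_events
    if missing:
        problems.append(f"missing telemetry: {sorted(missing)}")
    ungranted = manifest.allowed_actions - granted_actions
    if ungranted:
        problems.append(f"ungranted actions: {sorted(ungranted)}")
    return problems
```

Returning a list of blockers rather than a single boolean keeps the review readable: a buyer sees every reason an agent was refused in one pass.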

Interoperability beats lock-in

On paper, enterprises want a single platform. In practice, they run a patchwork of vendor systems and legacy tools. Orchestrate’s emphasis on agent coordination across many applications meets that reality. Integrations with major systems mean you can route a plan across an enterprise resource planning system, a ticketing tool, and a data warehouse without writing brittle glue code for each hop. The key shift is to treat every vendor tool as a typed capability with explicit contracts and timeboxed permissions.

Interoperability also changes the reliability math. When one tool fails, an observable agent can try an alternate path, flag the policy variance, and still complete the job without hiding the deviation. That is how agent networks will meet service-level objectives in 2026. The pattern also complements edge strategies that bring tools closer to where events occur, as seen in an edge-native agent backend.
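One way to picture the alternate-path behavior is a fallback loop that records every deviation instead of swallowing it. The sketch below is illustrative only; `ToolError`, `Outcome`, and `run_with_fallback` are hypothetical names, and real providers would be vendor clients rather than plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


class ToolError(Exception):
    """Typed failure raised by a capability implementation."""


@dataclass
class Outcome:
    result: Optional[str] = None
    used: Optional[str] = None
    variances: list[str] = field(default_factory=list)  # deviations kept for the trace


def run_with_fallback(
    providers: list[tuple[str, Callable[[str], str]]],
    request: str,
) -> Outcome:
    """Try each provider of the same capability in order, recording every failure."""
    outcome = Outcome()
    for name, call in providers:
        try:
            outcome.result = call(request)
            outcome.used = name
            return outcome
        except ToolError as exc:
            # The deviation is recorded, not hidden, so reviewers can see it later.
            outcome.variances.append(f"{name} failed: {exc}")
    return outcome  # result stays None if every provider failed
```

Because the variance list travels with the result, a run that succeeded on the second provider is still distinguishable from a clean run when service-level objectives are reviewed.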

A concrete example: onboarding that withstands audits

Imagine an HR onboarding agent that drafts offers, creates employee records, provisions access, and enrolls benefits. Without strong observability, you get occasional success and occasional panic. With AgentOps-style instrumentation, every action is attributable:

- The plan shows why the agent chose a particular benefits package and cites the relevant policy section.

- Tool calls to identity systems are logged with request and response schemas and masked values.

- A permission change that would violate segregation of duties policy is blocked with a clear error and a remediation path.

- If benefits rates change mid-run, the agent records its recalculation and requests human approval before sending an updated offer.

When auditors arrive, you do not produce a slide. You play back the timeline.

The 2026 builder’s checklist

Here is what forward-leaning teams should implement over the next twelve months. Treat this as your minimum viable discipline for agentic systems.

- Define the agent golden signals: task success, policy violations, cost delta, tool reliability, and human effort saved. Make these default dashboards so every run has a comparable scorecard.

- Standardize event schemas: adopt a small set of trace types for plan, tool, data, policy, and human events. Require these for every agent and tool so your telemetry can travel across teams.

- Build a pre-production gauntlet: scenario tests for happy paths, adversarial prompts, tool faults, slow dependencies, and stale data. Fail closed on policy issues and keep a library of known bad prompts.

- Run in shadow mode: let agents operate alongside humans for a phase, emitting decisions without acting. Compare outcomes, then graduate with thresholds, not vibes. Keep the shadow runs for regression testing later.

- Add a human-in-the-loop router: codify when to escalate and what context to pass. Capture reviewer feedback directly into agent evaluation data and fold it into nightly scoring.

- Enforce spend and safety gates: budgets per task, model, and tool. Kill switches for runaway loops. Alerts for unexpected tool fan-out or spikes in error rates.

- Adopt policy as code: store rules in version control, review them like application code, and attach them to deployments. Treat rule changes as releases with change logs and clear owners.

- Treat data lineage as first class: tag datasets and document versions. Log how the agent transformed or filtered data. Prove what information touched the decision so you can answer data residency and privacy questions.

- Design for vendor diversity: define capability interfaces so you can swap a vector store, ticketing system, or model without rewriting flows. Record compatibility tests as part of release acceptance.

- Plan your evidence: decide in advance what you will need to show a regulator, a customer, or your board. Make those artifacts part of the default run so evidence is produced automatically.
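The "graduate with thresholds, not vibes" item in the checklist above lends itself to a small worked example. The sketch below assumes a hypothetical `ShadowCase` record and illustrative default thresholds; the right numbers depend on the workflow and its risk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShadowCase:
    agent_decision: str    # what the agent would have done
    human_decision: str    # what the human actually did
    policy_violation: bool


def agreement_rate(cases: list[ShadowCase]) -> float:
    """Fraction of cases where the agent matched the human outcome."""
    return sum(c.agent_decision == c.human_decision for c in cases) / len(cases)


def ready_to_graduate(
    cases: list[ShadowCase],
    min_cases: int = 200,          # illustrative default, not a recommendation
    min_agreement: float = 0.95,   # illustrative default
    max_violations: int = 0,
) -> bool:
    """Gate the move from shadow mode to controlled writes on explicit thresholds."""
    if len(cases) < min_cases:
        return False  # not enough evidence yet
    violations = sum(c.policy_violation for c in cases)
    return agreement_rate(cases) >= min_agreement and violations <= max_violations
```

Keeping the shadow cases around after graduation gives you a regression set: rerun the agent against them after every prompt or policy change and apply the same gate.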

Why the S&P Global deployment matters

S&P Global is not adopting orchestration to admire a dashboard. It is embedding agents into supply chain workflows where mistakes are expensive and delays are public. The collaboration aims to combine proprietary risk and procurement data with IBM’s agentic orchestration so that decisions are both faster and more defensible. It also commits S&P Global to publish agents into the Agent Catalog. That move signals a broader marketplace model: trusted data owners package domain logic into governed agents that customers can install with clarity about capabilities, policies, and telemetry.

For builders, that means the catalog is not just a shelf of prompts. It is a controlled entry point into an enterprise. If your agent cannot show its work and accept external policy packs, it will not get past the door.

Designing a visibility-first architecture today

If you start now, you can ship an auditable agent program before budgeting season. Use this reference approach to set up a control plane, then iterate.

-

Control plane: a central service that issues identities for agents, signs deployments, manages permissions, and distributes policy packs. The control plane should expose an approvals API and a release channel switch.

-

Telemetry pipeline: a standardized collector that accepts agent traces, routes them to storage, and maintains a replayable timeline. Do not bury this inside a single team’s logs. Make it a product with its own roadmap and SLOs.

-

Policy engine: a fast rule evaluator that can operate inline during execution. It should annotate the trace with the rule set, the version, the evaluation result, and any remediation taken.

-

Tool proxy: a mediation layer that wraps external tools with time limited credentials, validates schemas, and emits tool level reliability metrics. If a tool fails, the proxy should offer a typed error contract that agents can act on.

-

Catalog and registry: a place to publish agents with manifests, attestations, and dependency graphs. Include risk ratings, required policy packs, and a readable permissions manifest.

-

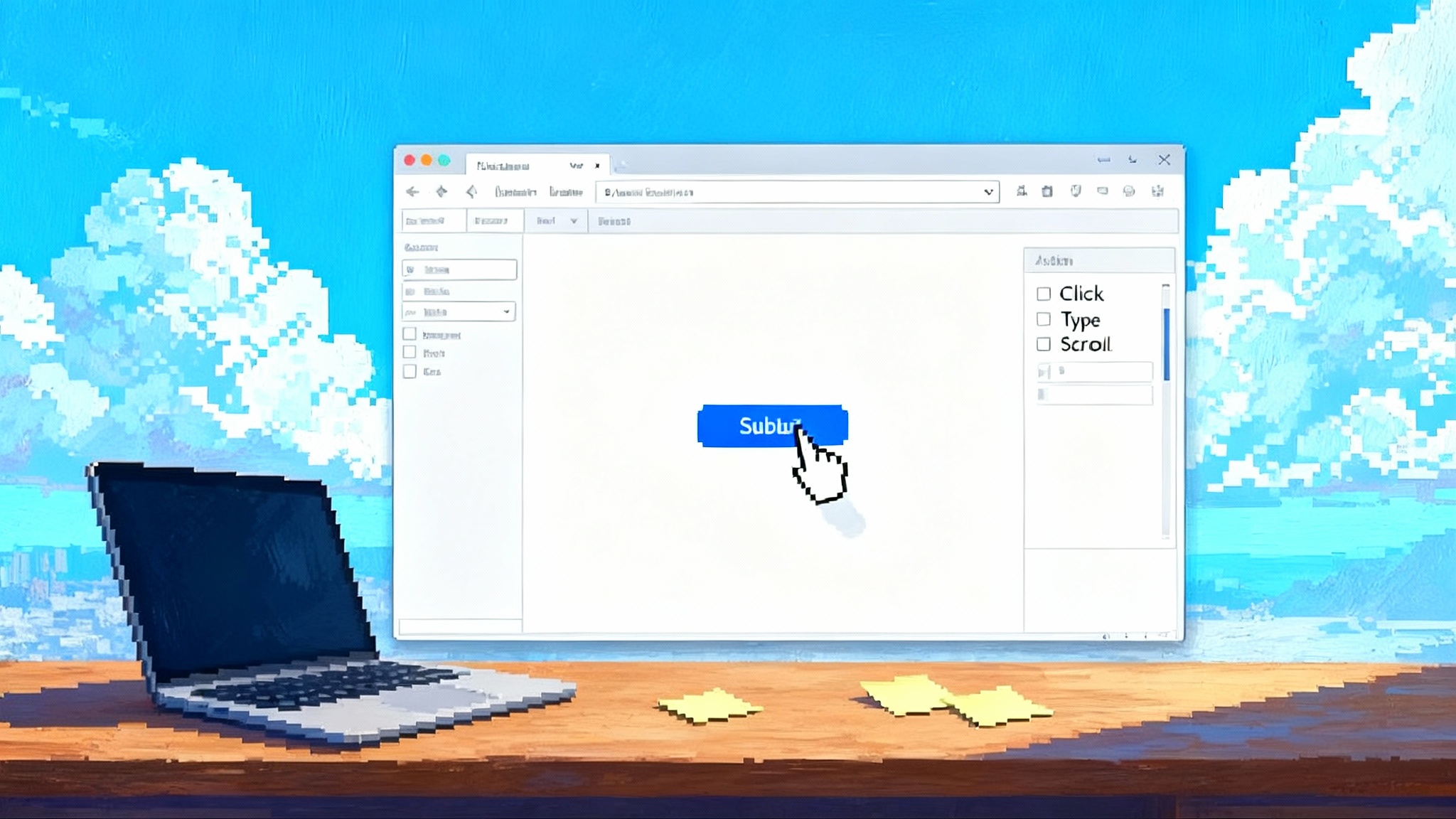

Human in the loop console: a workbench that shows the agent’s plan, the relevant context, and the policy rationale for any escalation. Make it easy to add feedback that the agent can learn from later. Keep audit logs read only.

-

Incident response runbooks: treat agents like services. When something goes wrong, you need standard steps, on call rotations, and postmortems tied to trace data.
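Of the components above, the tool proxy is the easiest to prototype. The following is a minimal sketch under stated assumptions: `ToolProxy` and `ToolFailure` are hypothetical names, the lease is modeled as a plain expiry timestamp rather than a real credential, and schema validation is reduced to required field types.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ToolFailure:
    """Typed error contract an agent can branch on."""
    code: str    # "lease_expired", "bad_input", or "tool_error"
    detail: str


@dataclass
class ToolProxy:
    name: str
    call: Callable[[dict], Any]
    required_fields: dict[str, type]      # minimal input schema
    lease_expires_at: float               # epoch seconds; stands in for a credential lease
    calls: int = 0                        # reliability counters
    failures: int = 0
    clock: Callable[[], float] = time.time

    def _check(self, args: dict) -> Optional[ToolFailure]:
        if self.clock() >= self.lease_expires_at:
            return ToolFailure("lease_expired", f"lease for {self.name} has expired")
        for key, expected in self.required_fields.items():
            if not isinstance(args.get(key), expected):
                return ToolFailure("bad_input", f"{key} must be {expected.__name__}")
        return None

    def invoke(self, args: dict) -> Any:
        """Run the tool if lease and schema checks pass; otherwise return a ToolFailure."""
        self.calls += 1
        failure = self._check(args)
        if failure is None:
            try:
                return self.call(args)
            except Exception as exc:
                failure = ToolFailure("tool_error", str(exc))
        self.failures += 1
        return failure
```

Returning a `ToolFailure` value instead of raising keeps the error contract explicit: the agent can inspect `code` to decide whether to retry, renew the lease, or escalate, and `failures / calls` gives a per-tool reliability figure for dashboards.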

Metrics that tell the truth

As your program matures, improve the fidelity of your measures. A few that separate mature teams from the rest:

- Policy adherence rate with reasons for each violation and mean time to remediation.

- Tool availability percent by tool class and the agent behaviors that compensate.

- Cost per successful outcome, not per token, with attribution to the tools that drove the spend.

- Human effort saved measured as tasks fully automated, tasks assisted, and tasks requiring escalation.

- Drift detection for prompts, tools, and data sources with automatic backtests.
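Cost per successful outcome is simple arithmetic, but it is easy to get wrong by dividing by all runs instead of successful ones. A minimal sketch, assuming each run is a dict with hypothetical `success` and `tool_costs` keys:

```python
from collections import defaultdict
from typing import Any


def cost_per_success(runs: list[dict[str, Any]]) -> dict[str, Any]:
    """Total spend across all runs divided by successful runs, with per-tool attribution.

    Each run is assumed to look like:
        {"success": bool, "tool_costs": {"tool_name": cost_in_usd, ...}}
    """
    successes = sum(1 for run in runs if run["success"])
    by_tool: dict[str, float] = defaultdict(float)
    for run in runs:
        for tool, cost in run["tool_costs"].items():
            by_tool[tool] += cost
    total = sum(by_tool.values())
    return {
        # Failed runs still cost money, so their spend counts toward the numerator.
        "cost_per_success": total / successes if successes else None,
        "by_tool": dict(by_tool),
    }
```

For example, three runs costing 0.50, 0.50, and 0.50 dollars with two successes yield 0.75 dollars per successful outcome, not 0.50, because the failed run's spend is carried by the successes.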

How this changes vendor strategy

The product race is shifting. For model makers, faster and cheaper still matter, but the growth wedge is the quality of agent instruments and the breadth of policy packs. For platform providers, the winning move is to be a neutral control tower for multi-vendor stacks. For data owners, value creation looks like trusted agents that carry proprietary insight into customers’ workflows without leaking it elsewhere.

Expect three new buying questions in 2026:

- Can we prove what the agent did and why, in a way a regulator accepts?

- Can we swap tools or models without painful rewrites or loss of observability?

- Can we constrain the agent’s behavior with policies that apply everywhere, not only in one product?

Vendors that answer yes will outgrow those that answer maybe.

The Monday plan

If you are a platform leader or head of engineering, you can move this week.

- Pick one workflow that your business cares about and that a regulator might ask about. Onboarding, procurement, or claims are common choices.

- Draft the golden signals you will measure. Wire up a simple trace schema and a dashboard before you write another prompt.

- Wrap your riskiest tools in a proxy that enforces schemas and permissions, and that emits reliability metrics.

- Implement a policy engine with three starter rules: data masking, spend ceiling, and escalation for decisions above a set dollar amount.

- Run the agent in shadow mode for two weeks. Compare results to human outcomes. Then turn on controlled writes with circuit breakers.

- Publish the agent to your internal catalog with a manifest, a version, and a telemetry contract. Treat it like a product and review releases.
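The three starter rules in the plan above fit in a few lines. This is a sketch, not a production policy engine: the `evaluate` function, the action fields, and the single Social Security number pattern are all illustrative assumptions, and real masking would cover far more than one pattern.

```python
import re

# Illustrative PII pattern only (US Social Security number format).
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text: str) -> str:
    """Starter rule 1: mask sensitive values before anything is logged or shown."""
    return SSN_PATTERN.sub("***-**-****", text)


def evaluate(action: dict, spend_ceiling_usd: float, approval_threshold_usd: float) -> dict:
    """Apply the starter rules to a proposed action and return a recorded decision.

    `action` is assumed to carry:
        text               - the content the agent wants to send
        estimated_cost_usd - predicted cost of executing the action
        amount_usd         - dollar value of the business decision itself
    """
    masked = mask_pii(action["text"])
    if action["estimated_cost_usd"] > spend_ceiling_usd:
        # Starter rule 2: fail closed when the spend ceiling would be exceeded.
        return {"decision": "deny", "rule": "spend_ceiling", "text": masked}
    if action["amount_usd"] > approval_threshold_usd:
        # Starter rule 3: route high-value decisions to a human.
        return {"decision": "escalate", "rule": "approval_threshold", "text": masked}
    return {"decision": "allow", "rule": None, "text": masked}
```

Each returned dict doubles as a trace entry: it names the rule that fired and carries only masked text, so the same record can go to the reviewer and to the audit log.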

The bottom line

IBM’s October 7 and October 8 announcements are not just a product launch. They are a clear signal that enterprise agents will be judged by their discipline, not their size. AgentOps and the expanded Orchestrate catalog are the beginnings of a control plane for agentic work. S&P Global’s move takes it out of the lab and into supply chains where visibility, policy, and interoperability are not optional. Builders who design for evidence will win 2026. Those who chase model headlines without instruments will spend it explaining what went wrong.

To go deeper on the broader market shift, compare how AWS is making agents deployable and how Databricks automates AgentOps pipelines. Together with IBM’s push, these moves define what a real control tower looks like and why observability is the moat.