Salesforce’s Voice-Native, Hybrid Agents: 90-Day Playbook

Salesforce is rolling out voice-native agents and hybrid reasoning at Dreamforce 2025. Learn what they mean for CRM, how to build an emotion-aware pilot, and a focused 90-day plan to prove ROI with full auditability.

The breakthrough: agents that can talk and truly reason

Salesforce is preparing two big upgrades to Agentforce during Dreamforce 2025 in San Francisco on October 14-16, 2025: voice-native agents and hybrid reasoning. According to the Axios report on voice agents, the emphasis will be on handling nuance and emotion during live calls, not just transcribing words. That matters because the contact center remains both the most costly and the most emotionally charged channel in CRM.

Voice-native means the agent treats the phone as a first-class channel. Hybrid reasoning means the agent blends multiple thinking styles to complete business tasks without going off the rails. Together, they promise fewer handoffs, cleaner compliance, and faster resolutions.

What voice-native really means

Most chatbots learned to handle phone calls by bolting telephony onto text-first flows. Voice-native flips that model. Speech is the primary input and output, while text understanding, retrieval, and actions happen behind the scenes. In practice, you should expect four core capabilities:

- Low-latency speech recognition with partial hypotheses so the agent can respond while the caller is still talking.

- Prosody, sentiment, and pause detection so the agent adjusts pace and tone when stress rises or when a customer shares something sensitive.

- Robust turn-taking and barge-in handling so the agent stops immediately when interrupted and resumes with context intact.

- Phone-native workflows such as IVR containment, callback offers during long queues, and post-call summaries written directly to the case.
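
The turn-taking and barge-in behavior described above can be sketched as a small state holder. This is a minimal illustration, not a Salesforce API: the `TurnManager` class and its method names are hypothetical, and a real system would drive it from streaming audio events rather than direct method calls.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TurnManager:
    """Hypothetical helper: tracks who holds the floor and what is left to say."""
    speaking: bool = False
    pending: List[str] = field(default_factory=list)  # sentences not yet spoken
    spoken: List[str] = field(default_factory=list)   # sentences already delivered

    def start_reply(self, sentences: List[str]) -> None:
        """Queue a reply and take the floor."""
        self.pending = list(sentences)
        self.speaking = True

    def speak_next(self) -> Optional[str]:
        """Emit one sentence, or None if interrupted or nothing is left."""
        if not self.speaking or not self.pending:
            self.speaking = False
            return None
        sentence = self.pending.pop(0)
        self.spoken.append(sentence)
        return sentence

    def on_caller_speech(self) -> None:
        """Barge-in: stop immediately but keep the unspoken remainder."""
        self.speaking = False

    def resume(self) -> None:
        """Pick up where the reply was cut off, with context intact."""
        if self.pending:
            self.speaking = True
```

Keeping the unspoken remainder in `pending` is what lets the agent resume after an interruption instead of restarting the whole reply.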

Critically, voice-native is about action, not chatter. If a caller says, "I ordered the wrong size; please swap it," the agent should verify the order, check policy, schedule an exchange, generate a label, and confirm via the customer’s preferred channel. Conversation is the interface, but fulfillment is the job.

A frequent question is whether you need a new contact center. Usually not. A voice-native agent should register as a virtual endpoint in your existing queue, follow the same routing rules, and log to the same case object your supervisors already audit.

What hybrid reasoning is and why it matters

Large language models excel at pattern matching and fluent dialogue, but business processes require rules, lookups, approvals, and arithmetic. Hybrid reasoning orchestrates several reasoning styles inside one agent so it can converse, decide, and act with guardrails. Think of it as a small team working together inside the agent:

- A conversational model interprets intent and maintains dialogue coherence.

- Retrieval fetches the exact policy or knowledge article from a trusted source.

- A rules and policy layer checks eligibility, limits, and compliance gates.

- A planning loop breaks goals into steps, invokes tools, and verifies outputs.

- A reflection step decides whether to ask a clarifying question or escalate to a human.
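
To make the division of labor concrete, here is a minimal sketch of the five roles as plain functions. Everything in it is an assumption made for illustration: the `POLICY` store, the `Request` fields, the 0.6 confidence threshold, and the step names are hypothetical and do not reflect how Agentforce or Atlas is implemented.

```python
from dataclasses import dataclass

# Hypothetical policy store; in practice this would come from a trusted source.
POLICY = {"return": {"window_days": 30, "max_refund": 100.0}}


@dataclass
class Request:
    intent: str               # output of the conversational layer
    days_since_purchase: int
    amount: float
    confidence: float         # how sure the conversational layer is of the intent


def retrieve_policy(intent):
    """Retrieval: fetch the exact policy for this intent, or None."""
    return POLICY.get(intent)


def check_rules(req, policy):
    """Rules layer: deterministic eligibility and limit checks."""
    if req.days_since_purchase > policy["window_days"]:
        return False, "outside return window"
    if req.amount > policy["max_refund"]:
        return False, "amount exceeds refund limit"
    return True, "eligible"


def plan(req):
    """Planning: break the goal into ordered tool steps (names are illustrative)."""
    return ["verify_order", "create_return_label", "confirm_with_customer"]


def handle(req):
    """Reflection wraps the flow: clarify, escalate, or act."""
    if req.confidence < 0.6:  # assumed threshold
        return {"outcome": "clarify", "reason": "low intent confidence", "steps": []}
    policy = retrieve_policy(req.intent)
    if policy is None:
        return {"outcome": "escalate", "reason": "no policy for intent", "steps": []}
    ok, reason = check_rules(req, policy)
    if not ok:
        return {"outcome": "escalate", "reason": reason, "steps": []}
    return {"outcome": "act", "reason": reason, "steps": plan(req)}
```

The point of the sketch is that only the first step relies on a language model; eligibility and limits are decided by ordinary code that can be tested and audited.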

Salesforce’s framing of this approach appears in its Atlas materials. For a technical look at the orchestration loop, see how the Atlas Reasoning Engine powers Agentforce. The point is fewer hallucinations, greater consistency, and decisions grounded in the customer record and policy metadata.

A simple metaphor helps: a great hotel concierge listens, asks a follow-up question, checks the registry, looks up an event, applies hotel policy, books a car, and confirms pickup time. Hybrid reasoning makes your service agent that concierge for returns, warranties, entitlements, and appointments.

Why this leap is timely for CRM leaders

- It converts high-cost, high-emotion calls into consistent workflows. When the agent hears stress, it slows down, simplifies language, and still completes the return or refund.

- It narrows the gap between conversation and action. With direct access to case, order, inventory, and policy, the agent resolves in one flow instead of bouncing between tiers.

- It improves control and compliance. Policies become machine-readable instructions rather than brittle prompt text hidden in a file. Supervisors can update rules weekly and prove exactly what the agent did and why.

- It sharpens the human handoff. When escalation happens, agents see transcript, actions tried, tools called, confidence scores, and a suggested next step, which reduces handle time and repetition for the customer.

For context on the broader market shift, see how the enterprise AI control plane is emerging and how CX platforms like Zendesk have switched toward autonomous resolution. These patterns signal the same direction: conversation plus controlled action.

A grounded blueprint: how a voice-native, hybrid agent works

Here is a typical signal flow for a service use case:

- A call lands on the virtual agent, which listens and transcribes in real time.

- The system detects topic and sentiment, then retrieves a policy bundle for that topic.

- The planning loop proposes a sequence of actions, calls tools to read or update records, and checks results.

- If a risky step appears, the agent asks a targeted confirmation or routes to a human.

- The agent narrates what it is doing in plain language to build trust and reduce confusion.

- Every step is logged in an immutable audit trail: audio snippets, transcript segments, tools called, parameters used, returned values, and reasons for decisions.

- The agent summarizes the outcome in the case, tags exceptions for review, and triggers any post-call workflow such as a satisfaction survey.

If you already run Service Cloud, this blueprint maps mostly to objects you have: Case, Contact, Order, Entitlement, and Knowledge. You add a policy store, a tool registry that exposes safe actions, and a reasoning engine that orchestrates them.
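
One way to approximate the audit trail in the signal flow above is a hash-chained log, where each entry includes the hash of the previous one. This is a sketch under stated assumptions: the `AuditTrail` class and its field names are hypothetical, and hash chaining makes tampering detectable rather than impossible, so true immutability still depends on write-once storage and access controls.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body):
    """Stable SHA-256 over the entry body (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Hypothetical append-only log; each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, step, detail):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"step": step, "detail": detail, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {"step": entry["step"], "detail": entry["detail"], "prev": entry["prev"]}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

A supervisor tool could call `verify()` before exporting a call record for compliance review.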

The 90-day playbook to land results by Q1 2026

The objective is a production pilot that proves emotion-aware containment with clean handoffs, full compliance logging, and a credible ROI model. Break the quarter into three sprints.

Days 0-30: Scope, data, and governance

- Choose one call reason with measurable value. Examples include exchanges or returns, appointment scheduling, warranty verification, billing clarification, or order status with remediation. Avoid legal disputes and edge cases in the first pilot.

- Define success with numbers. Set targets for containment rate, average handle time, human transfers, first contact resolution, customer satisfaction, and policy violations. Sample first-pilot targets: 25 to 35 percent containment, a 15 to 25 percent reduction in handle time on escalations, and a 10-point CSAT lift for the piloted reason code.

- Build governance guardrails. Create a policy catalog that maps each reason to constraints: maximum refund, allowed actions, data fields the agent can read or write, and cases that must escalate. Implement real-time redaction for payment and sensitive data, plus an audit log that stores transcripts and tool calls with retention that matches legal policy.

- Prepare the knowledge base. Identify the three to five documents the agent must cite verbatim, then convert them into structured snippets with versioning. Outdated knowledge is a leading cause of agent mistakes, so assign an owner and run a weekly review.

- Involve Legal, Security, and the contact center supervisor early. Agree on escalation ladders and the exact language used for consent and policy decisions.
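
The policy catalog from the governance step can be expressed as data plus one authorization check. This is a minimal sketch: the `ReasonPolicy` fields mirror the constraints listed above, but the class, the `CATALOG` contents, the flag names, and the three return values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReasonPolicy:
    max_refund: float
    allowed_actions: frozenset
    readable_fields: frozenset
    writable_fields: frozenset
    must_escalate: frozenset   # case flags that always go to a human


# Illustrative catalog with a single reason code.
CATALOG = {
    "returns": ReasonPolicy(
        max_refund=100.0,
        allowed_actions=frozenset({"create_return_label", "issue_refund"}),
        readable_fields=frozenset({"Order.Status", "Order.Total"}),
        writable_fields=frozenset({"Case.Status"}),
        must_escalate=frozenset({"legal_dispute", "suspected_fraud"}),
    )
}


def authorize(reason, action, amount=0.0, flags=()):
    """Return 'allow', 'deny', or 'escalate' for a proposed action."""
    policy = CATALOG.get(reason)
    if policy is None:
        return "escalate"                      # unknown reason: never guess
    if set(flags) & policy.must_escalate:
        return "escalate"
    if action not in policy.allowed_actions:
        return "deny"
    if amount > policy.max_refund:
        return "escalate"                      # over the limit needs a human
    return "allow"
```

Because the catalog is data, Legal and the supervisor can review a diff of it each week without reading prompts.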

Days 31-60: Design and build the pilot agent

- Design conversational flows that start simple, then branch only when necessary. For each branch, write positive and negative tests, including interruptions, background noise, and accent variation.

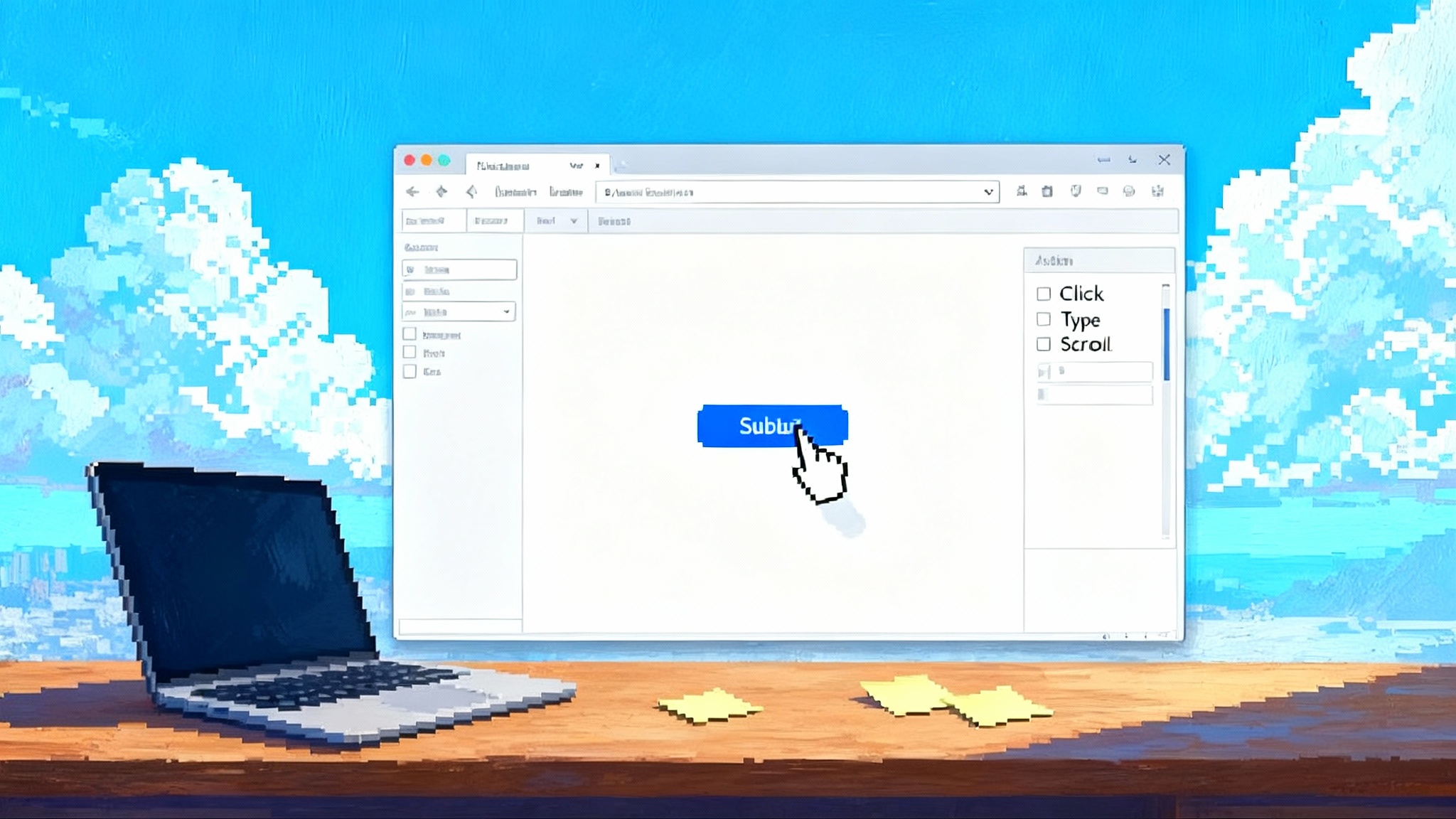

- Wire up safe tools. Start with read-only checks and constrained actions: create return label, reserve appointment slot, adjust an order line, apply credit under a limit. Each tool should declare inputs, outputs, and preconditions. If a precondition fails, the agent explains why and offers an alternative.

- Implement emotion and intent strategy. Define specific cues for empathy adjustments, such as slower speech after long pauses or a brief apology when sentiment dips. Emotion handling is about tone and pacing, not therapy. Keep it concrete: acknowledge, confirm, solve.

- Generate and review audit trails. Supervisors should see a live view that includes transcript segments, tools called, and confidence notes. Add a one-click button to escalate and annotate a case when something looks off.

- Dry-run internally. Have staff call from noisy mobile phones and landlines. Test mid-sentence course corrections. Review five failure cases for every success case, then tune policies or tools before exposing real customers.
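
The "safe tools" idea above, where each tool declares inputs, outputs, and preconditions, might look like the following. It is a sketch with hypothetical names: `Tool`, `apply_credit`, and the 25-dollar `CREDIT_LIMIT` are assumptions for illustration, not part of any Salesforce SDK.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

CREDIT_LIMIT = 25.0  # assumed limit for the pilot


@dataclass
class Tool:
    name: str
    inputs: Tuple[str, ...]
    outputs: Tuple[str, ...]
    preconditions: List[Tuple[str, Callable[[dict], bool]]]  # (description, check)
    run: Callable[[dict], dict]

    def invoke(self, args: dict) -> dict:
        """Validate inputs and preconditions before running the action."""
        missing = [name for name in self.inputs if name not in args]
        if missing:
            return {"ok": False, "explain": "missing inputs: " + ", ".join(missing)}
        for description, check in self.preconditions:
            if not check(args):
                # The explanation is what the agent can relay to the caller.
                return {"ok": False, "explain": "precondition failed: " + description}
        return {"ok": True, **self.run(args)}


apply_credit = Tool(
    name="apply_credit",
    inputs=("order_id", "amount"),
    outputs=("credit_id",),
    preconditions=[
        ("amount is positive", lambda a: a["amount"] > 0),
        ("amount within limit", lambda a: a["amount"] <= CREDIT_LIMIT),
    ],
    # Stand-in for the real write; a production tool would call the CRM here.
    run=lambda a: {"credit_id": "CR-" + a["order_id"]},
)
```

When a precondition fails, the returned `explain` text gives the agent something specific to say before it offers an alternative or escalates.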

Days 61-90: Limited production and ROI tracking

- Go live to 5 to 10 percent of traffic for the chosen reason code during staffed hours so humans can step in instantly.

- Set thresholds that force a handoff. Examples include more than three repeats of the same question, repeated low-confidence retrieval, or a detected policy conflict.

- Measure every call. Capture time to first action, total duration, sentiment progression, containment outcome, and a human quality score if it escalated. Compare against a control group handled by humans.

- Hold weekly reviews. Fix policy gaps and tool errors quickly. If a policy forces too many escalations, either raise the limit carefully or create a new safe tool to automate the missing step.

- Summarize the economics at day 90. Use a conservative cost model for speech, model tokens, and orchestration.
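
The forced-handoff thresholds from this sprint can be captured in a few lines. This is a sketch, not a product feature: `CallState` and `handoff_reason` are hypothetical names, the three-repeat limit comes from the example above, and the low-confidence limit of two is an assumption, since the text only says "repeated."

```python
from dataclasses import dataclass
from typing import Optional

MAX_REPEATS = 3          # from the example: more than three repeats forces a handoff
MAX_LOW_CONFIDENCE = 2   # assumed value; tune during weekly reviews


@dataclass
class CallState:
    repeats_of_same_question: int = 0
    low_confidence_retrievals: int = 0
    policy_conflict: bool = False


def handoff_reason(state: CallState) -> Optional[str]:
    """Return why the call must go to a human, or None to keep going."""
    if state.policy_conflict:
        return "policy conflict"
    if state.repeats_of_same_question > MAX_REPEATS:
        return "caller repeated the same question too often"
    if state.low_confidence_retrievals >= MAX_LOW_CONFIDENCE:
        return "repeated low-confidence retrieval"
    return None
```

Returning the reason as text means the same value can be logged and shown to the human who takes over.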

How to measure ROI without hand-waving

Start with a straightforward unit economic model.

- Cost per automated minute: estimate speech recognition and synthesis, model inference, and orchestration at an all-in cost between 2 and 6 cents per minute, depending on volume and provider. Include a buffer for spikes.

- Savings per contained call: use your baseline fully loaded cost per voice contact, commonly 4 to 10 dollars per call in North America for simple tasks, and higher for specialized work.

- Partial automation value: when the agent gathers data and summarizes before handoff, count the saved minutes. A two-minute reduction on a five-minute human call is meaningful at scale.

An example for a returns flow:

- 100,000 monthly calls on the chosen reason code.

- Pilot targets: 30 percent containment and two minutes saved on the 70 percent that escalate.

- Baselines: 6 dollars per human-handled call and five minutes average handle time.

- Costs: voice-agent runtime at 0.05 dollars per minute and an average of four minutes per call.

Outcome math per month:

- 30,000 contained calls save about 6 dollars each, which is 180,000 dollars in gross savings.

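- 70,000 assisted calls save two minutes each, valued at about 1 dollar per minute (6 dollars over five minutes is 1.20 dollars, rounded down to stay conservative), which is about 2 dollars per call and 140,000 dollars in total.

- Runtime costs are about 20,000 dollars: 100,000 calls at four minutes each and 0.05 dollars per minute.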

- Net monthly impact is roughly 300,000 dollars (180,000 plus 140,000, minus 20,000 in runtime). Even if containment lands at 20 percent and time saved is one minute, the same arithmetic still yields about 180,000 dollars a month, comfortably in six figures. Count only the flows you actually piloted and compare against the concurrent control group.
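
The outcome math above is easy to keep honest in a small function so you can rerun it with your own baselines. This is a sketch: the function and parameter names are hypothetical, and `value_per_saved_minute` defaults to 1 dollar to match the article's rounding (prorating 6 dollars over five minutes would give 1.20 dollars and a larger figure).

```python
def monthly_net_impact(
    calls,
    containment,
    minutes_saved,
    cost_per_human_call=6.0,
    value_per_saved_minute=1.0,   # assumed conservative rounding of 6 / 5
    runtime_cost_per_minute=0.05,
    agent_minutes_per_call=4.0,
):
    """Return gross savings, runtime cost, and net impact in dollars per month."""
    contained = calls * containment
    assisted = calls - contained
    contained_savings = contained * cost_per_human_call
    assisted_savings = assisted * minutes_saved * value_per_saved_minute
    # Runtime is charged on every call, contained or escalated.
    runtime = calls * agent_minutes_per_call * runtime_cost_per_minute
    return {
        "contained_savings": contained_savings,
        "assisted_savings": assisted_savings,
        "runtime": runtime,
        "net": contained_savings + assisted_savings - runtime,
    }


base = monthly_net_impact(calls=100_000, containment=0.30, minutes_saved=2)
downside = monthly_net_impact(calls=100_000, containment=0.20, minutes_saved=1)
print(round(base["net"]), round(downside["net"]))
```

Running both scenarios side by side shows how sensitive the result is to containment: the downside case lands near 180,000 dollars against roughly 300,000 dollars in the base case.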

For comparisons across platforms and deployment patterns, review how the Agents Marketplace makes AI deployable to plan for scale-out after the pilot.

Safety, compliance, and the human handoff

- Transparent actions: the agent narrates steps in plain language so callers understand what is happening. This builds trust and simplifies human takeover.

- Strong escalation: design a warm transfer with a short summary for the human agent and an explicit announcement to the caller that a person is now on the line. Avoid dead air: the human should greet the caller within three seconds of joining.

- Logged decisions: capture who did what and why, including model prompts, retrieved documents with versions, tool parameters, and policy checks. Lock the audit record to the case.

- Data discipline: redact sensitive numbers in real time and restrict tools so the agent sees only what policy allows. Avoid blanket permissions for free-form updates.

- Continuous evaluation: run a daily review of a stratified random sample of calls, including the longest and the lowest-confidence ones. Quantify error types and fix the root cause, not just the symptom.
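
For the data discipline point, a transcript redaction pass for payment card numbers could look like this. It is a simplified sketch: the function names and the `[REDACTED CARD]` marker are hypothetical, it covers only card-like digit runs, and it is no substitute for a vetted compliance tool. The Luhn checksum is used to avoid masking order numbers that merely look long.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:      # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def redact_cards(text: str) -> str:
    """Mask digit runs that pass the Luhn check; leave everything else alone."""
    def _mask(match):
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()
    return CARD_CANDIDATE.sub(_mask, text)
```

Applying the pass to each transcript segment before it reaches the model or the audit log keeps the sensitive number out of both.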

Team and operating model

- A product owner for the pilot reason code who is accountable for outcomes.

- A conversation designer who writes prompts, guardrails, and clarifying questions.

- A platform engineer who integrates tools and builds the audit pipeline.

- A quality lead who scores calls, tracks errors, and drives remediation.

- A contact center supervisor who owns escalation rules and staffing.

- Legal and Security reviewers who sign off on policy and retention.

Keep the team small, then scale only when the first pilot shows durable value. Avoid the temptation to chase ten flows at once.

What to watch during Dreamforce week

Dreamforce runs October 14-16, 2025 at Moscone Center in San Francisco, with keynotes and sessions streaming on Salesforce+. As announcements arrive, focus on three practical questions:

- Voice-native availability and supported telephony providers. This determines how quickly you can plug in without touching queueing and routing.

- Hybrid reasoning controls. Look for policy bundles you can edit without code and a visible loop that shows retrieve, reason, act, and reflect. The easier it is to supervise, the safer and faster you can deploy.

- Supervisor tools and analytics. You want an interaction explorer that shows transcripts, tools, sentiment, and exceptions, with export options for compliance review and business intelligence.

Bottom line

If you already use Salesforce for service, this is the moment to pilot one voice flow with hybrid reasoning and full auditability. Pick a narrow scope with clear value, install strong guardrails, and measure relentlessly. Sixty days is enough to stand up a safe pilot, and ninety days is enough to know if it bends your cost curve and improves customer satisfaction. Dreamforce 2025 offers the perfect launch window, and Q1 2026 offers the perfect report card.