Tinker’s Debut Signals Fine-Tuning’s Mainstream Moment

Tinker gives small teams lab-grade control of training loops on open-weight models while renting the heavy infrastructure. Here is why that shift matters, what to build first, how to evaluate safely, and a 90-day playbook.

Breaking: a lab-grade fine-tuning rig you can rent by the loop

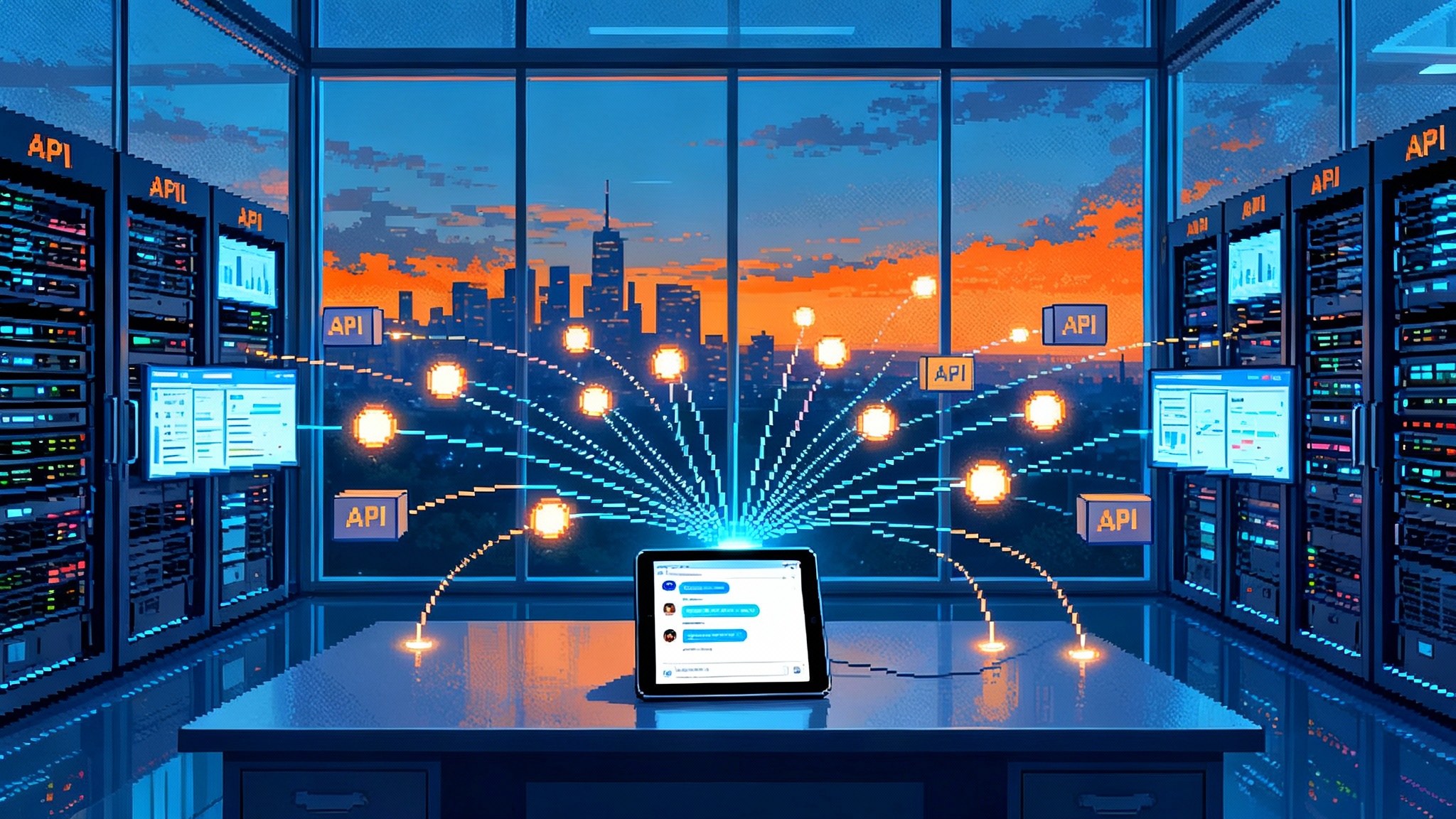

On October 1, 2025, Thinking Machines Lab introduced Tinker, a self-serve training and fine-tuning API built for open-weight models. The promise is simple and bold. You write the training loop you want, Tinker handles the distributed hardware and orchestration, and you keep control of your data, loss functions, and evaluation strategy. In practice, it turns what used to be a months-long infrastructure project into a week-one capability for a small team. See the official Tinker announcement post.

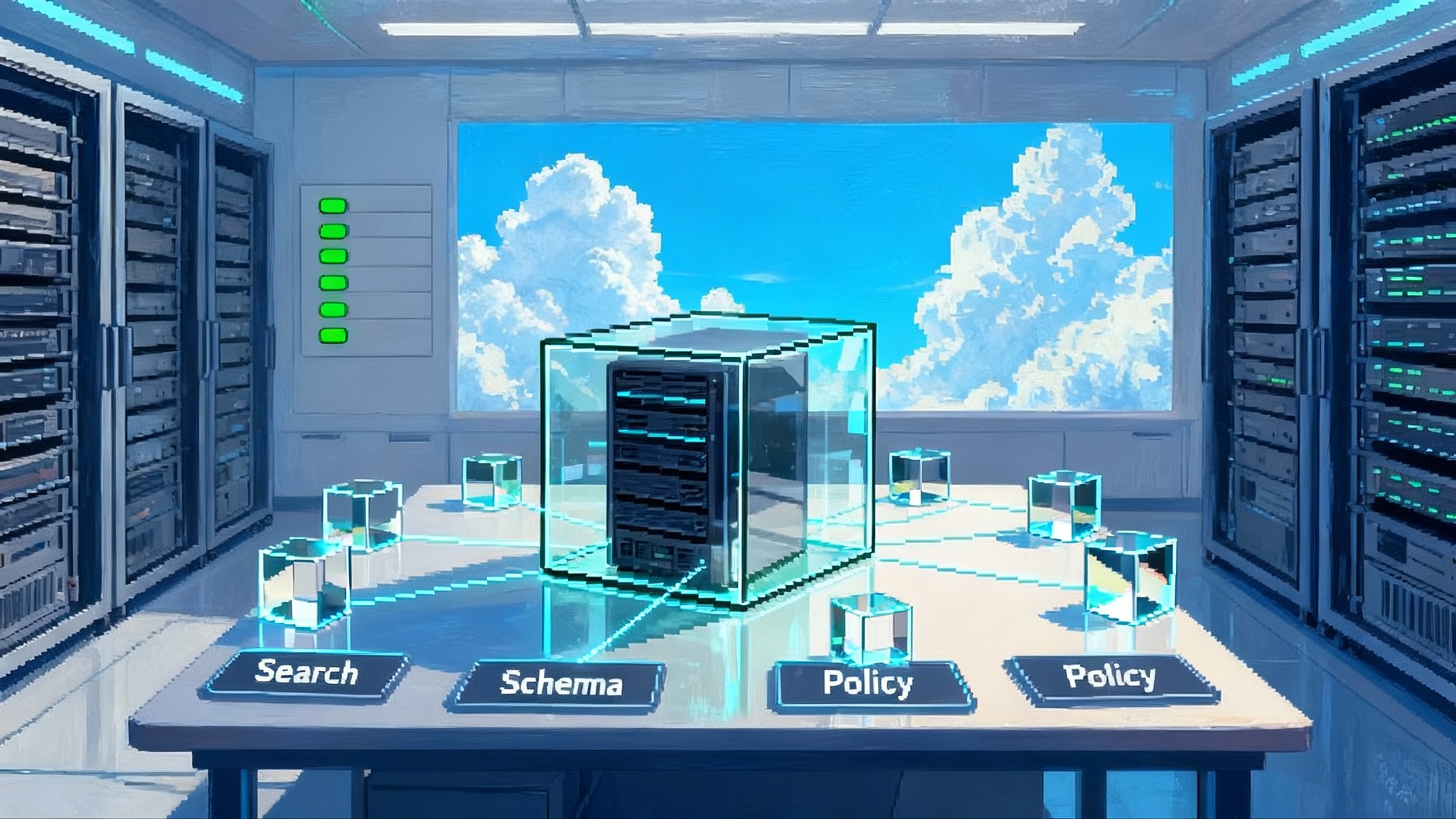

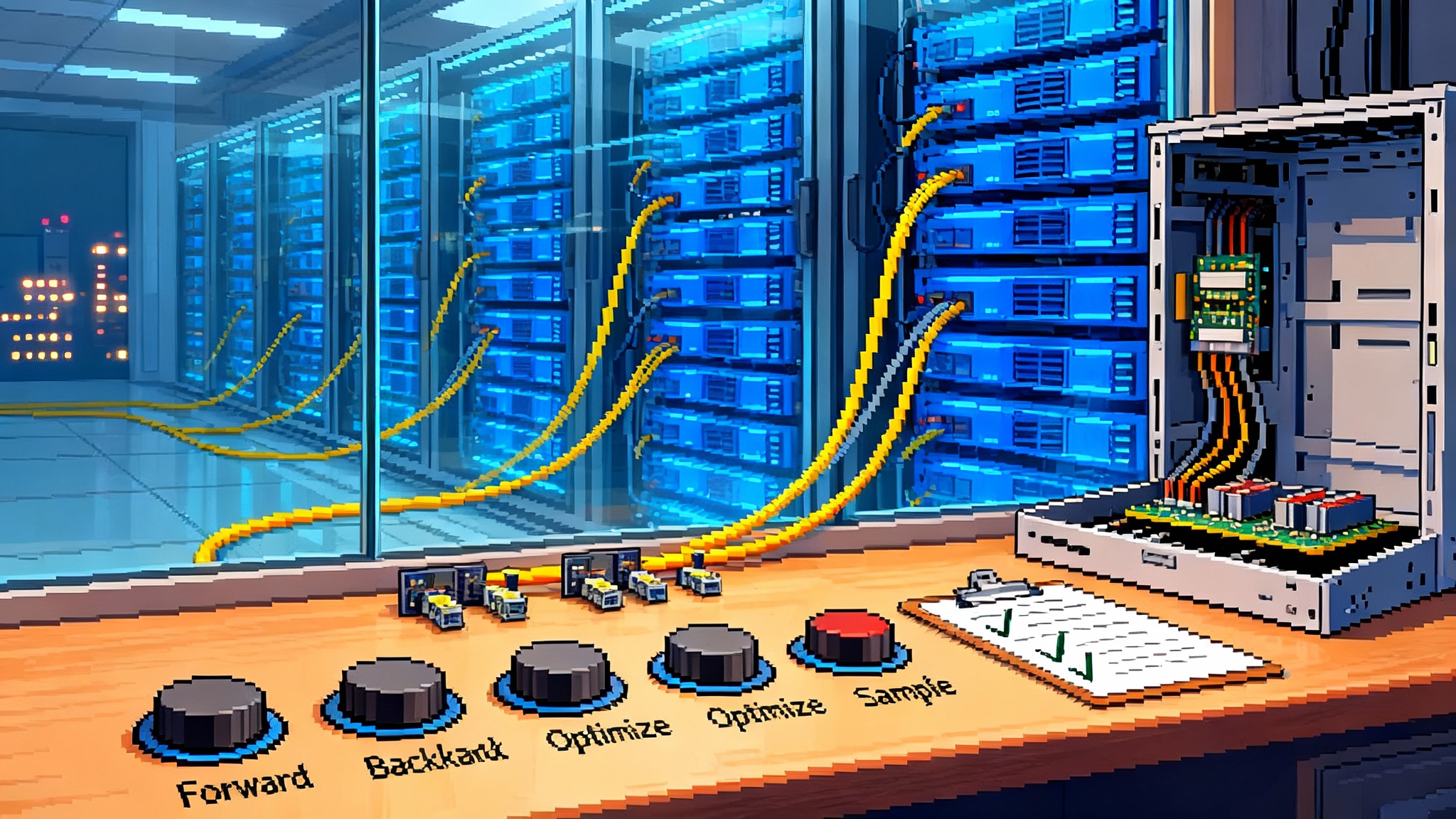

Tinker exposes a set of low-level primitives that mirror the way researchers really work: forward and backward passes to accumulate gradients, an explicit optimizer step, a sampling call for evaluation or reinforcement learning rollouts, and checkpointing for state. With those building blocks, teams can implement supervised fine-tuning, preference-based methods, or reinforcement learning without building a bespoke cluster or rewriting their stack for every model family. Tinker centers on low-rank adaptation, which means you can train compact adapters instead of touching every weight in the base model, and it supports open-weight families like Llama and Qwen up to very large mixture-of-experts variants. Explore the Tinker API documentation.

Early users range from academic groups at Princeton and Stanford to research collectives at Berkeley and Redwood Research, which hints at the target persona: people who care about knobs and metrics as much as throughput. Private beta status, a waitlist, and usage-based pricing staged after an initial free period round out a launch that feels intentionally practical.

Why this is a breakthrough, not a feature

For years, only well-funded labs could easily run the full supervised and reinforcement learning pipeline on top of competitive base models. The pain was never just the number of graphics processing units (GPUs). It was the software: distributed training frameworks, failure recovery, reproducibility, logging, and the overhead of proving that a change in a loss function actually produced a robust improvement.

Tinker collapses that overhead into a narrow surface area while leaving the algorithmic decisions in your hands. You plan the training logic. You keep your dataset under your governance. You bring your own evaluators. Tinker is the scaffolding around your loop rather than a black box that asks for a comma-separated file and outputs a mystery model.

That division of responsibilities is the critical design choice. High-level automation can be fast but brittle, especially when a team needs to diagnose why a reward model is drifting or why a particular safety filter is over-blocking niche but valid inputs. Low-level control with managed infrastructure is a viable middle path because it shifts the operational burden without removing the handles that serious practitioners need.

From closed labs only to seed-stage friendly

The distance between a leading research lab and a startup used to be counted in clusters and staff. With a handful of careful abstractions, that gap narrows. Consider the four-function interface: a forward-and-backward pass, an optimizer step, sampling, and checkpointing. It is simple enough for a two-person team to learn in a day, yet it composes into the same ingredients used by larger labs: supervised fine-tuning on curated instruction pairs, preference optimization with pairwise judgments, and final policy refinement with reinforcement learning from human or programmatic feedback.

Because Tinker centers on open-weight models, the artifacts you produce are portable. You can download adapter checkpoints, move them to your own inference stack, and keep serving options open. That reduces platform lock-in and helps legal teams reason about data boundaries and intellectual property. In other words, you can live with one foot in a managed service and one foot in your own production environment, which is where many enterprises want to be.

For readers following the broader agentic trend, this mirrors how vertical agents are moving from slides to systems. Our deep dive on how vertical AI agents rewrite ops shows why portability and evaluation discipline are winning patterns across sectors.

The plug-and-play pipeline, explained in plain language

Think of fine-tuning like teaching a hired expert your company’s playbook.

- Supervised fine-tuning is the classroom. You show before-and-after examples and the model learns to imitate the target responses.

- Preference optimization is the grading rubric. You collect pairwise comparisons or scores that tell the model which outputs are better and why.

- Reinforcement learning is the internship. The model tries tasks, gets feedback or rewards, and adjusts policy weights based on experience.
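To make the grading-rubric step a little more concrete, here is a minimal sketch of one common way to turn a pairwise comparison into a training signal: a Bradley-Terry style logistic loss. This is an illustrative choice, not a method the article attributes to Tinker or its users. The function name and the idea of scalar "scores" for each response are assumptions made for the example.

```python
import math


def pairwise_preference_loss(score_chosen, score_rejected):
    """Bradley-Terry style loss: -log(sigmoid(score_chosen - score_rejected)).

    The loss is small when the preferred response already scores higher,
    and grows as the rejected response overtakes it.
    """
    margin = score_chosen - score_rejected
    # Numerically stable softplus(-margin) = log(1 + exp(-margin)).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def mean_preference_loss(pairs):
    """Average the loss over (score_chosen, score_rejected) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)
```

With equal scores the loss is log 2, which is the "no preference learned yet" baseline. It falls toward zero as the model separates preferred outputs from rejected ones.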

Tinker’s primitives correspond to real steps in each phase. The forward and backward pass is how you compute and store the learning signal. The optimizer step applies it. Sampling lets you test the model mid-flight, collect preferences, or run rollouts in a simulated environment. Save and load functions give you the safety net that most small teams skip at their peril. Because the base models are open weight, you can switch from an 8 billion parameter dense transformer to a 70 billion parameter one by changing a string in code, then rerun the same loop to validate scale effects.
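As a rough illustration of how those primitives compose, the sketch below runs a complete loop against a toy in-memory stand-in. `ToyTrainer` and its method names (`forward_backward`, `optim_step`, `sample`, `save_state`, `load_state`) are hypothetical placeholders chosen to echo the description above. They are not Tinker's actual client API, and the "model" is a single-weight linear regressor so the example runs anywhere.

```python
class ToyTrainer:
    """Hypothetical stand-in for a managed training client (not Tinker's real API)."""

    def __init__(self):
        self.weight = 0.0      # the single trainable parameter
        self._grad_sum = 0.0   # gradient accumulated since the last optimizer step
        self._examples = 0     # number of examples contributing to _grad_sum

    def forward_backward(self, batch):
        """Compute mean squared error on (x, y) pairs and accumulate gradients."""
        loss = 0.0
        for x, y in batch:
            err = self.weight * x - y
            loss += err * err
            self._grad_sum += 2 * err * x
            self._examples += 1
        return loss / len(batch)

    def optim_step(self, lr):
        """Apply the averaged accumulated gradient, then clear the accumulator."""
        if self._examples:
            self.weight -= lr * self._grad_sum / self._examples
        self._grad_sum, self._examples = 0.0, 0

    def sample(self, x):
        """Run the current model on one input (stands in for text sampling)."""
        return self.weight * x

    def save_state(self):
        return {"weight": self.weight}

    def load_state(self, state):
        self.weight = state["weight"]


def run_loop(trainer, train_batches, heldout, lr=0.05, epochs=30):
    """Train, evaluate by sampling after each epoch, and restore the best checkpoint."""
    best_err, best_state = float("inf"), trainer.save_state()
    for _ in range(epochs):
        for batch in train_batches:
            trainer.forward_backward(batch)
            trainer.optim_step(lr)
        err = sum(abs(trainer.sample(x) - y) for x, y in heldout) / len(heldout)
        if err < best_err:
            best_err, best_state = err, trainer.save_state()
    trainer.load_state(best_state)
    return best_err


trainer = ToyTrainer()
batches = [[(1, 3), (2, 6)], [(3, 9), (4, 12)]]  # toy data following y = 3x
final_err = run_loop(trainer, batches, heldout=[(5, 15)])
print(round(trainer.weight, 3), final_err < 1e-6)
```

The shape is the point, not the model: gradients accumulate in one call, the update is a separate explicit call, and evaluation plus checkpointing sit inside the loop where you can see and change them.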

What you can build now, with examples

-

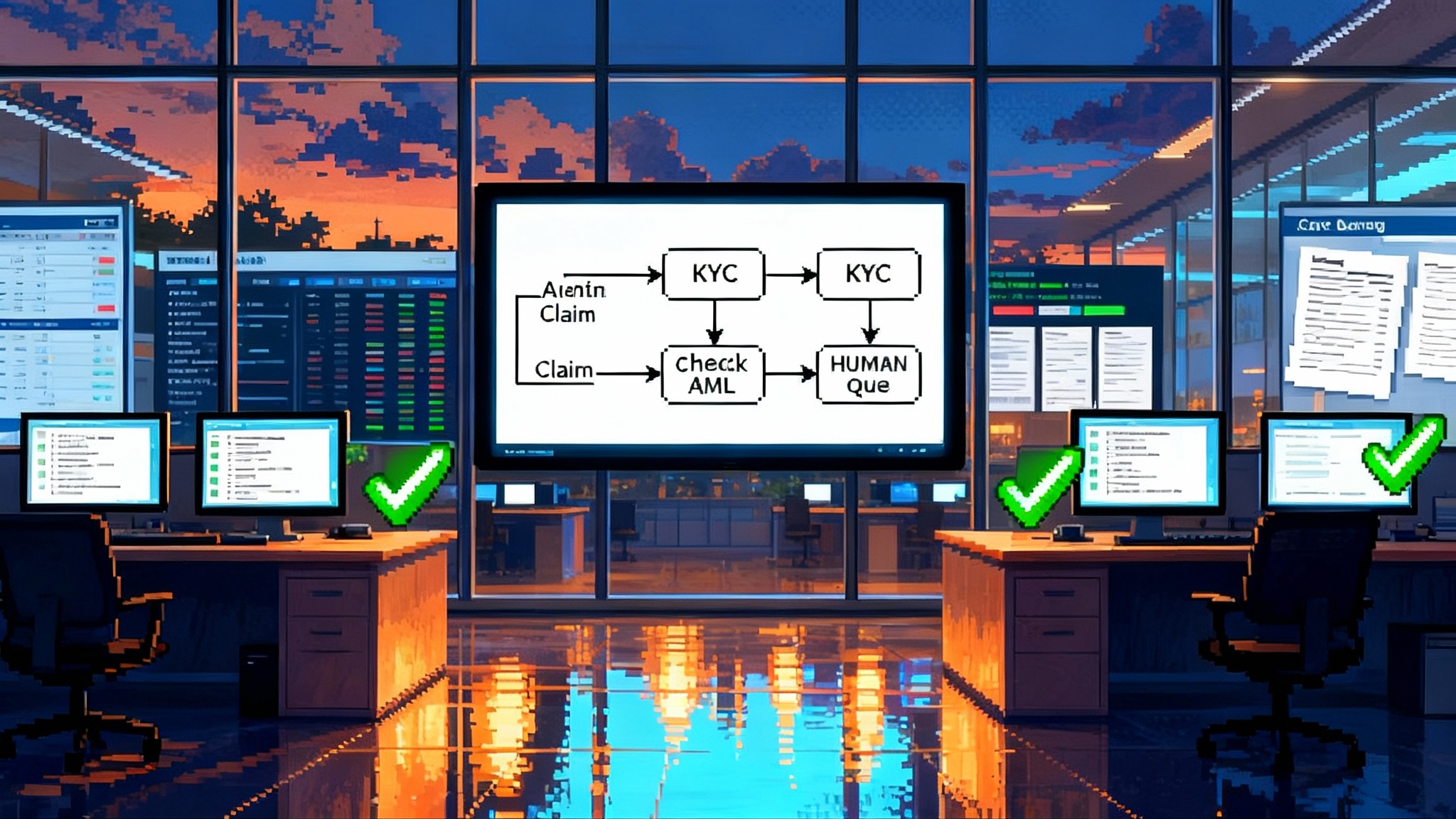

A claims analyst agent for an insurer that ingests policy language, extracts facts from incident descriptions, and drafts adjudication rationales. Start with supervised fine-tuning on a few thousand labeled claims, then add preference data from your best adjusters to capture tone, caution, and regulatory language. Add reinforcement learning to discourage shortcuts that skip required checks.

- A bond desk analyst that summarizes covenants, flags risk factors, and maps issuer-specific terminology to standardized tags. Use supervised fine-tuning on historical memos, then instruct the model to always attach three supporting quotes from the prospectus, which you validate with a retrieval-based evaluator.

- A clinical documentation aide that structures patient notes and suggests clarifying questions in specialty clinics. Keep data on-premise or in a private enclave, train adapters rather than full weights, and export the adapter for local inference to keep protected health information within your trust boundary.

In each case, you do not need to own the largest cluster to experiment. You need a clear training loop, a careful evaluation plan, and an operations path to move the artifacts into production. Tinker provides the rails, not the script.

Why this lowers cost in practice

Low-rank adaptation changes the unit economics. Instead of updating every parameter of a massive base model, you train small adapter matrices. That reduces memory pressure, shortens iteration cycles, and lets multiple experiments share the same underlying base model replicas. The effect is practical rather than theoretical. You spend fewer dollars to test more ideas per week, and you can reserve full fine-tuning for the rare case where analysis shows a measurable ceiling you cannot cross with adapters.
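The arithmetic behind that claim is easy to check. For a single weight matrix of shape `d_out x d_in`, a rank-`r` adapter adds two small matrices with `r * (d_out + d_in)` trainable parameters in total. The dimensions and rank below are illustrative assumptions, not the configuration of any particular model or of Tinker's defaults.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full, adapter, ratio) trainable-parameter counts for one weight matrix.

    full    = parameters updated by full fine-tuning of the matrix
    adapter = parameters in the two low-rank factors (d_out x rank and rank x d_in)
    """
    full = d_out * d_in
    adapter = rank * (d_out + d_in)
    return full, adapter, adapter / full


full, adapter, ratio = lora_param_counts(4096, 4096, 16)
print(f"full={full:,} adapter={adapter:,} ratio={ratio:.2%}")
# prints: full=16,777,216 adapter=131,072 ratio=0.78%
```

In this example the adapter trains under one percent of the parameters in the matrix it modifies, which is where the memory and iteration-speed savings come from.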

Because the service supports a span of sizes from compact dense models to very large mixture-of-experts models, you can right-size a project from day one and postpone scale until your evaluation metrics justify it. Treat model size as a dial, not a decision. Prototype the behavior on a small model to find your best curriculum and loss schedule, then graduate to a larger model only when gains plateau.
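One way to make "when gains plateau" an explicit rule rather than a judgment call is a small check over your evaluation history. The window size and minimum gain below are hypothetical defaults. Pick values that match how noisy your own metric is.

```python
def has_plateaued(scores, window=3, min_gain=0.005):
    """Return True when recent evaluations stopped improving meaningfully.

    Compares the best score in the last `window` evaluations against the best
    score seen before them. Higher scores are assumed to be better.
    """
    if len(scores) <= window:
        return False  # not enough history to judge
    earlier_best = max(scores[:-window])
    recent_best = max(scores[-window:])
    return recent_best - earlier_best < min_gain


small_model_scores = [0.60, 0.70, 0.75, 0.752, 0.751, 0.753]
if has_plateaued(small_model_scores):
    print("Gains have flattened: consider promoting to a larger model.")
```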

The 2026 wave: private, vertical agents become the default

The launch is well timed. In 2026, expect a noticeable shift from one-size-fits-all assistants to private, domain-specialized models. Legaltech firms will tune models on style-locked clause libraries and regulator guidance. Health systems will train specialty note-taking and coding agents that respect local workflows rather than generic templates. Financial services teams will build reasoning-heavy models for reconciliation, counterparty risk, and internal policy enforcement.

The common thread is not novelty. It is accessibility. Most teams already have labeled data in issue trackers, RFP archives, or electronic health record systems. What they lacked was an affordable way to iterate on training loops, attach evaluators that reflect in-house standards, and ship controlled changes with audit trails. Managed training that lets you bring your own data and algorithms while renting the distributed smarts of the cluster closes that gap.

We are seeing similar momentum in orchestration and agent teamwork. If that is your interest, read how RUNSTACK orchestrates chat-built AI teams. For data-heavy organizations, pairing a portable adapter strategy with safe data parallelism complements efforts like Agentic Postgres for parallelism.

Three friction points to watch closely

- Evaluations that actually predict deployment behavior. The easy part is collecting exact-match metrics on supervised test sets. The hard part is building robust suites that combine adversarial prompts, tool-use scenarios, and long-context reasoning. Treat evaluations like unit tests for behavior. For supervised fine-tuning, reserve a cold-start slice that mirrors production prompts. For reinforcement learning, define episode types and reward traces that represent real variance, then run weekly drift checks. Do not trust a single score. Track goal-conditioned returns, safety violations per thousand interactions, refusal rates by category, and hallucinated citations per one hundred outputs. The teams that win here will maintain a living evaluation registry the same way they maintain continuous integration pipelines.

- Safety gating that balances recall and precision. Post-training often improves helpfulness while raising the risk of boundary crossings. Set up a multi-layer gate: a lightweight prompt-level classifier for fast rejects, a rules engine for policy-obvious cases, and a slower semantic filter for edge situations. Require positive evidence in high-risk flows, such as a retrieved source excerpt that supports a medical recommendation. Use allowlists for privileged tools like datastore writes, and attach rate limits and audit logs to anything that can trigger spend or send data off the network. Build reward functions that penalize unsafe wins so the model learns that high-risk shortcuts are costly, not clever.

- Graphics processing unit arbitrage as a first-class concern. Training costs are no longer fixed, even in managed services. Price and throughput move with cluster load, region, and the mix of dense versus mixture-of-experts jobs. Design your plan to shift model size with the stage of learning rather than locking into a single scale. Prototype behavior on a compact model to find your best curriculum and loss schedule, then scale up for the final few percentage points of accuracy only if your evaluations demand it. Export adapters and keep inference portable so you can choose serving vendors based on latency, geography, and compliance, not just convenience. Managed tools help, but your architecture should assume you will shop for capacity regularly.
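The multi-layer safety gate described in the list above can be sketched as a short chain of checks that runs from cheapest to slowest and stops at the first rejection. Everything here is a hypothetical placeholder: the blocked phrases, the tool allowlist, and the stub functions stand in for a real classifier, rules engine, and semantic filter.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_TOOLS = {"search", "read_record"}                      # hypothetical allowlist
BLOCKED_PHRASES = ("steal credentials", "disable audit log")   # hypothetical phrases


@dataclass
class Request:
    prompt: str
    tool: Optional[str] = None       # privileged tool the model wants to call
    high_risk: bool = False          # e.g. a medical recommendation flow
    evidence: Optional[str] = None   # retrieved source excerpt, if any


@dataclass
class Verdict:
    allowed: bool
    layer: str
    reason: str


def fast_classifier(req):
    """Layer 1: cheap phrase screen standing in for a lightweight classifier."""
    text = req.prompt.lower()
    if any(phrase in text for phrase in BLOCKED_PHRASES):
        return "prompt matched a blocked phrase"
    return None


def rules_engine(req):
    """Layer 2: policy-obvious rules, here a tool allowlist."""
    if req.tool is not None and req.tool not in ALLOWED_TOOLS:
        return f"tool '{req.tool}' is not on the allowlist"
    return None


def semantic_filter(req):
    """Layer 3: slower check; here it only demands evidence in high-risk flows."""
    if req.high_risk and not (req.evidence and req.evidence.strip()):
        return "high-risk request lacks a supporting source excerpt"
    return None


LAYERS = (("classifier", fast_classifier), ("rules", rules_engine), ("semantic", semantic_filter))


def gate(req):
    """Run layers from cheapest to slowest and stop at the first rejection."""
    for name, check in LAYERS:
        reason = check(req)
        if reason is not None:
            return Verdict(False, name, reason)
    return Verdict(True, "all", "passed every layer")
```

Returning the layer name with each verdict keeps the audit trail useful: when the gate over-blocks valid inputs, you can see which layer to tune.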

A concrete playbook for the next 90 days

- Days 1 to 30: Scope the target tasks and write a crisp definition of success. Choose an open-weight base model that fits your privacy, latency, and cost constraints. Build a minimal supervised fine-tuning dataset that reflects your domain, then write the smallest training loop that can learn it. Establish baseline evaluations that include both simple accuracy and behavior metrics, for example refusal rate, self-consistency on multi-step reasoning, and tool-use alignment.

- Days 31 to 60: Expand data coverage and add preference optimization. Collect pairwise comparisons or ratings from your senior operators, and codify the common reasons for rejections. Introduce reinforcement learning if your task involves long-horizon decisions or tool calls, and wire up episode-level rewards that match business goals. Start safety gating now, not at the end. Track regressions daily with an automated suite and immutable checkpoints.

- Days 61 to 90: Pressure-test at scale. Run load tests with production prompts, adversarial synthetic prompts, and long-context scenarios. Compare cost and throughput across at least two model sizes. Decide where portability matters and export adapters so you can serve on your preferred stack. Prepare a rollback plan that includes the last good checkpoint, a known-good gate configuration, and a playbook for restoring the previous behavior if a rollout misfires.
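The baseline evaluations and daily regression tracking in this playbook can start very small. The sketch below computes a few behavior metrics from logged interactions and flags any that got worse than a stored baseline. The record fields and metric names are assumptions made for illustration, and lower is treated as better for every scalar metric.

```python
from collections import defaultdict


def summarize(records):
    """Aggregate behavior metrics from a list of logged interaction records."""
    n = len(records)
    if n == 0:
        raise ValueError("no records to summarize")
    violations = sum(1 for r in records if r["safety_violation"])
    bad_citations = sum(r["hallucinated_citations"] for r in records)
    per_category = defaultdict(lambda: [0, 0])  # category -> [refusals, total]
    for r in records:
        per_category[r["category"]][0] += int(r["refused"])
        per_category[r["category"]][1] += 1
    return {
        "safety_violations_per_1000": 1000 * violations / n,
        "hallucinated_citations_per_100": 100 * bad_citations / n,
        "refusal_rate_by_category": {
            cat: refused / total for cat, (refused, total) in per_category.items()
        },
    }


def regressions(baseline, current, tolerance=0.0):
    """Names of scalar metrics that worsened by more than `tolerance`."""
    return [
        name
        for name, value in current.items()
        if isinstance(value, (int, float)) and value > baseline.get(name, value) + tolerance
    ]


records = [
    {"category": "billing", "refused": False, "safety_violation": False, "hallucinated_citations": 0},
    {"category": "billing", "refused": True, "safety_violation": False, "hallucinated_citations": 0},
    {"category": "medical", "refused": False, "safety_violation": True, "hallucinated_citations": 2},
    {"category": "medical", "refused": False, "safety_violation": False, "hallucinated_citations": 0},
]
summary = summarize(records)
baseline = {"safety_violations_per_1000": 100.0, "hallucinated_citations_per_100": 50.0}
print(regressions(baseline, summary))
```

Running this against each new checkpoint gives you a pass or fail signal you can wire into the same automation that runs your unit tests.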

Implementation notes for small teams

- Keep the code surface tiny. Encapsulate your training loop in a single module with clear hooks for dataset readers, loss calculators, and evaluators. Small surfaces are easier to audit and swap.

- Separate roles for data and algorithms. Make it explicit who curates data and who edits the loop. This reduces accidental leakage and simplifies access reviews.

- Standardize on one logging vocabulary. Whether it is accuracy, AUC, or custom refusal rate metrics, choose names early and stick to them so dashboards remain interpretable months later.

- Treat checkpoints like releases. Tag, sign, and store them immutably. Include the loop version, data snapshot, and evaluator versions in the manifest.

- Budget GPU time like growth capital. Allocate fixed weekly budgets per experiment, then require a promotion review to scale from small models to larger ones.
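For the "treat checkpoints like releases" note, a manifest can be as simple as a dictionary with content digests. The sketch below is a minimal illustration using only the Python standard library. The field names are hypothetical, and the SHA-256 digests provide tamper detection, not a cryptographic signature. Real signing would need a key-management choice that is out of scope here.

```python
import hashlib
import json


def _digest(obj):
    """SHA-256 of a canonical JSON encoding, so key order cannot change the hash."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(canonical).hexdigest()


def build_manifest(checkpoint_bytes, tag, loop_version, data_snapshot, evaluator_versions):
    """Describe a checkpoint release: what it is and what produced it."""
    manifest = {
        "tag": tag,
        "checkpoint_sha256": hashlib.sha256(checkpoint_bytes).hexdigest(),
        "loop_version": loop_version,
        "data_snapshot": data_snapshot,
        "evaluator_versions": dict(evaluator_versions),
    }
    manifest["manifest_sha256"] = _digest(manifest)
    return manifest


def verify_manifest(manifest, checkpoint_bytes):
    """Check that neither the manifest fields nor the checkpoint bytes changed."""
    body = {k: v for k, v in manifest.items() if k != "manifest_sha256"}
    return (
        _digest(body) == manifest["manifest_sha256"]
        and hashlib.sha256(checkpoint_bytes).hexdigest() == manifest["checkpoint_sha256"]
    )


manifest = build_manifest(
    b"adapter-weights",
    tag="release-1",
    loop_version="loop-v3",
    data_snapshot="snapshot-A",
    evaluator_versions={"safety_suite": "1.2", "accuracy_suite": "0.9"},
)
print(verify_manifest(manifest, b"adapter-weights"))
```

Storing the manifest next to the checkpoint in write-once storage gives a rollback plan something concrete to point at.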

What this means for enterprises and regulators

Enterprises now have a practical way to own what matters without owning everything. Data stays within a clear boundary. Training logic is reviewable and reproducible. Model artifacts are portable across providers. That makes it easier to meet internal policies on data retention, incident response, and vendor risk. It also gives compliance officers something tangible to audit, because the training loop and evaluation registry are human-readable.

Regulators will likely respond by asking for clearer disclosures about post-training. Expect requests for evaluation summaries tied to deployment context, documented safety gates, and reproducibility evidence for major runs. Teams that treat those asks as standard operating procedure rather than exceptions will move faster, not slower.

The bottom line

Tinker’s launch is a signal that fine-tuning is going mainstream. By shrinking the distance between a good idea and a credible training run, it turns open-weight models into platforms that any serious builder can bend to a specific job. The details that used to separate the biggest labs from everyone else, from distributed training to evaluation discipline, are becoming product surfaces that teams can rent, reason about, and improve.

If 2025 was the year open-weight bases matured, 2026 is set up to be the year those bases fragment into thousands of private, domain-specialized agents. The winners will not be the teams with the largest clusters. They will be the teams that learn the fastest with the cleanest loops, the sharpest evaluations, and the most portable artifacts. Tinker does not guarantee that outcome, but it makes it possible at startup speed.