Agent Bricks turns the data layer into the agent control plane

Databricks and OpenAI just put frontier models inside Agent Bricks. Here is how the data layer becomes the control plane for enterprise agents, what MLflow 3.0 adds to observability, and how leaders should act now.

Breaking: frontier models meet governed data where they live

On September 25, 2025, Databricks and OpenAI announced a multi‑year partnership that makes OpenAI’s frontier models, including GPT‑5, natively available inside Databricks’ Agent Bricks. The point is simple but consequential. Moving the strongest models to the data platform that already governs identity, policy, lineage, and cost collapses the last mile between intelligence and the information it must act on. You can read the official details in the Databricks and OpenAI partnership press release.

That announcement does more than add another model endpoint. It accelerates a shift that has been underway for a year. The real control plane for enterprise agents is becoming the data layer itself, not the application shell that sits above it. If your rules, identities, feature definitions, and evaluations already live in the platform, then enforcement and observability should live there too.

Why the control plane is shifting to the data layer

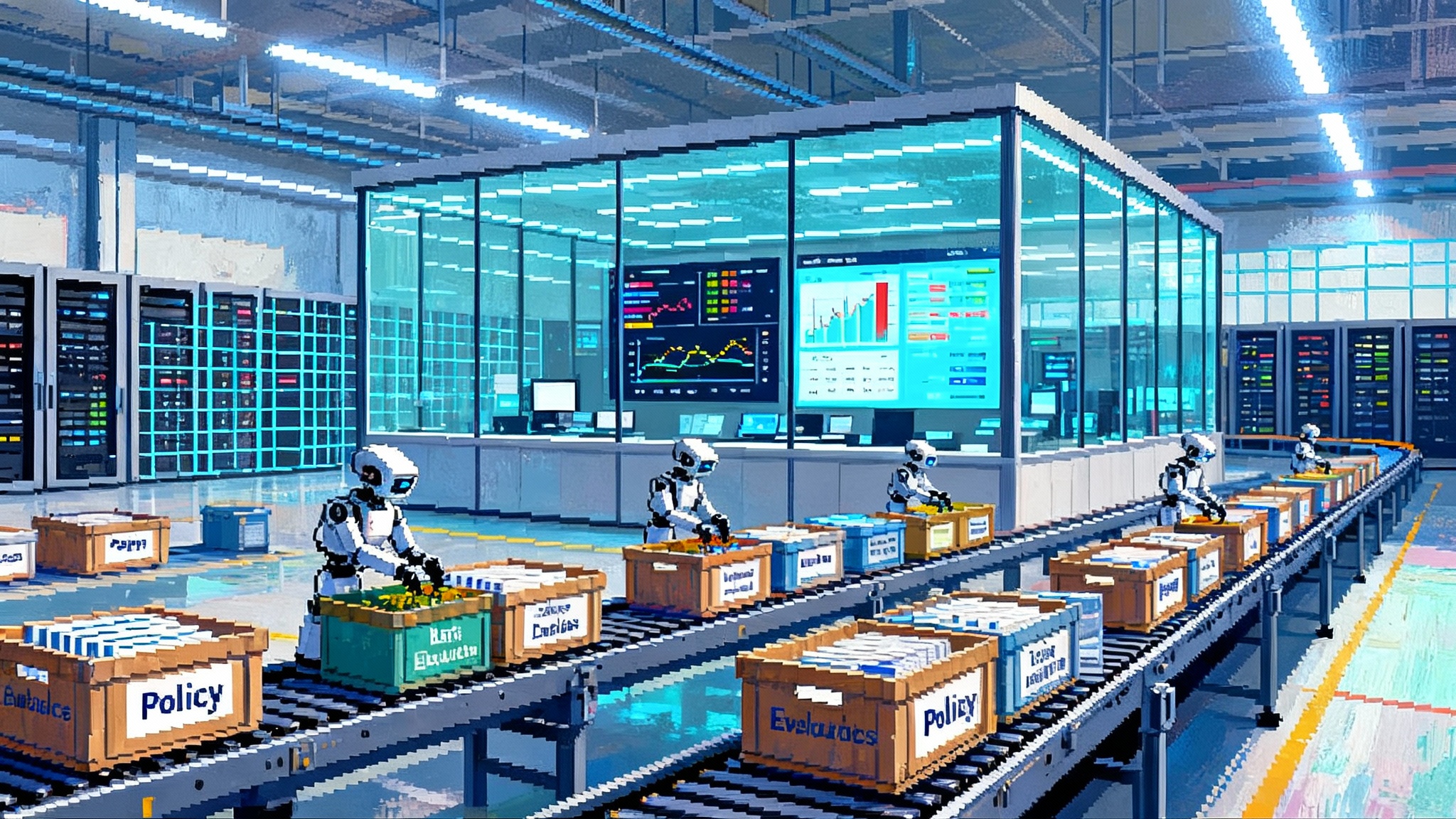

Every production agent needs five ingredients beyond a large model: policy, identity, context, memory, and feedback. Each belongs in the enterprise data layer because that is where durability, lineage, and access guarantees already exist.

- Policy lives with governance and lineage. When access controls, masking rules, and audit trails sit next to the data, the platform can enforce policy before an agent touches anything.

- Identity ties to directories, service principals, and catalog permissions. Who can call which tool against which table is not a question for a UI; it is a catalog decision.

- Context and memory are data artifacts. Documents, embeddings, features, and traces are produced, versioned, and consumed in the same place.

- Feedback and evaluation become datasets. Human ratings, LLM‑as‑a‑judge scores, adversarial tests, and regression suites belong alongside other data so they can be queried, joined, and reused.

When these ingredients live in the data platform, the platform naturally becomes the control plane. There is no need for an external orchestrator to mediate critical decisions. The platform already enforces policy, routes compute near the data, and records every action with lineage. That is where risk is reduced and where reliability is earned.
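To make "enforce policy before an agent touches anything" concrete, here is a minimal Python sketch of a grant check and column mask applied inside the data access path. The names `Grant`, `PolicyStore`, and `read_for_agent`, and the grant and mask data, are hypothetical stand‑ins for catalog objects, not a Databricks or Unity Catalog API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Grant:
    """Hypothetical catalog grant: a principal may use a tool on a table."""
    principal: str
    tool: str
    table: str


@dataclass
class PolicyStore:
    """Toy stand-in for catalog-held grants and column masks."""
    grants: set = field(default_factory=set)
    masks: dict = field(default_factory=dict)       # table -> columns to mask
    mask_exempt: set = field(default_factory=set)   # principals that see raw values

    def allowed(self, principal: str, tool: str, table: str) -> bool:
        return Grant(principal, tool, table) in self.grants


def read_for_agent(store, principal, tool, table, rows):
    """Check the grant, then mask columns, before the agent sees any row."""
    if not store.allowed(principal, tool, table):
        raise PermissionError(f"{principal} may not call {tool} on {table}")
    masked = set() if principal in store.mask_exempt else store.masks.get(table, set())
    return [
        {col: ("***" if col in masked else val) for col, val in row.items()}
        for row in rows
    ]


store = PolicyStore(
    grants={Grant("claims-agent", "lookup_policy", "claims")},
    masks={"claims": {"ssn"}},
)
rows = [{"claim_id": 17, "ssn": "123-45-6789", "amount": 2400}]
print(read_for_agent(store, "claims-agent", "lookup_policy", "claims", rows))
# [{'claim_id': 17, 'ssn': '***', 'amount': 2400}]
```

The design point is ordering: because the check and the mask run in the read path itself, no prompt or orchestration logic can skip them.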

What Agent Bricks actually does

Agent Bricks is Databricks’ opinionated path from a task description plus your data to a measurable agent. Under the hood it focuses on four enterprise‑grade behaviors that matter more than a flashy demo.

- It builds task‑aware benchmarks. Agent Bricks generates synthetic examples that reflect your domain and combines them with curated real data. Teams move from eyeballing chat transcripts to tracking metrics on datasets that represent real work.

- It tries strong model candidates and optimizes routes. With OpenAI’s frontier models available on the platform, model choice happens where your data, rules, and costs are visible. You can prefer a top model for critical cases and a budget route for routine tasks, all governed by the same catalog policies.

- It evaluates with judges and rules you can audit. Evaluations become first‑class data. Security officers and product owners can inspect the judge prompts, thresholds, and outcomes because they are catalog objects.

- It deploys on the platform. Agents execute close to data, feature stores, and real‑time signals such as streams or events. There is no shuttling of sensitive information across clouds just to make a decision.
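The routing behavior described above can be sketched as a small policy function: pick the cheapest route that clears the quality bar for the request's criticality. This is a hypothetical illustration, assuming each route has a cost estimate and a score on the team's own benchmark; the route names and numbers are placeholders, not Agent Bricks configuration or published pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str              # hypothetical route label, not a real endpoint name
    cost_per_call: float   # placeholder cost units
    eval_score: float      # score on the team's own governed benchmark, 0..1


def choose_route(routes, critical, min_score_critical=0.9, min_score_routine=0.75):
    """Return the cheapest route that meets the quality bar for this request.

    Critical requests get a higher bar, so they land on a stronger (usually
    pricier) model; routine requests fall through to the cheapest adequate one.
    """
    bar = min_score_critical if critical else min_score_routine
    eligible = [r for r in routes if r.eval_score >= bar]
    if not eligible:
        raise LookupError("no route meets the quality bar; escalate to a human")
    return min(eligible, key=lambda r: r.cost_per_call)


routes = [
    Route("frontier-route", cost_per_call=1.00, eval_score=0.94),
    Route("budget-route", cost_per_call=0.10, eval_score=0.81),
]
print(choose_route(routes, critical=True).name)   # frontier-route
print(choose_route(routes, critical=False).name)  # budget-route
```

Because the scores come from a stored benchmark rather than intuition, the routing decision is auditable: anyone can rerun the evaluation and see why a route was chosen.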

Consider two concrete examples. A claims triage agent reads incident reports, checks policy terms, requests missing documents, and escalates borderline cases. A quality agent in manufacturing correlates sensor anomalies, maintenance history, and supplier lots, then proposes corrective actions. In both cases, the agent is not “a chat box.” It is a data‑native system with measurable behavior that inherits governance from the catalog.

Observability grows up with MLflow 3.0

Traditional ML observability was built for static models with predictable inputs and outputs. Agents are different. They call tools, branch on rules, and evolve prompts. MLflow 3.0 on Databricks adds the kind of observability that generative applications and agent systems require: trace‑level logging across requests and tool calls, versioned evaluations across environments, and a consistent way to attach human and automated feedback to any agent version. The MLflow 3.0 on Databricks documentation covers these capabilities in more depth.
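For readers new to trace‑level logging, the stdlib‑only sketch below shows the shape of the data: one trace per request, with nested spans for each tool call, each carrying its parent, inputs, outcome, and timing. It is a hypothetical illustration of the concept, not MLflow's API; with MLflow itself you would use its tracing instrumentation (for example the `mlflow.trace` decorator) rather than hand‑rolled spans.

```python
import time
import uuid
from contextlib import contextmanager


class Tracer:
    """Toy tracer that records nested spans for one request (not MLflow's API)."""

    def __init__(self):
        self.trace_id = uuid.uuid4().hex
        self.spans = []    # finished spans, in completion order
        self._stack = []   # names of currently open spans

    @contextmanager
    def span(self, name, **inputs):
        parent = self._stack[-1] if self._stack else None
        self._stack.append(name)
        start = time.perf_counter()
        record = {"name": name, "parent": parent, "inputs": inputs, "status": "OK"}
        try:
            yield record
        except Exception as exc:
            record["status"] = f"ERROR: {exc}"
            raise
        finally:
            record["duration_ms"] = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(record)


tracer = Tracer()
with tracer.span("triage_request", claim_id=17) as root:
    with tracer.span("lookup_policy", policy_no="P-9") as step:
        step["outputs"] = {"covered": True}
    root["outputs"] = {"decision": "approve"}

for s in tracer.spans:
    print(s["name"], "parent:", s["parent"], s["status"])
# lookup_policy parent: triage_request OK
# triage_request parent: None OK
```

Because every span records its parent, inputs, and outcome, the same structure supports comparing traces across versions, alarming on failed steps, and attaching feedback to a specific tool call.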

In practice, this unlocks three day‑two operations that used to require hope and heroics.

- Transparent upgrades. When you swap a base model or change a prompt, MLflow captures before‑and‑after traces and scores. Product managers can prove a win before rolling out.

- Real‑time quality alarms. If a policy is breached or a score dips on a critical pathway, the streaming layer can trigger a rollback or route traffic to a safer fallback.

- Cross‑environment parity. The exact agent version and its evaluation live in Unity Catalog. A number in staging means the same thing in production because it points to the same evaluated artifact.
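The transparent‑upgrade idea reduces to a gate: score the current and candidate versions on the same benchmark, and promote only if the candidate wins overall without regressing on any critical slice. A minimal sketch, assuming per‑example scores in [0, 1] tagged with a slice label; the function names and thresholds are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def slice_means(results):
    """Average score per slice; results are (slice_label, score) pairs."""
    by_slice = defaultdict(list)
    for label, score in results:
        by_slice[label].append(score)
    return {label: mean(scores) for label, scores in by_slice.items()}


def should_promote(baseline, candidate, critical_slices, min_gain=0.01, max_drop=0.0):
    """Promote if the overall mean improves by at least min_gain and no critical
    slice drops by more than max_drop. Both runs must cover the same benchmark."""
    overall_gain = mean(s for _, s in candidate) - mean(s for _, s in baseline)
    base, cand = slice_means(baseline), slice_means(candidate)
    regressions = {
        sl: round(base[sl] - cand[sl], 3)
        for sl in critical_slices
        if base[sl] - cand[sl] > max_drop
    }
    return overall_gain >= min_gain and not regressions, regressions


baseline = [("routine", 0.80), ("routine", 0.82), ("fraud", 0.90), ("fraud", 0.88)]
candidate = [("routine", 0.90), ("routine", 0.92), ("fraud", 0.86), ("fraud", 0.84)]
print(should_promote(baseline, candidate, critical_slices=["fraud"]))
# (False, {'fraud': 0.04}): better on average, but the fraud slice regressed
```

Gating on slices rather than a single average is what keeps a "win" on routine traffic from hiding a loss on the pathway that matters most.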

The decisive difference: data‑layer suites versus app‑layer stacks

Today’s agent tooling splits into two families.

- App‑layer agent stacks. These emphasize developer ergonomics, orchestration graphs, multi‑agent loops, and plug‑in tools. They are fantastic for fast iteration and demos. Their control plane sits outside the data platform, which means security, cost, and quality checks must be wired back to the data plane through custom glue.

- Data‑layer agent suites. These emphasize governed evaluations, catalog‑native prompts and tools, feature lineage, and deployment close to data. They may feel slower at the prototype stage, then accelerate once reliability, security, and cost predictability matter.

Neither is always better. The dividing line is the unit of value. If the unit of value is a quick demo or a narrow function, app‑first stacks shine. If the unit of value is dependable digital labor that touches customer records and triggers real actions, the data‑layer control plane wins because the platform already knows who can see what, how it was derived, and which guardrails apply.

For a broader market view, compare how Google’s stack positions a similar concept (Vertex AI adds sandbox and A2A), how Cisco frames collaboration agents (Webex becomes a cross platform suite), and how the edge is evolving (Cloudflare Remote MCP at the edge). The common thread is that control and trust flow from where data and policy already live.

From prototypes to dependable digital labor

Enterprises do not lack agent demos. They lack agents they would trust to run without a human watching the console. Moving from a proof of concept to dependable labor requires a progression that the data layer is uniquely built to support.

- Define the job. Convert the business goal into a task contract that includes inputs, outputs, failure modes, and handoff rules. Store it as a catalog artifact that versions with the agent.

- Build a representative benchmark. Use domain documents, past tickets, and synthetic perturbations that create hard cases. Treat this benchmark as a living dataset with an owner and data quality checks.

- Establish guardrails first. Before a single user sees the agent, define approval rules, policy checks, rate limits, and escalation triggers. Enforce them with catalog policies and streaming alerts.

- Run shadow mode. Route real traffic to the agent while keeping humans in the loop. Collect traces, labels, and costs. When quality hits an agreed threshold, graduate the job and expand scope deliberately.

- Monitor with clear service levels. Tie quality and latency to service level targets. When thresholds are breached, automatically fall back to safer, more expensive routes, then triage via dashboards that show traces and costs side by side.
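The last step, automatic fallback on a service‑level breach, can be sketched as a rolling window over recent requests. This assumes each request reports a quality score and a latency; the window size, targets, and route names are hypothetical placeholders for whatever service levels a team agrees on.

```python
import math
from collections import deque


class SloMonitor:
    """Rolling-window check of quality and latency against service-level targets."""

    def __init__(self, window=50, min_quality=0.85, p95_latency_ms=2000,
                 primary="primary-route", fallback="safer-route"):
        self.samples = deque(maxlen=window)  # (quality, latency_ms) pairs
        self.min_quality = min_quality
        self.p95_latency_ms = p95_latency_ms
        self.primary, self.fallback = primary, fallback

    def record(self, quality, latency_ms):
        self.samples.append((quality, latency_ms))

    def breached(self):
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough evidence yet; keep the primary route
        qualities = [q for q, _ in self.samples]
        latencies = sorted(lat for _, lat in self.samples)
        # Nearest-rank 95th percentile.
        p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]
        avg_quality = sum(qualities) / len(qualities)
        return avg_quality < self.min_quality or p95 > self.p95_latency_ms

    def route(self):
        return self.fallback if self.breached() else self.primary


monitor = SloMonitor(window=20)
for _ in range(20):
    monitor.record(quality=0.92, latency_ms=800)
print(monitor.route())  # primary-route
for _ in range(10):
    monitor.record(quality=0.60, latency_ms=900)
print(monitor.route())  # safer-route: mean quality in the window fell to 0.76
```

Requiring a full window before acting trades a little reaction time for fewer false alarms on a handful of bad requests.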

This progression turns an impressive demo into a reliable coworker. It also builds a repeatable factory for future agents because the artifacts are versioned, governed, and reusable.
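The first step, a task contract stored as a versioned artifact, might look like the sketch below. The fields mirror the ones named in the progression (inputs, outputs, failure modes, handoff rules); the `TaskContract` class and the content‑hash version are a hypothetical way to tie an agent version to the exact contract it was evaluated against, not a Databricks feature.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TaskContract:
    job: str
    owner: str
    inputs: tuple
    outputs: tuple
    failure_modes: tuple
    handoff_rules: tuple

    def version(self) -> str:
        """Stable content hash: any change to the contract yields a new version."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode()).hexdigest()[:12]


triage = TaskContract(
    job="claims_triage",
    owner="claims-ops",
    inputs=("incident_report", "policy_terms"),
    outputs=("decision", "missing_documents"),
    failure_modes=("policy_not_found", "ambiguous_coverage"),
    handoff_rules=("escalate if claim amount exceeds adjuster limit",),
)
stricter = TaskContract(**{**asdict(triage), "handoff_rules": ("escalate all fraud flags",)})
print(triage.version() != stricter.version())  # True
```

Hashing a canonical serialization means two teams holding the same contract compute the same version, while any edit, even to a handoff rule, forces a new one.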

Real‑time signals make or break reliability

Agents that only read yesterday’s batch files cannot close the loop. The control plane needs both state and events. Databricks brings streaming pipelines, feature serving, and vector search onto the same platform that stores policies and evaluations. When a feature like last‑login timestamp is catalog‑owned, everyone computes it the same way. When a fraud signal spikes, a streaming rule can throttle an agent or require extra verification. Real‑time data is not a bolt‑on. It is the nervous system that turns a smart head into a responsive body.
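The fraud example can be sketched as a sliding‑window rule: count fraud signals in the last N seconds and, above a threshold, require extra verification or pause the agent. A minimal stdlib sketch with hypothetical thresholds, assuming timestamps arrive in order; in a real deployment the window would run in the streaming layer rather than in application memory.

```python
from collections import deque


class SpikeRule:
    """Sliding time window over fraud signals that decides how the agent may act."""

    def __init__(self, window_s=60.0, verify_at=5, block_at=20):
        self.window_s = window_s
        self.verify_at = verify_at  # signals in window that trigger extra verification
        self.block_at = block_at    # signals in window that pause the agent
        self.events = deque()       # signal timestamps in seconds, oldest first

    def observe(self, ts):
        self.events.append(ts)

    def decision(self, now):
        # Drop signals that have aged out of the window.
        while self.events and self.events[0] <= now - self.window_s:
            self.events.popleft()
        n = len(self.events)
        if n >= self.block_at:
            return "pause_agent"
        if n >= self.verify_at:
            return "require_verification"
        return "allow"


rule = SpikeRule()
for t in range(0, 50, 10):       # 5 signals at t = 0, 10, 20, 30, 40
    rule.observe(float(t))
print(rule.decision(now=45.0))   # require_verification
print(rule.decision(now=75.0))   # allow: only the signals at 20, 30, 40 remain
```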

A useful way to plan reliability is to map each critical decision to the signals it requires, the policies that constrain it, and the recovery plan if an upstream system degrades. When those elements are platform objects, you can build automated fallbacks that are both fast and compliant.
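The mapping exercise above can be captured as a small registry: one entry per critical decision listing its required signals, governing policies, and recovery plan, plus a check that selects the recovery action when a required signal is missing or stale. A hypothetical sketch; the decision, signal, and policy names and the staleness limits are illustrative.

```python
from dataclasses import dataclass


@dataclass
class DecisionPlan:
    decision: str
    signals: dict     # required signal name -> max acceptable age in seconds
    policies: tuple   # catalog policies that constrain the decision (kept for audit)
    recovery: str     # action to take when a required signal is missing or stale


def plan_action(plan, signal_ages, default="proceed"):
    """Return the recovery action if any required signal is missing or too old."""
    for name, max_age in plan.signals.items():
        age = signal_ages.get(name)
        if age is None or age > max_age:
            return plan.recovery
    return default


refund = DecisionPlan(
    decision="approve_refund",
    signals={"fraud_score": 30, "last_login": 3600},
    policies=("pii_masking", "refund_limit"),
    recovery="route_to_human_review",
)
print(plan_action(refund, {"fraud_score": 5, "last_login": 120}))    # proceed
print(plan_action(refund, {"fraud_score": 900, "last_login": 120}))  # route_to_human_review
```

Treating a missing signal the same as a stale one is the conservative choice: an upstream outage degrades to human review rather than to a silent guess.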

A pragmatic build versus buy checklist for CTOs

Use this checklist to decide where to assemble from components and where to adopt an opinionated suite such as Agent Bricks.

- Governance and risk

  - Question. Do you need column‑, row‑, and document‑level policies with full lineage for this agent?

  - Build if. You have a strong platform team that can integrate policy engines, catalogs, and audit logs across tools.

  - Buy if. You want catalog‑native policies and lineage on day one so roles, masking, and approvals are inherited from the data platform.

- Evaluation and safety

  - Question. Can you maintain task‑aware benchmarks, judge prompts, and human review workflows as versioned artifacts?

  - Build if. You operate a centralized evaluation team and have internal tools for test sets and scorecards.

  - Buy if. You need a turnkey evaluation layer that stores datasets, traces, and scores next to models and prompts.

- Data gravity and cost

  - Question. Will the agent touch sensitive or high‑volume data that is risky or expensive to move?

  - Build if. Data is small or non‑sensitive, and you can host orchestration near the application without violating policy.

  - Buy if. Data gravity favors the lakehouse and you want to avoid egress, duplication, and inconsistent caches.

- Real‑time and reliability

  - Question. Does the agent need low‑latency features, streaming events, and fine‑grained throttling to be safe and useful?

  - Build if. You own a robust eventing backbone and can stitch it to the agent orchestrator with rollbacks and safe fallbacks.

  - Buy if. You want streaming rules, alarms, and fallbacks woven into the same plane as your catalog and models.

- Extensibility and vendor strategy

  - Question. Will you routinely swap base models, add new tools, or support both cloud and on‑prem environments?

  - Build if. You prefer a best‑of‑breed stack and accept the integration tax to stay flexible.

  - Buy if. You want model and tool polymorphism handled by the platform, with a small, well‑understood surface for custom code.

- Operations and accountability

  - Question. Who owns quality, latency, and spend for each agent, and how will they prove improvements over time?

  - Build if. Your observability is mature and you can expose cross‑team dashboards without manual work.

  - Buy if. You want trace logs, scorecards, and cost reports attached to agent versions in the catalog.

When in doubt, start simple. Use the suite for policy, evaluation, and deployment. Build custom code where your differentiation lives, such as proprietary tools or prompts. You can always insource more over time as economics and expertise change.

What this means for agent marketplaces

Markets emerge when discovery, quality, and integration are standardized. Expect three shifts over the next year.

- Catalog‑native listings. Agent templates ship as catalog packages that include prompts, tools, benchmarks, and policies. Installation feels like installing a data package, not a web app.

- Data‑first trust signals. Listings advertise lineage, evaluation datasets, and allowed data scopes. Trust is based on governed behavior, not just an average score on a public benchmark.

- Vertical bundles. Industries such as insurance, manufacturing, and retail will see bundles that combine a small set of tools, a schema contract, and an evaluation battery aligned to regulation or service levels.
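One way to picture a catalog‑native listing with data‑first trust signals is a manifest that declares the data scopes it needs and the evaluation it ships with, checked against what the installing workspace already allows. The `ListingManifest` fields, scope strings, and `can_install` check are hypothetical; no existing marketplace format is implied.

```python
from dataclasses import dataclass, field


@dataclass
class ListingManifest:
    name: str
    required_scopes: set           # hypothetical scope strings, e.g. "claims.read"
    eval_datasets: list = field(default_factory=list)
    min_eval_score: float = 0.0    # score the publisher claims on its bundled benchmark


def can_install(manifest, local_allowed_scopes, local_eval_score=None):
    """Install only if every requested scope is already granted locally and,
    when a local re-evaluation exists, it meets the publisher's claimed score."""
    missing = manifest.required_scopes - local_allowed_scopes
    if missing:
        return False, f"missing scopes: {sorted(missing)}"
    if local_eval_score is not None and local_eval_score < manifest.min_eval_score:
        return False, "local evaluation below claimed score"
    return True, "ok"


listing = ListingManifest(
    name="claims-triage-template",
    required_scopes={"claims.read", "policies.read"},
    eval_datasets=["claims_triage_benchmark_v1"],
    min_eval_score=0.85,
)
print(can_install(listing, {"claims.read"}))
# (False, "missing scopes: ['policies.read']")
print(can_install(listing, {"claims.read", "policies.read"}, local_eval_score=0.88))
# (True, 'ok')
```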

Marketplaces will also intersect with the application layer. For example, the way we buy and deploy assistants is evolving, as covered in ChatGPT becomes a storefront. The winning marketplaces will let teams drop an agent into a catalog and have it adopt local policies, identities, and data definitions without brittle wiring.

Agent‑to‑agent interoperability will harden

If agents live at the data layer, they need shared contracts.

- Tool calls with typed inputs and outputs that are catalog objects. This enables automated validation, lineage tracking, and permission checks.

- Event schemas for publish and subscribe. Agents should not guess what a downstream consumer expects. They should read the same schema from the catalog.

- Evaluation handshakes. Agents must agree on how to emit traces and scores so platform monitors can compare versions across teams.

Expect vendors to converge on schema conventions and catalog‑backed registries. This will feel more like service‑oriented architecture for agents than chatbots passing notes.
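A typed tool contract can be as simple as a schema read from the catalog and checked before the call runs. The stdlib sketch below assumes a schema expressed as field‑name‑to‑type mappings; a production system would more likely use JSON Schema or a similar standard, and `TOOL_SCHEMAS`, `validate`, and `validate_call` are hypothetical.

```python
# Hypothetical registry standing in for catalog-held tool contracts.
TOOL_SCHEMAS = {
    "request_documents": {
        "inputs": {"claim_id": int, "doc_types": list},
        "outputs": {"request_id": str},
    }
}


def validate(payload, spec):
    """Return a list of problems: missing fields, unexpected fields, wrong types."""
    problems = [f"missing field: {k}" for k in spec if k not in payload]
    problems += [f"unexpected field: {k}" for k in payload if k not in spec]
    problems += [
        f"{k}: expected {t.__name__}, got {type(payload[k]).__name__}"
        for k, t in spec.items()
        if k in payload and not isinstance(payload[k], t)
    ]
    return problems


def validate_call(tool, inputs):
    """Reject a tool call whose inputs do not match the registered contract."""
    problems = validate(inputs, TOOL_SCHEMAS[tool]["inputs"])
    if problems:
        raise TypeError(f"{tool}: " + "; ".join(problems))
    return inputs


validate_call("request_documents", {"claim_id": 17, "doc_types": ["police_report"]})
try:
    validate_call("request_documents", {"claim_id": "17"})
except TypeError as err:
    print(err)
# request_documents: missing field: doc_types; claim_id: expected int, got str
```

Because both caller and callee read the same contract, a mismatch surfaces as a validation error at the boundary instead of a confused downstream agent.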

What to do Monday morning

- Pick one job that matters. Define a task spec that names inputs, outputs, guardrails, and escalation rules. Store it as a catalog artifact with an owner.

- Build a stress test. Create a benchmark dataset with missing data, edge cases, and policy landmines. Track it as a living asset.

- Stand up trace logging. Use MLflow 3.0 to capture traces, scores, and costs. Make one dashboard that shows quality, latency, and spend for the job.

- Decide model routes inside the platform. Start with a strong default and a safer fallback. Document when to switch and why.

- Run shadow mode for two weeks. Keep humans in the loop. Do not scale until the dashboard shows stable quality and spend.

- Only then consider marketplace templates or custom multi‑agent orchestration. Treat anything that bypasses the data plane as an exception.
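The shadow‑mode step implies a concrete graduation test: compare the agent's proposed actions with what humans actually did, and scale only when agreement and spend are stable over the observation period. A minimal sketch, assuming a log of (agent_action, human_action, cost) records per day; the thresholds are hypothetical, and the 14‑day minimum simply mirrors the two weeks suggested above.

```python
from statistics import mean


def daily_agreement(records):
    """Share of cases where the agent's proposed action matched the human's."""
    return mean(1.0 if agent == human else 0.0 for agent, human, _ in records)


def ready_to_scale(days, min_days=14, min_agreement=0.9, max_cost_swing=0.2):
    """days: list of per-day record lists, each record (agent_action, human_action, cost).

    Ready when there are at least min_days of data, every day meets the
    agreement bar, and daily spend stays within +/- max_cost_swing of its mean.
    """
    if len(days) < min_days:
        return False
    if any(daily_agreement(day) < min_agreement for day in days):
        return False
    daily_cost = [sum(cost for _, _, cost in day) for day in days]
    avg = mean(daily_cost)
    return all(abs(c - avg) <= max_cost_swing * avg for c in daily_cost)


good_day = [("approve", "approve", 0.4)] * 9 + [("escalate", "approve", 0.4)]
print(ready_to_scale([good_day] * 14))  # True: 90% agreement, flat spend
print(ready_to_scale([good_day] * 10))  # False: fewer than 14 days observed
```

Checking every day, not just the period average, means one bad day during shadow mode delays graduation instead of being averaged away.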

The bottom line

The Databricks and OpenAI partnership matters because it puts state‑of‑the‑art models on the same rails as the data, rules, and evaluations that decide whether an agent can be trusted. The data layer is where enterprises already enforce security, measure quality, and manage cost. Turning that layer into the control plane for agents is not a philosophical choice. It is a practical one. With Agent Bricks and MLflow 3.0, the platform starts to look less like a playground for demos and more like a factory for dependable digital labor. The companies that embrace this shift will ship fewer chatbots and more reliable coworkers.