Claude Sonnet 4.5 and the Agent SDK make agents dependable

Anthropic’s Claude Sonnet 4.5 and its new Agent SDK push long-running, computer-using agents from novelty to production. Learn what is truly new, what to build first, and how to run these systems safely through Q4 2025.

From splashy demos to systems you can trust

On September 29, 2025, Anthropic launched two tightly coupled releases: the Claude Sonnet 4.5 model and the Claude Agent SDK. Anthropic presents Sonnet 4.5 as its most capable model for coding and computer use, while the Agent SDK exposes the building blocks needed to create long-running, tool-using agents with explicit guardrails. As Anthropic frames it, the goal is to move from single-session chat to repeatable workflows that survive audits, outages, and handoffs. For an overview of the release, see Anthropic’s official Sonnet 4.5 announcement.

If you have been waiting for a model that can keep track of complex objectives, use real tools, and run for entire shifts without constant babysitting, this release is a material step. Anthropic reports multi-hour to day-scale focus, which matters because real business tasks are not one prompt long. They span authentication retries, paging through dashboards, fetching files, waiting on builds, reconciling results, and returning with verified outputs. With the Agent SDK, those steps can be composed into a service you can reason about.

What is actually new in this release

Here is what moves this beyond a shinier chatbot.

- Memory that lasts and can be managed. The platform introduces purpose-built memory tools and context editing so an agent can retain relevant state without dragging around everything it has ever seen. For enterprise teams, this is a significant change: you can scope what persists, clear it when policies require, and reason about how memory affects the agent’s choice of actions.

- Permissions you can reason about. The Agent SDK supports explicit permission strategies: allow lists, block lists, and a permission mode that decides whether tools require human approval. That makes it feasible to roll out a powerful Bash tool in read-only mode first, then graduate to write access once guardrails and reviews are in place. Implementation details are covered in the Agent SDK documentation.

- Subagents for specialization and parallelism. Instead of a single, overloaded assistant, you can define dedicated subagents with their own prompts, tools, and context windows. The orchestration layer routes work to them, which keeps the main agent focused while specialists handle security scanning, test running, or browser automation in parallel.

- Better tool use and computer control. Anthropic expanded its execution capabilities from a Python sandbox to Bash, with file creation and direct file manipulation, plus first-party support for creating documents and spreadsheets from a conversation. Reliable agents must act on real artifacts and operating environments, not just propose changes to them.

- Stronger alignment and a clearer safety envelope. Sonnet 4.5 ships with AI Safety Level 3 (ASL-3) protections, including classifier-based filters for high-risk content. For regulated teams, an explicit safety level and documented filters are not marketing notes. They are what you show risk officers when you ask for approval to move from pilot to production. The announcement also highlights stronger results on coding and computer-use evaluations.
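
To make the permission item concrete, here is a minimal sketch of the decision that an allow list, a block list, and a permission mode combine into. It is plain Python, not the Agent SDK’s API: the mode names, the tool names (`Read`, `Grep`, `Bash`), and the read-only command list are hypothetical placeholders, and the string check on shell commands is a naive illustration rather than a security boundary.

```python
from enum import Enum


class Mode(Enum):
    # Hypothetical permission modes for illustration; not the Agent SDK's values.
    ASK_ALWAYS = "ask_always"          # every allowed call waits for a human
    AUTO_READ_ONLY = "auto_read_only"  # read-only calls run, everything else waits


# Hypothetical tool names and a deliberately small set of read-only shell commands.
READ_ONLY_TOOLS = {"Read", "Grep"}
READ_ONLY_COMMANDS = {"ls", "cat", "grep", "head", "tail", "df", "ps"}
# Characters that allow chaining, piping, redirection, or substitution in a shell.
SHELL_METACHARS = set(";|&<>`$\n")


def is_read_only(tool: str, args: dict) -> bool:
    """Naive classification: a read-only tool, or a single read-only shell command."""
    if tool in READ_ONLY_TOOLS:
        return True
    if tool == "Bash":
        command = args.get("command", "")
        words = command.split()
        return (
            bool(words)
            and words[0] in READ_ONLY_COMMANDS
            and not (SHELL_METACHARS & set(command))
        )
    return False


def decide(tool: str, args: dict, allow: set, block: set, mode: Mode) -> str:
    """Return 'deny', 'ask', or 'allow' for a proposed tool call."""
    if tool in block or tool not in allow:
        return "deny"  # block list wins; anything not explicitly allowed is denied
    if mode is Mode.AUTO_READ_ONLY and is_read_only(tool, args):
        return "allow"
    return "ask"  # route to a human approval step
```

Starting a new agent in `AUTO_READ_ONLY` and widening `READ_ONLY_COMMANDS` only on evidence mirrors the read-only-first Bash rollout described in the permissions item.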

Benchmarks will not perfectly match your stack, but they are a directional signal that the model navigates live systems more reliably than its predecessors. The real test remains your own scenarios.

The product leap: long-running agents that use computers

A key claim is that Sonnet 4.5 can autonomously work through multi-hour tasks without drifting. That capability turns a class of former demos into operational candidates. Think of concierge agents that file expense reports by driving a browser, site reliability agents that triage incidents across multiple dashboards, or finance agents that assemble close packages with evidence pulled from many systems. If the agent can run for 30 hours with checkpoints, memory, and explicit permissions, you can plan weekend-long runs that hand off to humans on Monday. The added value is not just speed. It is the ability to move whole workflows under policy.
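
Weekend-long runs only hand off cleanly if progress survives crashes and restarts. Here is a minimal sketch of step-level checkpointing, assuming each step is a callable that is safe to re-run and returns a JSON-serializable result. The file layout and function names are hypothetical, not part of the Agent SDK.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write state to a temp file, then atomically swap it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # readers never see a half-written checkpoint


def load_checkpoint(path: str) -> dict:
    """Return saved state, or a fresh state if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"next_step": 0, "results": []}
    with open(path) as f:
        return json.load(f)


def run_steps(steps: list, path: str) -> list:
    """Run steps in order, resuming after the last checkpointed step."""
    state = load_checkpoint(path)
    for i in range(state["next_step"], len(steps)):
        state["results"].append(steps[i]())
        state["next_step"] = i + 1
        save_checkpoint(path, state)  # persist after every completed step
    return state["results"]
```

Because a crash between finishing a step and saving the checkpoint re-runs that step, steps that touch external systems should be idempotent or check for their own prior effects.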

This shift lines up with a broader industry move to treat agents like services. We have seen similar patterns in cloud ops stacks and marketplaces that formalize how agents are deployed, observed, and governed. If that is your direction, you will find useful design parallels in our coverage of an ops layer for production agents, and in ChatGPT Agent goes live, which shows how a general chat interface can become action-oriented.

Four build patterns to prioritize now

Below are patterns we see moving fastest from prototype to production on this stack. Each includes a blueprint and the safety controls you should wire in from day one.

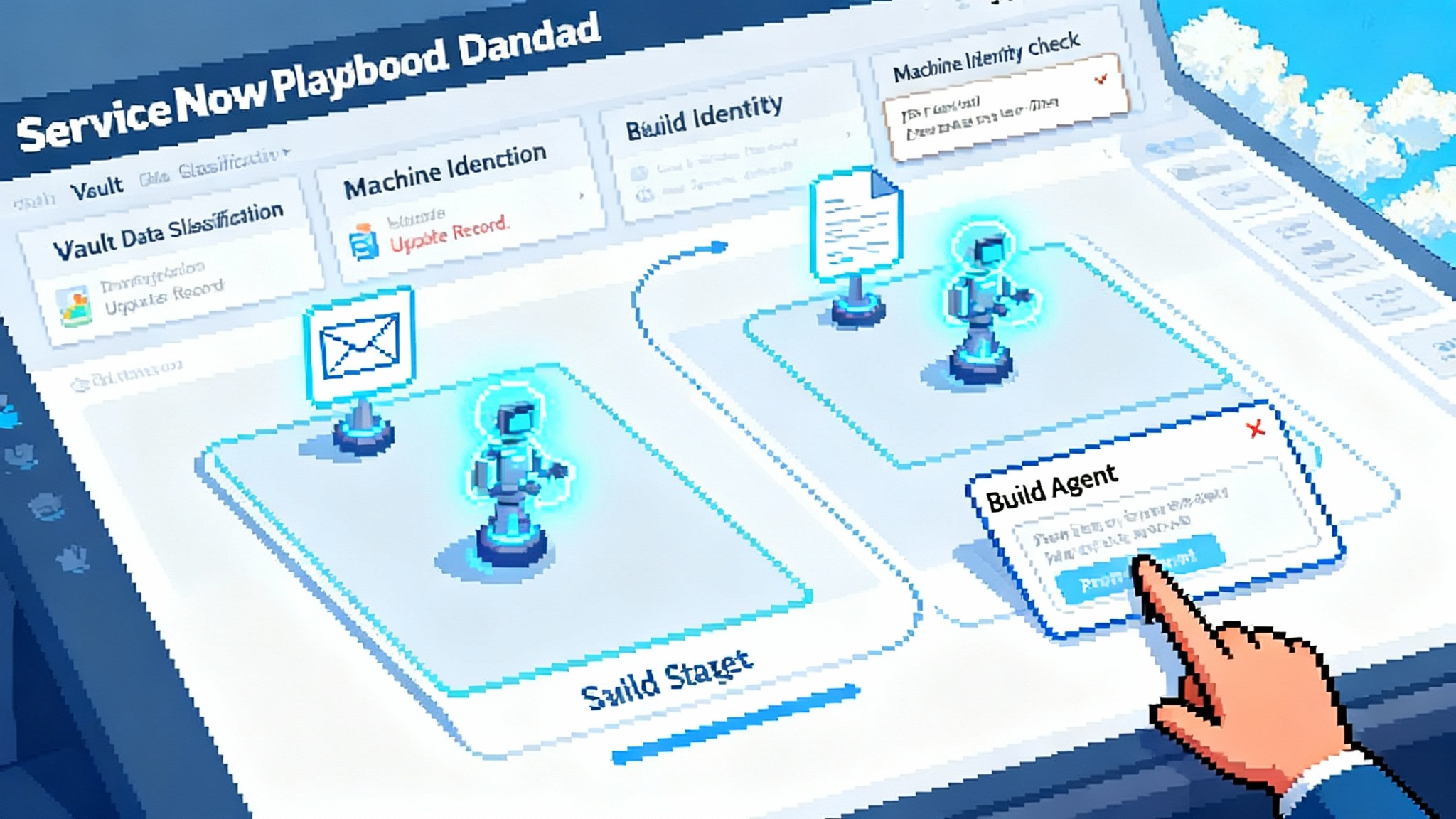

1) Operations runbooks that actually run

Blueprint

- Main agent: an incident commander with read-write access to logs, metrics, and ticketing, plus a controlled Bash shell for diagnostics.

- Subagents: a log diver, a metric explainer, and a remediation draft writer. Each has tailored prompts and tool scopes. The remediation writer can propose shell commands but requires approval to execute.

- Memory: store incident context and a list of attempted actions with timestamps. Expire automatically after a retention window or when tickets are resolved.

- Output: a structured play-by-play with links to artifacts, commands, and ticket updates.
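
The memory item in this blueprint can be sketched as a small store keyed by ticket, with a timestamp on every attempted action and two ways to forget: resolution and a retention window. This is an in-process illustration under stated assumptions: the class name, the expire-by-creation-time rule, and the injectable clock are hypothetical, and a production version would sit on whatever memory or storage backend your platform provides.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class IncidentMemory:
    """Hypothetical per-incident memory that expires by retention window or resolution."""
    retention_seconds: float
    clock: Callable[[], float] = time.time  # injectable so tests can control time
    _incidents: dict = field(default_factory=dict)

    def record(self, ticket_id: str, action: str) -> None:
        """Append an attempted action with its timestamp."""
        entry = self._incidents.setdefault(
            ticket_id, {"created": self.clock(), "actions": []}
        )
        entry["actions"].append((self.clock(), action))

    def resolve(self, ticket_id: str) -> None:
        """Forget everything about a ticket once it is resolved."""
        self._incidents.pop(ticket_id, None)

    def actions(self, ticket_id: str) -> list:
        """Return attempted actions, after dropping incidents past retention."""
        self._expire()
        entry = self._incidents.get(ticket_id)
        return [action for _, action in entry["actions"]] if entry else []

    def _expire(self) -> None:
        cutoff = self.clock() - self.retention_seconds
        expired = [t for t, e in self._incidents.items() if e["created"] < cutoff]
        for ticket_id in expired:
            del self._incidents[ticket_id]
```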

Why Sonnet 4.5 plus the Agent SDK

- Longer sessions let the agent watch a flaky system for hours. Bash and file tools let it produce real diagnostics. Permissions allow you to keep destructive actions behind an approval gate.

Controls

- Require review for any command that writes to production, and dual approval for sensitive namespaces. Log every tool call with request identifiers and retain the logs per policy. Pin model and tool versions per run for repeatability.
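
The approval controls reduce to a small rule: reads run, production writes need one approver, and writes to sensitive namespaces need two distinct approvers, none of whom is the agent that proposed the command. Here is a minimal sketch, assuming a hypothetical `Command` record and namespace list. Wire the real check into whatever approval step your permission mode invokes.

```python
from dataclasses import dataclass

# Hypothetical sensitive namespaces; replace with your own inventory.
SENSITIVE_NAMESPACES = {"payments", "auth"}


@dataclass(frozen=True)
class Command:
    text: str
    environment: str  # "development", "staging", or "production"
    namespace: str
    writes: bool      # classified upstream, for example by a permission check


def approvals_required(cmd: Command) -> int:
    """Number of distinct human approvals needed before execution."""
    if not cmd.writes or cmd.environment != "production":
        return 0
    return 2 if cmd.namespace in SENSITIVE_NAMESPACES else 1


def may_execute(cmd: Command, approvers: list, proposer: str) -> bool:
    """Count distinct approvers, excluding whoever proposed the command."""
    distinct = set(approvers) - {proposer}
    return len(distinct) >= approvals_required(cmd)
```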

2) Compliance evidence collectors

Blueprint

- Main agent: orchestrates a quarterly evidence sweep for controls like access reviews and backup verification.

- Subagents: an identity auditor that exports user lists, a backup verifier that pulls restore logs, and a change manager that compiles pull request histories.

- Memory: maintain an evidence checklist with status, data sources, and hashes of downloaded artifacts.

- Output: a signed package with a table of controls, evidence links, and exceptions ready for auditor review.
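
Hashing each downloaded artifact is what lets an auditor confirm that the evidence in the package is the evidence the agent collected. Here is a minimal sketch of a checklist entry and the hashing step using Python’s standard `hashlib`. The `EvidenceItem` fields and status values are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvidenceItem:
    control: str                  # e.g. "quarterly access review"
    source: str                   # system the evidence came from
    status: str = "pending"       # hypothetical states: pending -> collected
    sha256: Optional[str] = None  # hex digest of the collected artifact


def sha256_file(path: str, chunk_size: int = 65536) -> str:
    """Stream the file in chunks so large exports are not loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_artifact(item: EvidenceItem, path: str) -> None:
    """Hash the downloaded artifact and mark the checklist entry collected."""
    item.sha256 = sha256_file(path)
    item.status = "collected"


def verify(item: EvidenceItem, path: str) -> bool:
    """True only if the artifact on disk still matches the recorded hash."""
    return item.sha256 is not None and sha256_file(path) == item.sha256
```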

Why Sonnet 4.5 plus the Agent SDK

- Separating subagents keeps sensitive systems compartmentalized. Tool permissions restrict each subagent to the minimum scope. Long-running tasks finish without a human poking the agent every hour.

Controls

- Define allowed tools per subagent. Use read-only service accounts. Route any escalation, such as granting a temporary permission, to a human approval step built into the permission mode.

3) Browser robotic process automation for knowledge work

Blueprint

- Main agent: plans steps end to end and allocates work to a headless browser subagent.

- Subagents: a navigator that clicks through portals and a verifier that screenshots before and after states, then extracts structured data from the page.

- Memory: keep a per-site profile containing login steps, anti-automation quirks, and field mapping schemas. Purge credentials after the task completes.

- Output: a machine-readable log of actions with screenshots and extracted data for downstream systems.
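
A JSON Lines log is one simple way to make the browser agent’s output machine-readable: one self-describing record per action, with pointers to the before and after screenshots. Here is a minimal sketch. The field names and screenshot paths are hypothetical, and capturing the screenshots is left to whatever browser automation tool you use.

```python
import json
from datetime import datetime, timezone
from typing import Optional, TextIO


def log_action(out: TextIO, step: int, action: str, target: str,
               before_png: str, after_png: str,
               extracted: Optional[dict] = None) -> dict:
    """Append one navigation step as a single JSON line and return the record."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "step": step,
        "action": action,  # e.g. "click", "fill", "submit"
        "target": target,  # selector or URL the action touched
        "screenshots": {"before": before_png, "after": after_png},
        "extracted": extracted or {},
    }
    out.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def read_actions(text: str) -> list:
    """Parse a JSON Lines log back into records for downstream systems."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]
```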

Why Sonnet 4.5 plus the Agent SDK

- Improved computer use translates into fewer retries and less brittle scripts. The SDK gives you the primitives to keep state and route steps to the right subagent without a custom orchestrator.

Controls

- Run the browser subagent in a low-permission environment with a read-only file system. Require human approval before any file upload or purchase action. Record every navigation step and retain screenshots for non-repudiation.

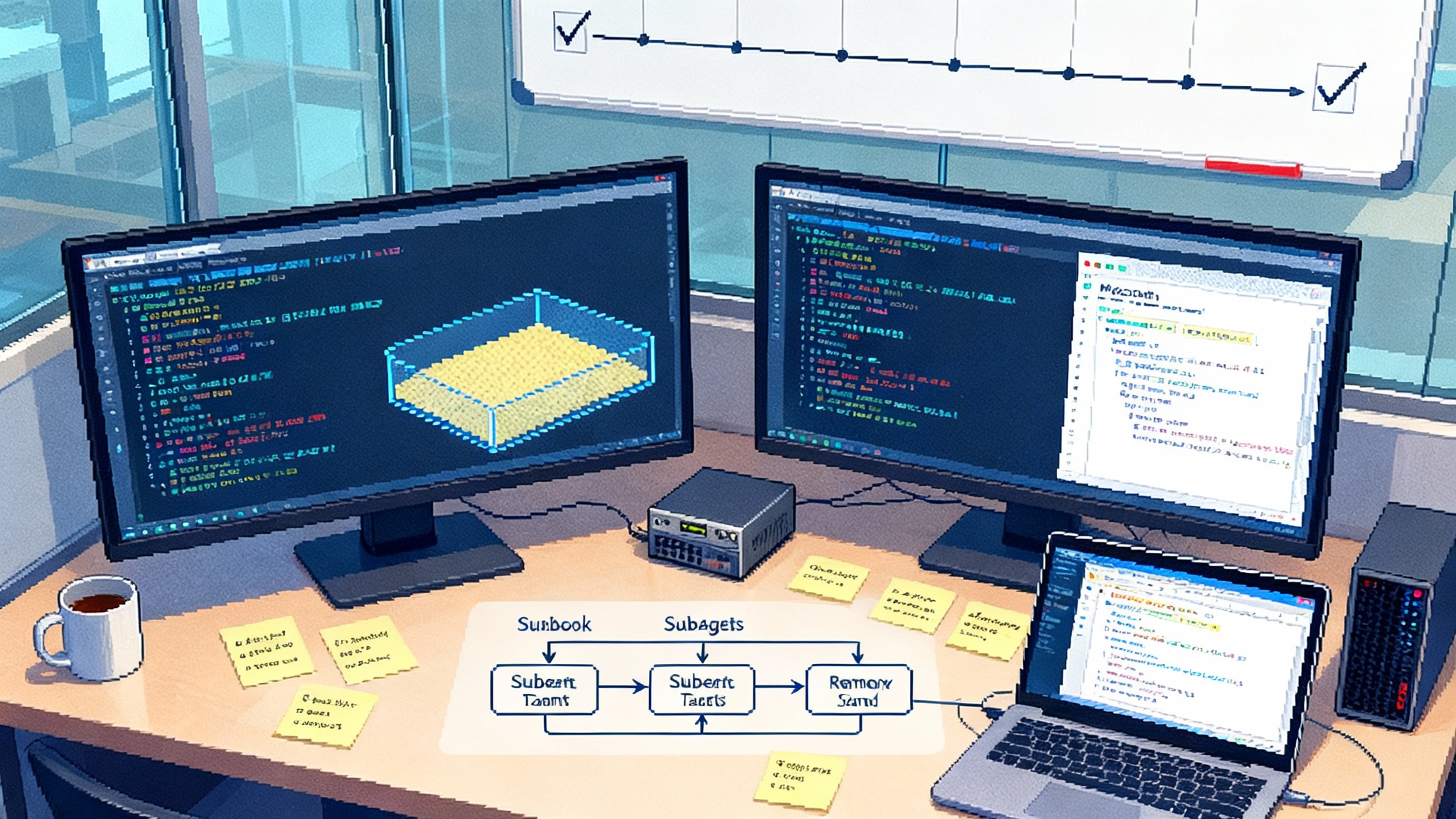

4) Codebase-wide refactoring and modernization

Blueprint

- Main agent: drives a refactor across dozens of repositories with a plan that enumerates patterns to change, tests to update, and rollout stages.

- Subagents: a code reviewer that enforces language standards, a test runner that updates snapshots, and a security scanner that checks for vulnerable imports.

- Memory: a registry of modules touched, pull requests opened, and test deltas by repository.

- Output: a set of well-scoped pull requests with passing tests and migration notes for human review. For a sense of how this lands in the developer workflow, compare it with our coverage in Copilot PR agent goes GA.

Why Sonnet 4.5 plus the Agent SDK

- Longer contexts and better reasoning help the agent carry the plan across many files. Subagents let teams parallelize linting, tests, and security checks. Bash execution and file tools let the agent run local builds and fix red tests automatically.

Controls

- Require a human code owner to land changes. Enforce a write blocker that only the review step can lift. Gate any repository-wide action on a green test suite and a dry-run diff.
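
These controls amount to a gate that lists every unmet condition rather than returning a bare yes or no, so the agent and the reviewer both see why a change is blocked. Here is a minimal sketch, assuming a hypothetical `ChangeSet` record populated by your CI system and code review tooling.

```python
from dataclasses import dataclass


@dataclass
class ChangeSet:
    repo: str
    tests_green: bool          # full test suite passed on the final commit
    dry_run_passed: bool       # dry run completed and produced a diff for review
    code_owner_approved: bool  # a human code owner approved the change


def landing_blockers(change: ChangeSet) -> list:
    """Return every unmet landing condition; an empty list means it may land."""
    reasons = []
    if not change.tests_green:
        reasons.append("test suite is not green")
    if not change.dry_run_passed:
        reasons.append("no dry-run diff")
    if not change.code_owner_approved:
        reasons.append("no code owner approval")
    return reasons


def may_run_repo_wide(changes: list) -> bool:
    """Repository-wide actions need a green suite and a dry-run diff everywhere."""
    return all(c.tests_green and c.dry_run_passed for c in changes)
```

Keeping code owner approval in `landing_blockers` but out of `may_run_repo_wide` reflects the split above: the repository-wide action gates on tests and dry runs, while landing each change still needs a human owner.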

How to evaluate and operate agents through Q4 2025

You will ship faster if you treat agents like services with formal objectives, guardrails, and observability. Here is a practical operating model for the next quarter.

Define clear service level objectives

- Task success rate: percent of runs that complete all required steps without human correction.

- Intervention rate: percent of tool calls that required human approval. Target a decreasing rate over time in low risk domains.

- Escalation decline rate: share of escalated actions that humans declined. A high rate points to overreach or poor prompting.

- Output quality: domain specific checks such as benchmarked accuracy for reconciliations or unit test pass rates for code changes.

Set targets for each agent, track them weekly, and publish a short runbook for how to respond when an agent misses them.
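
The first three objectives are simple ratios over run records, so they are easy to compute in a weekly job. Here is a minimal sketch, assuming a hypothetical per-run record. The third metric, `escalation_decline_rate`, is the share of escalated actions that humans declined. Output quality is domain specific, so it is left out.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    completed_without_correction: bool
    tool_calls: int
    approval_requests: int   # tool calls escalated for human approval
    approvals_declined: int  # escalations a human rejected


def _ratio(numerator: float, denominator: float) -> float:
    """Safe division; an empty denominator reports 0.0."""
    return numerator / denominator if denominator else 0.0


def slo_report(runs: list) -> dict:
    """Aggregate per-run records into the weekly SLO ratios."""
    tool_calls = sum(r.tool_calls for r in runs)
    requests = sum(r.approval_requests for r in runs)
    successes = sum(r.completed_without_correction for r in runs)
    return {
        "task_success_rate": _ratio(successes, len(runs)),
        "intervention_rate": _ratio(requests, tool_calls),
        "escalation_decline_rate": _ratio(sum(r.approvals_declined for r in runs), requests),
    }
```

Reporting 0.0 for an empty denominator keeps the sketch simple; on a dashboard you may prefer to show "no data" so a quiet week is not mistaken for a perfect one.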

Build a battery of pre-deployment evaluations

- Scenario suites that mirror your real tools and data, not abstract puzzles. Include auth failures, slow pages, and corrupted files.

- Red team prompts that try to coerce unsafe actions and exfiltrate data. Capture and label failures, then use them to refine prompts and tighten permissions.

- Safety envelope checks aligned to the protections described in the Sonnet 4.5 announcement. If your domain touches sensitive areas, document why AI Safety Level 3 protections are acceptable and which classifiers are likely to trip.
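
A scenario suite can be as simple as a list of named cases, each injecting one realistic fault and checking the agent’s outcome, with a crash counted as a failure. Here is a minimal sketch, assuming a hypothetical `agent` callable that accepts the fault to simulate and returns an outcome dictionary. In practice the fault would be injected into the tools (an expired token, a slow page, a truncated file) rather than passed as an argument.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    name: str
    fault: str                     # e.g. "auth_failure", "slow_page", "corrupted_file"
    check: Callable[[dict], bool]  # does the outcome meet the expected behavior?


def run_suite(agent: Callable[[str], dict], scenarios: list) -> dict:
    """Run every scenario; exceptions count as failures instead of aborting the suite."""
    results = {}
    for scenario in scenarios:
        try:
            results[scenario.name] = bool(scenario.check(agent(scenario.fault)))
        except Exception:
            results[scenario.name] = False
    return results
```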

Instrumentation and forensics from day one

- Log every tool invocation with parameters and return codes. Store request identifiers and correlate with chat turns for replay.

- Use your provider’s usage and cost interfaces to set budgets, alerts, and per agent cost centers. That helps detect drift and runaway sessions before they become incidents.

- Keep a durable, queryable trace. You need to answer after the fact which prompt, which tool, which parameter, and which version produced a given action.
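
Those after-the-fact questions are answerable only if every record carries the answers. Here is a minimal sketch of a structured tool-call record that includes the run, the chat turn, a request identifier, and the pinned model and tool versions. The field names are hypothetical, the in-memory list stands in for a durable store, and the model string in any real call should be the exact identifier your provider lists.

```python
import json
import time
import uuid
from typing import Optional


def log_tool_call(sink: list, run_id: str, turn: int, tool: str, params: dict,
                  return_code: int, model: str, tool_version: str,
                  request_id: Optional[str] = None) -> dict:
    """Append one structured record per tool invocation."""
    record = {
        "ts": time.time(),
        "run_id": run_id,
        "turn": turn,  # correlates with the chat transcript for replay
        "request_id": request_id or str(uuid.uuid4()),
        "tool": tool,
        "params": params,
        "return_code": return_code,
        "model": model,  # pinned model identifier for this run
        "tool_version": tool_version,
    }
    sink.append(json.dumps(record, sort_keys=True))
    return record


def find_by_request(sink: list, request_id: str) -> Optional[dict]:
    """Answer 'what produced this action?' from the trace."""
    for line in sink:
        record = json.loads(line)
        if record["request_id"] == request_id:
            return record
    return None
```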

Controlled autonomy with explicit permission tiers

- Read tier: the default for new agents. They can inspect logs, files, and dashboards.

- Write tier with human approval: agents can propose changes and queue actions that a human must release.

- Trusted tier for low-risk environments: after weeks of clean runs, allow certain writes automatically while keeping break-glass controls and alerts.

Tie tiers to environment classes such as development, staging, and production. The Agent SDK’s permission strategies are designed to support exactly this evolution.
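
One way to tie tiers to environment classes is to cap the tier an agent has been granted at the maximum its target environment allows, so a trusted agent still queues writes in production. Here is a minimal sketch. The caps below are hypothetical policy choices, not defaults from the Agent SDK.

```python
from enum import IntEnum


class Tier(IntEnum):
    READ = 0
    WRITE_WITH_APPROVAL = 1
    TRUSTED = 2


# Hypothetical policy: only development counts as low risk here.
MAX_TIER = {
    "development": Tier.TRUSTED,
    "staging": Tier.WRITE_WITH_APPROVAL,
    "production": Tier.WRITE_WITH_APPROVAL,
}


def effective_tier(granted: Tier, environment: str) -> Tier:
    """Cap the granted tier by the environment; unknown environments are read-only."""
    return Tier(min(granted, MAX_TIER.get(environment, Tier.READ)))


def handle_write(granted: Tier, environment: str) -> str:
    """Decide what happens to a proposed write at the effective tier."""
    tier = effective_tier(granted, environment)
    if tier == Tier.READ:
        return "deny"
    if tier == Tier.WRITE_WITH_APPROVAL:
        return "queue_for_human_release"
    return "execute_and_alert"  # trusted: runs automatically, alerts stay on
```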

People and process

- Owner per agent: each agent has a named maintainer on call during business hours.

- Weekly change reviews: prompt updates, tool additions, and permission changes go through the same change process as code.

- Documentation as an artifact: every agent should output a machine readable trace and a human readable summary at the end of a run.

Risks and unknowns to plan for

- Classifier false positives and blocked conversations. Safety filters are a feature, not a bug, but they can interrupt flows. Design retry logic and graceful fallbacks, such as switching to a lower-risk model for non-sensitive steps. Track where filters trip, and tighten prompts and permissions accordingly.

- Evaluation brittleness. Advanced models can behave differently in test-like settings. What matters operationally is that evaluations look and feel like production. Avoid toy prompts. Mirror your real authentication, rate limits, and flaky networks.

- Tool sprawl and permission creep. It is tempting to connect every database and internal system on day one. Resist it. Start with narrow scopes and graduate by evidence. Keep a quarterly permission review.

- Vendor and model churn. Lock down versions and pin model identifiers in your pipelines. When you upgrade to Sonnet 4.5 in production, run a parallel shadow period to compare outputs and costs with the prior model. Track regression deltas.
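
For the classifier risk, retry logic with a graceful fallback can be sketched as a wrapper that retries a blocked call with exponential backoff, records every block for later review, and falls back to another model only when the step is marked non-sensitive. The `BlockedByClassifier` exception, the `primary` and `fallback` callables, and the retry counts are hypothetical. Map them onto however your client surfaces a filtered response.

```python
import time
from typing import Callable, Optional


class BlockedByClassifier(Exception):
    """Hypothetical signal that a safety filter stopped the response."""


def call_with_fallback(primary: Callable[[str], str], fallback: Callable[[str], str],
                       prompt: str, sensitive: bool, retries: int = 2,
                       backoff_seconds: float = 1.0,
                       sleep: Callable[[float], None] = time.sleep,
                       block_log: Optional[list] = None) -> tuple:
    """Return (response, source), where source is 'primary' or 'fallback'."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(retries):
        try:
            return primary(prompt), "primary"
        except BlockedByClassifier as err:
            last_error = err
            if block_log is not None:
                # Track where filters trip so prompts and permissions can be tuned.
                block_log.append({"attempt": attempt, "reason": str(err)})
            if attempt + 1 < retries:
                sleep(backoff_seconds * 2 ** attempt)  # exponential backoff
    if sensitive:
        raise last_error  # sensitive steps never route around the filter; escalate
    return fallback(prompt), "fallback"
```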

A 90 day adoption plan

Days 0 to 15

- Choose one workflow from the patterns above. Write an objective definition, inputs, required outputs, and disallowed actions.

- Implement the agent with explicit permission mode, one or two subagents, and memory scoped to the task. Wire audit logging and request identifiers.

- Build a scenario suite of at least 20 realistic tests that include failure states.

Days 16 to 45

- Run the agent daily in staging. Track success rate, intervention rate, and cost per run. Fix the top three failure modes each week.

- Add a controlled production pilot with a write tier that requires human approval. Expand subagents for specialization and parallelism.

- If your organization has an upcoming audit, start the compliance evidence collector pattern in parallel.

Days 46 to 90

- Graduate the agent to the trusted tier for low-risk environments once it hits your success thresholds. Keep high-risk steps behind approvals.

- Document handoffs, escalation paths, and rollback procedures. Publish a dashboard with per run traces.

- Plan the next two agent workflows and reuse subagents and prompts where possible to standardize patterns.

How to make the most of the Agent SDK

The Agent SDK is opinionated in ways that help teams ship. It encourages explicit tool definitions, parameter schemas, and permission modes. It also codifies the idea that a complex agent is really a set of smaller specialists. If you have been wiring this by hand, the SDK gives you primitives so you can stop reinventing orchestration glue and focus on domain logic. Read the Agent SDK documentation with your security and platform teams at the table, then align on policy defaults up front.

Small choices compound into reliability. Require human approval for any write in production for the first month. Pin the model and tool versions per run. Emit structured logs for every tool call. Encode your SLOs in dashboards that product managers and on-call engineers actually watch. Treat the agent’s prompt like code, with change control. These are mundane practices, but they turn a demo into a dependable system.

For context on how other platforms are formalizing production practices, study how marketplaces and ops layers shape what teams can deploy. Our piece on an ops layer for production agents maps cleanly to the controls you will want alongside Sonnet 4.5.

The bottom line

Sonnet 4.5 pairs a model that can stay on task for real durations with an SDK that encodes the unglamorous plumbing of production agents: memory management, permissioning, disciplined delegation, and observable execution. The combination is what turns an impressive demo into an accountable system. If you prioritize the right patterns, wire controls early, and treat agents like services with objectives and on-call owners, the path from idea to dependable workflow is finally short enough to walk.

For teams ready to act, begin with one workflow, one owner, and a narrow permission footprint. Build a scenario suite that feels like your real world. Track the numbers that matter, not vanity metrics. By the time Q4 2025 closes, you can have an agent that runs with confidence, hands off cleanly to humans, and pays for itself in saved hours and fewer late night incidents.