AWS AgentCore brings an ops layer for production AI agents

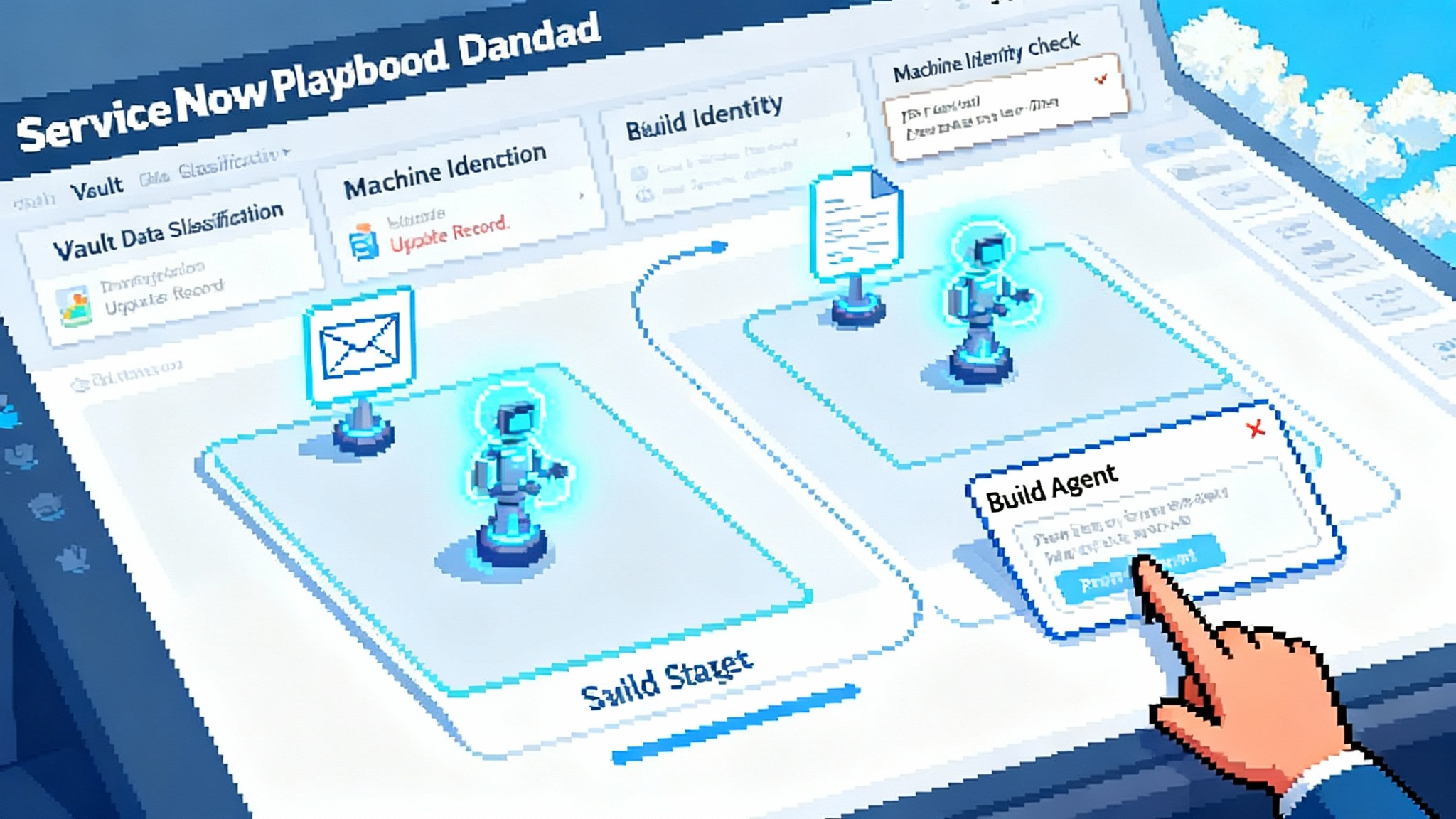

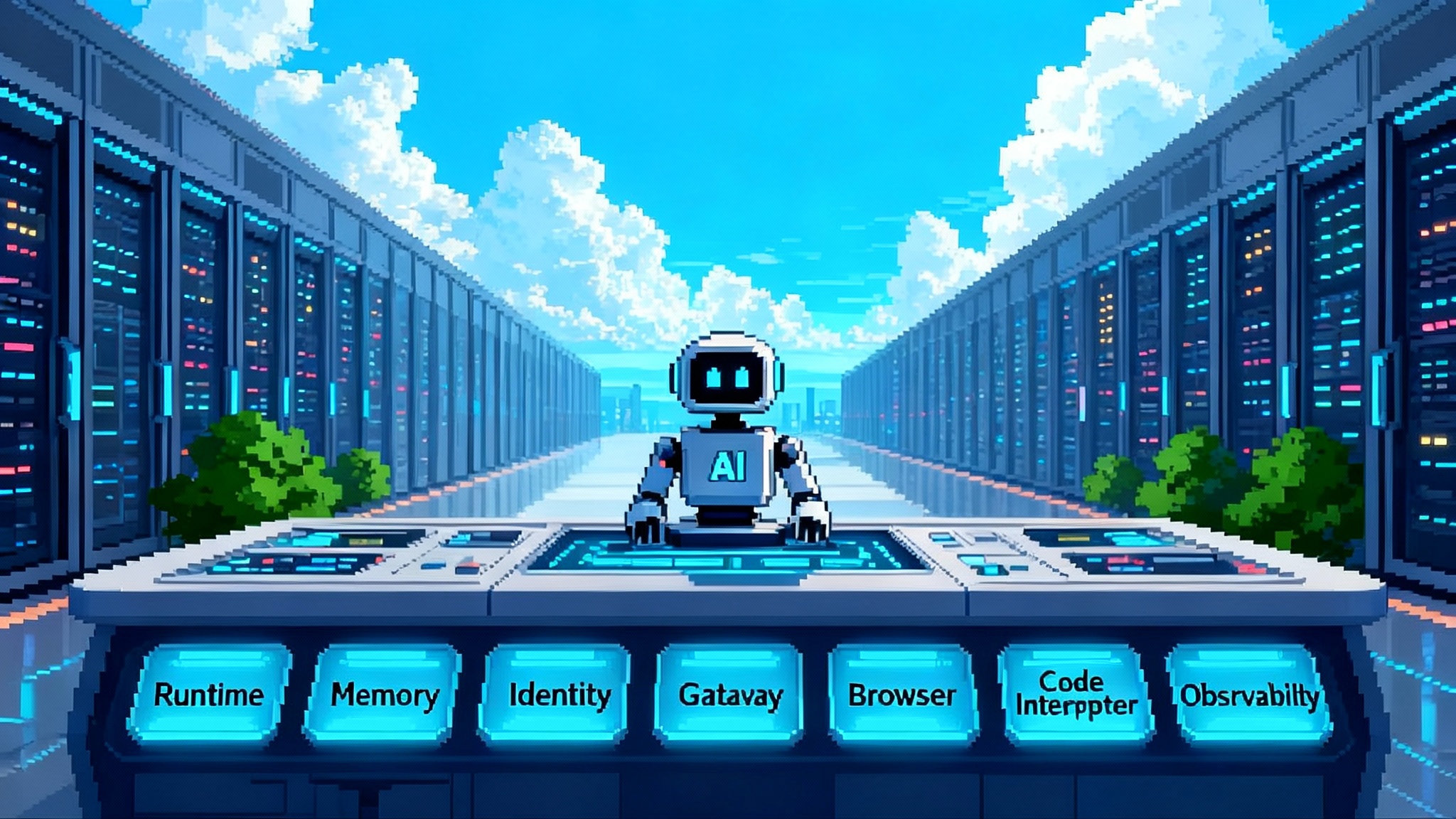

Announced at AWS Summit New York on July 16, 2025, Amazon Bedrock AgentCore bundles Runtime, Memory, Identity, Gateway, Browser, Code Interpreter, and Observability to turn agent prototypes into scalable production systems.

The news in one line

At AWS Summit New York on July 16, Amazon Web Services launched Amazon Bedrock AgentCore, a set of seven primitives that turns promising agent prototypes into production software: Runtime, Memory, Identity, Gateway, Browser, Code Interpreter, and Observability. You can think of it as an operations layer for agents, designed to work with your favorite open frameworks and models while meeting enterprise standards for security, scale, and audit. AWS framed it as a bridge between today’s creative agent experiments and tomorrow’s dependable systems, and for once the framing fits the features. The full rundown is in the official post, which names the services and how they fit together: Introducing Amazon Bedrock AgentCore.

Why this matters: agents finally get an ops layer

If building agentic apps in 2024 felt like flying a homebuilt drone, AgentCore is the control tower, the runway lights, and the flight recorder. Open frameworks such as LangGraph, CrewAI, LlamaIndex, and Strands Agents help you sketch agent behavior. What enterprises lacked was the production scaffolding around those behaviors: identity-aware tool access, durable memory, safe execution sandboxes, standardized telemetry, and a way to buy vetted tools that plug in quickly. AgentCore is that scaffolding.

Enterprises are also pushing toward heterogeneous stacks where agents cooperate across vendors and clouds. Efforts like Google’s push for cross-vendor agents show why a portable operations layer matters. If your tools, identities, and traces are standardized, your agents can evolve without ripping out the foundation each time a new model or protocol shows up.

The seven primitives, in plain language

Below is what each primitive does and what it unlocks for real workloads, with concrete examples you can start with today.

1) Runtime: deploy, isolate, and scale

Runtime gives you a serverless execution environment built for long-running and multimodal agent sessions, with isolation at the session level. Instead of stitching together containers, webhooks, and ad hoc schedulers, you deploy an entrypoint and get an endpoint that can scale across users and workloads. For a support triage agent, this means a customer session does not leak context to another, and the agent can keep working while it fetches knowledge, calls tools, and drafts a response.

What it enables: long-running tasks, safe multitenancy, and a clean path from a local prototype to an internet-facing service without rebuilding your stack.

Practical tip: treat Runtime like an application platform, not just a model host. Define clear inputs and outputs for the agent entrypoint. Use environment segmentation to separate experiments from production.
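To make "clear inputs and outputs" concrete, here is a minimal sketch of an entrypoint contract for the support triage example. It is plain Python, not the AgentCore SDK: the names `TriageRequest`, `TriageResponse`, `parse_request`, and `handle`, plus the two environment labels, are assumptions made for illustration, and the agent call itself is stubbed out.

```python
from dataclasses import dataclass

# Hypothetical environment labels used to keep experiments apart from production.
ALLOWED_ENVIRONMENTS = {"experiment", "production"}


@dataclass(frozen=True)
class TriageRequest:
    """Input contract for an illustrative support triage entrypoint."""
    session_id: str
    user_message: str
    environment: str = "experiment"


@dataclass(frozen=True)
class TriageResponse:
    """Output contract: a draft reply plus the tools the agent used."""
    session_id: str
    draft_reply: str
    tools_used: tuple = ()


def parse_request(payload: dict) -> TriageRequest:
    """Validate the raw payload before any agent logic runs."""
    missing = {"session_id", "user_message"} - set(payload)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    environment = payload.get("environment", "experiment")
    if environment not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment}")
    return TriageRequest(payload["session_id"], payload["user_message"], environment)


def handle(payload: dict) -> TriageResponse:
    """Entrypoint body; the real agent work is replaced by a placeholder."""
    request = parse_request(payload)
    reply = f"Received: {request.user_message}"  # stand-in for planning and tool calls
    return TriageResponse(request.session_id, reply)
```

Rejecting malformed payloads at the boundary keeps contract errors out of the agent's reasoning loop, where they are much harder to diagnose.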

2) Memory: short-term continuity and long-term recall

Memory stores interactions so an agent stays oriented across turns, and can also learn from past sessions. In practice, you can keep the last five steps for tight context windows, while persisting profiles or policies for future calls. Picture a procurement agent that remembers your vendor preferences, discount thresholds, and typical delivery windows. When legal tweaks a clause, the agent can surface that change in the next contract draft because it recalls relevant history.

What it enables: consistent experiences across sessions, fewer hallucinated callbacks to the user, and a defensible path to personalization that does not require custom fine-tuning.

Practical tip: separate transient conversation memory from durable business memory. Tag both with actor, session, and purpose so compliance teams can search and purge with confidence.
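One way to picture that separation is a small two-tier store. The sketch below is an in-process stand-in, not the Memory service's API: `MemoryEvent`, `AgentMemory`, and the five-turn window are illustrative assumptions that mirror the tagging advice above.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryEvent:
    actor: str    # who produced the fact, such as a user or agent id
    session: str  # session in which it was recorded
    purpose: str  # why it is stored, such as "vendor_preference"
    content: str


class AgentMemory:
    """Hypothetical store: a short rolling window plus durable, tagged facts."""

    def __init__(self, window: int = 5):
        self.short_term = deque(maxlen=window)  # oldest turns fall off automatically
        self.durable = []

    def remember_turn(self, event: MemoryEvent) -> None:
        self.short_term.append(event)

    def persist(self, event: MemoryEvent) -> None:
        self.durable.append(event)

    def search(self, **tags: str) -> list:
        """Return durable events whose tags match every given key and value."""
        return [e for e in self.durable
                if all(getattr(e, key) == value for key, value in tags.items())]

    def purge(self, **tags: str) -> int:
        """Delete matching durable events and report how many were removed."""
        if not tags:
            raise ValueError("refusing to purge without at least one tag")
        doomed = self.search(**tags)
        self.durable = [e for e in self.durable if e not in doomed]
        return len(doomed)
```

Because every durable event carries actor, session, and purpose, a compliance request such as "remove everything stored for this user" becomes a single `purge(actor=...)` call rather than a manual hunt.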

3) Identity: secure tool access by design

Identity lets agents call services as a user or as themselves with explicit consent and scoped credentials. That means an agent can request a Salesforce token on behalf of a salesperson, check a case, and log a note, while the same agent can use an API key to query a company database under a bot identity. You get token refresh, secure storage for keys, and a clear audit trail of who did what, when.

What it enables: verifiable, least-privilege access to tools without leaving credentials in prompts. It also reduces the hidden operational risk that lives in ephemeral scripts and shadow agents.

Practical tip: align scopes with real job-to-be-done verbs. For example, create identities for read_dashboard, create_ticket, and approve_refund rather than generic read and write scopes.
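As a rough illustration of verb-style scopes, the sketch below checks a scope before an action runs. It does not use the Identity service: `AgentIdentity`, `ScopeError`, `require_scope`, and the sample identities are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    name: str
    kind: str          # "user_delegated" or "bot"
    scopes: frozenset  # verb-style scopes this identity may exercise


class ScopeError(PermissionError):
    """Raised when an identity attempts an action outside its scopes."""


def require_scope(identity: AgentIdentity, scope: str) -> None:
    if scope not in identity.scopes:
        raise ScopeError(f"{identity.name} lacks scope {scope!r}")


def approve_refund(identity: AgentIdentity, order_id: str) -> str:
    """Illustrative action guarded by a single, narrowly named scope."""
    require_scope(identity, "approve_refund")
    return f"refund approved for {order_id} by {identity.name}"


# A background bot can read dashboards but cannot approve refunds.
reporting_bot = AgentIdentity("reporting-bot", "bot", frozenset({"read_dashboard"}))
# A delegated identity acts for a person who consented to these verbs.
support_lead = AgentIdentity(
    "support-lead", "user_delegated", frozenset({"create_ticket", "approve_refund"})
)
```

With these definitions, `approve_refund(support_lead, "ORD-1")` succeeds, while the same call with `reporting_bot` raises `ScopeError`. Narrow verbs make the audit log self-explanatory in a way generic read and write scopes do not.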

4) Gateway: one front door for tools and protocols

Gateway exposes your existing application programming interfaces, functions, and third-party software as agent-ready tools. It normalizes differences across specifications and protocols, including the Model Context Protocol, so your agents can discover and call tools in a uniform way. Imagine a claims agent that must tap a customer relationship system, a payments provider, an internal rules engine, and a legacy mainframe wrapped by a function. Gateway presents this zoo as a simple, searchable toolbox.

What it enables: secure tool discovery, authentication and throttling in one place, and cross-cutting policies like multitenancy and request transformation without custom glue code.

Practical tip: think like an API product manager. Curate tool catalogs by business domain, publish examples of valid payloads, and version tools so agents and humans both have consistent interfaces.
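A curated, versioned catalog can be sketched in a few lines. This is an in-memory model of the idea rather than Gateway's actual configuration format; `ToolSpec`, `ToolCatalog`, and the `name@version` label are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolSpec:
    """Catalog entry for one versioned tool (all names are illustrative)."""
    name: str
    version: str
    domain: str             # business domain used to group the catalog
    required_fields: tuple  # minimal input contract
    example_payload: dict = field(default_factory=dict)


class ToolCatalog:
    def __init__(self):
        self._tools = {}

    @staticmethod
    def missing_fields(spec: ToolSpec, payload: dict) -> list:
        """List required fields that a payload does not provide."""
        return sorted(set(spec.required_fields) - set(payload))

    def register(self, spec: ToolSpec) -> None:
        key = (spec.name, spec.version)
        if key in self._tools:
            raise ValueError(f"{spec.name} {spec.version} is already published")
        # A published example must itself satisfy the contract.
        if self.missing_fields(spec, spec.example_payload):
            raise ValueError(f"example payload for {spec.name} is incomplete")
        self._tools[key] = spec

    def by_domain(self, domain: str) -> list:
        """Return 'name@version' labels for every tool in a business domain."""
        return sorted(f"{s.name}@{s.version}"
                      for s in self._tools.values() if s.domain == domain)
```

Keeping old versions registered alongside new ones lets an agent pinned to `create_ticket@1.0` keep working while newer agents adopt a stricter contract.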

5) Browser: automation where there is no application programming interface

Enterprises live with portals that were never designed for programmatic access. Browser gives your agent a managed way to navigate and act on these sites at scale. A revenue operations agent can log into a partner portal, export a report, and reconcile it with internal data, while observability captures the steps for later review.

What it enables: practical coverage of the long tail of enterprise web tasks, with managed concurrency and isolation.

Practical tip: keep a registry of allowed domains and form selectors. Combine Browser with Identity so sign-ins are audited and ephemeral.
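The registry itself can be very small. The sketch below uses only the standard library and checks a URL and selector before the agent acts; the host `partners.example.com`, the selectors, and the function name `is_action_allowed` are hypothetical and not part of the Browser service.

```python
from urllib.parse import urlsplit

# Hypothetical registry: allowed host -> CSS selectors the agent may touch.
ALLOWED_SITES = {
    "partners.example.com": {"#username", "#password", "#export-report"},
}


def is_action_allowed(url: str, selector: str, registry=ALLOWED_SITES) -> bool:
    """Allow an action only on an exact, HTTPS host match and a listed selector."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    if parts.scheme != "https" or host not in registry:
        return False
    return selector in registry[host]
```

Matching the parsed hostname exactly, rather than searching the URL string, means a lookalike such as `https://partners.example.com.evil.net/login` is rejected.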

6) Code Interpreter: safe, verifiable execution

When an agent needs to transform data, run a calculation, or test a hypothesis, Code Interpreter provides an isolated environment to execute generated code. You avoid the trap of free-form code inside your application process, and you gain consistent logging of inputs and outputs.

What it enables: reliable data wrangling, unit-tested micro-computations, and a controlled way to let agents generate and run code without risking the core app.

Practical tip: define a library of approved utilities and datasets that the interpreter can import. Log snippets and results to Observability so humans can spot brittle patterns.
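An approved-library policy can be partly enforced before code ever reaches the sandbox. The sketch below is a static pre-check built on Python's `ast` module; the allowlist contents and the name `unapproved_imports` are assumptions. It is not a sandbox, and it will not catch dynamic imports such as `__import__`, so treat it as a complement to isolated execution.

```python
import ast

# Hypothetical allowlist of modules that generated code may import.
APPROVED_MODULES = {"math", "statistics", "json", "csv"}


def unapproved_imports(source: str, approved=APPROVED_MODULES) -> set:
    """Return top-level module names imported by `source` but not approved."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            if node.level > 0:
                found.add("<relative>")  # relative imports are never approved here
            elif node.module:
                found.add(node.module.split(".")[0])
    return found - set(approved)


generated = "import math\nimport os.path\nfrom subprocess import run\n"
violations = unapproved_imports(generated)  # {"os", "subprocess"}
```

Logging the violation set alongside the rejected snippet gives reviewers a quick view of which libraries agents keep reaching for.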

7) Observability: auditable trajectories, not just metrics

Agents are sequences of decisions. Observability records those decisions as traces and spans, with labels for prompts, tool calls, intermediate results, and outcomes. That gives teams a real-time view for debugging and a historical ledger for audit. Support for OpenTelemetry means these traces flow into the platforms your teams already use, such as cloud monitoring and agent observability tools, so security, data, and application teams have a shared source of truth.

What it enables: explainability for business stakeholders, incident response for security, and faster iteration for developers.

Practical tip: standardize on span names like plan, choose_tool, tool_call, verify_result, and commit_action. These labels make cross-team conversations precise and searchable.
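To show what a fixed span taxonomy buys you, here is a standard-library sketch of a recorder that rejects names outside the agreed set. It is a teaching model, not the OpenTelemetry API or the Observability service: `TraceRecorder` and its record fields are hypothetical, and a real deployment would emit spans through an OpenTelemetry SDK instead.

```python
import time
from contextlib import contextmanager

# The span names suggested above, treated as a closed vocabulary.
SPAN_NAMES = {"plan", "choose_tool", "tool_call", "verify_result", "commit_action"}


class TraceRecorder:
    """Hypothetical in-memory recorder for one agent session."""

    def __init__(self, session_id: str):
        self.session_id = session_id
        self.spans = []

    @contextmanager
    def span(self, name: str, **attributes):
        if name not in SPAN_NAMES:
            raise ValueError(f"unknown span name: {name}")
        record = {"name": name, "attributes": attributes, "status": "ok"}
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            # Keep the error class so timeouts and contract mismatches are searchable.
            record["status"] = "error"
            record["error"] = type(exc).__name__
            raise
        finally:
            record["duration_s"] = time.perf_counter() - start
            self.spans.append(record)
```

A typical use is `with recorder.span("tool_call", tool="crm"): ...`; a failing block is still recorded, with its status set to `error`, before the exception propagates.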

A new Marketplace shelf for agents and tools

Alongside AgentCore, AWS introduced a new category in AWS Marketplace for prebuilt agents and agent tools. Instead of hunting through disparate listings, buyers can search for agents by use case, protocol support such as the Model Context Protocol, and deployment path, including direct use with Runtime and Gateway. That shortens procurement, centralizes billing, and gives compliance teams the guardrails they expect. Details and discovery are in the announcement: AI agents in AWS Marketplace.

What this unlocks right now

- Secure tool access: Identity and Gateway create an explicit contract for how agents authenticate and authorize across systems. Teams can approve, rotate, and revoke access without editing prompts or redeploying code.

- Long-running tasks: Runtime keeps stateful, multi-step workflows alive without brittle schedulers. Hand-offs between planning, tool calls, and verification happen in one durable context.

- Auditable trajectories: Observability captures the who, what, when, and why of agent decisions. That converts opaque behavior into a reviewable trail that legal, risk, and engineering can all understand.

- Model and framework choice: AgentCore works with open agent frameworks and multiple model providers. You keep your innovation surface while adding production discipline.

If you are tracking how security and governance are entering the AI supply chain, the Microsoft Security Store signals the same directional shift: first-class packaging, policy, and audit for components that agents rely on.

How to build on it today

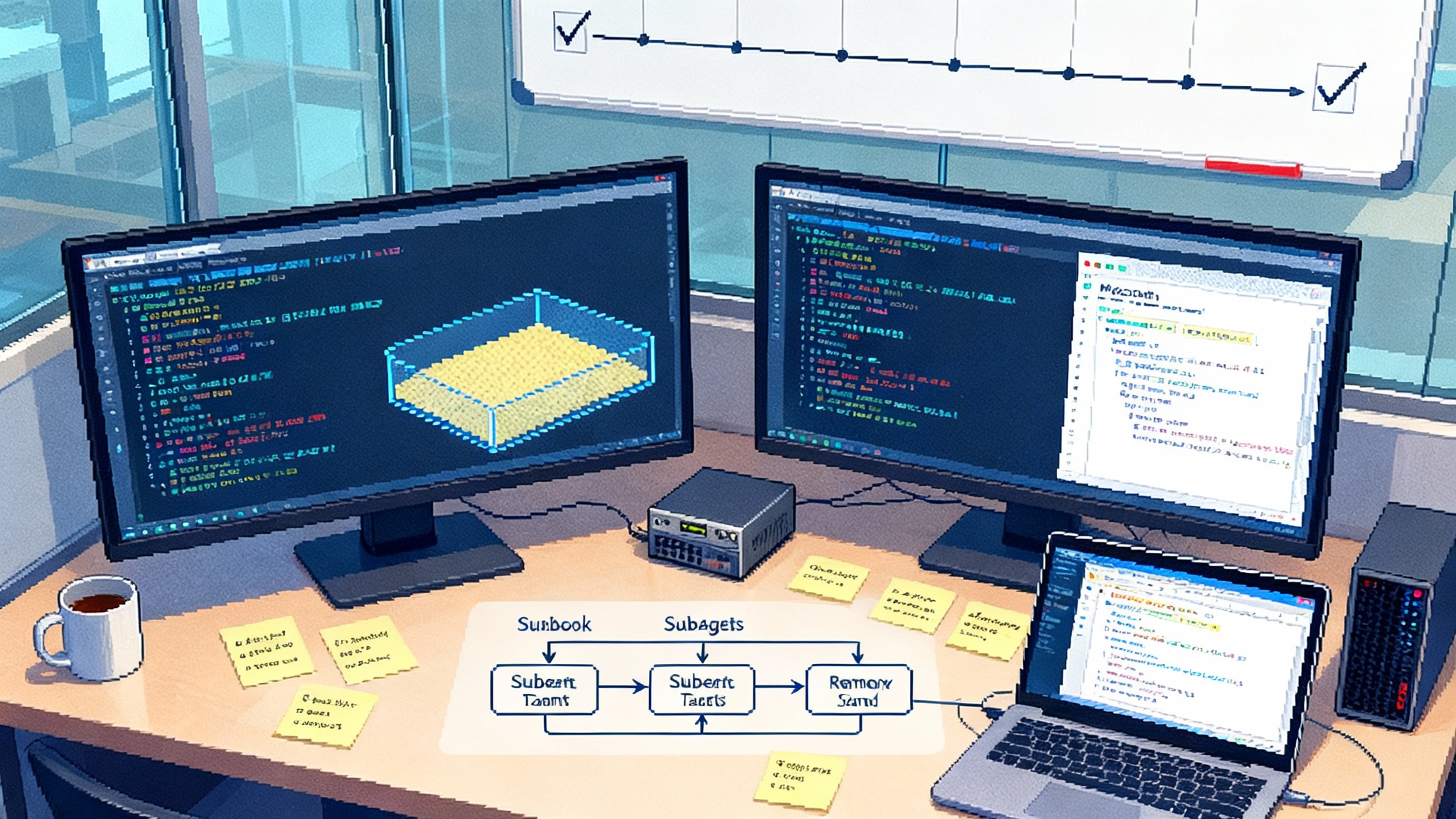

Here is a practical path for teams that want to get from prototype to production without losing momentum.

- Pick the framework you already know. If your team is invested in LangGraph or Strands Agents, keep it. AgentCore is designed to be framework neutral, so your first win is operational, not architectural.

- Define your agent’s contract. Write down a small schema for the entrypoint payload and the expected outputs. Treat the agent as a service with a stable boundary. That boundary is what Runtime will run and scale.

- Wire in Identity from day one. Create two identities for every tool the agent will call: a user-delegated identity for actions that require consent, and a bot identity for background checks or enrichment. Scope both as narrowly as possible.

- Wrap tools through Gateway. Start with three to five high-value tools. Publish normalized descriptions, inputs, examples, and error codes. If a vendor supports standard protocols like the Model Context Protocol, enable it. If not, wrap it behind a function or an existing application programming interface and let Gateway normalize.

- Persist the right Memory. Keep short-term memory lean to avoid confusing the model. Store durable facts with clear provenance. Annotate every memory event with actor, session, and purpose.

- Turn on Observability early. Define span names and tags, capture token usage, and keep an eye on error classes like timeouts and tool contract mismatches. Export through OpenTelemetry to the monitoring stack your operations team already trusts.

- Test actions, not just answers. For each tool, craft playbooks that include both a happy path and at least two failure modes. Track tool success rate as a first-class metric.

- Make model choice a late binding. Use a multi-model strategy. Keep prompts and tool schemas portable so you can switch or blend models without refactoring the ops layer.
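The advice to test actions rather than answers can be sketched as a tiny playbook runner. Everything here is illustrative: `lookup_order` is a made-up tool, and the playbook format and function names are assumptions rather than an AgentCore feature.

```python
def lookup_order(order_id: str) -> dict:
    """Hypothetical tool: raises on malformed input or a missing record."""
    if not order_id.startswith("ORD-"):
        raise ValueError("malformed order id")
    if order_id == "ORD-404":
        raise LookupError("order not found")
    return {"order_id": order_id, "status": "shipped"}


# Each case: (name, input, expected exception type, or None for the happy path).
PLAYBOOK = [
    ("happy_path", "ORD-1001", None),
    ("malformed_id", "1001", ValueError),
    ("missing_order", "ORD-404", LookupError),
]


def run_playbook(tool, cases) -> dict:
    """Run every case and record whether the tool behaved as the playbook expects."""
    results = {}
    for name, argument, expected_error in cases:
        try:
            tool(argument)
            results[name] = expected_error is None
        except Exception as exc:
            results[name] = expected_error is not None and isinstance(exc, expected_error)
    return results


def success_rate(outcomes) -> float:
    """Share of successful tool calls, for example from production traces."""
    outcomes = list(outcomes)
    return sum(outcomes) / len(outcomes) if outcomes else 0.0
```

The playbook checks that the tool fails in the documented ways, while `success_rate` is meant for live call outcomes, so a drop in the metric points at the environment rather than the contract.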

If you want to see how this shift meets productized agents at work, look at how the ChatGPT Agent launch approaches actions, approvals, and audit in enterprise environments.

Stay portable without losing velocity

- Protocols: Build around the Model Context Protocol for tool connectivity, and use OpenTelemetry for traces. These two give you exits if you ever need to move parts of your stack.

- Models: Keep the agent service boundary model agnostic. Choose models per task, and make that choice configurable. When possible, keep extraction and planning prompts distinct so you can mix a reasoning model for planning with a smaller, faster model for tool calling.

- Data gravity: Store durable memory in systems you already govern and back up. Use Memory as an interface, not a silo.

- Vendor strategy: Prefer features that travel with you. Identity scopes, tool contracts, and telemetry taxonomy should all be cloud neutral on paper, even if they are implemented on AWS.
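Keeping model choice configurable can be as simple as a routing table that the agent consults per task. The sketch below assumes placeholder provider and model names (`provider-a`, `reasoning-large`, and so on); none of them refer to real products, and `resolve_model` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    provider: str
    model_id: str


# Placeholder defaults: a reasoning model for planning, a faster one for tool calls.
DEFAULT_ROUTES = {
    "planning": ModelChoice("provider-a", "reasoning-large"),
    "tool_calling": ModelChoice("provider-b", "fast-small"),
}


def resolve_model(task: str, overrides=None, routes=DEFAULT_ROUTES) -> ModelChoice:
    """Pick the model for a task, letting deployment config override defaults."""
    merged = {**routes, **(overrides or {})}
    if task not in merged:
        raise KeyError(f"no model configured for task {task!r}")
    return merged[task]
```

Because the agent code only ever calls `resolve_model("planning")`, swapping or blending models becomes a configuration change rather than a refactor.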

Who this disrupts, and how to respond

- Agent platforms: Many startups sell runtimes, tool gateways, sandboxes, and observability as a bundle. AgentCore now provides those primitives from the cloud provider that already holds enterprise identities and networks. Startups in this space will need to differentiate higher in the stack with domain-specific blueprints, rigorous verification systems, advanced simulation for safety, and managed change control for prompts and policies. The most durable path is to integrate with Identity, Gateway, and Observability, and sell outcomes like closed-case rate, not just infrastructure.

- MLOps and observability vendors: Model-centric tools that stop at prompt and token charts will feel incomplete next to agent trajectory traces. The opportunity is to ingest OpenTelemetry spans for agent steps, add agentic quality metrics such as tool success rate and plan divergence, and map traces to business key performance indicators like average handle time or first-call resolution. Vendors that tie model behavior to agent outcomes will gain relevance.

- Systems integrators and consultancies: AgentCore lowers the scaffolding cost. The moat becomes packaged playbooks, governance frameworks, and prebuilt tool catalogs by industry. Expect customers to pay for implementation quality, not just headcount.

A 90-day adoption playbook

Two tracks follow. The first is for enterprises with governance needs. The second is for startups that want to ship quickly without painting themselves into a corner.

Enterprise track

Days 0 to 30: pick one high-friction workflow and set the guardrails

- Choose a workflow that already has clear outputs and acceptance criteria, for example support triage, invoice reconciliation, or claims intake.

- Name an accountable product owner. Define the agent’s service contract and success metrics such as resolution time and tool success rate.

- Provision a sandbox account. Stand up Runtime for a single agent, enable Observability, and export traces through OpenTelemetry to your standard monitoring system.

- Inventory tools. For each one, decide user-delegated versus bot identity, then set scopes in Identity. Wrap the tool in Gateway with a normalized contract and examples.

- Draft a privacy and audit plan. Decide retention for short-term and long-term memory, and map identities and traces to your record-keeping obligations.

Days 31 to 60: run a supervised pilot in production traffic

- Route 5 to 10 percent of traffic to the agent, with humans in the loop for approval of high-impact actions.

- Track agent trajectory quality. Measure plan steps, tool selection accuracy, and error classes. Use Observability to spot recurring failures.

- Iterate daily on prompts and tool contracts. Keep a simple change log that ties changes to metric deltas.

- Add Browser and Code Interpreter only if the pilot shows gaps that application programming interfaces cannot cover.
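A supervised pilot needs two small mechanisms: a stable way to send a fixed share of sessions to the agent, and a gate that holds high-impact actions for a human. The sketch below shows one possible approach using hash-based bucketing; the function names and the action list are hypothetical.

```python
import hashlib

# Hypothetical set of actions that always wait for human approval.
HIGH_IMPACT_ACTIONS = {"approve_refund", "close_account"}


def routes_to_agent(session_id: str, percent: int) -> bool:
    """Deterministically place a session in the agent cohort.

    Hashing the session id gives each session a stable bucket from 0 to 99,
    so the same session always lands on the same side of the split.
    """
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def needs_human_approval(action: str) -> bool:
    return action in HIGH_IMPACT_ACTIONS
```

Raising `percent` from 10 to 30 later keeps every session that was already in the agent cohort there, since buckets below 10 are also below 30.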

Days 61 to 90: scale, standardize, and hand off

- Increase traffic to 30 to 50 percent if metrics hold. Add rate limits and backoff in Gateway to protect downstream systems.

- Move identities to production scopes and run a formal access review with security. Enable scheduled token rotation.

- Publish a runbook. Include playbooks for timeouts, tool failure, and rollback. Instrument alerts on trajectory anomalies.

- Plan the second and third agents. Reuse your tool catalog, identity scopes, and memory taxonomy rather than duplicating effort.
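Rate limits and backoff are configured in Gateway rather than written by hand, but it helps to know the behavior you are asking for. The sketch below models both in plain Python under stated assumptions: `TokenBucket` and `call_with_backoff` are illustrative names, and the clock and sleep functions are injectable so the logic can be tested without waiting.

```python
import random
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_s` tokens per second."""

    def __init__(self, rate_per_s: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def call_with_backoff(fn, retries=3, base_delay=0.5, max_delay=8.0,
                      sleep=time.sleep, retry_on=(TimeoutError, ConnectionError)):
    """Retry transient failures with exponential backoff and full jitter."""
    for attempt in range(retries + 1):
        try:
            return fn()
        except retry_on:
            if attempt == retries:
                raise  # out of retries: surface the failure to the caller
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
```

Jitter spreads retries out so that many agent sessions recovering from the same outage do not hit the downstream system at the same instant.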

Startup track

Days 0 to 30: ship your first paid workflow fast

- Pick one workflow that customers will pay for, and implement it end to end with Runtime, Identity, and two tools through Gateway.

- Keep Memory minimal. Store only what you need for the next turn. Focus on reliability.

- Use Observability from day one to show customers step-by-step traces of how the agent solved their task.

Days 31 to 60: add differentiation without lock-in

- Introduce model choice behind a configuration flag. Test a reasoning model for planning and a faster model for tool calls.

- Add Browser for one high-value site if it unblocks an important use case. Keep a strong allowlist.

- Define a simple evaluation harness: ten golden tasks, tracked weekly. Measure tool success and customer time saved.

Days 61 to 90: package for scale and distribution

- Harden identities and scopes. Add rate limits and retries in Gateway.

- Publish your tool contracts and trajectory labels so customers can integrate traces into their systems.

- Prepare an AWS Marketplace listing in the new agents category. Make protocol support, deployment options, and pricing crystal clear so procurement is smooth.

Practical pitfalls and how to avoid them

- Overstuffed memory: Long conversational histories often degrade model performance. Keep short-term windows small and move durable facts to structured stores the agent can retrieve.

- Leaky identities: Credentials in prompts or environment variables are an incident waiting to happen. Use scoped identities, short-lived tokens, and automatic rotation.

- Uncurated toolboxes: If everything is a tool, your agent will thrash. Start with a small, well-documented set of high-value tools and expand deliberately.

- Observability afterthoughts: If you add tracing late, you will miss the baseline. Capture spans and labels from the first experiment.

- Model monoculture: Tying your agent to one model creates brittleness. Keep the model decision configurable so you can swap or blend as needs evolve.

The bottom line

AgentCore turns agentic computing from a prototype sport into an operational discipline. With Runtime, Memory, Identity, Gateway, Browser, Code Interpreter, and Observability, the missing production pieces are now on the table. The smartest teams will combine them with open protocols and a multi-model approach, ship one valuable workflow, and let real telemetry guide the next. This is how agent operations become standard practice rather than a special project.