Vertex AI Agent Engine adds sandbox and A2A for enterprise

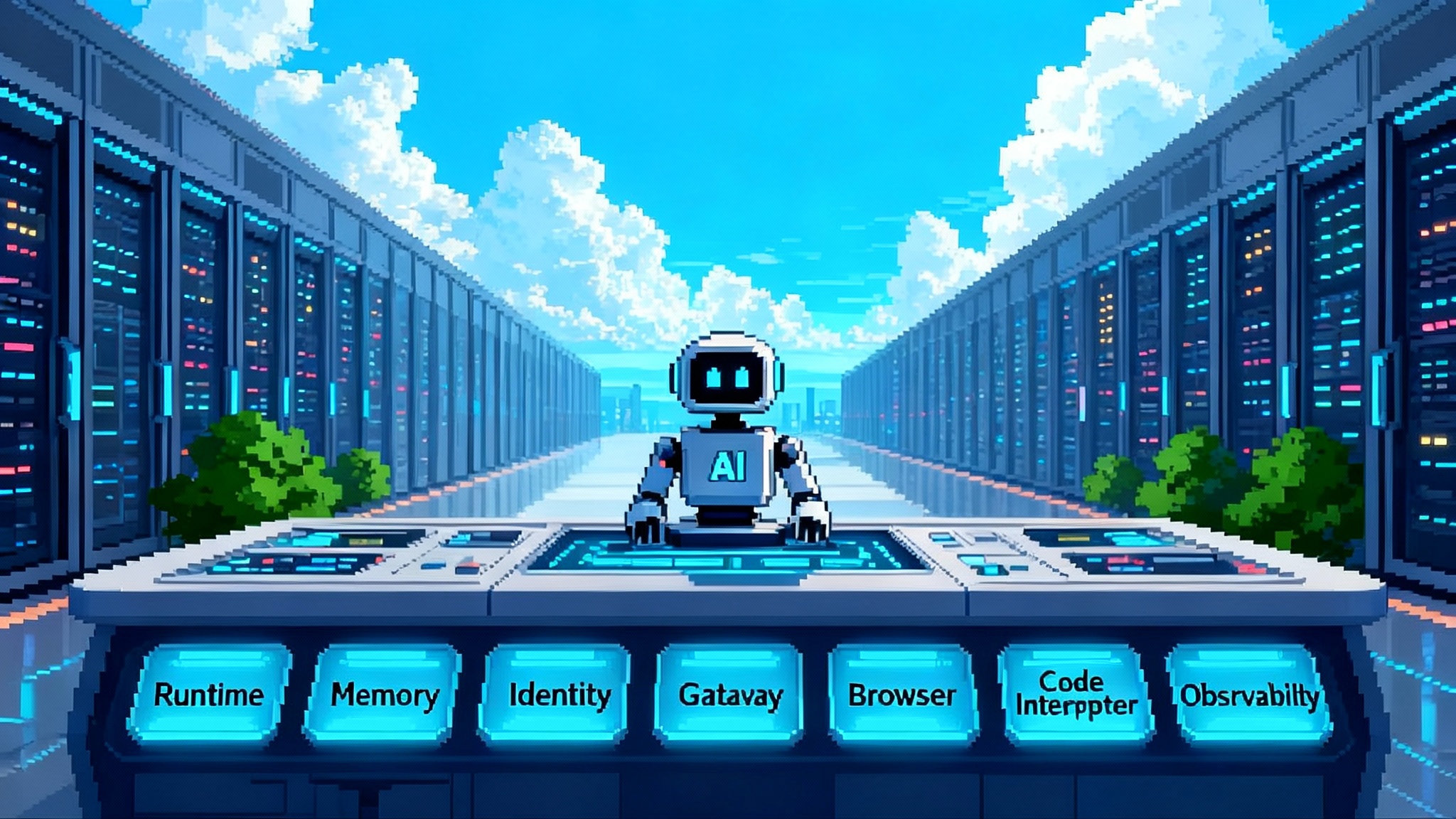

Google’s September 2025 Vertex AI Agent Engine update delivers a code execution sandbox, Agent2Agent (A2A) interoperability, bidirectional streaming, and a first-party Memory Bank UI, making governed production agents practical for the enterprise.

The breakthrough that makes agents feel production ready

In September 2025, Google introduced a set of primitives in Vertex AI Agent Engine that make enterprise agents feel less like lab projects and more like software you can operate at scale. The highlights are a secure Code Execution sandbox, support for Agent2Agent (A2A) interoperability, bidirectional streaming, and a first-party Memory Bank UI inside the console. The announcement is a short note in the Vertex AI release notes for September 2025, but it signals a big shift in how you design and govern agent systems in production.

The headline is more than a few new checkboxes. These capabilities plug four gaps that kept many teams stuck at the proof-of-concept stage. The sandbox gives you a safe place to run untrusted code. A2A provides a contract so agents across teams or vendors can collaborate. Streaming makes agent work observable while it happens. Memory Bank turns long-term recall into something you can monitor, search, and govern.

With these primitives, enterprise agents move from clever demos to operational systems that align with identity, logging, policy, and audit controls.

Why these four pieces matter together

Think of an enterprise agent program like running a railway. You need engines, carriages, signals, and a timetable. Before this release, most teams had a single locomotive pulling a few prototype cars on a short track. With the sandbox, A2A, streaming, and Memory Bank, you get a common track standard, a switching yard, signal lights, and a dispatcher’s console. You can now operate many trains, switch them safely, see what they are doing in real time, and keep a record of every stop along the way.

The broader implication is build versus buy. All-in-one agent platforms look attractive because they promise safety, orchestration, and memory. If your cloud offers those as native, composable primitives that plug into identity, billing, logging, and policy, the calculus changes, especially for regulated workloads. You can assemble a solution from first-party parts, keep data inside your perimeter, and still interoperate with partner agents.

If you are evaluating the production stack for agents, it helps to compare approaches across clouds. We have covered similar moves in other ecosystems, including how AWS AgentCore brings an ops layer and the reliability gains from Claude Sonnet 4.5 and the Agent SDK. Vertex AI’s update fits the same trend toward dependable, governable agents built on cloud primitives.

Deep dive: a safe place to run code

Many real agent jobs end with code. Crunching a spreadsheet, reshaping a dataset, scoring a model, or executing a deterministic report script are common examples. Vertex AI’s Code Execution feature adds an isolated sandbox where your agent can run generated or supplied code without touching your network directly. The Code Execution sandbox overview emphasizes isolation and control so you can unlock programmable steps while keeping guardrails intact.

Practical expectations for day one:

- Treat the sandbox like a short-lived worker, not a database. If a session keeps state between calls, use that window to chain steps, but persist important artifacts to your own storage service under your retention and classification policies.

- Keep tool surfaces small. Expose clear inputs and outputs, for example a tool that converts CSV to Parquet with a target schema, rather than a generic shell.

- Separate transformation from integration. Use the sandbox for pure computations. For operations that need network access or customer managed keys, route through a hardened microservice that enforces your VPC and encryption posture.
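The "small tool surface" advice can be made concrete with a minimal sketch of a narrow, typed transformation tool. It takes CSV text plus an explicit target schema and returns typed rows. It fails loudly on missing columns, unexpected columns, or values that do not cast. The function name and schema format are hypothetical. The Parquet write is deliberately left out because it would need a third-party library such as pyarrow, so this version uses only the standard library.

```python
import csv
import io
from typing import Callable

# Hypothetical schema format: column name -> function that casts the raw string.
Schema = dict[str, Callable[[str], object]]


def csv_to_typed_rows(csv_text: str, schema: Schema) -> list[dict[str, object]]:
    """Parse CSV text and cast each column according to an explicit schema.

    Pure computation with no network or filesystem access, so it suits a
    sandboxed step. Raises ValueError on any mismatch instead of guessing.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    header = set(reader.fieldnames or [])
    missing, extra = set(schema) - header, header - set(schema)
    if missing or extra:
        raise ValueError(f"schema mismatch: missing={sorted(missing)} extra={sorted(extra)}")

    rows = []
    for row_no, record in enumerate(reader, start=2):  # row 1 is the header
        try:
            rows.append({col: cast(record[col]) for col, cast in schema.items()})
        except (TypeError, ValueError) as exc:  # short rows yield None values
            raise ValueError(f"row {row_no}: {exc}") from exc
    return rows


if __name__ == "__main__":
    data = "sku,qty,price\nA-1,3,9.50\nB-2,1,120.00\n"
    print(csv_to_typed_rows(data, {"sku": str, "qty": int, "price": float}))
```

Because the tool accepts only a schema and text, a reviewer can see everything it can do from its signature. A generic shell tool offers no such guarantee.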

Security leaders will ask the right questions: what controls are available today, which are planned, and which must be handled outside the sandbox. Treat the sandbox as excellent for untrusted code and deterministic utility steps. For protected data or networked operations, design tools that keep sensitive work in your approved services and keep the sandbox offline by design.

The winning pattern is compute inside the sandbox, connect through your own service, and persist to your storage tier where your controls already live.
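One way to make that pattern enforceable is to declare each tool's needs up front and route on them. The sketch below assumes a hypothetical `ToolSpec` record, which is not a Vertex AI type. Anything that needs the network, customer-managed keys, or durable writes goes to your hardened service. Only pure computations are eligible for the sandbox.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    SANDBOX = "sandbox"            # isolated, offline compute
    HARDENED_SERVICE = "service"   # your VPC-bound microservice


@dataclass(frozen=True)
class ToolSpec:
    # Hypothetical tool metadata, declared by the tool author and reviewed.
    name: str
    needs_network: bool = False
    needs_cmek: bool = False        # customer-managed encryption keys
    persists_output: bool = False   # writes to your storage tier


def route(tool: ToolSpec) -> Target:
    """Send pure computations to the sandbox; everything else to your service."""
    if tool.needs_network or tool.needs_cmek or tool.persists_output:
        return Target.HARDENED_SERVICE
    return Target.SANDBOX


if __name__ == "__main__":
    for t in [
        ToolSpec("csv_to_typed_rows"),
        ToolSpec("fetch_crm_record", needs_network=True),
        ToolSpec("write_report", needs_cmek=True, persists_output=True),
    ]:
        print(t.name, "->", route(t).value)
```

Routing on declared needs keeps the decision visible in code review. If a tool later gains a network dependency, changing one flag moves it out of the sandbox.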

Deep dive: interoperability as a contract

Agents become truly useful when they can collaborate across domains. Procurement needs a forecast from analytics. Analytics needs a pricing call from a partner. Without a standard, everyone reverse engineers tool schemas and authentication flows and the system becomes brittle.

Agent2Agent, abbreviated A2A, is an open protocol specification that defines how agents describe themselves, advertise capabilities, authenticate, exchange tasks, and return results. In practice, your procurement bot can reliably ask the analytics bot for a forecast, and the analytics bot can call a partner’s pricing bot, all without bespoke glue for each connection.
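The sketch below shows roughly what "exchange tasks and return results" looks like on the wire. It builds a JSON-RPC 2.0 request that sends a message to a remote agent and reads the task state from the reply. The method name `message/send`, the `kind`-tagged parts, and the task `status.state` field reflect our reading of the public A2A specification as of mid-2025. Treat them as assumptions and check the spec version your partners implement. Authentication and transport are omitted.

```python
import json
import uuid
from typing import Optional


def build_send_message(text: str, data: Optional[dict] = None, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 request asking a remote agent to handle one message.

    Method and field names reflect our reading of the A2A spec (v0.2 and later);
    verify them against the version your peers implement.
    """
    parts = [{"kind": "text", "text": text}]
    if data is not None:
        parts.append({"kind": "data", "data": data})  # structured input, e.g. a SKU
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",
        "params": {
            "message": {
                "role": "user",
                "parts": parts,
                "messageId": str(uuid.uuid4()),  # unique per message
                "kind": "message",
            }
        },
    }


def task_state(response: dict) -> str:
    """Return the task state from a reply, or raise on a JSON-RPC error object."""
    if "error" in response:
        err = response["error"]
        raise RuntimeError(f"A2A error {err.get('code')}: {err.get('message')}")
    result = response["result"]
    if result.get("kind") == "message":
        return "message"  # the agent answered directly without creating a task
    return result["status"]["state"]  # e.g. "working", "completed", "failed"


if __name__ == "__main__":
    request = build_send_message("Forecast Q3 demand", {"sku": "A-1", "quarter": "Q3"})
    print(json.dumps(request, indent=2))
```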

What to design for right now:

- Publish a signed agent card for discovery that names your capabilities, input and output schemas, and your security posture.

- Keep your task model simple. Version skills and do not overload a single agent with unrelated capabilities. A smaller set of focused skills improves reliability and auditability.

- Expect multiple transports. gRPC and HTTPS both matter in enterprise environments. Build adapters and test both paths.
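The agent card advice can be sketched as follows. The card fields loosely follow the public A2A `AgentCard` schema: name, url, version, capabilities, skills, and transport declarations. Every value here is hypothetical, including the `forecast.v2` skill ID convention and the endpoints. As we understand it, recent revisions of the A2A specification add JWS-based card signatures. The HMAC below is only a stand-in. It shows why canonical serialization matters: signer and verifier must hash identical bytes regardless of key order.

```python
import hashlib
import hmac
import json


def make_agent_card() -> dict:
    # Field names loosely follow the A2A AgentCard schema; all values are illustrative.
    return {
        "name": "analytics-forecaster",
        "description": "Demand forecasts for internal procurement agents.",
        "url": "https://agents.example.internal/forecaster",
        "version": "2.1.0",
        "preferredTransport": "JSONRPC",
        "additionalInterfaces": [  # illustrative endpoints for two transports
            {"url": "https://agents.example.internal/forecaster", "transport": "JSONRPC"},
            {"url": "agents.example.internal:443", "transport": "GRPC"},
        ],
        "capabilities": {"streaming": True},
        "defaultInputModes": ["application/json"],
        "defaultOutputModes": ["application/json"],
        "skills": [
            {
                "id": "forecast.v2",  # version in the ID so v1 callers keep working
                "name": "Demand forecast",
                "description": "Weekly demand forecast for one SKU.",
                "tags": ["forecast", "procurement"],
            }
        ],
    }


def canonical_bytes(card: dict) -> bytes:
    # Sorted keys and fixed separators give a stable byte form to sign.
    return json.dumps(card, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(card: dict, key: bytes) -> str:
    # HMAC-SHA256 stands in for a real asymmetric (JWS) signature.
    return hmac.new(key, canonical_bytes(card), hashlib.sha256).hexdigest()


def verify(card: dict, signature: str, key: bytes) -> bool:
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sign(card, key), signature)
```

In production, a partner should verify with your public key rather than a shared secret. The canonicalization step carries over unchanged.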

Interoperability only matters if others adopt it. The market signal in 2025 is clear. Major vendors and consultancies have begun building fleets of production agents that coordinate work over stable contracts. Whether you adopt A2A verbatim or a compatible internal profile, the pattern to follow is simple. Separate capability from container, ship domain agents on their own release cycles, and communicate over a shared protocol.

Deep dive: streaming that you can wire into your UI

Agents that hide their work erode trust. Streaming flips the experience. Your application sends a query and receives a live stream of actions, tool calls, intermediate results, and partial outputs. Product teams use this to show a work-in-progress timeline, to let a human approve a tool call, to inject an instruction mid-flight, or to cancel a task early.
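Here is a sketch of one event in such a stream, framed for delivery as a server-sent event. It assumes a hypothetical event schema of our own, not Agent Engine's wire format. The framing follows the standard `text/event-stream` layout: `id:`, `event:`, and `data:` lines ended by a blank line. Serializing the payload as compact JSON keeps it on a single `data:` line, because JSON escapes any newlines inside strings.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class AgentEvent:
    # Hypothetical event schema for an operator console, not Agent Engine's format.
    kind: str                      # e.g. "tool_call", "tool_result", "partial_output"
    step: int
    tool: Optional[str] = None
    params: Optional[dict] = None
    duration_ms: Optional[int] = None
    retries: int = 0
    error_type: Optional[str] = None


def to_sse(event: AgentEvent, event_id: int) -> str:
    """Frame one event in the text/event-stream format."""
    # Compact JSON escapes embedded newlines, so the payload fits one data: line.
    payload = json.dumps(asdict(event), separators=(",", ":"))
    # Browsers echo the id in Last-Event-ID on reconnect, so the server can resume.
    return f"id: {event_id}\nevent: {event.kind}\ndata: {payload}\n\n"


if __name__ == "__main__":
    ev = AgentEvent(kind="tool_call", step=3, tool="csv_to_typed_rows",
                    params={"target": "orders"}, duration_ms=118)
    print(to_sse(ev, event_id=42), end="")
```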

Key design tips:

- Offer two modes. Use bidirectional streaming for interactive tasks that benefit from mid-flight instructions. Offer one-way streaming for monitoring and noncritical views.

- Model quotas and backpressure. Estimate peak concurrent sessions for operator consoles and investigative tasks. Build fallbacks that downgrade to server-sent events (SSE) or polling when thresholds are hit, and present a graceful UI state change.

- Surface the right events. Show tool calls, parameters, and outputs clearly. Add per step duration, retries, and error types so operators can spot systemic issues fast.
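The fallback tip can be prototyped as a small admission controller. The caps are hypothetical numbers you would derive from your own quota and concurrency estimates. Interactive sessions get bidirectional streams while capacity lasts, and everything else starts at SSE. When both pools are full, the client is told to poll. Non-interactive views never take a bidirectional slot, which keeps those slots for tasks that need mid-flight steering.

```python
from enum import Enum
from threading import Lock


class Mode(Enum):
    BIDI = "bidirectional"
    SSE = "server-sent events"
    POLL = "polling"


class StreamAdmission:
    """Grant the richest streaming mode that still fits under hypothetical caps."""

    def __init__(self, max_bidi: int, max_sse: int) -> None:
        self.max_bidi = max_bidi
        self.max_sse = max_sse
        self.active = {Mode.BIDI: 0, Mode.SSE: 0}
        self._lock = Lock()  # admit/release may be called from many request threads

    def admit(self, interactive: bool) -> Mode:
        with self._lock:
            if interactive and self.active[Mode.BIDI] < self.max_bidi:
                self.active[Mode.BIDI] += 1
                return Mode.BIDI
            if self.active[Mode.SSE] < self.max_sse:
                self.active[Mode.SSE] += 1
                return Mode.SSE
            return Mode.POLL  # polling holds no long-lived connection

    def release(self, mode: Mode) -> None:
        with self._lock:
            if mode in self.active and self.active[mode] > 0:
                self.active[mode] -= 1
```

The returned mode is what the UI reacts to. A downgrade from `BIDI` to `SSE` is the moment to show the "view only, steering unavailable" state change.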

With streaming wired into your console, your analysts no longer stare at a spinner. They see steps, approve actions, course correct in context, and build trust.

Deep dive: memory you can actually govern

Most agent memory implementations feel like a notebook in a drawer. They work until you need to search across users, purge by scope, or explain why a recommendation happened. Memory Bank changes that by turning long-term recall into a first-party managed service with its own view in the console.

The practical benefits are immediate:

- You can fetch, list, and retrieve memories by scope such as user, session, tenant, or project.

- You can seed memories directly to bootstrap an agent for a new team or product line.

- When you develop with the Agent Development Kit, the default memory service integrates with Memory Bank, which keeps dev and prod in sync and gives product and compliance teams a shared place to review.

Most importantly, the console adds a Memory Bank tab so non engineers can see, filter, and manage memories. That is the bridge from clever demo to audit ready operations. It makes it easier to align retention settings with policy and to operationalize right-to-be-forgotten workflows.

Governance is not just deletion. Standardize scopes so memories map cleanly to identities and tenants. Adopt a three-key convention such as user, tenant, and data domain. Create a short rubric for what qualifies as a durable memory versus an ephemeral step, and enforce it in code review.
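You can try out the scope convention and durability rubric before touching a real backend. This is an in-memory stand-in, not the Memory Bank API. The `Scope` keys mirror the user, tenant, and data domain convention above, and the set of durable kinds is a hypothetical rubric. Consistent scopes turn "purge this user" or "purge this tenant" into a single filtered delete, which is exactly what a deletion tabletop exercise needs to prove.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Scope:
    # Hypothetical three-key convention: tenant, user, data domain.
    tenant: str
    user: str
    domain: str


# Hypothetical rubric: only these kinds of facts are durable memories.
DURABLE_KINDS = {"preference", "profile_fact", "standing_instruction"}


@dataclass
class MemoryStore:
    """In-memory stand-in for reasoning about scopes; not the Memory Bank API."""
    items: list[tuple[Scope, str, str]] = field(default_factory=list)

    def write(self, scope: Scope, kind: str, fact: str) -> bool:
        if kind not in DURABLE_KINDS:
            return False  # ephemeral steps never reach long-term memory
        normalized = " ".join(fact.split())  # collapse whitespace before storing
        self.items.append((scope, kind, normalized))
        return True

    @staticmethod
    def _matches(scope: Scope, tenant: str, user: Optional[str]) -> bool:
        return scope.tenant == tenant and (user is None or scope.user == user)

    def find(self, tenant: str, user: Optional[str] = None) -> list[tuple[Scope, str, str]]:
        return [m for m in self.items if self._matches(m[0], tenant, user)]

    def purge(self, tenant: str, user: Optional[str] = None) -> int:
        """Delete all memories for a tenant, or for one user in it; return the count."""
        before = len(self.items)
        self.items = [m for m in self.items if not self._matches(m[0], tenant, user)]
        return before - len(self.items)
```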

Architecture blueprint you can build this quarter

A credible reference architecture for many enterprises looks like this:

- Orchestrator agent in Agent Engine using the Agent Development Kit. It handles user sessions, calls tools, and writes key facts to Memory Bank under a consistent scope schema.

- Code Execution sandbox as a worker for deterministic steps. The orchestrator sends code and files to the sandbox for data transformation, scoring, or report generation. Outputs are saved to storage with your encryption and lifecycle policies. Keep the sandbox offline by default and expose networked operations through a hardened service.

- A2A connectors for partner and internal domain agents. The orchestrator calls your pricing agent or policy agent by sending tasks over an A2A contract. Each domain agent publishes a signed agent card and runs on its own deployment schedule. If you need to swap a vendor or move a service to another cloud, the protocol boundary stays the same.

- Streaming interfaces for operators. Your web app or console subscribes to the orchestrator’s stream so analysts can see tool calls, approve actions, and correct inputs. When quotas are tight, fall back to one way streaming for noncritical views.

- Memory in the loop, not as an afterthought. The orchestrator writes normalized facts and retrieves scoped memories at the start of each task. The product owner monitors and prunes memories in the console tab. Data governance owns retention schedules and deletion workflows.
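The control flow of this blueprint can be sketched as a single handler with every collaborator injected as a plain callable, so the wiring is testable with fakes. The `Orchestrator` class, the `pricing.v1` skill ID, the scope key format, and the placeholder code string are all hypothetical. Real implementations would wrap the ADK, Memory Bank, the Code Execution sandbox, an A2A client, and your streaming channel.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Orchestrator:
    # Injected collaborators (all hypothetical signatures). Real versions would wrap
    # Memory Bank, the Code Execution sandbox, an A2A client, and a stream.
    recall: Callable[[str], list[str]]           # scope key -> memories
    remember: Callable[[str, str], None]         # scope key, fact -> None
    run_in_sandbox: Callable[[str, dict], dict]  # code, inputs -> outputs
    call_agent: Callable[[str, dict], dict]      # skill ID, payload -> result
    emit: Callable[[dict], Any]                  # operator event stream
    step: int = field(default=0, init=False)

    def _event(self, kind: str, **detail: Any) -> None:
        self.step += 1
        self.emit({"step": self.step, "kind": kind, **detail})

    def handle(self, scope_key: str, request: dict) -> dict:
        # 1. Retrieve scoped memories at the start of the task.
        memories = self.recall(scope_key)
        self._event("recall", count=len(memories))

        # 2. Deterministic computation runs in the sandbox (placeholder code).
        code = "result = sum(qty * price for qty, price in rows)"
        outputs = self.run_in_sandbox(code, {"rows": request["rows"]})
        self._event("sandbox", outputs=sorted(outputs))

        # 3. Ask a domain agent over A2A for data the orchestrator does not own.
        quote = self.call_agent("pricing.v1", {"sku": request["sku"]})
        self._event("a2a_call", skill="pricing.v1")

        # 4. Write back one normalized fact under the same scope.
        self.remember(scope_key, f"last quoted unit price for {request['sku']}: {quote['unit_price']}")
        self._event("remember")
        return {"spend": outputs["result"], "unit_price": quote["unit_price"]}
```

In a test, each collaborator can be a lambda, for example `recall=lambda k: []` and `emit=events.append`. That makes it easy to assert the order of recall, sandbox step, A2A call, and memory write.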

This blueprint pairs well with an ecosystem approach. Payments, authentication, and post-action verification continue to mature across vendors. For example, if your agents need to complete transactions, our coverage of how AP2 makes agent checkout real can help you plan for secure agent-initiated payments.

Practical adoption plan

Week 1: Confirm security posture. Document which data classes can pass through the sandbox during preview. Stand up a Cloud Run or Kubernetes-based microservice for any operation that needs network access or customer-managed keys. Design a simple tool interface that calls that service with explicit inputs and outputs.

Week 2: Stand up the orchestrator and Memory Bank. Choose a scope schema, for example tenant, user, and domain. Implement one read path and one write path. Add a manual redaction step in your admin console for problematic facts while you validate patterns.

Week 3: Add streaming to your UI. Start with a simple events panel that shows tool calls, intermediate steps, and final output. Add pause and resume for human-in-the-loop controls. Instrument with metrics like steps per task, error rate per tool, and average approval latency.
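The Week 3 metrics can be computed from the event stream with a few lines of aggregation. This sketch assumes hypothetical event dicts. Each carries a task ID, an event kind, the tool name, and an optional error. Approval events also carry request and approval timestamps in seconds.

```python
from collections import defaultdict
from statistics import mean


def summarize(events: list[dict]) -> dict:
    """Compute steps per task, error rate per tool, and mean approval latency."""
    steps: dict[str, int] = defaultdict(int)
    calls: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    latencies: list[float] = []
    for e in events:
        steps[e["task"]] += 1
        if e["kind"] == "tool_call":
            calls[e["tool"]] += 1
            if e.get("error"):
                errors[e["tool"]] += 1
        elif e["kind"] == "approval":
            latencies.append(e["approved_at"] - e["requested_at"])
    return {
        "steps_per_task": mean(steps.values()) if steps else 0.0,
        "error_rate_per_tool": {tool: errors[tool] / n for tool, n in calls.items()},
        "avg_approval_latency_s": mean(latencies) if latencies else None,
    }
```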

Week 4: Layer in A2A. Pick one internal capability that lives in another team, like pricing, and wrap it behind an A2A contract with a clear agent card. Wire the orchestrator to call it and handle failures gracefully with retries, circuit breaking, and schema validation.
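The Week 4 failure handling can be sketched as three parts: a small circuit breaker, bounded retries with exponential backoff, and a schema check on every reply. Validation runs inside the breaker, so a malformed response counts as a failure just like a timeout. Class and function names are hypothetical. The clock and sleep functions are injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional


class CircuitOpen(Exception):
    """Raised instead of calling a remote agent while the circuit is open."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; allows a trial call after `cooldown` seconds."""

    def __init__(self, threshold: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], dict]) -> dict:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                raise CircuitOpen("remote agent temporarily disabled")
            self.opened_at = None  # half-open: one more failure re-opens the circuit
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def validate(reply: dict, required: dict[str, type]) -> dict:
    """Reject replies whose fields are missing or of the wrong type."""
    for key, expected in required.items():
        if not isinstance(reply.get(key), expected):
            raise ValueError(f"bad or missing field {key!r} in reply")
    return reply


def call_with_retries(breaker: CircuitBreaker, fn: Callable[[], dict],
                      required: dict[str, type], attempts: int = 3,
                      base_delay: float = 0.2,
                      sleep: Callable[[float], None] = time.sleep) -> dict:
    last_exc: Exception = RuntimeError("no attempts made")
    for attempt in range(attempts):
        try:
            # Validation runs inside the breaker, so malformed replies count as failures.
            return breaker.call(lambda: validate(fn(), required))
        except CircuitOpen:
            raise  # fail fast rather than hammer an open circuit
        except Exception as exc:
            last_exc = exc
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # exponential backoff
    raise last_exc
```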

Week 5: Run a tabletop exercise on deletion and retention. Use the console to locate memories by scope. Prove you can purge a user or tenant on demand, then demonstrate the effect by running the same task again without that context. Capture the before and after behavior in a short internal postmortem.

Governance guardrails and current limits

Launch stage. Treat preview features like any pre-general availability (pre-GA) service. Use feature flags and escape hatches. Keep a rollback plan and monitor change logs.

Region and network. If Code Execution is limited to specific regions and denies outbound network access, plan around that. Make outbound calls from a hardened tool service, not from inside the sandbox. Keep secrets out of generated code and restrict tool parameters to typed, validated inputs.

Interop boundaries. A2A style contracts are young even as adoption grows. Keep agent cards succinct and stable. Version your skills and deprecate deliberately. Avoid accumulating unrelated capabilities in one agent.

Streaming quotas. Model the concurrent connections you need for operator consoles and long running investigative tasks. Build a fallback. You can multiplex streams for noncritical views and reserve bidirectional sessions for tasks that truly require mid flight steering.

Cost awareness. Streaming and sandbox execution create new cost surfaces. Tag each tool with cost attribution. Set budgets and alerts. Publish a weekly cost and reliability digest to stakeholders.
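Here is a minimal sketch of per-tool cost attribution with budget alerts. It assumes you can obtain a cost figure per tool call, for example from billing exports or your own estimates. The budgets, the 80 percent warning ratio, and the class name are hypothetical. Alerts fire only when a running total crosses a threshold, so a noisy tool does not page anyone on every call. `digest()` returns the ranking a weekly report could start from.

```python
from collections import defaultdict


class CostLedger:
    """Attribute spend to tool tags and flag budgets as totals cross thresholds."""

    def __init__(self, budgets: dict[str, float], alert_ratio: float = 0.8) -> None:
        self.budgets = budgets                # hypothetical per-tool budgets
        self.alert_ratio = alert_ratio        # warn at this fraction of budget
        self.spend: dict[str, float] = defaultdict(float)

    def record(self, tool: str, cost: float) -> list[str]:
        """Add a cost and return any alerts the new total triggers."""
        before = self.spend[tool]
        self.spend[tool] += cost
        budget = self.budgets.get(tool)
        if budget is None:
            return [f"{tool}: no budget configured"]
        alerts = []
        for label, limit in (("warning", budget * self.alert_ratio), ("over budget", budget)):
            if before < limit <= self.spend[tool]:  # fire only on the crossing
                alerts.append(f"{tool}: {label} ({self.spend[tool]:.2f} of {budget:.2f})")
        return alerts

    def digest(self) -> list[tuple[str, float]]:
        # Highest spend first, for the weekly cost and reliability digest.
        return sorted(self.spend.items(), key=lambda kv: kv[1], reverse=True)
```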

People and process. Add a human in the loop for high impact actions. Create a lightweight playbook for production access that covers who can approve a tool call, how to override, and how to capture context for audit.
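The approval playbook can be encoded so it is enforced rather than remembered. In this sketch, the mapping from high-impact tools to approver roles, the override role, and the user-to-role table are all hypothetical. An override is allowed only with a non-empty reason. Every decision, approved or rejected, is appended to an audit trail with a UTC timestamp.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical policy: high-impact tools and the role allowed to approve each.
HIGH_IMPACT = {"issue_refund": "finance-approver", "delete_tenant_data": "data-governance"}
OVERRIDE_ROLE = "incident-commander"  # may decide on anything, but must give a reason


@dataclass
class Decision:
    tool: str
    params: dict
    approver: str
    role: str
    approved: bool
    reason: str
    at: str  # ISO 8601 UTC timestamp


class ApprovalGate:
    def __init__(self, roles: dict[str, set[str]]) -> None:
        self.roles = roles                # user -> granted roles
        self.audit: list[Decision] = []   # append-only decision log

    @staticmethod
    def needs_approval(tool: str) -> bool:
        return tool in HIGH_IMPACT

    def decide(self, tool: str, params: dict, approver: str,
               approved: bool, reason: str) -> bool:
        """Record an approval or rejection; raise if the approver lacks authority."""
        granted = self.roles.get(approver, set())
        required = HIGH_IMPACT[tool]
        if required in granted:
            role = required
        elif OVERRIDE_ROLE in granted and reason.strip():
            role = OVERRIDE_ROLE  # documented override with a mandatory reason
        else:
            raise PermissionError(f"{approver} may not decide on {tool}")
        self.audit.append(Decision(tool, dict(params), approver, role, approved, reason,
                                   datetime.now(timezone.utc).isoformat()))
        return approved
```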

Build versus buy for regulated environments

If you are in financial services, healthcare, public sector, or a security sensitive enterprise, the question is simple. Does this push you toward assembling on cloud primitives rather than buying a monolithic agent platform? In many cases the answer is yes, with a few caveats.

- Data control inside your perimeter. Using first party services keeps identity, access, logging, and billing consistent with the rest of your stack. You can route all traffic through your ingress controls and enforce policy with the same tools your security team already trusts.

- Interoperability without vendor sprawl. A2A contracts let you invite partner or vendor agents to cooperate without handing them a direct line into your runtime. You control which agent cards you trust and under what scopes.

- Operational transparency. Streaming and Memory Bank reduce black boxes. When auditors ask how a claims decision was reached, you can replay actions and retrieve the memories that influenced the plan.

The caveats are practical, not theoretical. Preview status and the absence of certain controls mean you should not treat the sandbox as a place to handle highly regulated data. Keep sensitive operations on your controlled services. Use the sandbox for deterministic transformations of already permitted data. Design tools with explicit contracts that never fetch secrets or external data from inside the sandbox.

For teams comparing ecosystems, it is helpful to see how patterns rhyme across vendors. We have seen moves to formalize deployment and operations in other stacks, for instance in our coverage of how AWS AgentCore brings an ops layer. These trends point to a common destination where agent platforms look more like reliable software services than black box apps.

What good looks like in production

- Clear capability boundaries. Each agent publishes a small, stable set of skills with versioned schemas.

- Tooling as contracts. Tools accept typed inputs and produce typed outputs. No raw shell, no untethered network calls.

- Observability. Every tool call emits structured events with timing, inputs, outputs, and error surfaces. Streaming is integrated into your operator console.

- Memory hygiene. Facts are normalized before writing to Memory Bank. Scopes are consistent. Deletion and retention are tested monthly.

- Safety by default. The sandbox is offline. Networked operations run in services that enforce VPC, key management, and policy checks.

- Cost and performance runbooks. Teams know how to triage slowdowns, quota hits, and cost spikes in minutes, not days.

The bottom line

Many enterprise agent programs stalled because four core pieces were missing or scattered across products. Safe code execution, interoperable collaboration, transparent operation, and governable memory are now available in one place and can be wired into your cloud control plane. That makes a build-on-cloud approach credible again, even for regulated workloads.

Start small. Pick a workflow that needs a little code, a little help from a partner agent, and a clear audit trail. Ship it with these primitives, instrument it like any service, and you will feel the difference between a clever demo and an operational system you can scale.