Databricks + OpenAI Make Agent Bricks a Data-Native Factory

Databricks and OpenAI just put frontier models inside the data plane. Here is why Agent Bricks now looks like a data-native factory, how Mosaic evals and Tecton features change the game, and what CIOs should do first.

The news and why it matters now

On September 25, 2025, Databricks and OpenAI announced a multi-year, $100 million partnership to make OpenAI models, including the next generation of GPT, available natively inside the Databricks Data Intelligence Platform and its Agent Bricks product. The strategic intent is direct and consequential: run the strongest models where enterprise data already lives, under the governance and observability that regulated industries demand. Databricks detailed the agreement in its newsroom post "Databricks and OpenAI launch groundbreaking partnership."

The timing fits the market mood. Buyers have moved past pilot fatigue and want production agents that pass audits, respect data boundaries, and hit service levels. By bringing OpenAI models into its platform, Databricks can combine three levers that are often scattered across vendors: top-tier model performance, governed access to proprietary data, and built-in evaluation and tracing that withstand scrutiny.

Agents are moving into the data plane

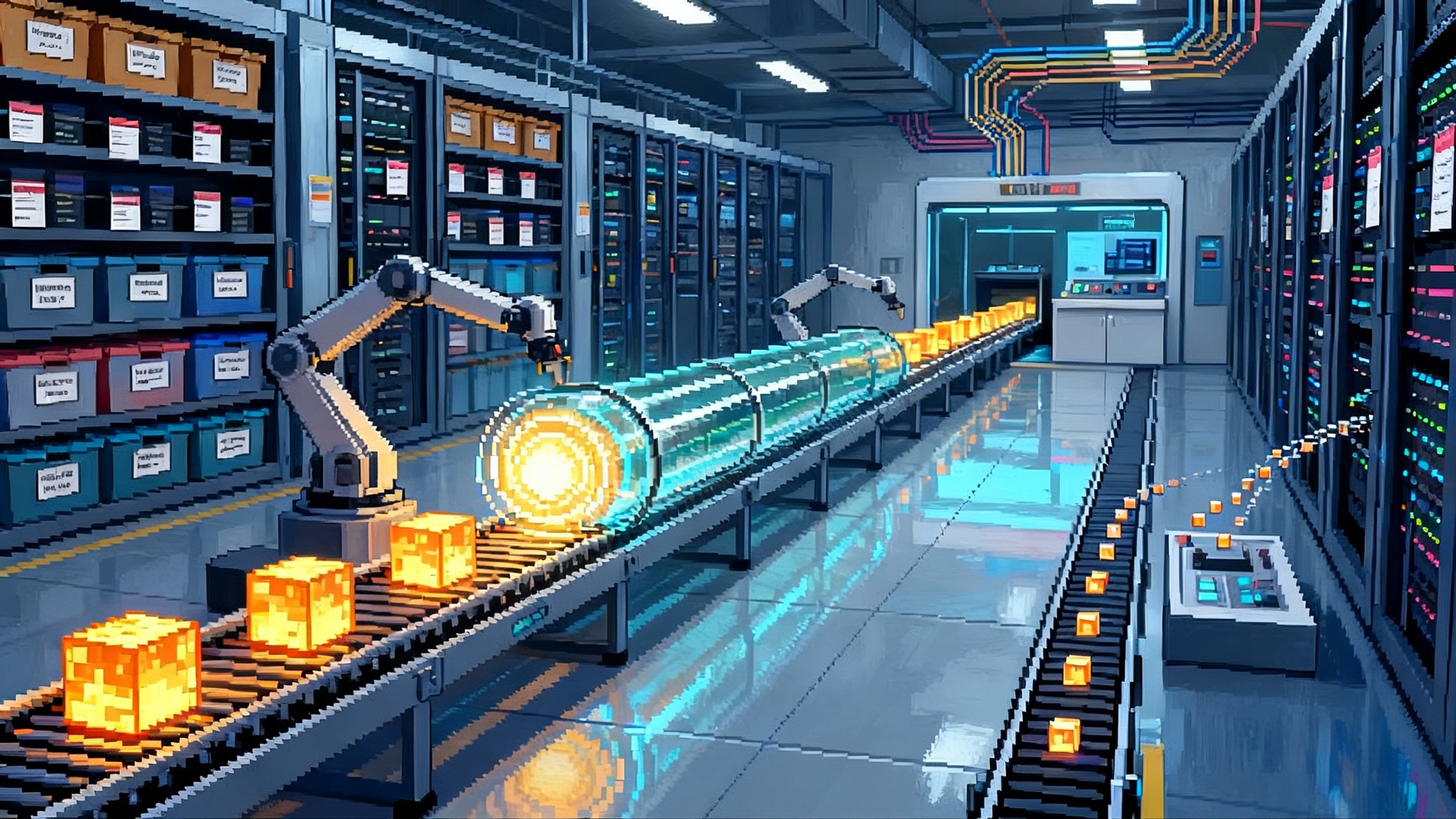

Over the last year, many teams built agents inside application stacks far from the data warehouse. That distance introduced friction. Imagine running a factory across town from the warehouse. Every part must be shipped over, inspected twice, then shipped back. Latency grows, costs creep, and governance becomes a tapestry of exceptions.

Moving agents into the data plane flips that geometry. Instead of exporting data to the model, you project the model into the governed data environment. Databricks already centralizes tables, files, features, permissions, and lineage. Adding OpenAI models directly inside that boundary turns the agent workbench into a neighbor of the warehouse shelves.

The practical effects are straightforward:

- Less copying. Retrieval happens against governed sources that already enforce row, column, and asset-level controls.

- Lower latency. Features and context assemble near the compute that will consume them.

- Cleaner compliance. Auditors examine one platform’s logs, catalogs, and approvals rather than stitching together reports from multiple vendors.

If you follow our coverage of agents becoming operational software, this shift rhymes with how we described the jump from chat to action in "ChatGPT Agent goes live at work." Concentrating data, policy, and execution in one place is how teams turn demos into dependable systems.

What this unlocks today

Here are capabilities that become immediately practical for many enterprises.

1) Trusted retrieval on governed data

- Unity Catalog defines who can see which datasets and columns. With OpenAI models running natively, retrieval pipelines can inherit those exact policies. No parallel policy engine. No brittle glue code to re-express permissions.

- Retrieval becomes a query, not an export. Teams can apply standard query patterns, then ground the model with the resulting snippets, including structured Delta tables and unstructured document sources.

- Lineage and observability are integral. When an agent answers a question using three tables and a feature set, you can trace lineage to the original system of record.
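To make "retrieval becomes a query" concrete, here is a minimal Python sketch built on a toy in-memory policy model. `TablePolicy`, `Snippet`, and `governed_retrieve` are hypothetical names, not Unity Catalog or Agent Bricks APIs. It shows column and row grants being applied before any snippet reaches the model, with each snippet keeping the table it came from for lineage.

```python
from dataclasses import dataclass, field
from typing import Callable

Row = dict[str, object]


@dataclass
class TablePolicy:
    # Hypothetical stand-in for catalog grants; NOT the Unity Catalog API.
    columns_by_role: dict[str, frozenset[str]]  # readable columns per role
    row_filter_by_role: dict[str, Callable[[Row], bool]] = field(default_factory=dict)


@dataclass
class Snippet:
    source_table: str  # kept so every grounding snippet traces back to its table
    content: Row


def governed_retrieve(role, tables, policies, predicate):
    """Treat retrieval as a query that only sees rows and columns the role is granted."""
    snippets = []
    for name, rows in tables.items():
        policy = policies.get(name)
        if policy is None or role not in policy.columns_by_role:
            continue  # no grant means the table is invisible to this role
        visible = policy.columns_by_role[role]
        row_ok = policy.row_filter_by_role.get(role, lambda row: True)
        for row in rows:
            if not row_ok(row):
                continue  # row-level policy is enforced first
            projected = {k: v for k, v in row.items() if k in visible}
            if predicate(projected):  # caller's filter only sees permitted columns
                snippets.append(Snippet(source_table=name, content=projected))
    return snippets


# Illustrative data: one EU claim and one US claim, each with a sensitive column.
tables = {
    "claims": [
        {"claim_id": 1, "region": "EU", "amount": 1200, "ssn": "123-45-6789"},
        {"claim_id": 2, "region": "US", "amount": 800, "ssn": "987-65-4321"},
    ]
}
policies = {
    "claims": TablePolicy(
        columns_by_role={"eu_analyst": frozenset({"claim_id", "region", "amount"})},
        row_filter_by_role={"eu_analyst": lambda row: row["region"] == "EU"},
    )
}
hits = governed_retrieve("eu_analyst", tables, policies, lambda r: r.get("amount", 0) > 100)
```

Because the caller's predicate runs on the already-projected row, a retrieval filter cannot quietly read a column the role is not granted.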

2) Industrial-grade extraction

- Agent Bricks includes prebuilt bricks for information extraction that turn contracts, clinical notes, or invoices into consistent fields. Think of it as a specialized assembly line that stamps reliable structure from inconsistent text.

- Because the data never leaves the governed estate, privacy filters, data masking, and fine-grained access policies apply without inventing a new control plane.
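One way to picture "consistent fields" is a validation step between the model's raw output and downstream tables. The sketch below assumes the extraction step returns JSON and uses a hypothetical `INVOICE_SCHEMA` and `validate_extraction`; it is not the Agent Bricks extraction interface. Records that fail coercion come back with a list of errors instead of being written.

```python
import json
from datetime import date
from decimal import Decimal, InvalidOperation

# Hypothetical target schema for an invoice extractor: field name -> parser.
INVOICE_SCHEMA = {
    "invoice_number": str,
    "invoice_date": date.fromisoformat,  # expects YYYY-MM-DD
    "total": Decimal,                    # exact decimal, no float rounding
    "currency": str,
}


def validate_extraction(raw_model_output, schema=INVOICE_SCHEMA):
    """Coerce a model's JSON output into typed fields; return (record or None, errors)."""
    try:
        payload = json.loads(raw_model_output)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return None, ["top-level JSON value must be an object"]

    record, errors = {}, []
    for name, parse in schema.items():
        value = payload.get(name)
        if value in (None, ""):
            errors.append(f"missing field: {name}")
            continue
        try:
            record[name] = parse(str(value))
        except (ValueError, InvalidOperation):
            errors.append(f"bad value for {name}: {value!r}")
    return (None if errors else record), errors


ok, ok_errors = validate_extraction(
    '{"invoice_number": "INV-7", "invoice_date": "2025-09-30",'
    ' "total": "1200.50", "currency": "USD"}'
)
bad, bad_errors = validate_extraction(
    '{"invoice_number": "INV-8", "invoice_date": "30/09/2025", "total": "n/a"}'
)
```

Collecting every error rather than stopping at the first one gives reviewers, and eval dashboards, a complete picture of why a document failed.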

3) Orchestration with built-in evaluation

- Mosaic AI techniques inside Agent Bricks generate synthetic data and task-specific benchmarks. Teams get a feedback loop before touching real customer data.

- Combined with MLflow tracing, you can compare model variants on task metrics, cost, and latency, then lock in a configuration that meets a business threshold, not just a leaderboard score.
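The selection rule in the last bullet (meet a business threshold, then optimize cost) fits in a few lines. This is a sketch with made-up numbers; `VariantResult` and `pick_config` are hypothetical, and in practice the scores, costs, and latencies would come from your eval runs and traces rather than literals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantResult:
    name: str             # hypothetical label for a model + prompt + retrieval config
    task_score: float     # e.g. F1 on the eval set, 0..1
    cost_per_task: float  # dollars per task
    p95_latency_ms: float


def pick_config(results, min_score, max_p95_ms):
    """Return the cheapest variant that clears both business thresholds, or None."""
    eligible = [
        r for r in results
        if r.task_score >= min_score and r.p95_latency_ms <= max_p95_ms
    ]
    # Ties on cost are broken in favor of the higher score.
    return min(eligible, key=lambda r: (r.cost_per_task, -r.task_score), default=None)


# Made-up eval results for three candidate configurations.
results = [
    VariantResult("frontier-model", 0.94, 0.031, 2400),
    VariantResult("tuned-open-weights", 0.91, 0.006, 900),
    VariantResult("small-model", 0.82, 0.002, 400),
]
choice = pick_config(results, min_score=0.90, max_p95_ms=2000)
```

The top scorer is rejected here because it misses the latency bar, which is exactly the "business threshold, not leaderboard score" distinction.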

The punchline: agents stop looking like fragile demos and start behaving like measurable software components that sit beside data pipelines and dashboards.

Mosaic AI synthetic evaluations, explained simply

Most enterprises do not have perfectly labeled datasets for every agent task. Mosaic AI programmatically creates realistic task data and matching evaluation sets, then uses large language model judges to score outputs. Imagine hiring a QA team overnight that understands your domain well enough to grade consistently, then teaching that QA team to generate harder edge cases each time your agent improves.

This matters because evaluation drives iteration speed. With automatic evals, teams can try different grounding strategies, context windows, and tool use, then see which combination delivers the best precision, recall, and cost profile. In other words, the platform does not just compare models. It compares entire agent recipes.
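To show what "comparing entire agent recipes" means, the sketch below perturbs seed cases into harder variants and scores two extraction recipes on the same cases with the same judge. It is a toy: `perturb` is a hypothetical stand-in for Mosaic AI's synthetic data generation, and `exact_match_judge` is a deterministic stand-in for an LLM judge.

```python
import re
from statistics import mean

# Hypothetical labeled seed cases: (input text, expected extracted value).
SEED_CASES = [("Payment terms: net 30", "net 30"), ("Payment terms: net 60", "net 60")]


def perturb(cases):
    """Stand-in edge-case generator: same labels, messier inputs."""
    harder = []
    for text, label in cases:
        harder.append((text.upper(), label))
        harder.append(("  " + text.replace(": ", ":   ") + "  (see annex)", label))
    return cases + harder


def naive_recipe(text):
    # Relies on exact casing and spacing of the prefix.
    return text.split("Payment terms: ")[-1]


def robust_recipe(text):
    # Case-insensitive pattern that tolerates extra whitespace and trailing notes.
    m = re.search(r"net\s*(\d+)", text, flags=re.IGNORECASE)
    return f"net {m.group(1)}" if m else ""


def exact_match_judge(text, reference, answer):
    # Stand-in for an LLM judge; a real judge would grade meaning, not strings.
    return 1.0 if answer.strip().lower() == reference.lower() else 0.0


def score_recipes(recipes, cases, judge):
    """Score every recipe on the same cases with the same judge."""
    return {name: mean(judge(t, ref, fn(t)) for t, ref in cases) for name, fn in recipes.items()}


cases = perturb(SEED_CASES)
scores = score_recipes({"naive": naive_recipe, "robust": robust_recipe}, cases, exact_match_judge)
```

The naive recipe gets both seed cases right but drops to one in three once the harder variants appear, which is why generated edge cases speed up iteration.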

Real time moves to the front row

Agents stumble when answers depend on streams rather than tables, such as a fraud score computed from the last five transactions or a routing decision influenced by the most recent sixty seconds of clicks. The Tecton acquisition strengthens Databricks here. Tecton built a real-time feature platform that materializes streaming events into low-latency features. Reuters reported the deal on August 22, 2025 under the headline "Databricks to buy Tecton for agent push."

Concretely, this unlocks:

- Event-aware retrieval. Pull the last N interactions or transactions as features without building a separate microservice.

- Near real-time extraction. Parse a document or call transcript on arrival, then enrich it with streaming features before the agent decides what to do next.

- Low-variance performance. Because features are materialized quickly and predictably, latency and quality stay steadier during traffic spikes.
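The "last N transactions" and "last sixty seconds" features above can be sketched with two bounded queues. This is an in-memory illustration that assumes events arrive in timestamp order; `RecentActivityFeatures` is hypothetical and says nothing about how Tecton actually materializes features.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    ts: float      # event time in seconds
    amount: float


class RecentActivityFeatures:
    """Hypothetical feature view: last-N transactions plus a sliding time window."""

    def __init__(self, last_n=5, window_s=60.0):
        self.last = deque(maxlen=last_n)  # keeps only the N most recent events
        self.window = deque()             # events inside the time window, oldest first
        self.window_s = window_s

    def ingest(self, txn: Txn):
        # Assumes in-order arrival; late events would need a different structure.
        self.last.append(txn)
        self.window.append(txn)
        self._evict(txn.ts)

    def _evict(self, now):
        while self.window and self.window[0].ts <= now - self.window_s:
            self.window.popleft()

    def features(self, now):
        self._evict(now)
        amounts = [t.amount for t in self.last]
        return {
            "last_n_sum": sum(amounts),
            "last_n_max": max(amounts, default=0.0),
            "txn_count_window": len(self.window),
        }


fv = RecentActivityFeatures(last_n=3, window_s=60)
for ts, amt in [(0, 10.0), (20, 25.0), (50, 5.0), (70, 40.0)]:
    fv.ingest(Txn(ts, amt))
snapshot = fv.features(now=75)  # last 3 txns and the count inside (15, 75]
```

Each ingest and lookup touches only the ends of the queues, so the cost per request stays flat as history grows, which is the property behind steady latency under load.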

A simple rollout playbook for CIOs

You do not need a moonshot to capture value. A three-phase plan works for most enterprises.

Phase 1: Prove quality and safety in one narrow lane

- Pick a contained, high-value task with clear ground truth, for example contract clause extraction, insurance claim triage, or field-service troubleshooting.

- Build with Agent Bricks using governed datasets in Unity Catalog. Ground the model on a small, representative corpus. Instrument traces with MLflow from day one.

- Set numeric guardrails. For extraction, define minimum precision and recall. For assistants, define a minimum share of answers that are correct and cite a documented source. Build automatic eval suites with Mosaic AI to track progress.

- Enforce governance in the platform. Use existing roles and masking policies rather than inventing new ones.
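The precision and recall guardrail from Phase 1 can be computed as micro-averaged scores over (document, field, value) triples. A minimal sketch follows; the 0.95 and 0.90 defaults are placeholders rather than recommendations, and `field_precision_recall` and `passes_guardrail` are hypothetical helpers.

```python
def field_precision_recall(predicted, gold):
    """Micro precision/recall over (doc index, field, value) triples; None means not extracted."""
    pred = {(i, k, v) for i, d in enumerate(predicted) for k, v in d.items() if v is not None}
    true = {(i, k, v) for i, d in enumerate(gold) for k, v in d.items() if v is not None}
    tp = len(pred & true)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(true) if true else 0.0
    return precision, recall


def passes_guardrail(predicted, gold, min_precision=0.95, min_recall=0.90):
    p, r = field_precision_recall(predicted, gold)
    return p >= min_precision and r >= min_recall, p, r


# Illustrative contract-clause extraction results.
gold = [
    {"party": "Acme", "term": "24 months", "governing_law": "NY"},
    {"party": "Globex", "term": "12 months", "governing_law": "DE"},
]
pred = [
    {"party": "Acme", "term": "24 months", "governing_law": "NY"},
    {"party": "Globex", "term": "36 months", "governing_law": None},  # one wrong, one missed
]
ok, precision, recall = passes_guardrail(pred, gold)
```

A wrong value lowers precision and a missing value lowers recall, so tracking both tells you whether to tighten prompts or broaden grounding.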

Phase 2: Wire in real-time features and tools

- Identify the top two live signals that improve quality or reduce latency, for example recent transactions or last-session actions.

- Materialize these as features, not ad hoc queries, so they can be versioned and reused across agents.

- Add tool use carefully. Start with one deterministic tool such as a database write or ticket creation. Log every call with its arguments and outcome.

- Set service level objectives that combine latency, answer quality, and cost per request. Tie them to business metrics like claim touch time or first-call resolution.
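An SLO that combines latency, quality, and cost can be checked against a window of request records. The sketch assumes each record already carries a latency, a pass/fail quality verdict, and a cost; `RequestRecord`, `Slo`, and `check_slo` are hypothetical names, and the thresholds are illustrative.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass(frozen=True)
class RequestRecord:
    latency_ms: float
    quality_ok: bool  # e.g. an eval judge verdict or human review outcome
    cost_usd: float


@dataclass(frozen=True)
class Slo:
    p95_latency_ms: float
    min_quality_rate: float
    max_cost_per_request: float


def check_slo(records, slo):
    """Evaluate one window of traffic against all three objectives (needs >= 2 records)."""
    latencies = [r.latency_ms for r in records]
    p95 = quantiles(latencies, n=20, method="inclusive")[-1]  # last of 19 cut points = p95
    quality = sum(r.quality_ok for r in records) / len(records)
    cost = sum(r.cost_usd for r in records) / len(records)
    return {
        "p95_latency_ms": p95, "latency_ok": p95 <= slo.p95_latency_ms,
        "quality_rate": quality, "quality_ok": quality >= slo.min_quality_rate,
        "cost_per_request": cost, "cost_ok": cost <= slo.max_cost_per_request,
    }


# Illustrative window: 20 requests, one failed quality check.
records = [
    RequestRecord(latency_ms=100.0 * (i + 1), quality_ok=(i != 7), cost_usd=0.02)
    for i in range(20)
]
report = check_slo(records, Slo(p95_latency_ms=2000, min_quality_rate=0.97, max_cost_per_request=0.03))
```

Reporting each objective separately keeps the failure actionable: here latency and cost pass while quality misses, which points at the agent recipe rather than capacity.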

Phase 3: Productionize across two more use cases

- Fork the successful agent into a related domain. Reuse the evaluation harness and tracing. Add models or tools only if the metrics justify the change.

- Establish change management. Route agent configuration changes through a standard promotion path with automated checks. Treat agents like data pipelines, with owners, on-call rotations, and rollbacks.

- Publish a runbook. Include failure modes, escalation steps, and a safe mode that disables write tools while preserving read-only answers.
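The safe mode in the runbook bullet, together with per-call logging, can be as simple as a tool registry that knows which tools write. A minimal sketch that treats tools as plain Python callables; `Tool` and `ToolRegistry` are hypothetical and not part of any Databricks SDK.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    name: str
    fn: Callable[..., object]
    writes: bool  # True for tools that change external state (tickets, DB writes)


@dataclass
class ToolRegistry:
    tools: dict[str, Tool] = field(default_factory=dict)
    safe_mode: bool = False
    call_log: list[dict] = field(default_factory=list)

    def register(self, tool: Tool):
        self.tools[tool.name] = tool

    def call(self, name, **kwargs):
        tool = self.tools[name]
        if self.safe_mode and tool.writes:
            self.call_log.append({"tool": name, "args": kwargs, "outcome": "blocked: safe mode"})
            raise PermissionError(f"{name} is disabled while safe mode is on")
        try:
            result = tool.fn(**kwargs)
        except Exception as exc:
            self.call_log.append({"tool": name, "args": kwargs, "outcome": f"error: {exc}"})
            raise
        self.call_log.append({"tool": name, "args": kwargs, "outcome": "ok"})
        return result


registry = ToolRegistry()
registry.register(Tool("lookup_order", lambda order_id: {"order_id": order_id, "status": "shipped"}, writes=False))
registry.register(Tool("create_ticket", lambda summary: f"TICKET-1: {summary}", writes=True))

registry.safe_mode = True  # flipped by the on-call engineer per the runbook
answer = registry.call("lookup_order", order_id="A1")  # read-only tools keep working
try:
    registry.call("create_ticket", summary="refund request")
except PermissionError as err:
    blocked_reason = str(err)
```

Logging blocked and failed calls, not just successes, gives the on-call owner a complete record of what the agent attempted while safe mode was active.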

We have seen the operational layer matter in other ecosystems as well. For a cross-vendor view on production concerns, compare this playbook to how AWS frames operations in AgentCore’s ops layer for agents.

Procurement and finance tips

- Negotiate model capacity ahead of peak periods. Align compute reservations with quarterly cycles to avoid throttling surprises.

- Budget by scenario, not by token. Express costs as dollars per resolved ticket or per extracted document so trade-offs are clear.

- Keep an exit plan. Even if you standardize on Agent Bricks, require exportable logs, prompts, and evaluation artifacts to reduce lock-in risk.
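Budgeting by scenario is mostly arithmetic: fold model and platform costs into one number and divide by resolved units. The prices and volumes below are invented for illustration and are not OpenAI or Databricks list prices; `cost_per_resolved` is a hypothetical helper.

```python
def cost_per_resolved(tokens_in, tokens_out, price_in_per_m, price_out_per_m,
                      platform_cost, resolved):
    """Dollars per resolved unit, with token prices quoted per million tokens."""
    if resolved <= 0:
        raise ValueError("need at least one resolved unit")
    model_cost = tokens_in / 1e6 * price_in_per_m + tokens_out / 1e6 * price_out_per_m
    return (model_cost + platform_cost) / resolved


# Scenario A: pricier model, more tickets resolved (all figures made up).
a = cost_per_resolved(40_000_000, 8_000_000, 1.25, 10.0, platform_cost=370.0, resolved=2500)
# Scenario B: much cheaper model, fewer tickets resolved on the same traffic.
b = cost_per_resolved(40_000_000, 8_000_000, 0.15, 0.60, platform_cost=370.0, resolved=1700)
```

Scenario B spends far less on tokens but resolves fewer tickets, so its cost per resolved ticket ends up higher (about $0.224 versus $0.20). That trade-off is invisible in a per-token budget.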

Security and compliance checklist

- Validate that data residency, access logs, and key management inherit from Databricks controls you already audit.

- Add content filters for personally identifiable information before retrieval, not after generation.

- Test prompt injection defenses with red-team prompts added to the evaluation suite. Fail builds that exceed a defined leak rate.
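The first and last checklist items translate into code that can run in a build: redact obvious identifiers before text is used for retrieval, and fail the build when red-team prompts leak a planted canary string. This is a deliberately simple sketch; the regex patterns catch only a few formats, and `redact`, `leak_rate`, and `build_passes` are hypothetical helpers rather than a platform feature.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs much more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text):
    """Replace matched identifiers with a type tag before the text reaches retrieval."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def leak_rate(outputs, canaries):
    """Share of red-team outputs that contain any planted secret (canary) string."""
    leaks = sum(any(c in out for c in canaries) for out in outputs)
    return leaks / len(outputs)


def build_passes(outputs, canaries, max_leak_rate=0.0):
    return leak_rate(outputs, canaries) <= max_leak_rate


query = redact("Customer jane.doe@example.com, SSN 123-45-6789, asks about claim 42.")

# Hypothetical agent responses to red-team prompts; the canary was planted in the context.
canaries = ["CANARY-7731"]
outputs = ["I can't share that.", "The key is CANARY-7731", "Here is the summary.", "No."]
```

With a zero-tolerance default, the single leaked canary above is enough to fail the build, which is the behavior you want while agents hold real privileges.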

Security posture will keep rising in importance as more agents gain real privileges. For a sense of how vendors are packaging enterprise controls, see our analysis of Microsoft’s Security Store and enterprise guardrails.

Competitive map: what changes now

The partnership reframes competition around one question: who sits closest to enterprise data with built-in evaluation, observability, and governance.

- Snowflake. Cortex and the model catalog make it easier to bring models to data, and the company is investing in document intelligence and open-weight model efforts. Strengths include performance for structured analytics and a growing developer ecosystem. The challenge is to match Databricks on end-to-end agent workflow depth, especially synthetic evaluation loops and open tracing across diverse agent recipes.

- Amazon Web Services. Agents for Amazon Bedrock and the broader Bedrock ecosystem offer a deep model menu and tight links to operational systems. Bedrock shines when the rest of the stack is already in AWS and teams need a wide tool belt. The open question is whether customers prefer their most sensitive features, observability, and policy enforcement concentrated inside a general cloud layer or inside a specialized data intelligence layer.

- Cloudflare. Workers AI, Vectorize, and the edge network are attractive for latency-sensitive inference and global deployments. Cloudflare’s strength is bringing compute to the edge with simple primitives for routing and caching. To compete inside a data-native agent factory framing, Cloudflare would need deeper catalog-level governance, lineage, and evaluation tied to enterprise datasets, or tighter partnerships with the platforms that provide them.

What shifts with the Databricks and OpenAI alignment is gravity. If governed data, features, lineage, and audit live in Databricks, then running frontier models inside that same boundary reduces friction on every project. Competitors will respond, but the bar has moved from hosting a model and a vector index to running measurable agent programs on governed data with real-time features and first-class evaluation.

Concrete examples to start this quarter

- Commercial underwriting. Use Agent Bricks to extract key fields from broker submissions and financial statements. Ground answers in policy tables, then route ambiguous cases to a human with model-generated rationales. Add a streaming feature for recent loss events and re-score when new data arrives.

- Field service guidance. Ground an assistant on device manuals and service logs. Add a tool that opens a work order, but require a confidence score above a target and a citation to a vetted source. Stream recent sensor readings as features to tailor next steps.

- Accounts receivable automation. Extract invoice details, validate against purchase orders, and propose resolutions for mismatches. Log every write with trace identifiers. Evaluate accuracy weekly with Mosaic synthetic test sets that mirror your real invoice mix.
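The field-service rule (confidence above a target plus a citation to a vetted source before a write tool fires) reduces to a small gate. A sketch under stated assumptions: the source IDs, the 0.85 threshold, and the `ProposedAction`/`may_execute` names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical allow-list of vetted source IDs (manual revisions, service bulletins).
VETTED_SOURCES = frozenset({"manual:pump-x200:rev4", "kb:service-bulletin-118"})


@dataclass(frozen=True)
class ProposedAction:
    tool: str
    confidence: float           # model or calibrator score in [0, 1]
    citations: tuple[str, ...]  # source IDs the agent grounded its answer on


def may_execute(action, min_confidence=0.85, vetted=VETTED_SOURCES):
    """Allow a write only if confidence clears the target AND a citation is vetted."""
    if action.confidence < min_confidence:
        return False, "confidence below target; route to a human"
    if not any(c in vetted for c in action.citations):
        return False, "no citation to a vetted source; route to a human"
    return True, "ok"


approved = may_execute(ProposedAction("open_work_order", 0.92, ("manual:pump-x200:rev4",)))
unvetted = may_execute(ProposedAction("open_work_order", 0.92, ("forum:thread-55",)))
unsure = may_execute(ProposedAction("open_work_order", 0.60, ("manual:pump-x200:rev4",)))
```

Returning a reason alongside the decision lets the human handoff and the trace record say why the write was held back.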

Each of these workloads benefits from living inside the data plane. You avoid brittle exports, inherit governance, and measure quality with the same discipline you apply to analytics jobs.

What to watch next

- Enterprise model mix. With OpenAI models native on the platform and open-weight models also in the catalog, expect a pragmatic split. Some tasks will favor the highest-accuracy models; others will favor tuned open-weight models that meet cost targets with similar quality.

- Native guardrails. Expect tighter integration between content filtering, prompt injection defenses, and lineage so that evaluation failures can block promotion automatically.

- Cost telemetry. The teams that win will publish per-unit cost and quality dashboards that product managers can trust. This shifts conversations from tokens to unit economics.

For context on how front-line tools are becoming truly agentic, see our take on how agents moved from chat to action. The market is converging on the same conclusion: measurable operations and strong governance win.

The takeaway and your next step

The winners in enterprise agents will be the platforms that sit closest to enterprise data and ship evaluation, observability, and governance as first-class citizens. Databricks pushed the market in that direction by bringing OpenAI models into the data plane, industrializing evaluation with Mosaic AI, and tightening real-time signals through Tecton.

If you own the data plane and you can prove quality and safety inside it, you own the agent program. The practical next step for a CIO is to pick one workload and run the three-phase rollout above. Measure results, ship the workflow, and make the business case with real metrics. Agents stop being hype when they meet governance, evaluation, and real-time signals where your data already lives. That is the factory floor that matters.