Agent-to-Agent QA Arrives: LambdaTest Makes AI Testable

LambdaTest introduces an agent-to-agent testing platform that brings a reliability layer to AI. Multimodal scenarios, judge models, and cloud-parallel runs make chat, voice, and workflow agents dependable in production.

Breaking: a reliability layer for agents

On August 19, 2025, LambdaTest announced a private beta of an agent-to-agent testing platform built to evaluate the reliability of chat, voice, and workflow agents at scale. In the company’s words, it is a first-of-its-kind approach to validating conversation flows, intent handling, tone, reasoning, and safety across thousands of runs. The timing matters. Teams now trust agents with customer support, sales enablement, operations, and internal tooling, yet most still rely on sporadic spot checks or short prompt scripts. LambdaTest’s move signals a shift to agent-native quality assurance, where automated agents systematically test other agents in a controlled but realistic environment. If you want the high-level positioning and stakes, read LambdaTest’s private-beta announcement.

Think of today’s agents as new pilots. We already let some of them fly night routes with passengers on board. What was missing was a wind tunnel and a simulator that could generate storms on command, then grade every landing with impartial metrics. Agent-to-agent QA is that simulator. It assembles test agents that craft edge cases, adversarial probes, and real-life chores, while judge agents score outcomes for factuality, safety, compliance, efficiency, and user experience.

Why this matters now

The pattern is familiar. When software moved to the web, we needed cross-browser testing and continuous integration. When machine learning hit production, we built monitoring for drift and fairness. Agents represent a third shift, and they bring two hard realities:

- They are stochastic. The same prompt can lead to different answers. Traditional deterministic scripts miss this by design.

- They are multimodal and tool-using. Agents read documents, browse internal systems, call actions, and respond through text or voice. Canned inputs do not capture this blend.

Agent-native QA absorbs these realities instead of fighting them. It evaluates agents statistically across distributions of tasks, users, and contexts, then expresses confidence in the outcomes rather than a brittle pass or fail.

From hand-written cases to multi-agent test swarms

A single agent against a single test script will always miss the corners that trip real users. Multi-agent swarms fix that by assigning roles and letting threads evolve over multiple turns.

- A driver agent behaves like a realistic user persona. It carries a goal, a mood, and a memory of prior interactions.

- An adversary agent probes for jailbreaks and failure modes. It uses ambiguous wording, subtle policy edge cases, and social engineering.

- A domain critic validates facts and business rules against sources of record, such as price lists, policies, or knowledge bases.

- A policy auditor evaluates safety, privacy, compliance, and brand tone.

The swarm generates conversation threads rather than single prompts. Each thread adapts mid-flight, the way real users do when a refund gets complicated, a clinician must respect insurance constraints, or a warehouse operator needs to reorder a part with a unit mismatch. This is closer to production reality than any hand-written one-liner.

A helpful metaphor is a traffic circle. In production, your agent is a car merging into a swirling flow. A test swarm recreates the flow at different speeds and densities. It asks whether your car signals, merges, yields, and avoids accidents, not only once but hundreds of times under varying conditions.

Multimodal scenario generation that mirrors reality

Real incidents almost never arrive as clean text. A customer shares a screenshot of a billing error. A sales rep pastes a spreadsheet of discounts. A caller mispronounces a product name. A workflow agent reads a contract, calls a pricing service, and writes to a ticketing system. A competent test harness needs to mirror that blended reality.

LambdaTest’s pitch includes multimodal inputs that combine text, images, audio, and documents into test ingredients. That lets a driver agent upload a contract PDF while speaking a question, then ask for a summary and a correction. The system can check whether the target agent extracts the right clause, respects a policy that forbids returning personally identifiable information, and produces a clear, auditable trace. This matters for agents that live in the browser or the desktop too, a theme we explored in the browser-native agent battleground. LambdaTest also plugs into a parallel execution cloud so thousands of scenarios can run at once and roll up into concise diagnostics. For an overview of what is bundled, see the Agent-to-Agent Testing product page.

Coverage for agents explained

Coverage used to mean line or branch coverage. For agents, coverage means distributions: user intents, modalities, accents, domain objects, policy constraints, and tool invocations. You will not get there one test at a time.

Cloud-parallel runs help in three ways:

- Scale. You can run 10,000 multi-turn conversations in under an hour by fanning them across a large worker pool. This makes weekly or even daily full sweeps realistic.

- Variance control. Because agents are non-deterministic, you must repeat trials. Running five to ten samples per scenario enables confidence intervals, not just a single pass or fail.

- Combinatorics. Mixing personas, content types, tools, and policies creates a combinatorial explosion. Parallelism keeps the wall clock low enough to test meaningful slices on every build.
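To make the variance-control point concrete, here is a minimal sketch of turning repeated trials into an interval rather than a single verdict. It uses the Wilson score interval, which is one common choice for small samples and an assumption on our part, not something LambdaTest has said it uses. The function name and the sample counts are illustrative.

```python
import math

def wilson_interval(passes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate; z=1.96 gives roughly 95% coverage."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = passes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    # Clamp to [0, 1] to absorb floating-point noise at the edges.
    return max(0.0, center - half), min(1.0, center + half)

# Hypothetical scenario: 9 of 10 repeated samples passed.
low, high = wilson_interval(passes=9, trials=10)
print(f"observed pass rate 0.90, interval [{low:.2f}, {high:.2f}]")
```

With only ten samples the interval is wide, roughly 0.60 to 0.98, which is the practical argument for repeating trials in parallel rather than trusting one run.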

A pragmatic approach is layered coverage:

- Keep a core set of high-value scenarios on every pull request.

- Add an extended set nightly.

- Maintain an exploratory fuzzing pool that seeds long-tail cases weekly.

Tags let you target layers when a subsystem changes. If your retrieval stack gets an update, run the retrieval-tagged layers at higher frequency.
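A sketch of how layered coverage and tags could fit together, assuming a simple in-house scenario registry. The `Scenario` type, the layer names, and the trigger names are all hypothetical and do not reflect any LambdaTest API.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Scenario:
    name: str
    layer: str  # "core", "extended", or "fuzz" (hypothetical layer names)
    tags: frozenset[str] = field(default_factory=frozenset)

# Which layers run for each pipeline trigger (assumed mapping).
LAYERS_BY_TRIGGER = {
    "pull_request": {"core"},
    "nightly": {"core", "extended"},
    "weekly": {"core", "extended", "fuzz"},
}

def select(scenarios, trigger, changed_tags=frozenset()):
    """Pick the trigger's layers, plus any scenario tagged with a changed subsystem."""
    layers = LAYERS_BY_TRIGGER[trigger]
    return [s for s in scenarios if s.layer in layers or s.tags & changed_tags]

suite = [
    Scenario("refund_over_limit", "core", frozenset({"billing"})),
    Scenario("kb_lookup_long_doc", "extended", frozenset({"retrieval"})),
    Scenario("garbled_voice_order", "fuzz", frozenset({"voice"})),
]

# A pull request that touches the retrieval stack pulls in retrieval-tagged cases early.
selected = select(suite, "pull_request", frozenset({"retrieval"}))
print([s.name for s in selected])
```

Here the retrieval-tagged scenario runs on the pull request even though it normally lives in the nightly layer.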

Metrics that finally matter

Agent QA needs metrics that correlate with user trust and business risk. Here is a concise set teams can implement now:

- Hallucination rate. Pair questions with gold references or trusted retrieval sources. Score a response as hallucinated when it asserts facts not supported by those references. Report a binary rate and a severity bucket.

- Factual completeness. Many failures are omissions. Define checklists for required fields or steps per intent. Judge agents score whether the answer contains each element, then compute completeness.

- Toxicity and harm. Run outputs through one or more classifiers that flag unsafe content. Track a weighted toxicity rate that considers severity in addition to frequency.

- Bias and disparate impact. Simulate diverse personas and compare outcomes such as approvals, escalations, or discounts. Track disparity ratios per intent. For text outputs, add a representation score that catches stereotyped phrasing.

- Policy adherence. Treat policies as executable rules. If a refund over 500 dollars requires identity verification, the test swarm should assert that the agent asks for it. Each rule becomes a pass or fail probe with context-aware exceptions.

- Dialogue quality. Score groundedness, coherence, and tone using a judge ensemble plus a small human sample for calibration. Publish inter-rater reliability so teams can see when judges drift.

- Cost and latency budgets. Track tokens, tool calls, and p95 latency per scenario. If a new prompt chain solves a task but doubles cost, the gate should warn you before the bill arrives.
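Three of these metrics reduce to short formulas once the judge outputs are in hand. The sketch below shows one plausible way to compute completeness, a disparity ratio, and p95 latency. The function names are ours, the disparity ratio is defined here as lowest rate over highest rate (one of several conventions), and the percentile uses the nearest-rank method.

```python
def completeness(required: set[str], found: set[str]) -> float:
    """Share of required checklist elements that the answer contained."""
    return len(required & found) / len(required) if required else 1.0

def disparity_ratio(rate_by_persona: dict[str, float]) -> float:
    """Lowest favorable-outcome rate divided by the highest; 1.0 means parity."""
    highest = max(rate_by_persona.values())
    return min(rate_by_persona.values()) / highest if highest > 0 else 1.0

def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty sample."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * n
    return ordered[rank - 1]

# Hypothetical judge outputs for one intent.
print(completeness({"amount", "date", "reason", "next_step"}, {"amount", "date", "reason"}))
print(disparity_ratio({"persona_a": 0.8, "persona_b": 0.4}))
print(p95([float(ms) for ms in range(100, 2100, 100)]))
```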

Two practices harden these metrics. First, use multi-judge consensus. If a binary decision comes from three different judges with different architectures, the combined score is more stable. Second, store rationales and evidence with every decision so you can audit disagreements and retrain judges as labels evolve.
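A minimal sketch of both practices together: a majority vote across judges, with each judge's rationale kept alongside its label so disagreements can be audited. The `Verdict` type, the judge names, and the tie-handling rule are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Verdict:
    judge: str      # hypothetical judge identifier
    label: str      # for example "pass" or "fail"
    rationale: str  # kept so disagreements can be audited later

def consensus(verdicts: list[Verdict]) -> dict:
    """Majority vote over judge labels; ties resolve to 'needs_review'."""
    if not verdicts:
        raise ValueError("at least one verdict is required")
    ranked = Counter(v.label for v in verdicts).most_common()
    top_label, top_votes = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == top_votes
    return {
        "label": "needs_review" if tied else top_label,
        "agreement": top_votes / len(verdicts),
        "dissent": [v for v in verdicts if v.label != top_label],
    }

result = consensus([
    Verdict("judge_a", "pass", "Answer matches the price list."),
    Verdict("judge_b", "pass", "All cited figures are supported."),
    Verdict("judge_c", "fail", "Discount tier is not in the source."),
])
print(result["label"], round(result["agreement"], 2), len(result["dissent"]))
```

Keeping the dissenting verdicts in the result is what makes later audits and judge retraining possible.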

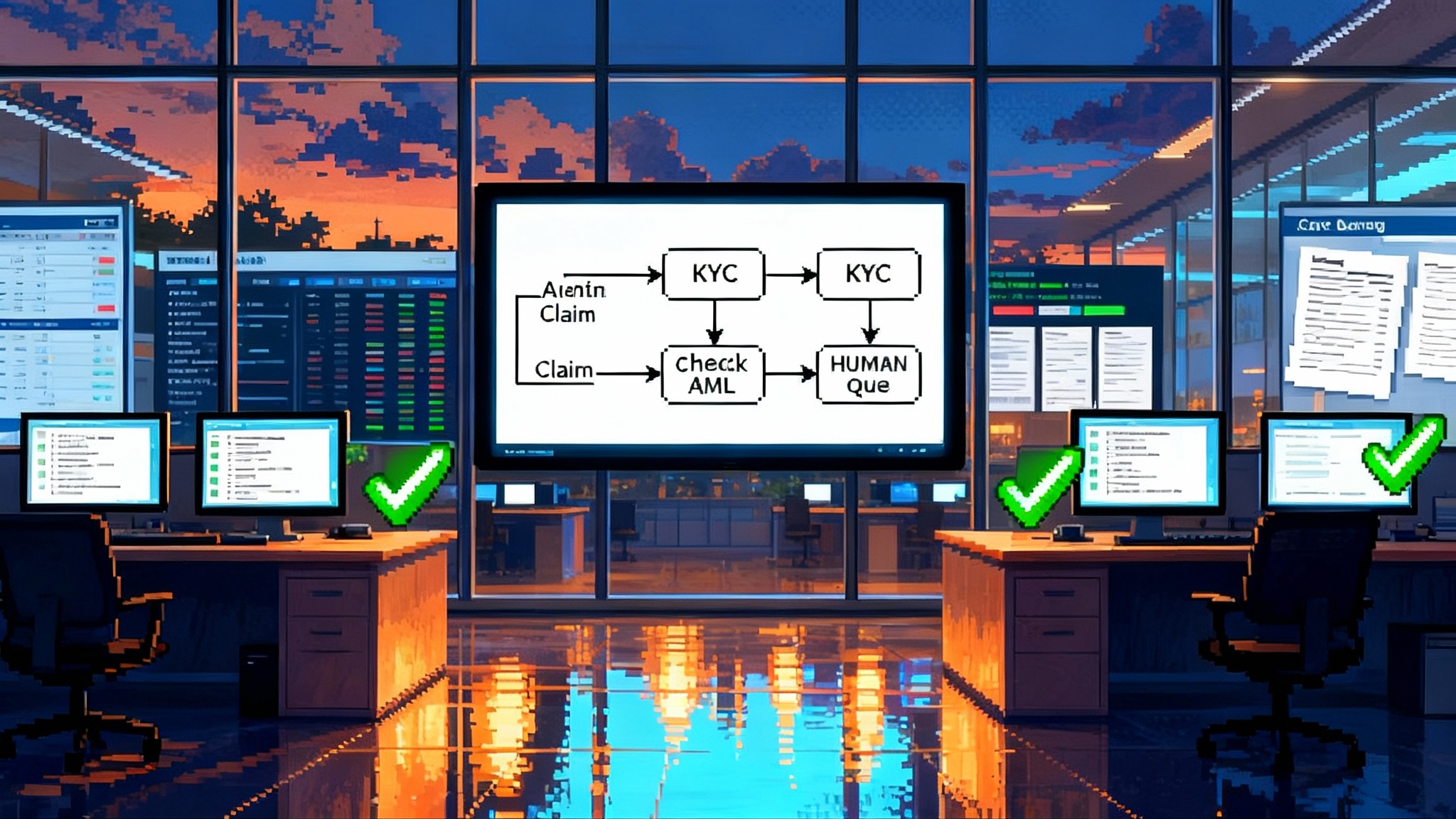

Policy checks as code

Policy documents are not enough. Convert them into rules with explicit scopes, like request types, user roles, and confidence thresholds. For example:

- Redact card numbers in outputs when confidence in the entity type exceeds 0.7.

- Block tool calls that reach external domains unless the user is on an allowlist.

- Require a supervisor escalation when the agent considers overrides to inventory rules.

Attach these checks to test scenarios so every run yields a policy score. Version the rules so you know which policy revision a build passed. When legal or brand teams update guidelines, rerun the suite against the new rules and see what breaks.
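Here is one way policy rules could look as code, as a sketch only. Each rule carries a version, a scope, and an assertion. The `Turn` and `Rule` types, the rule identifiers, and the version label are hypothetical, and the card pattern is a crude stand-in for a real entity detector with confidence scores.

```python
import re
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Turn:
    """Hypothetical summary of one agent turn, produced by the test harness."""
    request_type: str
    output_text: str
    refund_amount: float = 0.0
    asked_for_identity: bool = False

@dataclass(frozen=True)
class Rule:
    rule_id: str
    version: str                     # policy revision this rule encodes
    applies: Callable[[Turn], bool]  # scope: when the rule is relevant
    passes: Callable[[Turn], bool]   # assertion: what compliant behavior looks like

# Crude illustrative pattern for runs of 13 to 16 digits; not a real card detector.
CARD_LIKE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")

RULES = [
    Rule("refund-identity", "r1",
         applies=lambda t: t.request_type == "refund" and t.refund_amount > 500,
         passes=lambda t: t.asked_for_identity),
    Rule("no-card-numbers", "r1",
         applies=lambda t: True,
         passes=lambda t: CARD_LIKE.search(t.output_text) is None),
]

def policy_score(turn: Turn, rules: list[Rule] = RULES) -> tuple[float, list[str]]:
    """Return the share of applicable rules that passed, plus the failed rule ids."""
    applicable = [r for r in rules if r.applies(turn)]
    failed = [f"{r.rule_id}@{r.version}" for r in applicable if not r.passes(turn)]
    score = 1.0 - len(failed) / len(applicable) if applicable else 1.0
    return score, failed

score, failed = policy_score(Turn("refund", "Your refund is approved.", refund_amount=750.0))
print(score, failed)
```

Reporting failures as `rule_id@version` ties each result to a specific policy revision, so a rerun against updated rules shows exactly what changed.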

A four-week startup playbook

You do not need a large team to get started. Here is a pragmatic sequence a seed or Series A company can run over four to six weeks.

Week 1: instrument the agent

- Log every turn with timestamp, user persona, prompt template hash, model version, temperature, tool calls, and retrieved sources.

- Store audio inputs and transcripts for voice flows.

- Tag intents and outcomes so you can slice metrics.

- Capture cost and latency and set budgets per endpoint.
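The Week 1 logging fields map naturally onto a single record per turn. The sketch below is one possible shape, written as a JSON line. The field names, the truncated hash length, and the sample values are assumptions rather than a prescribed schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

def template_hash(template: str) -> str:
    """Short, stable fingerprint of a prompt template (12 hex chars is an arbitrary choice)."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]

@dataclass
class TurnRecord:
    persona: str
    intent: str
    outcome: str
    prompt_template_hash: str
    model_version: str
    temperature: float
    tool_calls: list[str]
    retrieved_sources: list[str]
    latency_ms: float
    cost_usd: float
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def to_log_line(record: TurnRecord) -> str:
    """Serialize one turn as a single JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)

record = TurnRecord(
    persona="impatient_customer",         # hypothetical persona name
    intent="refund_request",
    outcome="escalated",
    prompt_template_hash=template_hash("You are a support agent. {context}"),
    model_version="model-2025-08",        # placeholder, not a real model id
    temperature=0.2,
    tool_calls=["lookup_order", "create_ticket"],
    retrieved_sources=["refund_policy_v3"],
    latency_ms=1240.0,
    cost_usd=0.0031,
)
print(to_log_line(record))
```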

Week 2: build a high-signal test swarm

- Draft 30 to 50 scenarios that mirror revenue or risk moments, such as refunds and account changes.

- Create at least three driver personas and one adversary persona with tone, patience, and domain knowledge.

- Author policy checks for the top ten rules. Keep them simple and testable.

Week 3: put gates in the pipeline

- Add a pre-merge gate that runs the small suite in parallel and blocks when failures exceed thresholds. Use a five-run average to absorb randomness.

- Add a nightly expanded run of 200 to 500 scenarios. Publish a dashboard with hallucination rate, toxicity rate, policy adherence, and p95 latency.

- Add a weekly fuzzing job that auto-generates variants around recent incidents and top confusion triggers from production logs.
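The pre-merge gate can be sketched as an average over repeated runs compared against limits. The metric names and threshold values below are placeholders to tune for your own risk tolerance, and the function is an illustration rather than any vendor's gate logic.

```python
from statistics import mean

# Hypothetical limits on failure rates, expressed as fractions.
MAX_RATES = {"hallucination": 0.02, "policy_violation": 0.02}

def gate(runs: list[dict[str, float]],
         max_rates: dict[str, float] = MAX_RATES) -> tuple[bool, dict[str, float]]:
    """Average each metric over repeated runs; block when any average exceeds its limit."""
    if not runs:
        raise ValueError("at least one run is required")
    averages = {name: mean(run[name] for run in runs) for name in max_rates}
    breaches = {name: avg for name, avg in averages.items() if avg > max_rates[name]}
    return (not breaches), breaches

# Five runs of the small suite; one noisy run does not block the merge on its own.
five_runs = [
    {"hallucination": 0.00, "policy_violation": 0.0},
    {"hallucination": 0.05, "policy_violation": 0.0},
    {"hallucination": 0.00, "policy_violation": 0.0},
    {"hallucination": 0.00, "policy_violation": 0.0},
    {"hallucination": 0.00, "policy_violation": 0.0},
]
passed, breaches = gate(five_runs)
print("merge allowed" if passed else f"blocked: {breaches}")
```

Averaging trades sensitivity for stability. A team that cannot tolerate any high-severity failure might add a separate rule that blocks on a single occurrence.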

Week 4: set drift monitors and rollouts

- Create reference distributions for intents, tools, and outcomes. Monitor drift with measures such as KL divergence or the population stability index. Alert when differences cross thresholds for two consecutive days.

- Use shadow traffic to compare a new model or prompt against the current champion. Route five percent of live traffic to the challenger after the gate passes. Promote or roll back based on stable improvements over several days.

- Establish a red team rotation. Every two weeks, craft new jailbreaks and policy edge cases. The best of these go into the adversary playbook.
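For the drift monitor, a minimal sketch of the population stability index over intent counts, plus the two-consecutive-days alert rule. The 0.2 threshold is a commonly quoted rule of thumb and an assumption here, as are the function names and the sample counts.

```python
import math

def psi(expected: dict[str, int], actual: dict[str, int], eps: float = 1e-6) -> float:
    """Population stability index between two count distributions over categories."""
    e_total = sum(expected.values())
    a_total = sum(actual.values())
    total = 0.0
    for key in set(expected) | set(actual):
        # Floor each share at eps so unseen categories do not produce log(0).
        e = max(expected.get(key, 0) / e_total, eps)
        a = max(actual.get(key, 0) / a_total, eps)
        total += (a - e) * math.log(a / e)
    return total

def should_alert(daily_psi: list[float], threshold: float = 0.2, days: int = 2) -> bool:
    """Alert only when the most recent `days` values all exceed the threshold."""
    recent = daily_psi[-days:]
    return len(recent) == days and all(value > threshold for value in recent)

reference = {"refund": 50, "billing": 50}   # hypothetical reference intent counts
today = {"refund": 80, "billing": 20}       # hypothetical production counts
print(round(psi(reference, today), 3))
print(should_alert([0.05, 0.31, 0.42]))
```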

If you already run CI, treat the agent suite as another stage. If not, start with a scheduled job that posts a short summary to the team chat after each run. For context on how API surfaces are shifting to agents, see our take on Theneo's agent-first API shift.

How multi-agent testers will specialize

Agent testing is a complex skill. Expect a marketplace of specialized tester agents and human experts in 2026.

- Domain validators. Agents that encode tax rules, medical coding, or export controls will validate workflows that a general judge cannot.

- Tone and brand reviewers. Judge agents trained on a company’s voice guide will catch phrasing that violates brand rules without being generically toxic.

- Adversarial prompt engineers. Specialists who craft evolving jailbreaks and leakage tests, often packaged as subscription adversary packs.

- Compliance auditors. Services that produce signed test reports for regulated releases, with reproducible seeds and artifact bundles that a regulator can replay.

On the business side, software buyers will evaluate vendors not only on features but on the quality of their agent QA programs. Expect procurement checklists to ask for the latest regression reports, policy coverage, and evidence that high-risk intents pass with strong margins. This mirrors how operations teams now weigh agent maturity in real deployments, as we saw when covering Codi's AI office manager.

Benchmarks, CI pipelines, and standardization

Benchmarks appear when buyers need comparability. We can expect two kinds.

- Task benchmarks with human-in-the-loop grading. These will measure how agents handle specific intents in finance, health, support, and operations with structured rubrics.

- Safety and policy benchmarks with public seed sets. These will resemble standardized jailbreak batteries, privacy probes, and redaction tests that any vendor can run.

For continuous integration and delivery, the key adoption path is plugin-level integrations. Test suites should run on pull requests and pre-release, then store signed result packages tied to build identifiers. Each artifact should include hashes of prompts, judge versions, policy rules, and seed sets. That is how teams will reproduce outcomes and defend releases.
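A result package of that kind could start from a manifest like the one sketched below, which hashes prompts, policy rules, and seed sets and records judge versions against a build identifier. This only produces a deterministic digest. The actual signing step would depend on whatever signing tooling a team already uses and is left out. All field names and sample values are hypothetical.

```python
import hashlib
import json

def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def build_manifest(build_id: str, prompts: dict[str, str],
                   judge_versions: dict[str, str],
                   policy_rules: str, seeds: list[int]) -> dict:
    """Collect hashes of everything needed to reproduce a run, plus an overall digest."""
    manifest = {
        "build_id": build_id,
        "prompt_hashes": {name: sha256_hex(text) for name, text in prompts.items()},
        "judge_versions": dict(judge_versions),
        "policy_rules_hash": sha256_hex(policy_rules),
        "seed_set_hash": sha256_hex(json.dumps(sorted(seeds))),
    }
    # Canonical JSON (sorted keys, fixed separators) keeps the digest stable.
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    manifest["manifest_digest"] = sha256_hex(canonical)
    return manifest

manifest = build_manifest(
    build_id="build-1234",                             # placeholder identifier
    prompts={"support_system": "You are a support agent."},
    judge_versions={"factuality_judge": "v2"},
    policy_rules="refund-identity@r1\nno-card-numbers@r1",
    seeds=[7, 11, 13],
)
print(manifest["manifest_digest"])
```

The digest is what a downstream signing or attestation step would cover, so any change to a prompt, rule, judge version, or seed yields a different value.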

Governance will likely converge on three ideas.

- Controls as code. Policies will be versioned, testable, and promoted through environments, just like infrastructure.

- Signed attestations. Release pipelines will attach attested agent QA reports to each deploy. The attestation proves the build passed a known battery at a known time with known rules.

- Independent audits. As with penetration tests, agent QA audits will become a standard vendor obligation in higher risk industries. Expect cross-industry templates that reduce negotiation time.

How LambdaTest fits the stack

LambdaTest already operates a large-scale cloud for cross-browser and mobile testing. Bringing agent-to-agent testing into that cloud is a logical extension. The private beta bundles three things buyers care about: multimodal scenario generation; judge-driven scoring for bias, hallucinations, and completeness; and cloud-parallel execution married to reporting. If the company grows an ecosystem of prebuilt policy packs and judge ensembles, it could become a default hub for agent QA runs in 2026. Even if teams prefer to keep some test logic in-house, a cloud-scale harness simplifies running thousands of multi-turn tests reliably.

Just as important, the platform turns agent reliability from craft into discipline. When agents become predictable, unplanned escalations shrink, customer satisfaction rises, and compliance reviews get faster. The economics improve alongside trust.

What to do this quarter

- Appoint an owner for agent QA who has both product sense and risk instincts.

- Select a handful of intents that matter to business goals. Write scenarios that read like real transcripts, not one-line prompts.

- Start with a small swarm and a baseline judge ensemble. Expand only after the core gate is stable.

- Convert policies into code. You cannot test what you cannot execute.

- Set explicit numeric targets. For example, hallucination rate below 2 percent, toxicity below 0.1 percent at high severity, policy adherence above 98 percent, and p95 latency under two seconds on priority intents.

- Budget for parallelism. Whether you use LambdaTest or roll your own, plan to run thousands of conversations per build to gain confidence.

The bottom line

Agent-to-agent QA is the missing rail for the agent economy. Without it, teams ship brittle copilots that charm in demos and stumble in production. With it, you get a reliability layer that measures what really matters. The idea is simple. Use agents to test agents at scale, turn policies into code, and make the results reproducible. If 2025 was the year companies tried agents in earnest, 2026 will be the year they industrialize them. The winners will treat QA as agent-native, invest in test swarms and multimodal scenarios, and wire the whole program into their pipelines. When reliability becomes programmable, velocity follows.