AWS AgentCore signals the start of agent runtime wars

Amazon previewed Bedrock AgentCore, a managed runtime for AI agents with isolation, identity, memory, secure tools, and observability. Here is what it changes for production teams and how to ship value in 90 days.

The news and why it matters

On July 16, 2025, Amazon Web Services introduced Bedrock AgentCore in preview, a cloud-native foundation for running production AI agents instead of fragile demos. The announcement set a clear goal: move agent development from lab frameworks into operational software that scales securely and predictably. AWS says AgentCore brings session isolation, long-running workloads, built-in memory, identity integration, secure browser and code tools, and first-class observability under one roof. That is an ambitious claim, and it marks the opening salvo in the agent runtime wars. For specifics on regions, limits, and supported services during preview, see the announcement on the AWS What’s New page.

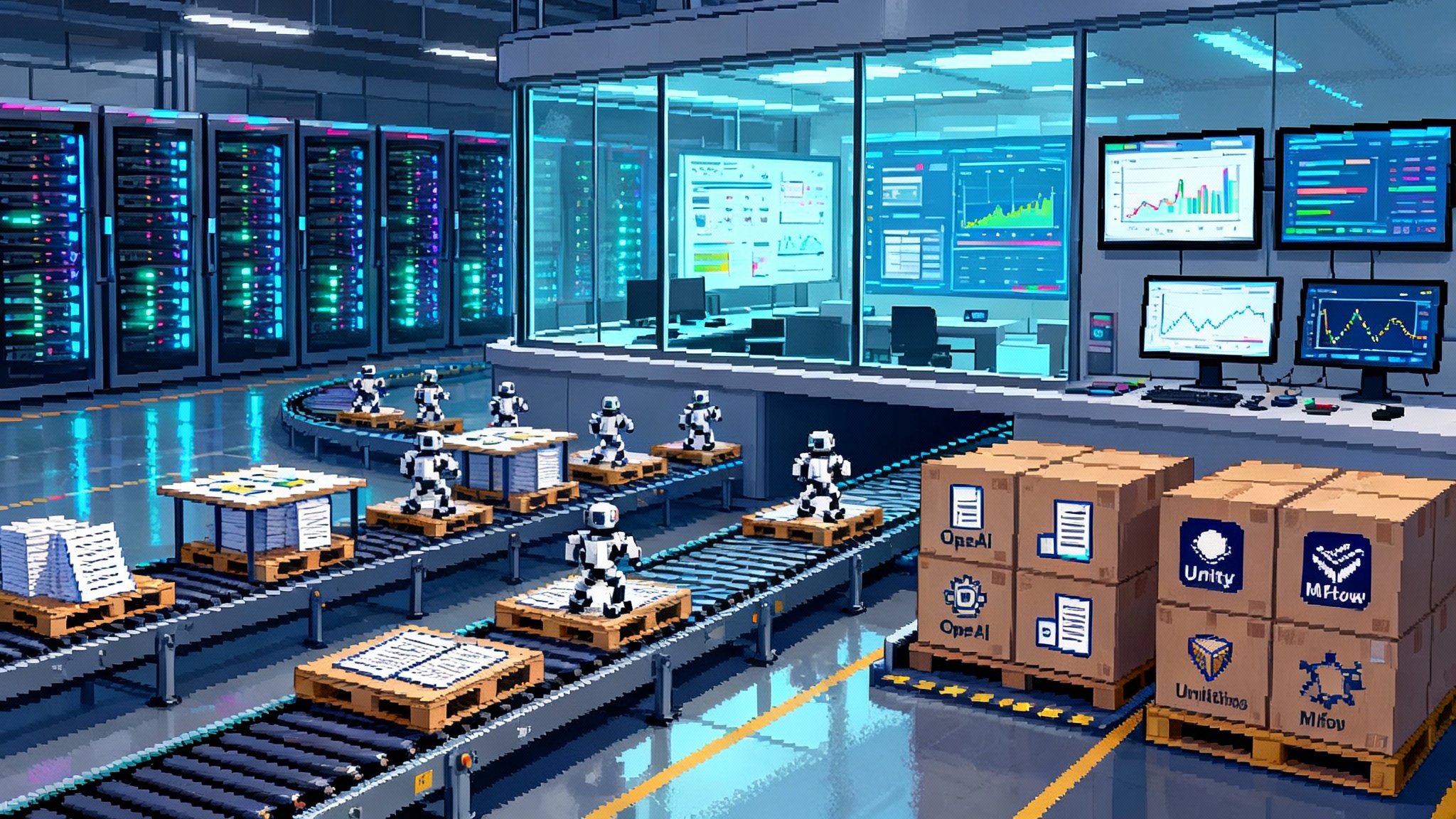

Why this matters now is simple. During the first wave of agent experimentation, teams stitched together open source libraries and scripts to build prototypes that often failed in production. They struggled with run isolation, tool security, session state, identity, and debugging. AgentCore’s pitch is to standardize the runtime so the hard parts become boring, observable, and safe. This is how databases, containers, and serverless crossed the chasm into enterprise operations. Agents are getting the same treatment.

From experimental frameworks to production ops

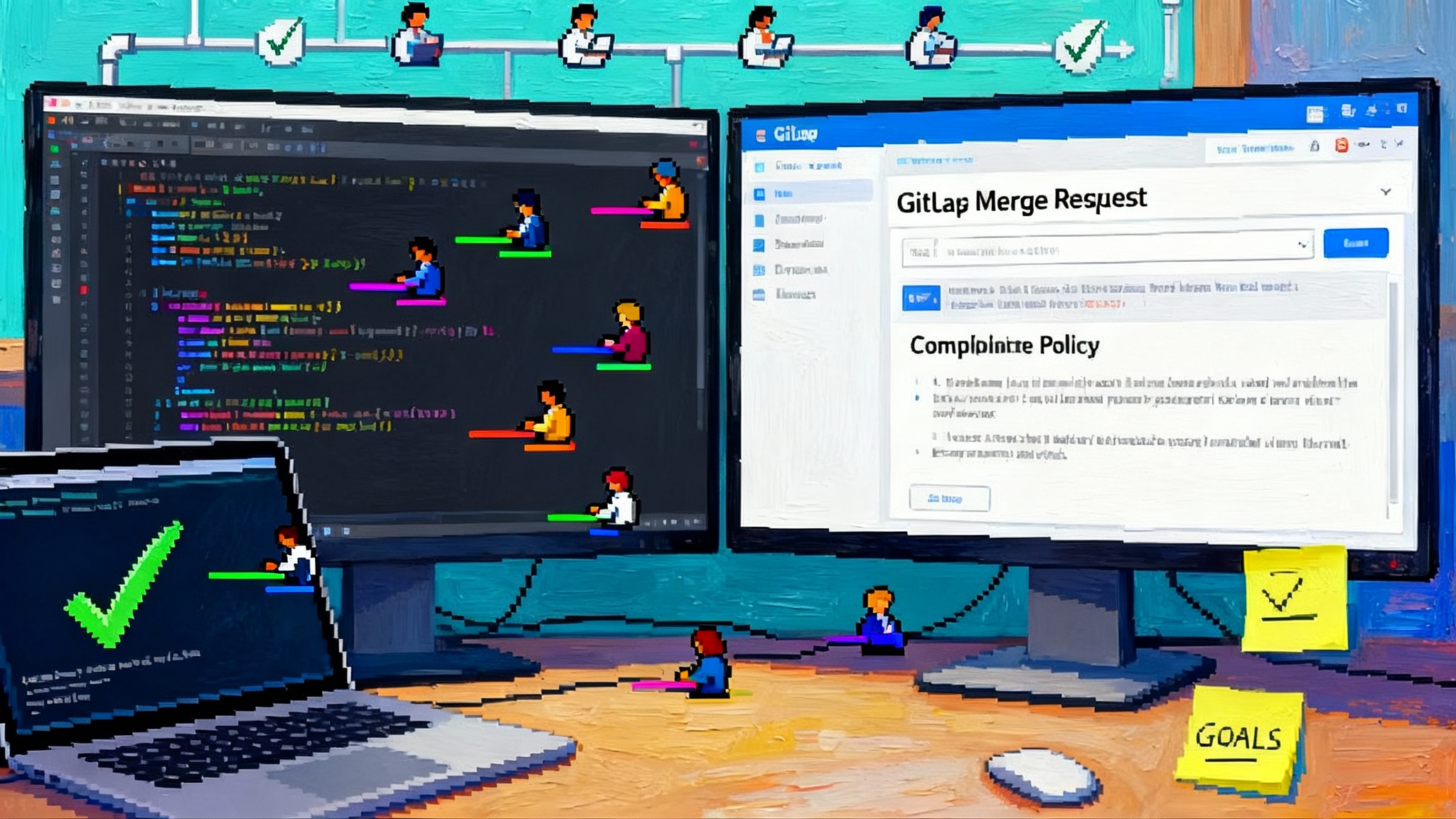

In 2024 and early 2025, the center of gravity sat in agent frameworks. Developers gravitated to LangGraph, CrewAI, and other orchestration kits to explore reasoning loops, multi-agent topologies, and tool use. That period produced valuable ideas and patterns, but it also exposed operational gaps. Enterprises need cross-tenant isolation. They need identity-aware actions aligned to least privilege. They need agents that can run for hours without babysitting, and they need traces and metrics that satisfy on-call, risk, and finance. AgentCore leans directly into those concerns.

The message is not to abandon frameworks. AWS says AgentCore works with models inside or outside Bedrock and supports popular open source stacks. The shift is about who owns the operational contract. Frameworks define behavior. The runtime is where that behavior becomes accountable in production.

If you have been following the platform race, you have seen the same arc elsewhere. Google has been pushing a consolidated enterprise view of agents that we covered in Google’s big bet on AI agents. And computer-use agents are starting to land in real environments, as documented in UiPath and OpenAI production agents. AgentCore arrives right as these threads converge, which is why it matters.

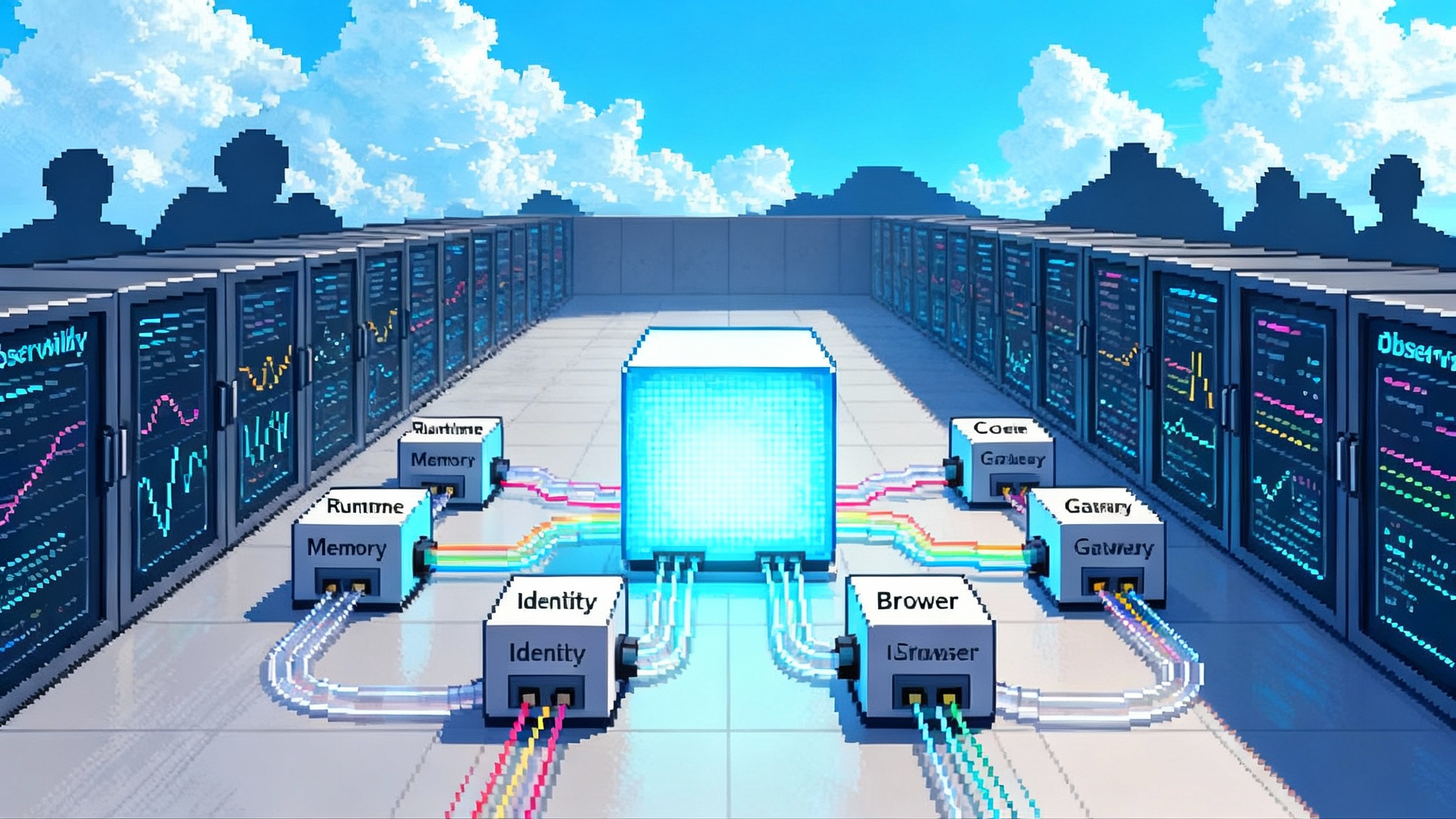

AgentCore’s primitives, explained with concrete examples

Think of an agent as a small team member who can read, plan, and act. To employ that team member responsibly, you need a desk, a badge, a notebook, a browser, a sandboxed shell for computation, and an operations console. AgentCore maps to those needs.

1) Runtime: standardized execution with isolation and long runs

The Runtime is the desk and the guardrail. It provides true session isolation so one customer’s run cannot bleed into another. It also supports long-running tasks for up to eight hours in preview, which is crucial for workflows like reconciling shipments, backfilling data, or handling multi-hop research. Picture a procurement agent that must fetch purchase orders, cross-check supplier portals, generate variance reports, and request approvals. That may involve dozens of tool calls, queue waits, and model invocations. A runtime that survives and accounts for long phases without leaking resources is the difference between a demo and a system you can put on an on-call rotation.

A pragmatic detail for builders: AWS describes low-latency starts and per-session isolation in the preview materials. If you have been hacking containers, sandboxes, and timeouts to keep agents alive, this single primitive is a relief.

2) Memory: short term and long term, managed

Agents need working memory for the current conversation and durable memory for long-running relationships. AgentCore Memory provides both without forcing you to stand up separate datastores and sync jobs. A sales operations agent can remember that an account prefers weekly rollups and that an ongoing forecast reconciliation is halfway done. That memory lets the agent pick up tasks where it left off and personalize behavior, while keeping storage and lifecycle under standardized controls.
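To make the lifecycle point concrete, here is a minimal sketch of a retention sweep over long-term memory records. It is not the AgentCore Memory API: `MemoryRecord`, `purge_expired`, and the 30-day default are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class MemoryRecord:
    """Hypothetical long-term memory entry; not an AgentCore type."""
    key: str
    value: str
    created_at: datetime


def purge_expired(records, now, max_age=timedelta(days=30)):
    """Return only the records that are no older than max_age at time `now`."""
    cutoff = now - max_age
    return [r for r in records if r.created_at >= cutoff]


# Fixed timestamp so the example is deterministic.
now = datetime(2025, 9, 1, tzinfo=timezone.utc)
records = [
    MemoryRecord("rollup_cadence", "weekly", now - timedelta(days=5)),
    MemoryRecord("old_forecast_note", "draft v1", now - timedelta(days=45)),
]
kept = purge_expired(records, now)
print([r.key for r in kept])
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the policy easy to test and audit.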

3) Identity: agents that act on behalf of real users, safely

AgentCore Identity integrates with existing identity providers like Microsoft Entra ID, Okta, or Amazon Cognito. You can let an agent act as the user or with its own service identity, then enforce least privilege through familiar policy. For example, a finance agent can pull a report from your data warehouse only if the signed-in analyst has access. It can request short-lived tokens when it needs to post an update to a ticketing system. The benefit is practical. You do not have to invent a parallel world of secrets and roles just for agents. You extend what you already run.
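The finance example boils down to checking the caller's permissions before the tool runs. The sketch below shows that check in plain Python. It does not use any AgentCore or identity-provider API: `Caller`, `run_tool_as`, and the `warehouse:read` scope are hypothetical stand-ins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Caller:
    """Hypothetical signed-in user plus the scopes their identity provider granted."""
    user_id: str
    scopes: frozenset


class PermissionDenied(Exception):
    """Raised when the caller lacks the scope a tool requires."""


def run_tool_as(caller, tool_name, required_scope, tool_fn, **kwargs):
    """Run tool_fn only if the caller holds required_scope; otherwise refuse."""
    if required_scope not in caller.scopes:
        raise PermissionDenied(f"{caller.user_id} lacks {required_scope} for {tool_name}")
    return tool_fn(**kwargs)


def fetch_report(report_id):
    # Stand-in for a warehouse query; returns canned data for the sketch.
    return {"report_id": report_id, "rows": 3}


analyst = Caller("analyst-1", frozenset({"warehouse:read"}))
intern = Caller("intern-7", frozenset())

result = run_tool_as(analyst, "fetch_report", "warehouse:read", fetch_report, report_id="q3")
try:
    run_tool_as(intern, "fetch_report", "warehouse:read", fetch_report, report_id="q3")
    denied = False
except PermissionDenied:
    denied = True
print(result, denied)
```

The agent never holds broader access than the person it is acting for, which is the property least privilege is meant to guarantee.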

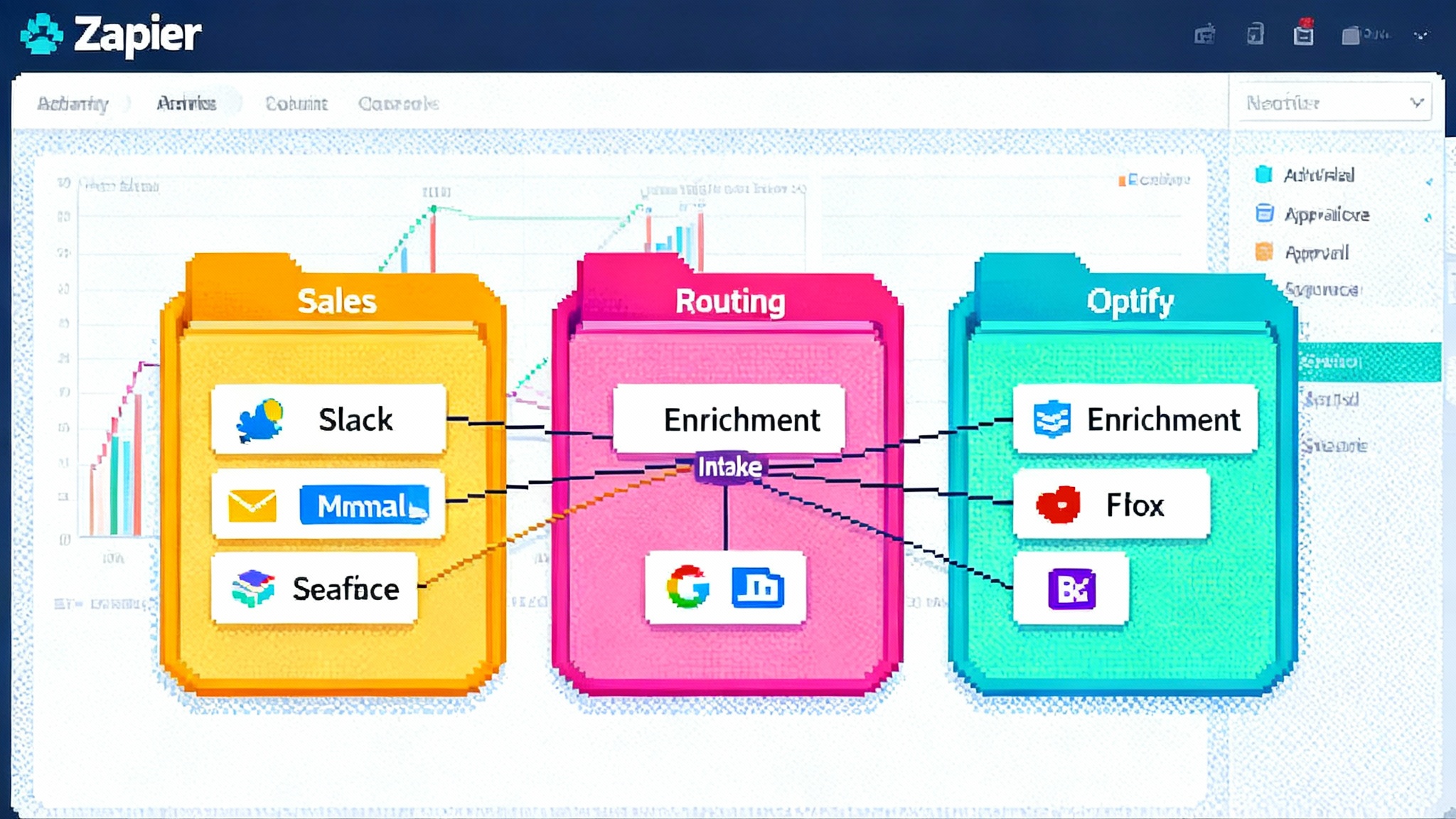

4) Gateway: MCP-native tool integration and service discovery

Tooling is where most prototypes stall. The Gateway turns existing APIs, Lambdas, and services into tools that agents can discover and call. Importantly, the Gateway is designed to be compatible with Model Context Protocol tools, which makes it easier to plug into the growing ecosystem of MCP servers. If your company already exposes a payment validation API and a shipment tracking service, you can list those as agent tools with minimal glue code. That turns integration from a bespoke project into a repeatable step.
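As a rough illustration of what "listing a service as a tool" involves, the sketch below keeps a tiny in-process registry. The descriptor shape (name, description, input schema) loosely follows how MCP describes tools, but this is not the Gateway API or an MCP server: `register_tool`, `list_tools`, `call_tool`, and `track_shipment` are all hypothetical.

```python
# Hypothetical in-process registry; a real gateway would sit behind a network API.
TOOLS = {}


def register_tool(name, description, input_schema, handler):
    """Record a tool descriptor (name, description, input schema) and its handler."""
    TOOLS[name] = {
        "name": name,
        "description": description,
        "inputSchema": input_schema,
        "handler": handler,
    }


def list_tools():
    """What an agent would see at discovery time: descriptors without handlers."""
    return [{k: v for k, v in t.items() if k != "handler"} for t in TOOLS.values()]


def call_tool(name, arguments):
    """Check required arguments against the schema, then invoke the handler."""
    tool = TOOLS[name]
    missing = [f for f in tool["inputSchema"].get("required", []) if f not in arguments]
    if missing:
        raise ValueError(f"missing arguments for {name}: {missing}")
    return tool["handler"](**arguments)


def track_shipment(tracking_id):
    # Stand-in for an existing shipment tracking service.
    return {"tracking_id": tracking_id, "status": "in_transit"}


register_tool(
    "track_shipment",
    "Look up the status of a shipment by tracking ID.",
    {
        "type": "object",
        "properties": {"tracking_id": {"type": "string"}},
        "required": ["tracking_id"],
    },
    track_shipment,
)

print(list_tools()[0]["name"])
print(call_tool("track_shipment", {"tracking_id": "ZX-100"}))
```

The existing service stays untouched. The only new artifact is the descriptor, which is what makes the step repeatable.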

5) Browser Tool: secure cloud browser for real web tasks

Many tasks still live behind web front ends, not clean APIs. The Browser Tool provides a cloud-hosted, isolated browser runtime that an agent can drive. That matters for partner portals, government sites, and legacy apps that will not get a modern interface anytime soon. The agent performs the clicks and form fills in a secure sandbox, not on a developer’s laptop. Operations teams get audit and control points instead of a swarm of headless browsers everywhere.

6) Code Interpreter: a safe sandbox for computational steps

Agents often need to transform data, run ad hoc calculations, or generate charts. Code Interpreter gives them a secure sandbox for those steps, with support for common languages. This is not a general shell with broad escape risk. It is a managed environment focused on targeted computation. A marketing analyst agent can join two datasets, calculate campaign lift, and emit a chart as an artifact, all without granting blanket workstation access.

7) Observability: traces, metrics, and OpenTelemetry compatibility

Production agents need the same visibility we expect from microservices. AgentCore Observability surfaces execution traces, key metrics, and dashboards powered by CloudWatch, and it is compatible with OpenTelemetry. You can instrument agent runs end to end, set alarms on failure rates or tool error spikes, and analyze tail latencies for different classes of tasks. When something goes wrong on a Saturday, the on-call engineer can find the problematic tool or prompt branch quickly instead of spelunking through ad hoc logs.
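Tail latency per task class is a simple computation once run durations are exported. The sketch below uses a nearest-rank 95th percentile over synthetic data. It stands in for what a metrics backend would compute and does not call CloudWatch or OpenTelemetry; the function names and task classes are hypothetical.

```python
from collections import defaultdict


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    # Integer ceiling of 0.95 * n, giving a 1-based rank without float rounding.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def tail_latency_by_class(runs):
    """Group (task_class, latency_ms) pairs and return the p95 per class."""
    grouped = defaultdict(list)
    for task_class, latency_ms in runs:
        grouped[task_class].append(latency_ms)
    return {task_class: p95(latencies) for task_class, latencies in grouped.items()}


# Synthetic data for illustration only.
runs = [("triage", ms) for ms in range(1, 101)]
runs += [("research", ms * 10) for ms in range(1, 21)]
print(tail_latency_by_class(runs))
```

Splitting by task class matters because a healthy average across all runs can hide one class whose slowest runs are getting worse.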

The sum of these primitives is not just convenience. It is an operational contract. Each one trades a pile of brittle glue code and implicit risk for a managed capability and measurable behavior. For specific definitions and the full service list, see the What is AgentCore section of the official AWS documentation.

The opening salvo in the agent runtime wars

AgentCore enters a crowded field. Cloud vendors and model providers are converging on the idea that agents need a proper runtime, not just a prompt and a loop. Microsoft is promoting an agent service inside Azure. Google is building on Vertex AI’s foundations. Model providers increasingly tout run isolation, tool catalogs, evaluation harnesses, and observability. Open communities push Model Context Protocol to standardize tools and connectivity. What makes this moment competitive is the pattern we saw with containers and serverless. Once operations concerns get formalized, ecosystems build around those contracts, and the platform that balances openness with operational excellence tends to win more workloads. For a broader view of how the supply chain is maturing, see how agent factories are arriving.

AWS’s angle is familiar. Bring the security model, the identity story, and the observability pipelines that operations teams already know. This does not eliminate lock-in risk or architecture tradeoffs, but it turns adoption into an incremental decision. Because AgentCore works with models outside Bedrock and with open frameworks, teams can hedge by keeping orchestration and modeling portable while betting on the runtime for scale and control.

What teams can ship in the next 90 days

Below are concrete projects that fit a 90-day window if you already have basic cloud guardrails in place. Each uses AgentCore’s primitives to cut risk and cycle time. The phases assume a conservative pace with security and measurement baked in.

Project 1: Support triage agent with human-in-the-loop closure

- Goal: Reduce median first response time and deflect level one tickets while preserving agent accountability.

- Architecture: Runtime for session isolation per conversation, Identity to act as the signed-in customer or support rep, Memory for conversation history and case context, Gateway to expose ticketing and knowledge base tools, Browser Tool for legacy partner portals where needed, Observability for traces and alerts.

- Day 0 to 30: Define two tools only: CreateTicket and RetrieveArticle. Wire them through Gateway with minimal transform code. Instrument traces and set alerting thresholds for tool errors above 2 percent and agent fallback above 15 percent. Pilot with internal staff.

- Day 31 to 60: Add EscalateWithSummary and UpdateTicket tools. Introduce a rule that any high-risk action requires human approval. De-bias prompts using seeded adversarial cases. Measure time to first response, intervention rate, and cost per resolved case.

- Day 61 to 90: Roll out to a small customer cohort behind feature flags. Add one Browser Tool flow for a specific partner portal. Tune memory retention policies to 30 days for privacy. Publish a playbook for on-call.

Expected outcome: 20 to 35 percent deflection on level one tickets, faster first responses, and traceable handoffs. The main blocker is often identity and policy mapping, which AgentCore Identity reduces by reusing your existing provider.
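The alerting thresholds from the first phase (tool errors above 2 percent, agent fallback above 15 percent) can be expressed as a small check over windowed counts. This is a sketch under assumptions: `WindowStats` and `breached_alerts` are hypothetical names, and in practice the same rule would more likely live in your alarm configuration than in application code.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Counts for one monitoring window; field names are hypothetical."""
    tool_calls: int
    tool_errors: int
    conversations: int
    fallbacks: int


def breached_alerts(stats, max_tool_error_rate=0.02, max_fallback_rate=0.15):
    """Return the names of alerts whose rate exceeds its threshold."""
    alerts = []
    if stats.tool_calls and stats.tool_errors / stats.tool_calls > max_tool_error_rate:
        alerts.append("tool_error_rate")
    if stats.conversations and stats.fallbacks / stats.conversations > max_fallback_rate:
        alerts.append("fallback_rate")
    return alerts


healthy = WindowStats(tool_calls=1000, tool_errors=10, conversations=200, fallbacks=20)
noisy = WindowStats(tool_calls=1000, tool_errors=35, conversations=200, fallbacks=40)
print(breached_alerts(healthy))
print(breached_alerts(noisy))
```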

Project 2: Revenue operations analyst that closes the loop

- Goal: Automate weekly pipeline rollups and variance analysis, then open tasks in the sales system for human follow-up.

- Architecture: Runtime for long runs up to several hours during quarter close, Code Interpreter for computations and chart artifacts, Memory for persistent account preferences and previous decisions, Gateway to expose your data warehouse view and the sales system’s task API, Observability for cost and latency tracking.

- Day 0 to 30: Implement two tools: FetchPipelineView and PostSalesTask. Use Code Interpreter to compute variance by segment and produce a PDF chart. Route outputs to an internal channel for review.

- Day 31 to 60: Add ApproveAndPost workflow with a human approval gate. Introduce rate limits at the Gateway so the agent cannot open more than N tasks per hour. Start measuring cost per run and the percentage of tasks accepted by managers.

- Day 61 to 90: Expand to three key regions and add memory-driven personalization, for example preferred rollup format per regional leader. Wire alerts for latency regressions and cost spikes. Harden with prompts that test edge cases like missing data and outliers.

Expected outcome: Less manual spreadsheet work, cleaner follow-through, and measurable cost per pipeline audit. The safe compute boundary of Code Interpreter keeps data transformations isolated and auditable.
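The "no more than N tasks per hour" rule from the second phase is a sliding-window rate limit. The sketch below shows the logic in isolation. It is not a Gateway feature or configuration: `SlidingWindowLimiter` is a hypothetical class, and timestamps are passed in as plain seconds so the behavior is deterministic.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `limit` actions in any `window_seconds` span."""

    def __init__(self, limit, window_seconds=3600.0):
        self.limit = limit
        self.window_seconds = window_seconds
        self._timestamps = deque()

    def allow(self, now):
        """Return True and record the action if under the limit at time `now` (seconds)."""
        # Drop recorded actions that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.limit:
            return False
        self._timestamps.append(now)
        return True


limiter = SlidingWindowLimiter(limit=3)
decisions = [limiter.allow(t) for t in (0, 10, 20, 30, 3601)]
print(decisions)
```

Rejected calls are not recorded, so a burst of denied attempts does not extend the lockout.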

Project 3: Vendor onboarding agent that spans web and APIs

- Goal: Shorten time to onboard new suppliers across a mix of modern APIs and legacy portals.

- Architecture: Runtime for multi-hour workflows, Identity to act on behalf of the procurement specialist with least privilege, Gateway to publish your internal Vendor API and DocCheck service as tools, Browser Tool for legacy portals, Memory to remember partial progress per vendor, Observability for run-by-run traces.

- Day 0 to 30: Publish three tools through Gateway: CreateVendor, UploadW9, RequestBankVerification. For portals, prototype one Browser Tool flow end to end. Instrument every step with spans and consistent attributes.

- Day 31 to 60: Add retry and backoff policies in prompts and tool wrappers. Introduce a pause-and-resume mechanism so long runs survive human approvals. Define service level objectives for completion time and success rate, then alert when tails exceed thresholds.

- Day 61 to 90: Expand to two additional portals. Add a compliance review step where the agent assembles an approval packet using Code Interpreter for formatting but not for calculations. Run a tabletop incident drill for failed verifications.

Expected outcome: Faster onboarding, fewer swivel-chair tasks, and a system you can operate like any other service.
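The retry and backoff policy from the second phase can live in a thin wrapper around each tool call. Here is a minimal sketch using exponential backoff with full jitter. `TransientError`, `call_with_backoff`, and `flaky_bank_verification` are hypothetical, and the delay values are placeholders to tune against your own services.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying, such as timeouts."""


def call_with_backoff(fn, max_attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call fn, retrying on TransientError with exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the failure to the caller.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))


attempts = {"count": 0}


def flaky_bank_verification():
    # Fails twice, then succeeds; stands in for a flaky downstream service.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("temporary failure")
    return "verified"


# Inject a no-op sleep so the example runs instantly.
result = call_with_backoff(flaky_bank_verification, sleep=lambda seconds: None)
print(result, attempts["count"])
```

Only errors marked as transient are retried. Anything else propagates immediately, so a bad request is never retried blindly.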

How to evaluate readiness, with a bias for action

- Define one success metric per agent. Examples: ticket deflection rate, cost per completed analysis, onboarding cycle time. Tie it to a threshold that is either maintained or improved by the agent.

- Adopt an approval and rollback pattern from day one. Treat risky actions as requests that humans approve. Store a clean, reconstructable log of the agent’s intent, tools called, parameters, and outputs. Your change-management team will thank you.

- Set a budget and track it as a first-class metric. Observability is not only for errors. Watch cost per run, tool call counts, and memory footprint. When any of them spikes, the traces show you where.

- Keep orchestration portable. Use your preferred framework and keep prompts and graphs in your own repositories. Let Runtime, Identity, Memory, and Observability handle the heavy lifting. This gives you a hedge without sacrificing pace.

- Establish privacy and retention defaults. Memory is powerful. Decide what is remembered and for how long. Make it discoverable for audits.
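The approval pattern and the reconstructable log from the list above fit in one small wrapper. The sketch below is one possible shape, not an AgentCore feature: `ApprovalGate`, the tool functions, and the always-decline approver are hypothetical.

```python
import json
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApprovalGate:
    """Hypothetical gate: risky tools wait for a human decision, every call is logged."""
    risky_tools: frozenset
    approve: Callable[[str, dict], bool]
    audit_log: list = field(default_factory=list)

    def execute(self, intent, tool_name, params, tool_fn):
        """Run tool_fn unless the tool is risky and the reviewer declines; log either way."""
        needs_approval = tool_name in self.risky_tools
        approved = self.approve(tool_name, params) if needs_approval else True
        output = tool_fn(**params) if approved else None
        self.audit_log.append(json.dumps({
            "intent": intent,
            "tool": tool_name,
            "params": params,
            "needs_approval": needs_approval,
            "approved": approved,
            "output": output,
        }, sort_keys=True))
        return output


def update_ticket(ticket_id, status):
    return {"ticket_id": ticket_id, "status": status}


def issue_refund(order_id, amount):
    return {"order_id": order_id, "refunded": amount}


# The approver here always declines; in practice this would route to a human reviewer.
gate = ApprovalGate(risky_tools=frozenset({"issue_refund"}), approve=lambda tool, params: False)
low_risk = gate.execute(
    "close resolved case", "update_ticket", {"ticket_id": "T-1", "status": "closed"}, update_ticket
)
high_risk = gate.execute(
    "refund duplicate charge", "issue_refund", {"order_id": "O-9", "amount": 40}, issue_refund
)
print(low_risk, high_risk, len(gate.audit_log))
```

Declined actions are logged alongside executed ones, so the record shows what the agent tried to do as well as what it did.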

For more on how enterprise stacks are consolidating around control planes and paved roads, cross-check our analysis in Agent factories are arriving and how browser-native agents are evolving in UiPath and OpenAI production agents.

Risks and tradeoffs you should name out loud

- Vendor concentration vs speed: AgentCore accelerates delivery by bundling primitives. The tradeoff is reliance on a single cloud for core runtime. Hedge by keeping framework code portable and by exposing your own services as Gateway tools using standard protocols like MCP.

- Browser automation scope: The Browser Tool unlocks real tasks on the web. It also introduces new surface area for brittle flows. Restrict it to well-defined paths. Add canaries that detect layout changes and fail closed.

- Memory governance: Long-lived memory improves outcomes and personalization but can drift into storing data that should be ephemeral. Enforce maximum retention and create explicit separate stores for regulated content.

- Human oversight fatigue: If you ask reviewers to click Approve on everything, they will approve everything. Focus your human gates on the 5 to 10 percent of steps that carry real risk or high blast radius.

What this means for the next year

Once a runtime standardizes isolation, identity, tools, and observability, the ecosystem professionalizes. Security teams can write policies against clear boundaries. Platform teams can provide paved roads. Vendors can ship MCP-compatible tools that plug into a common Gateway without bespoke integration for every customer. Buyers can evaluate agents using metrics rather than hype videos. This is how a field matures. We explored the momentum on the cloud side in Google’s big bet on AI agents, which complements the runtime-first approach in this piece.

Do not wait for a perfect standard. Pick one real workflow, publish two or three high-value tools through Gateway, turn on traces, and put an approval gate in front of risky steps. The point is not to find the fanciest agent. It is to ship a reliable one.

Bottom line

AgentCore is not exciting because it adds a new reasoning loop. It is exciting because it treats agents like software you can operate. That is the pivot from experimental frameworks to production ops. Whether your organization lands on AWS or another platform, the must-haves are now clear: a runtime with isolation and long runs, identity that maps to your real users and services, managed memory with explicit retention, secure built-in tools for the messy web and safe computation, and observability your on-call engineers can act on. The runtime wars will reward teams that master those primitives and ship working systems. Start small, measure, and make progress every week. By the time the smoke clears, the winners will be the people who built agents that did real work, day after day, without surprises.
