UiPath + OpenAI Put Computer-use Agents Into Production

Screen-driving agents just moved from demo to deployment. UiPath pairs OpenAI models with Maestro, guardrails, and real benchmarks so enterprises can orchestrate auditable, cross-vendor computer-use automation at scale.

From sizzle reels to service-level agreements

For years, computer-use agents looked magical in tightly edited videos. A cursor darted across a browser, filled a form, handled a pop-up, and shipped a result without a brittle integration in sight. The demos were real, yet few teams trusted them in production. What they lacked was not only a smarter model, but the boring essentials that make enterprise systems dependable: governance, orchestration, evaluation, and accountability.

That changed on September 30, 2025, when UiPath and OpenAI announced a joint push to bring frontier models and first-class computer-use capabilities into UiPath’s agentic automation stack. The promise is straightforward: agents that can literally use a computer are moving from proof of concept to governed, measurable operations. The announcement underscored shared benchmarking for computer-use tasks and end-to-end orchestration through Maestro. If you want the details, read the UiPath and OpenAI collaboration.

This article cuts through hype and focuses on the how. We outline practical guardrails, show how Maestro coordinates agents, robots, and people, describe benchmarks that reflect real UI work, and explain how interoperability standards reduce lock-in. We close with a six-to-twelve-month outlook, a credible ROI instrumentation model, compliance patterns that scale, and a 90-day plan teams can start on Monday.

What computer-use agents actually do at work

Think of a skilled temp who can read a short instruction, navigate a legacy claims screen, pull a PDF from an email, cross-check a record in a customer database, and file a ticket when a rule is violated. Computer-use agents are that temp, but tireless, instrumented, and auditable. In production, they routinely:

- Parse visual interfaces and dynamic layouts, not just structured APIs.

- Plan steps against a business goal, adapt when a button moves or a field label changes, and recover from routine faults like timeouts.

- Mix actions across systems that never had an integration budget, including browser apps, virtual desktops, Windows thick clients, and shared drives.

The difference between a demo and a deployment is control. Enterprises require the same safety and traceability from agents that they expect from human operators and classic robots.

Controlled agency with guardrails that operations trust

Controlled agency means the agent can act, but only inside defined boundaries. UiPath’s approach maps to patterns operations teams already use, which is why it lands well with risk and audit partners:

- Policy-scoped desktops. Restrict the agent to approved applications, windows, and domains. If it attempts to stray, the session halts and raises an alert.

- Role-based access and secrets. Use named, vault-managed credentials with least privilege. Retire shared passwords and local secrets entirely.

- Human-in-the-loop checkpoints. Route exceptions, approvals, and fraud flags to an approver queue. The agent pauses, presents screenshots and context, and resumes only after a decision. This keeps accountability for high-risk steps like vendor bank changes or claim denials.

- Safe tools and actions. Maintain allow-lists for actions such as file uploads, exports, or deletes. New tools go through standard change management, just like any capability.

- Deterministic replay and evidence. Store screenshots, cursor paths, and keystroke logs with timestamps so investigators can replay a task and see exactly what the agent saw and did.

None of these are flashy, yet together they unlock production value without letting autonomy outrun governance.
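To make these guardrails concrete, here is a minimal sketch of a policy check that runs before every agent action. It is not UiPath's API. `Policy`, `Action`, `Verdict`, the action kinds, and the approval threshold are hypothetical names chosen for illustration. Out-of-scope apps, domains, or actions halt the session. In-scope steps that are high-risk or high-value pause for a human decision.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"  # pause and route to the approver queue
    HALT = "halt"                      # stop the session and raise an alert


@dataclass(frozen=True)
class Action:
    app: str             # application or window the agent wants to touch
    domain: str          # web domain, or "" for desktop apps
    kind: str            # hypothetical action kinds: "click", "type", "upload", ...
    amount: float = 0.0  # business value at stake, used for approval thresholds


@dataclass
class Policy:
    allowed_apps: set[str]
    allowed_domains: set[str]
    allowed_actions: set[str]
    high_risk_actions: set[str] = field(default_factory=set)
    approval_threshold: float = float("inf")

    def check(self, action: Action) -> Verdict:
        # Anything outside the scoped desktop halts the session outright.
        if action.app not in self.allowed_apps:
            return Verdict.HALT
        if action.domain and action.domain not in self.allowed_domains:
            return Verdict.HALT
        if action.kind not in self.allowed_actions:
            return Verdict.HALT
        # In-scope but high-risk or high-value steps wait for a human decision.
        if action.kind in self.high_risk_actions or action.amount >= self.approval_threshold:
            return Verdict.NEEDS_APPROVAL
        return Verdict.ALLOW


policy = Policy(
    allowed_apps={"chrome", "claims_client"},
    allowed_domains={"portal.example.com"},
    allowed_actions={"click", "type", "upload", "change_bank_details"},
    high_risk_actions={"change_bank_details"},
    approval_threshold=10_000,
)

print(policy.check(Action("chrome", "portal.example.com", "click")))     # Verdict.ALLOW
print(policy.check(Action("chrome", "webmail.example.org", "click")))    # Verdict.HALT
print(policy.check(Action("claims_client", "", "change_bank_details")))  # Verdict.NEEDS_APPROVAL
```

Keeping the check a pure function of the action and the policy makes it easy to unit-test and to log alongside the session evidence.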

Maestro-level orchestration across agents, robots, and people

Most real processes are multi-actor. A triage agent classifies inbound emails, a computer-use agent extracts invoice data from a supplier portal, a classic robot posts to ERP, and a human approves exceptions. UiPath Maestro provides a single control surface to coordinate these handoffs while keeping the thread intact.

Key orchestration capabilities include:

- Routing and backpressure. Assign work by capacity, skills, and service levels. When queues spike, shed load to robots that handle structured subsets.

- Context-preserving handoffs. Pass session evidence, partial outputs, and risk labels between agents and humans so no one starts cold.

- Health, telemetry, and cost. Track run times, retries, model usage, escalations, and unit costs by process and participant.

- Change rollout. Promote a new agent plan or model version to a small ring, watch the right KPIs, then expand.
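The routing and backpressure rules can be sketched in a few lines. This illustrates the idea under stated assumptions and is not Maestro's scheduler. `Worker`, `WorkItem`, `route`, and the spike threshold are hypothetical. The rules work as follows:

- Items go to the least-loaded worker that has the right skill.
- Robots only take structured items.
- When the backlog spikes, structured items are shed to robots first.
- If no worker has room, the item stays queued, which is the backpressure.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class WorkItem:
    id: str
    skill: str
    structured: bool  # True if a classic robot can handle it deterministically


@dataclass
class Worker:
    name: str
    kind: str               # "agent", "robot", or "human"
    skills: frozenset[str]
    capacity: int           # max items in flight
    load: int = 0

    def can_take(self, item: WorkItem) -> bool:
        # Robots only take structured work; everyone needs the skill and spare capacity.
        return (
            item.skill in self.skills
            and self.load < self.capacity
            and (self.kind != "robot" or item.structured)
        )


def route(item: WorkItem, workers: list[Worker], backlog: int, spike_threshold: int) -> Worker | None:
    eligible = [w for w in workers if w.can_take(item)]
    if not eligible:
        return None  # leave the item queued: this is the backpressure
    if backlog > spike_threshold:
        robots = [w for w in eligible if w.kind == "robot"]
        eligible = robots or eligible  # shed load to robots when a spike hits
    chosen = min(eligible, key=lambda w: w.load / w.capacity)  # least-loaded first
    chosen.load += 1
    return chosen


def fleet() -> list[Worker]:
    return [
        Worker("cu-agent-1", "agent", frozenset({"invoice"}), capacity=2),
        Worker("robot-1", "robot", frozenset({"invoice"}), capacity=10),
    ]
```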

If you are already building an enterprise-wide control plane, see how this aligns with the rise of agent hubs as the control plane for AI work.

Benchmarks that finally matter for computer-use agents

Traditional model benchmarks seldom predict production outcomes for UI tasks. What matters is whether an agent completes business work on actual interfaces while staying inside policy. When you design evaluations, build them around the evidence your change control meetings already demand: pass rates, failure modes, and the triggers for rollback.

Measure these five dimensions:

- Task success under UI drift. Run the same task through minor UI changes. Score the percentage of hands-free completions.

- Step efficiency and correction rate. Compare planned steps to executed steps, measure detours and backtracks, and penalize silent failures.

- Recovery behavior. Inject routine faults like slow networks or modal pop-ups. Measure time to recovery, escalation rate, and data integrity.

- Compliance hygiene. Verify that mask rules held, restricted windows never came into focus, and redacted fields stayed redacted in evidence.

- Business-grounded outcomes. Tie runs to dollars and service levels. For example, straight-through rate for invoices, time-to-resolution for exceptions, and net working capital impact.

Publish dashboards weekly. If a model upgrade looks faster but increases recovery escalations, the dashboard should catch it before your auditors do.
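A weekly scorecard and a promotion gate could look like the sketch below. It assumes each evaluation run records whether UI drift or a fault was injected, whether the run finished hands-free or escalated, its planned and executed steps, and any policy violations. `Run`, `scorecard`, `regressions`, and the 0.02 tolerance are hypothetical. The usage reproduces the case above: a candidate that takes fewer steps but escalates more under injected faults gets flagged.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    ui_drift: bool              # scenario replayed against a slightly changed UI
    fault_injected: bool        # e.g. slow network or surprise modal pop-up
    completed_hands_free: bool  # finished without human help
    escalated: bool             # agent handed off to a human
    planned_steps: int
    executed_steps: int         # includes detours and backtracks
    policy_violations: int      # e.g. unmasked field in evidence, restricted window focused


def scorecard(runs: list[Run]) -> dict[str, float]:
    drift = [r for r in runs if r.ui_drift]
    faults = [r for r in runs if r.fault_injected]
    return {
        # Share of drifted-UI runs completed hands-free.
        "drift_success": sum(r.completed_hands_free for r in drift) / max(len(drift), 1),
        # 1.0 means no detours; lower means extra steps were taken.
        "step_efficiency": sum(r.planned_steps for r in runs)
        / max(sum(r.executed_steps for r in runs), 1),
        # Share of fault-injected runs that ended in an escalation.
        "recovery_escalation_rate": sum(r.escalated for r in faults) / max(len(faults), 1),
        "policy_violations": float(sum(r.policy_violations for r in runs)),
    }


def regressions(baseline: dict[str, float], candidate: dict[str, float], tol: float = 0.02) -> list[str]:
    """Reasons a candidate model or plan should not be promoted (empty list means pass)."""
    problems = []
    if candidate["drift_success"] < baseline["drift_success"] - tol:
        problems.append("drift_success dropped")
    if candidate["recovery_escalation_rate"] > baseline["recovery_escalation_rate"] + tol:
        problems.append("recovery_escalation_rate rose")
    if candidate["policy_violations"] > 0:
        problems.append("policy violations present")
    return problems


baseline_runs = [Run(True, False, True, False, 10, 12, 0), Run(False, True, True, False, 8, 8, 0)]
candidate_runs = [Run(True, False, True, False, 10, 10, 0), Run(False, True, False, True, 8, 8, 0)]
print(regressions(scorecard(baseline_runs), scorecard(candidate_runs)))  # ['recovery_escalation_rate rose']
```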

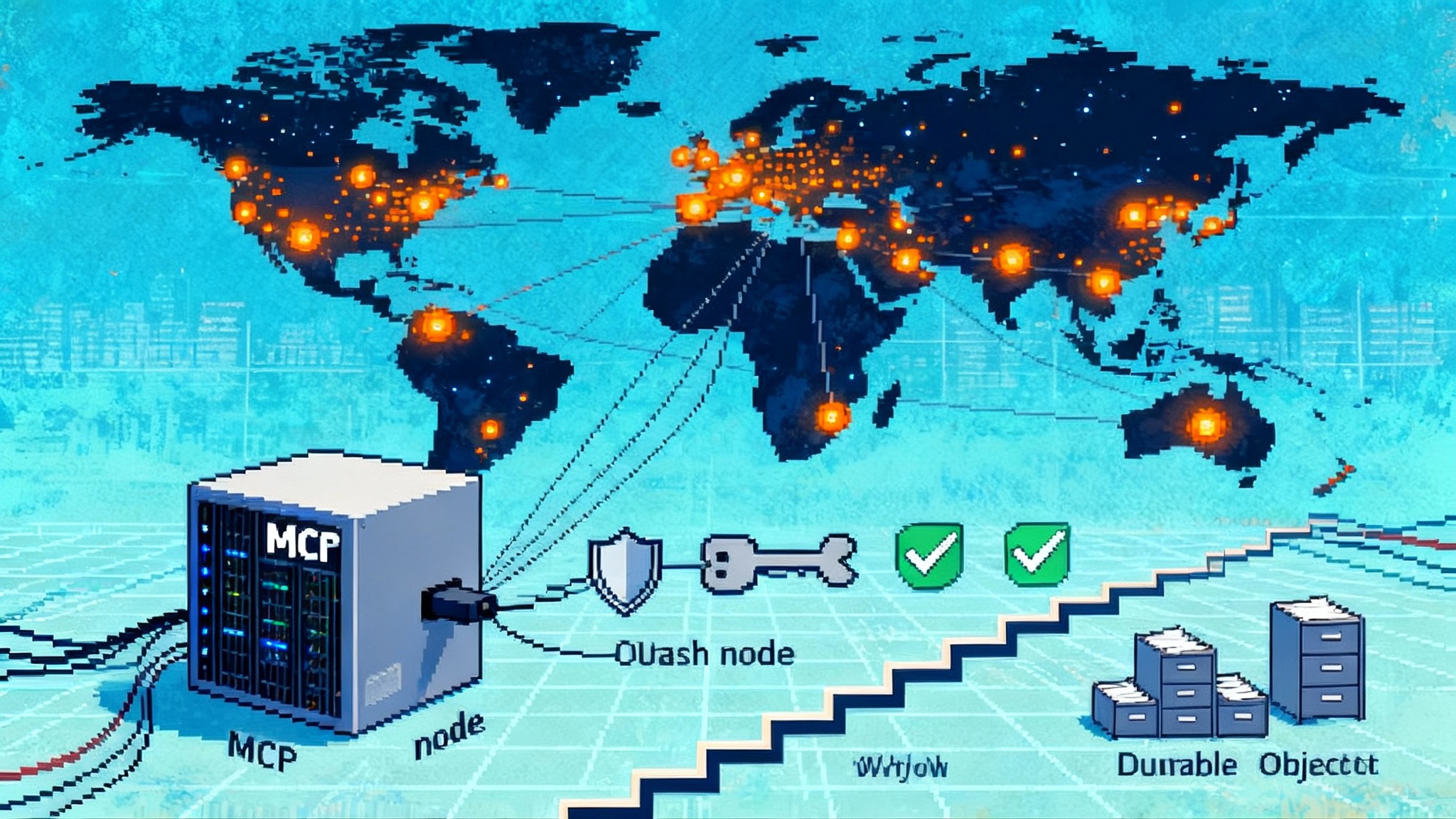

Interoperability that reduces vendor lock-in

Large organizations already run fleets of agents and copilots from different vendors. Interoperability is how you avoid single-stack monocultures and keep a healthy build-versus-buy mix.

Two standards matter most right now:

- Model Context Protocol. A standardized way for models to discover and invoke approved tools and data. With MCP, you add capabilities without bespoke connectors while improving governability. For a complementary view on where MCP is heading, read about remote MCP at the edge.

- Agent2Agent protocol. A cross-vendor messaging layer so agents can exchange goals, state, and results with security and observability. Microsoft is bringing A2A to Azure AI Foundry and Copilot Studio. For a deeper look, see Microsoft's Agent2Agent protocol overview.

Together, these mean your chat assistant can delegate a screen task to a UiPath agent, or a UiPath workflow can ask a partner domain agent to plan a subtask, all with consistent audit trails. The result is less lock-in and more optionality.
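Much of MCP's governance value comes from a single catalog of tools that can be listed and called. The sketch below is loosely modeled on the shape of an MCP tool listing, which has a `name`, a `description`, and a JSON Schema `inputSchema`. It is not the MCP SDK or wire protocol. `GovernedToolRegistry` and the vendor tools are hypothetical. The model can only discover and call allow-listed tools, and every call lands in an audit log.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]  # JSON Schema describing the arguments
    handler: Callable[..., Any]


class GovernedToolRegistry:
    """Hypothetical gateway: exposes only approved tools and records every call."""

    def __init__(self, allow_list: set[str]) -> None:
        self._tools: dict[str, Tool] = {}
        self._allow = allow_list
        self.audit_log: list[tuple[str, dict[str, Any]]] = []

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> list[dict[str, Any]]:
        # The model only ever "discovers" allow-listed tools.
        return [
            {"name": t.name, "description": t.description, "inputSchema": t.input_schema}
            for t in self._tools.values()
            if t.name in self._allow
        ]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name not in self._allow or name not in self._tools:
            raise PermissionError(f"tool {name!r} is not an approved tool")
        tool = self._tools[name]
        # Minimal validation: only checks the schema's "required" keys.
        missing = [k for k in tool.input_schema.get("required", []) if k not in arguments]
        if missing:
            raise ValueError(f"missing arguments: {missing}")
        self.audit_log.append((name, dict(arguments)))
        return tool.handler(**arguments)


vendor_schema = {"type": "object", "properties": {"vendor_id": {"type": "string"}}, "required": ["vendor_id"]}
registry = GovernedToolRegistry(allow_list={"lookup_vendor"})
registry.register(Tool("lookup_vendor", "Fetch a vendor profile by ID", vendor_schema,
                       lambda vendor_id: {"id": vendor_id, "status": "active"}))
registry.register(Tool("delete_vendor", "Remove a vendor profile", vendor_schema,
                       lambda vendor_id: None))
```

New tools enter by two routes. Registering a tool makes it available to the gateway. Adding it to the allow-list makes it visible to the model. Tying the second step to change management matches the "safe tools and actions" guardrail.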

What is actually new in the UiPath plus OpenAI pairing

Beyond access to frontier models, three practical advances stand out:

- A ChatGPT connector for process owners. Teams can invoke UiPath-run automations and agentic workflows from ChatGPT Enterprise, where business users already live, without context switching.

- A shared evaluation harness for computer-use tasks. Teams can compare models and plans across enterprise-relevant scenarios rather than synthetic tests.

- Maestro orchestration across stacks. UiPath agents, OpenAI-powered agents, and third-party actors are coordinated to optimize end-to-end outcomes, not just local steps.

Taken together, this is a path from research lab to change board, with evidence that satisfies engineering, operations, and audit.

Where computer-use agents will land first

Expect adoption to concentrate where user interfaces are stable, rules are explicit, and work is already centralized in shared services. Early beachheads include:

- Order-to-cash. Customer onboarding, portal-based credit checks, invoice matching, and statement reconciliation.

- Claims intake and adjudication. Capturing evidence from email and portals, populating claims systems, and launching straight-through paths when confidence is high.

- Financial close and controls. Fetching supporting documents from banking portals, compiling tie-outs, and submitting journal entries with approvals.

- Customer support triage. Reading tickets and attachments, retrieving entitlements from legacy tools, and filing precise internal tasks with context.

- Vendor management. Screening suppliers on public sites, capturing certificates, updating vendor profiles, and routing exceptions.

These domains share three traits: predictable UIs, clear unit economics, and established audit expectations. That is where dependable agents thrive.

How to instrument ROI so finance signs off

ROI stories collapse when the math is fuzzy. Use a simple, credible model and keep it stable from baseline through rollout.

- Define the unit of work. Pick an atomic task and normalize it. One invoice line item posted. One claim registered. One vendor update completed.

- Measure time and quality. Capture hands-on minutes, elapsed wait minutes, rework rate, and exception rate before and after. Include recovery minutes when an agent escalates mid-task.

- Translate to unit cost and outcome. Convert minutes into fully loaded labor and platform costs. Include model usage, desktop infrastructure, orchestration overhead, and approval time. Report dollar savings together with cycle-time deltas.

Run a four-week baseline, then instrument pilots with the same counters. Maintain a unit-cost control chart so you can see drift and seasonality. Tie results to working capital or service-level penalties where possible, since those move executive priorities.
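Here is the unit-cost model above as a sketch, together with an XmR (individuals and moving range) control chart for weekly unit cost. The field names and every dollar figure in the usage are hypothetical; substitute your own baseline. Sigma is estimated as the average moving range divided by 1.128, the standard constant for an XmR chart.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class UnitCostInputs:
    hands_on_minutes: float      # human touch time per unit, incl. recovery after escalations
    approval_minutes: float      # reviewer time per unit
    loaded_rate_per_hour: float  # fully loaded labor cost
    model_cost: float            # model usage per unit
    infra_cost: float            # desktop infrastructure per unit
    orchestration_cost: float    # orchestration overhead per unit


def unit_cost(x: UnitCostInputs) -> float:
    labor = (x.hands_on_minutes + x.approval_minutes) * x.loaded_rate_per_hour / 60
    return labor + x.model_cost + x.infra_cost + x.orchestration_cost


def xmr_limits(values: list[float]) -> tuple[float, float, float]:
    """Lower limit, center line, upper limit for an individuals (XmR) chart."""
    center = mean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma = mean(moving_ranges) / 1.128  # d2 constant for moving ranges of size 2
    return center - 3 * sigma, center, center + 3 * sigma


# Hypothetical figures for one invoice line item posted.
baseline = UnitCostInputs(12, 0, 60, 0, 0, 0)
pilot = UnitCostInputs(2, 1, 60, model_cost=0.40, infra_cost=0.25, orchestration_cost=0.10)
savings_per_unit = unit_cost(baseline) - unit_cost(pilot)  # 12.00 - 3.75 = 8.25

weekly_pilot_costs = [3.75, 3.80, 3.70, 3.90, 3.85]
lower, center, upper = xmr_limits(weekly_pilot_costs)
out_of_control = [c for c in weekly_pilot_costs if not lower <= c <= upper]
```

A week outside the limits is a signal to investigate, for example a UI change driving up escalations. A point inside the limits is normal week-to-week variation.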

Compliance and audit patterns that scale past pilots

Auditors will ask five questions: who acted, what changed, under which authority, with what evidence, and how do you know the evidence is complete. Build these patterns early:

- Identity and authorization. Issue named, non-human identities for agents. Map privileges to job functions, not individuals. Rotate credentials on a schedule and on incident.

- Evidence and lineage. Store tamper-evident session logs with signed hashes. Keep step-level screenshots and typed keystrokes. Capture model version, tool versions, and prompts used. Maintain a linkable chain from each business record to its evidence bundle.

- Segregation of duties. Ensure the same agent cannot both create and approve a high-risk change. Use human approvals for policy thresholds.

- Data minimization. Mask sensitive fields in the viewport and logs. Apply allow-lists for data export. Prove masking held by sampling evidence.

- Change control and rollback. Treat agent plan updates and model swaps like code releases. Stage them, record evaluations, and keep rollback packages.

- Red-team the agent. Before production, run adversarial tests for prompt injection, overlay attacks, and malicious pop-ups. Document findings and the mitigations or regression tests that cover them.

Do this, and quarterly audits become routine rather than disruptive.
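A common way to make session logs tamper-evident is to chain each entry to the previous one and sign it with a key the agent process cannot read. The sketch uses HMAC-SHA256 from Python's standard library. `EvidenceLog` and the step fields (action, field, masked value, screenshot hash, model version) are hypothetical.

Editing, reordering, or removing an entry from the middle breaks verification. Cutting entries off the end, however, leaves a valid shorter chain. Answering "is the evidence complete" therefore also requires anchoring the latest signature somewhere else, such as on the business record the task produced.

```python
from __future__ import annotations

import hashlib
import hmac
import json
from dataclasses import dataclass, field


@dataclass
class EvidenceLog:
    """Hash-chained, HMAC-signed step log (hypothetical sketch)."""

    key: bytes  # assumption: a vault-managed key the agent cannot read
    entries: list[dict] = field(default_factory=list)

    def _sign(self, body: dict) -> str:
        payload = json.dumps(body, sort_keys=True).encode()
        return hmac.new(self.key, payload, hashlib.sha256).hexdigest()

    def append(self, step: dict) -> dict:
        prev = self.entries[-1]["sig"] if self.entries else "genesis"
        body = {"seq": len(self.entries), "prev": prev, "step": step}
        entry = {**body, "sig": self._sign(body)}
        self.entries.append(entry)
        return entry

    def head(self) -> str:
        # Store this with the business record so truncation becomes detectable.
        return self.entries[-1]["sig"] if self.entries else "genesis"

    def verify(self) -> bool:
        prev = "genesis"
        for i, entry in enumerate(self.entries):
            if entry["seq"] != i or entry["prev"] != prev:
                return False
            body = {"seq": entry["seq"], "prev": entry["prev"], "step": entry["step"]}
            if not hmac.compare_digest(entry["sig"], self._sign(body)):
                return False
            prev = entry["sig"]
        return True


log = EvidenceLog(key=b"replace-with-a-vault-managed-key")
log.append({"action": "focus", "window": "claims_client", "model_version": "model-v1"})
log.append({"action": "type", "field": "iban", "value": "****", "screenshot_sha256": "ab12"})
log.append({"action": "click", "target": "Submit", "screenshot_sha256": "cd34"})
```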

A 90-day plan from pilot to production

You can move fast without being reckless. Here is a practical plan used by teams that ship value while controls harden.

- Map and measure the as-is process. Capture units of work, time, exceptions, and system touchpoints. Record baseline evidence on a representative sample.

- Define guardrails. Enumerate allowed apps and sites, define masking rules, set approval thresholds, and assign named non-human identities.

- Build the evaluation suite. Capture 20 to 50 realistic scenarios with seeded edge cases. Decide pass-fail thresholds and error taxonomies before you start.

- Develop the agent plan. Use UiPath’s agent builder with OpenAI models where they shine. Keep classic robots for structured sub-steps and cheap throughput.

- Orchestrate with Maestro. Wire handoffs between agents, robots, and human approvers. Route work by skills and SLAs. Turn on dashboards for success rate, recovery, and cost per unit.

- Ringed rollout. Start with a single team and shift only 20 percent of the volume. Expand rings weekly as metrics hold. Keep rollback packages warmed.

- Weekly governance. Review exceptions, false positives, evidence samples, and unit cost. Ship one improvement per week. Document the change log.

This sequence gets real work done while building confidence, a combination that sponsors and auditors recognize as sound.
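The ringed rollout step can be expressed as a weekly decision rule. The first ring, 20 percent of one team's volume, comes from the plan above. The later ring shares, the tolerances, and the names `WeekMetrics` and `ring_decision` are hypothetical. A sharp drop in success triggers the warmed rollback package. Metrics that hold against baseline promote the change one ring. Anything in between holds the current ring for another week.

```python
from __future__ import annotations

from dataclasses import dataclass

# First ring from the plan above; later shares are illustrative assumptions.
RING_SHARES = [0.20, 0.50, 1.00]


@dataclass(frozen=True)
class WeekMetrics:
    success_rate: float     # hands-free completions / attempts
    escalation_rate: float  # escalations / attempts
    unit_cost: float        # fully loaded cost per unit of work


def ring_decision(ring: int, week: WeekMetrics, baseline: WeekMetrics,
                  tol: float = 0.02, rollback_drop: float = 0.10) -> tuple[str, int]:
    """Return ("promote" | "hold" | "rollback", ring index for next week)."""
    if week.success_rate < baseline.success_rate - rollback_drop:
        # Revert to the warmed rollback package; the fixed candidate restarts at ring 0.
        return "rollback", 0
    holds = (
        week.success_rate >= baseline.success_rate - tol
        and week.escalation_rate <= baseline.escalation_rate + tol
        and week.unit_cost <= baseline.unit_cost
    )
    if holds and ring + 1 < len(RING_SHARES):
        return "promote", ring + 1
    return "hold", ring
```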

Pitfalls to avoid

Even strong teams stumble on avoidable mistakes. Watch for these patterns:

- Over-permissive desktops. Letting an agent roam across the enterprise is fast and dangerous. Start with a narrow allow-list and widen by exception.

- Silent failures. A computer-use agent that clicks the wrong button without escalating can be worse than no automation. Make recovery metrics first-class.

- Update storms. When a target app ships a redesign, your task success can crater. Use canary rings and keep a hotfix plan ready.

- Evidence gaps. If you cannot replay a session, you cannot prove compliance. Treat evidence capture as a product feature.

- Vendor monoculture. A single stack can be efficient, but interoperability is your hedge. MCP and A2A are practical tools to keep options open.
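One practical defense against silent failures is to pair every UI action with a postcondition that re-reads the screen or the system of record. When the expected effect is missing, the agent escalates instead of continuing. `Step`, `run_with_verification`, and `Escalation` are hypothetical names. In a real agent, `postcondition` would inspect the UI or query the target system rather than a local dictionary.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


class Escalation(Exception):
    """Raised so the orchestrator routes the task to a human instead of continuing."""


@dataclass
class Step:
    name: str
    act: Callable[[], None]            # performs the UI action
    postcondition: Callable[[], bool]  # confirms the intended effect actually happened


def run_with_verification(steps: list[Step], retries: int = 1) -> list[str]:
    done = []
    for step in steps:
        for _ in range(retries + 1):
            step.act()
            if step.postcondition():
                done.append(step.name)
                break
        else:
            # Every attempt ran but none produced the expected effect: fail loudly.
            raise Escalation(f"step {step.name!r} did not produce its expected effect")
    return done
```

Retries only suit idempotent steps such as filling a field. A step like submitting a payment should run with `retries=0`, so a missing confirmation goes straight to a human rather than risking a double submission.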

How this fits the broader agent ecosystem

UiPath’s Maestro and guardrail model are part of a larger shift where orchestration becomes the enterprise nerve center for AI work. If you are mapping that landscape, read about agent hubs as the control plane and how AgentKit as an agent OS is shaping developer ergonomics and tool discovery.

The bottom line

Agents that can use a computer were the last mile between large models and everyday business work. With UiPath and OpenAI aligning on benchmarks, orchestration, and guardrails, that mile is finally paved. The advantage now goes to leaders who deploy with discipline. Keep your guardrails tight, use ringed rollouts, instrument honest evaluations, and design for interoperability from day one. The next time someone shows you a flashy demo, ask two questions: when does this go live, and what will the cost per unit be when it does?

Quick checklist you can share with stakeholders

- Clear unit of work defined and baselined

- Policy-scoped desktop and least-privilege credentials

- Evidence capture with deterministic replay

- Maestro routing with backpressure rules

- Weekly dashboards for success, recovery, and unit cost

- Evaluation suite for UI drift and routine faults

- Ringed rollout plan with fast rollback

- Interop strategy for MCP and A2A

- Governance cadence with change logs and approvals

Ship this list with your proposal and you will shorten the time from pilot to production.

One final note on standards and timing

Standards mature unevenly. MCP will broaden tool discovery and policy controls, while A2A will normalize secure, observable agent-to-agent messaging across vendors. The UiPath and OpenAI announcement on September 30, 2025, is important because it couples those trends with enterprise-grade orchestration and evidence. That mix is what it takes to move from slideware to sustained value.