GitLab Duo Agents Move From Chat to Commits and Pipelines

GitLab’s Duo Agent Platform elevates AI from chat to code by creating real branches and commits that run through policy-aware pipelines. Learn how flows, rich project context, and the Model Context Protocol (MCP) help agents ship trustworthy changes inside GitLab.

Breaking: agents that finally ship code

GitLab has turned a corner in how artificial intelligence shows up in day-to-day software work. With the public beta of the Duo Agent Platform, agents stop being a side chat and start participating in real code changes, reviews, and pipeline decisions. GitLab announced the beta on July 17, 2025, along with a plan to iterate toward general availability later in 2025. You can scan the specifics in GitLab’s own public beta announcement.

If you have been experimenting with code bots that answer questions but do not change code, this step feels significant. GitLab is not positioning Duo as a chat widget. It is building an agent layer inside the system of record for software: the place that already tracks issues, code, tests, pipelines, security findings, and compliance status.

From chatty assistants to code-changing agents

The biggest difference between a chat assistant and the Duo Agent Platform is authority. A chat assistant suggests. An agent platform plans, acts, and leaves artifacts that other people can review. In GitLab’s case, those artifacts are branches, commits, and merge requests with linked issues, test results, and security scan outcomes. That matters because it puts the agent on the same playing field as every other contributor. The output is not a paste in chat. It is a diff with tests attached.

GitLab approaches this with two complementary experiences:

- An IDE experience for Visual Studio Code and JetBrains IDEs where you can direct agents with natural language, inject context from files and issues, and delegate tasks that range from small edits to multi-file refactors.

- A GitLab web experience where agents can run flows that operate directly on your projects and pipelines.

Together they form a path from a question to a merge request without leaving the tools you already use.

How IDE-native flows turn goals into merge requests

Think of a flow as a small team in a box. You give the team a goal, like migrating a Jenkins configuration to GitLab CI/CD, and the team divides and conquers. One member pulls context on the current repository and pipeline. Another drafts the target .gitlab-ci.yml. Another updates documentation, adds tests, or opens necessary issues. The flow keeps a shared plan, asks you clarifying questions when needed, and produces a merge request that links back to the reasoning and any open decisions.

Flows exist in the editor and in the web interface. In the editor, you can kick off a flow from within the codebase, review proposed changes file by file, and approve or revise them. In the web interface, flows run in GitLab CI/CD, which means the work is visible to teammates, policies apply, and results are recorded. Because both experiences are backed by the same orchestration layer, a flow started in the editor can be continued in the browser and vice versa.

A few concrete examples show why this matters:

- Converting a Jenkinsfile to a GitLab pipeline definition. The flow inventories the current jobs, maps them to equivalent GitLab stages, and generates a first pass with comments for anything that requires a project-specific decision. It runs the pipeline in a branch, catches failures, and suggests fixes.

- Turning an issue into a merge request. The flow reads the issue, finds related code and tests, scaffolds a branch, and proposes a minimal change with a checklist that links back to the acceptance criteria. It does not bypass review; it accelerates getting to a reviewable diff.

- Repairing a broken pipeline. The flow reads failing job logs, compares them with a recent successful run, and proposes a fix that adjusts a job script or a dependency version. It runs the fix and attaches the results to the merge request.
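To make the first example more concrete, here is a minimal sketch of the stage-mapping step of a Jenkinsfile conversion. It assumes the Jenkins stages have already been extracted into names and shell commands (parsing a real Jenkinsfile is out of scope), and the JenkinsStage, convert, and NEEDS_DECISION names are hypothetical, not part of GitLab Duo. Each Jenkins stage becomes one GitLab job in a stage of the same name, and Jenkins-specific steps are flagged for a human decision instead of being translated.

```python
import json
from dataclasses import dataclass


@dataclass
class JenkinsStage:
    """A Jenkins stage reduced to its name and the shell commands from its sh steps."""
    name: str
    steps: list[str]


# Illustrative, not exhaustive: Jenkins-only steps that need a project-specific decision.
NEEDS_DECISION = ("withCredentials", "input", "stash", "unstash", "archiveArtifacts")


def convert(stages: list[JenkinsStage]) -> tuple[dict, list[str]]:
    """Map each Jenkins stage to one GitLab CI job and collect items for human review."""
    config: dict = {"stages": []}
    todos: list[str] = []
    for stage in stages:
        key = stage.name.lower().replace(" ", "-")
        config["stages"].append(key)
        script = []
        for step in stage.steps:
            if step.startswith(NEEDS_DECISION):
                todos.append(f"{key}: decide how to replace '{step}'")
            else:
                script.append(step)
        # GitLab CI jobs need a script, so leave a visible placeholder if nothing mapped.
        config[f"{key}-job"] = {"stage": key, "script": script or ["echo 'TODO: fill in'"]}
    return config, todos


if __name__ == "__main__":
    config, todos = convert([
        JenkinsStage("Build", ["make build"]),
        JenkinsStage("Deploy", ["withCredentials([...]) { ... }", "./deploy.sh"]),
    ])
    # A real flow would write YAML; JSON keeps this sketch in the standard library.
    print(json.dumps(config, indent=2))
    print(todos)
```

Keeping unmapped steps in a separate list mirrors the flow behavior described above: the agent produces a first pass and leaves explicit markers wherever a project-specific decision is still open.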

The key ingredient in all three is a plan that the agent writes down and follows. That plan is visible to you. You can interrupt it, provide hints, or add constraints. This is a subtle but important usability shift. Instead of agents acting like opaque autocompletes, they behave like junior teammates who narrate what they are doing.
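One way to picture a plan that is visible and interruptible is as a small data structure that the agent narrates and humans can edit. The sketch below is a hypothetical model, not GitLab’s internal representation: Plan, Step, advance, interrupt, and add_constraint are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    description: str
    done: bool = False


@dataclass
class Plan:
    goal: str
    steps: list[Step]
    constraints: list[str] = field(default_factory=list)
    paused: bool = False

    def narrate(self) -> str:
        """Render the plan as a checklist a reviewer can read at any point."""
        lines = [f"Goal: {self.goal}"]
        for number, step in enumerate(self.steps, start=1):
            mark = "x" if step.done else " "
            lines.append(f"[{mark}] {number}. {step.description}")
        lines.extend(f"Constraint: {c}" for c in self.constraints)
        return "\n".join(lines)

    def add_constraint(self, text: str) -> None:
        """A human hint or limit that applies to every remaining step."""
        self.constraints.append(text)

    def interrupt(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def advance(self) -> Optional[Step]:
        """Complete the next pending step, unless a human has paused the plan."""
        if self.paused:
            return None
        for step in self.steps:
            if not step.done:
                step.done = True
                return step
        return None
```

In a real agent, advance would perform the work for that step while respecting the recorded constraints; here it only marks progress, which is enough to show why a written plan gives reviewers a place to intervene.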

Project-aware context: why the system of record matters

Large language models are pattern engines. They get smarter with better instructions, but they become useful with better context. GitLab’s advantage is the depth of project context it can safely expose to agents. An agent within GitLab can see how your team names branches, how you structure directories, which linters you enforce, which license policies exist, and where secret detection has found problems. It can see which merge requests were reverted and why. It can detect that a service is containerized, that the team uses deployment approvals, and that a compliance framework applies to the group.

This is not generic coding. It is a personalized view of how your organization ships software. That is what turns an edit into an acceptable edit. It is also what allows an agent to suggest test updates in the style your team expects and to open issues with the right labels and templates.
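As a small illustration of turning project context into an acceptable edit, the sketch below infers a team’s dominant branch prefix from existing branch names and uses it when proposing a new branch. The helper names and the slug format are assumptions for illustration; a real agent would read branches through GitLab rather than from a hard-coded list.

```python
import re
from collections import Counter
from typing import Optional


def dominant_branch_prefix(branches: list[str], separator: str = "/") -> Optional[str]:
    """Return the most common prefix before the separator, e.g. 'feat' in 'feat/12-login'."""
    prefixes = [b.split(separator, 1)[0] for b in branches if separator in b]
    if not prefixes:
        return None
    return Counter(prefixes).most_common(1)[0][0]


def propose_branch_name(issue_iid: int, title: str, branches: list[str]) -> str:
    """Follow the team's existing convention, falling back to a generic prefix."""
    prefix = dominant_branch_prefix(branches) or "feature"
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")[:40].rstrip("-")
    return f"{prefix}/{issue_iid}-{slug}"


if __name__ == "__main__":
    existing = ["feat/12-login", "feat/13-logout", "fix/14-typo", "main"]
    print(propose_branch_name(42, "Add rate limiting!", existing))  # prints feat/42-add-rate-limiting
```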

Two parts of the design make this feel natural in practice:

- A lightweight language for delegation. GitLab includes slash commands such as /explain, /tests, and /include. You can use /include to attach a specific file and issue, and the agent will ground itself in the exact materials you want it to use. That removes guesswork and reduces hallucination risk.

- Stateful collaboration. Agents in the editor and in the web interface remember the thread of the work, including partial attempts and your corrections. That memory gives you leverage. You can correct once and have that correction propagate across the plan.
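The grounding idea behind /include can be sketched as: collect only the items the developer named, and refuse to proceed if any of them cannot be found, rather than letting the model guess. The command syntax below (file:<path> and issue:#<iid>) is invented for this example and is not GitLab’s actual /include interface; parse_include and build_context are hypothetical helpers.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    kind: str  # "file" or "issue"
    ref: str   # a path for files, an internal ID for issues


# Hypothetical syntax for this sketch only: "/include file:<path> issue:#<iid>"
_TOKEN = re.compile(r"\bfile:(\S+)|\bissue:#(\d+)")


def parse_include(command: str) -> list[ContextItem]:
    """Turn an include command into an explicit list of context items."""
    if not command.startswith("/include"):
        return []
    items = []
    for match in _TOKEN.finditer(command):
        path, issue = match.groups()
        items.append(ContextItem("file", path) if path else ContextItem("issue", issue))
    return items


def build_context(items: list[ContextItem], available: dict[ContextItem, str]) -> str:
    """Concatenate only the named materials; fail loudly instead of guessing."""
    missing = [item for item in items if item not in available]
    if missing:
        raise KeyError(f"cannot ground on missing items: {missing}")
    return "\n\n".join(f"[{i.kind} {i.ref}]\n{available[i]}" for i in items)
```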

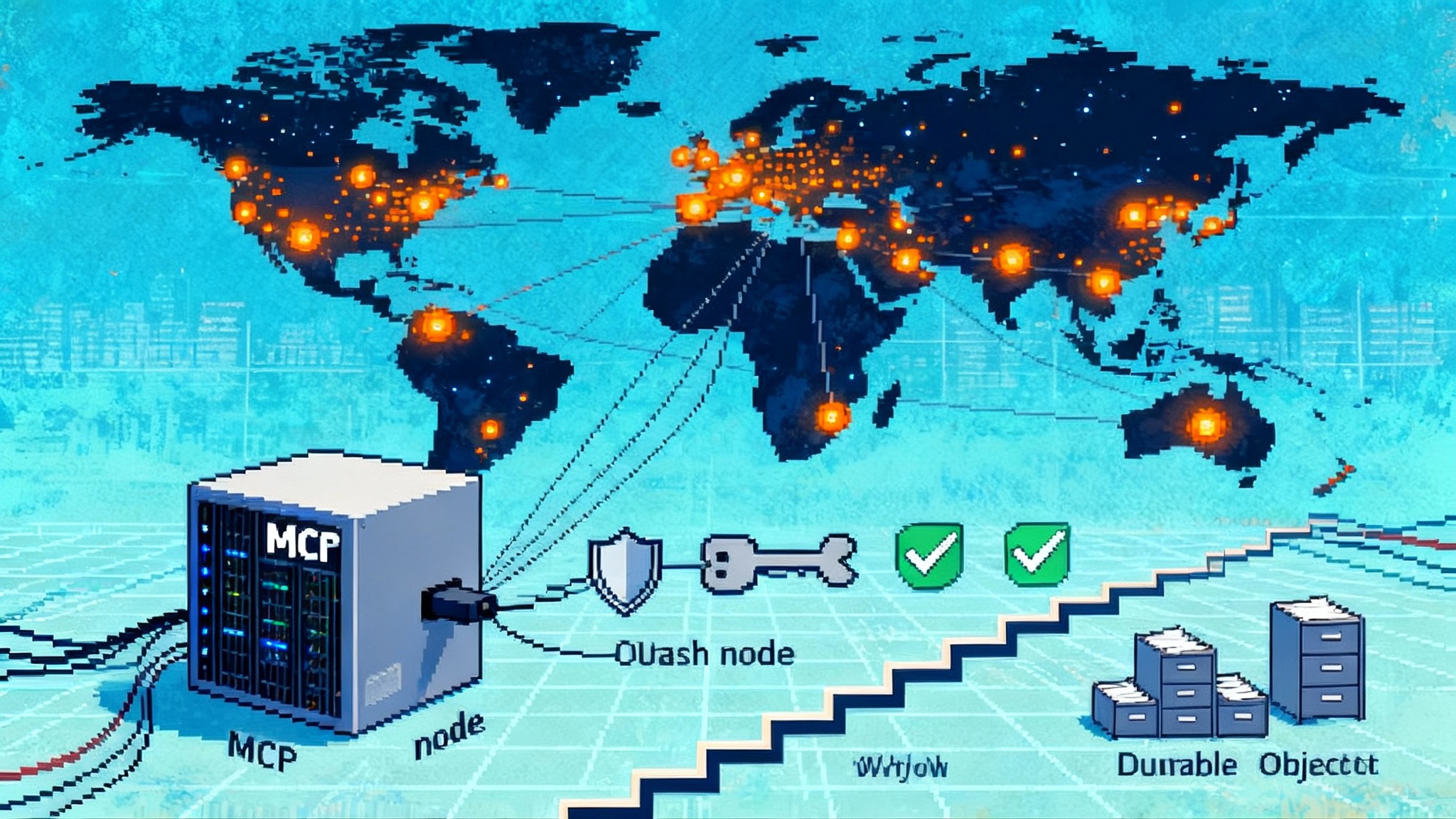

Model Context Protocol connects tools, securely by design

No single platform contains everything a developer needs. Design lives in a design tool. Customer tickets live in a support system. Incidents live in a pager system. GitLab’s Model Context Protocol support acknowledges that reality. Model Context Protocol is an open standard that lets assistants and tools exchange structured context and actions through a common interface. In GitLab, that means agents can connect to external data or tools when they need to, and it means other assistants can connect to GitLab as a server to get project context without scraping. The company’s docs explain both roles and the security model that wraps them in scopes, authentication, and explicit authorization prompts. For specifics, see the Model Context Protocol documentation.

This is more than plumbing. It gives teams practical choices. For example, you can let Duo act as a Model Context Protocol client to call a documentation search service your team already trusts. Or you can run GitLab as a Model Context Protocol server so that another agent, like a deployment copilot or a support triage assistant, can access issues, merge requests, and pipeline status through a controlled channel. Either way, it keeps tools loosely coupled and traceable.
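Under the hood, Model Context Protocol messages are JSON-RPC 2.0, and the specification defines a tools/call method whose params carry the tool name and its arguments. The sketch below builds such a request and enforces a team allowlist before anything is sent. The tool names are hypothetical, and transport, authentication, and the initialization handshake are deliberately omitted.

```python
import itertools
import json

# Hypothetical tools your team has reviewed and approved for agent use.
ALLOWED_TOOLS = {"search_docs", "get_issue"}
_request_ids = itertools.count(1)


def make_tool_call(tool: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 tools/call request, refusing tools outside the allowlist."""
    if tool not in ALLOWED_TOOLS:
        raise PermissionError(f"tool '{tool}' is not on the allowlist")
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(request)
```

A client-side allowlist like this complements, rather than replaces, the scopes, authentication, and authorization prompts that GitLab’s security model applies.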

From pipelines to policies: agents inside CI and compliance

Agents that act must obey the same rules as people. GitLab’s approach is to put agents inside the same CI/CD framework and attach the same policies. When a flow runs in the web interface, it is subject to project and group policies for approvals, protected branches, and merge request rules. If your organization centralizes security policies, the same policy checks apply to agent-generated changes. This is what enables safe autonomy. The agent can propose changes, run tests, and even trigger deployment previews, but the merge still goes through the checks your compliance team expects.

This matters in regulated environments. Many teams ask whether agents will sneak around controls. The design answer is no. The controls are the path. An agent proposes a change, pipelines run, compliance checks run, and the merge request records why a change passed. If a policy requires a human approval from a code owner or a security reviewer, that approval is still required. The difference is the speed at which a high-quality, policy-aware proposal can be produced.
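The point that the controls are the path can be expressed as a single merge check that never asks whether the author is a human or an agent. This is a simplified, hypothetical model of approval rules, pipeline requirements, and security policies, not GitLab’s actual implementation; the field names and the duo-agent account name are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class MergeRequest:
    author: str
    pipeline_status: str             # e.g. "success" or "failed"
    blocking_security_findings: int  # findings a security policy says must block
    approvals: set[str] = field(default_factory=set)


def can_merge(mr: MergeRequest, code_owners: set[str], required_owner_approvals: int = 1) -> tuple[bool, list[str]]:
    """Apply the same gates to every merge request, whoever (or whatever) wrote it."""
    reasons = []
    if mr.pipeline_status != "success":
        reasons.append("pipeline has not succeeded")
    if mr.blocking_security_findings > 0:
        reasons.append("security policy blocks unresolved findings")
    # In this model, authors cannot approve their own change, so an agent cannot self-approve.
    owner_approvals = (mr.approvals & code_owners) - {mr.author}
    if len(owner_approvals) < required_owner_approvals:
        reasons.append(f"needs {required_owner_approvals} code owner approval(s)")
    return not reasons, reasons
```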

A concrete example helps. Consider a library with a critical vulnerability. Today a developer might read the advisory, search the repo, estimate the blast radius, bump the version, update code that relies on old behavior, run tests, and write a mitigation note. With Duo’s agent layer, you can assign this as a goal. The agent scans for occurrences, opens a branch, bumps the version, updates code, runs the pipeline, and attaches a short response plan for runtime mitigation. Security policies still enforce required approvals. The output is a merge request that a human can review with far less manual setup.
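The version-bump step in that scenario is mechanical enough to sketch. The example assumes a Python requirements file with exact == pins; the package name and versions are placeholders, not a real advisory. Real package-name matching is looser than this (PyPI treats names case-insensitively and treats hyphens, underscores, and periods as equivalent), and updating dependent code and running the pipeline remain separate steps.

```python
import re


def bump_pin(requirements: str, package: str, fixed_version: str) -> tuple[str, int]:
    """Rewrite 'package==old' pins to 'package==fixed_version'; return new text and count."""
    pattern = re.compile(
        rf"^({re.escape(package)})==([^\s#;]+)",
        re.IGNORECASE | re.MULTILINE,
    )
    # \g<1> keeps the package name exactly as it was written in the file.
    return pattern.subn(rf"\g<1>=={fixed_version}", requirements)
```

Returning the replacement count lets the agent report how many pins it touched, and a count of zero is a useful signal that the dependency is declared somewhere this simple pattern does not cover.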

What to try first: a three-step field guide

If you are on GitLab Premium or Ultimate and have Duo features enabled, you can run a realistic experiment in a single afternoon.

- Install the editor extension and enable agentic chat. Start small. Ask the agent to inventory your repository. Use /include to add context from a problem issue and a failing test. Watch how the agent cites what it is reading and how it asks for clarification when the goal is ambiguous.

- Run an editor flow. Pick the Jenkinsfile-to-GitLab conversion or a pipeline repair flow. Give the agent explicit constraints, for example: do not change any database migrations, and keep all changes behind a feature flag. Measure how many manual steps the flow removes and where it still needs your guidance.

- Move the work to the web interface. Trigger the same flow in the browser so it runs against your project policies. Review the merge request, the test results, and the security findings in one place. Decide what guardrails you want to enforce before you allow the agent to propose changes on more repositories.
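Constraints like “do not change any database migrations” are most useful when they are checked mechanically as well as stated in the prompt. A minimal sketch, assuming constraints are written as glob patterns over changed file paths; the patterns and rule text are hypothetical and should follow your own repository layout.

```python
from fnmatch import fnmatchcase

# Hypothetical constraint set; adjust the globs to your repository layout.
FORBIDDEN = {
    "do not change any database migrations": ["db/migrate/*", "*/migrations/*"],
}


def violations(changed_paths: list[str], forbidden: dict[str, list[str]] = FORBIDDEN) -> list[tuple[str, str]]:
    """Return (rule, path) pairs for every changed file that breaks a stated constraint."""
    found = []
    for rule, patterns in forbidden.items():
        for path in changed_paths:
            # fnmatch's '*' also matches '/', so these globs cover nested directories.
            if any(fnmatchcase(path, pattern) for pattern in patterns):
                found.append((rule, path))
    return found
```

Running a check like this as a pipeline job turns a prompt-level instruction into a visible failure when the agent drifts, which is easier to track than rereading every diff.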

Along the way, write down the delegation patterns that fit your team. A small vocabulary of reusable prompts, such as “prefer pure functions when refactoring” or “use parameterized tests for new cases,” turns the agent into a better teammate.

Comparisons and where this fits in the ecosystem

GitLab’s approach puts the agent inside the system of record. That makes it comparable to broader enterprise patterns we have covered, like building an enterprise agent control plane to govern who can call what tools and under which policies. It also rhymes with cloud-delivered orchestration models where vendors provide agent catalogs and guardrails, as seen in our AWS Agents Marketplace comparison. And for teams that prefer to assemble their own stack around programmable assistants, GitLab’s flows echo the programmable agent OS pattern, but with the important twist that the output lands in merge requests with tests and policy checks attached.

The result is less about novelty and more about fit. If your delivery system already lives in GitLab, the Duo Agent Platform reduces integration tax. If it does not, the Model Context Protocol support gives you a path to connect other tools without lifting the gates on permissions or flooding logs with untraceable actions.

Risks, guardrails, and the new developer ergonomics

Agents with write access are powerful, and that power requires deliberate guardrails. Treat agent permissions the way you treat a new teammate on day one. Start with a narrow scope. Use protected branches. Require approvals. Monitor token scopes for agent workflows, and be explicit about which Model Context Protocol tools an agent is allowed to call. Keep secrets out of freeform prompts, and rely on built-in context injection rather than copy-pasting credentials.
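Keeping secrets out of freeform prompts can be backed by a check that runs before anything is sent to a model. The sketch below uses two well-known token shapes as illustrative patterns, GitLab personal access tokens (which carry the glpat- prefix by default) and AWS access key IDs; a production setup should rely on a maintained secret detection ruleset rather than this short list.

```python
import re

# Illustrative token shapes only; use a maintained secret detection ruleset in practice.
SECRET_PATTERNS = {
    "gitlab_pat": re.compile(r"glpat-[0-9A-Za-z_\-]{20,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace anything that looks like a credential and report which kinds were found."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        prompt, count = pattern.subn(f"[REDACTED:{name}]", prompt)
        if count:
            hits.append(name)
    return prompt, hits
```

If hits is non-empty, the safer default is to block the request and tell the developer, rather than silently sending the redacted text.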

Then focus on the developer experience. The most common failure mode for early agent pilots is frustration. The plan is unclear, logs are noisy, and the agent either asks too many questions or too few. Put a human in the loop to coach the agent. Encourage short, concrete goals. Reward the agent when it follows the plan you prefer by merging those changes faster. Over a few weeks you will find a delegation style that feels natural to your team.

A practical checklist for pilot teams:

- Define a narrow set of repositories where the agent can open branches.

- Require two approvals on agent-generated merge requests for the first month.

- Use code owners to route reviews to the right humans.

- Configure pipeline policies to block merges on failing security scans.

- Track a small set of metrics: time from task creation to merge request, number of review cycles, and percentage of merges that pass on the first try.
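The three pilot metrics are simple to compute once each agent-generated merge request is logged with a few timestamps and counts. This sketch assumes you record those fields yourself (the AgentMR record is hypothetical) and interprets “passes on the first try” as the first pipeline run on the merge request succeeding.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class AgentMR:
    task_created: datetime
    mr_opened: datetime
    review_cycles: int
    passed_first_pipeline: bool


def pilot_metrics(mrs: list[AgentMR]) -> dict[str, float]:
    """Summarize a pilot with the three metrics from the checklist."""
    if not mrs:
        raise ValueError("no merge requests recorded yet")
    hours = [(m.mr_opened - m.task_created).total_seconds() / 3600 for m in mrs]
    return {
        "median_hours_task_to_mr": median(hours),
        "mean_review_cycles": sum(m.review_cycles for m in mrs) / len(mrs),
        "first_try_pass_pct": 100 * sum(m.passed_first_pipeline for m in mrs) / len(mrs),
    }
```

The median is used for lead time so that a single stalled task does not dominate the number during a short pilot.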

The 2025 path to general availability

As of October 2025, GitLab has said it will expand the platform in monthly 18.x releases and target general availability later in 2025. Expect more flows, deeper integration in the browser, and broader editor support. Expect more control for administrators over which tools an agent can call through Model Context Protocol and how policies apply at the group or instance level. The through line is not more chat. It is more ways for agents to participate in real work where the project lives.

For organizations evaluating agents across vendors, this is the comparison to make. Does the agent sit outside your delivery system, or does it live inside your system of record where it can see the rules and be held to them? GitLab’s bet is that the latter unlocks speed without sacrificing control.

What this changes for platform, security, and compliance teams

- Platform engineering. The agent layer gives you a programmable way to roll out standards. Instead of a wiki page that explains how to migrate pipelines, you can publish a flow that does the migration, records the deltas, and opens follow-up issues. This converts a best practice into an executable practice.

- Security. Agents help you shift left in a practical way. Vulnerability explanation and remediation can be pulled into the merge request, and policy enforcement stays intact. You get fewer unreviewed fixes and more reviewed changes with tests.

- Compliance. Instead of a parallel compliance pipeline, you get policy driven enforcement across the same pipelines that developers use. Agents do not get a side door. They pass through the same controls, which makes audits easier. The audit trail is the merge request.

The implication is that teams can raise the floor on quality without slowing down. When a new rule arrives, you encode it in a flow or a policy and let the agent apply it across repositories. Humans review exceptions and tricky edge cases.
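Encoding a rule once and sweeping it across repositories can be sketched as a loop that turns each violation into either a proposed fix or an exception for humans. The rule here (container images must not be untagged or use :latest) and the approved pins are hypothetical examples, and each repository is reduced to a mapping of job names to images rather than a parsed .gitlab-ci.yml.

```python
# Hypothetical team-approved replacements, keyed by the unpinned image reference.
APPROVED_PINS = {"python": "python:3.12-slim", "node": "node:20-alpine"}


def is_unpinned(image: str) -> bool:
    """True for images with no tag or the :latest tag (the rule being enforced)."""
    last = image.rsplit("/", 1)[-1]  # ignore any registry host:port when looking for a tag
    return ":" not in last or last.endswith(":latest")


def sweep(repos: dict[str, dict[str, str]]) -> tuple[dict[str, dict[str, str]], list[tuple[str, str, str]]]:
    """repos maps repository -> {job: image}; return proposed fixes and human exceptions."""
    proposals: dict[str, dict[str, str]] = {}
    exceptions: list[tuple[str, str, str]] = []
    for repo, jobs in repos.items():
        for job, image in jobs.items():
            if not is_unpinned(image):
                continue
            base = image.removesuffix(":latest")
            pinned = APPROVED_PINS.get(base)
            if pinned:
                proposals.setdefault(repo, {})[job] = pinned
            else:
                exceptions.append((repo, job, image))
    return proposals, exceptions
```

In a flow, each entry in proposals would become a merge request that goes through the usual pipeline and approvals, and the exceptions list is the short queue of edge cases left for humans.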

Frequently asked questions teams raise

Will agents change code contrary to our standards? Not if you codify standards in policies and linters. The platform routes agent work through the same CI jobs and merge request approvals that apply to humans.

Can the agent touch production? Treat an agent’s production access the way you treat a person’s. Keep deployment permissions separate and require approvals. The agent can stage changes and request deployment, but your rules decide what ships.

What about secrets and privacy? Favor built-in context injection over copy-and-paste prompts. Audit token scopes, and restrict Model Context Protocol tools to those you trust.

How much oversight is required? Early on, plan for higher oversight while you learn where the agent overperforms or underperforms. Over time, shrink the review surface to riskier areas and let the agent take faster paths for low-risk fixes.
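Shrinking the review surface can start as a simple routing rule: changes that touch risky paths or exceed a size threshold get full review, and everything else takes a lighter path. The globs and the 200-line threshold below are hypothetical starting points, not GitLab defaults; inside GitLab you would more likely express the same idea through code owners and approval rules.

```python
from fnmatch import fnmatchcase

# Hypothetical high-risk areas; '*' in fnmatch also matches '/'.
HIGH_RISK_GLOBS = ["db/migrate/*", "*.gitlab-ci.yml", "infra/*", "*auth*"]
MAX_FAST_PATH_LINES = 200


def review_route(changed_paths: list[str], lines_changed: int) -> tuple[str, list[str]]:
    """Return the review route and the paths that triggered full review, if any."""
    risky = [p for p in changed_paths if any(fnmatchcase(p, g) for g in HIGH_RISK_GLOBS)]
    if risky or lines_changed > MAX_FAST_PATH_LINES:
        return "full review", risky
    return "fast path", []
```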

Bottom line

The Duo Agent Platform points to a near future where multi-agent teams and human teams work side by side inside the same project, the same pipelines, and the same policies. Flows turn intentions into steps. Project context makes those steps acceptable to your team. Model Context Protocol connections bring in the outside world without handing over the keys. If your goal is to ship secure software faster, put an agent to work where the work already happens, then measure how much time moves from setup to review. When the agent lives in the system of record, you are not just chatting. You are shipping.