Border Protocols for AI: Labs Turn Into Micro Sovereigns

On October 6 and 7, 2025, OpenAI paired its new AgentKit with a public threat report, signaling a shift from research alignment to operational governance with real borders, rules, and auditable controls.

The week AI learned to build borders

On October 6, 2025, OpenAI launched AgentKit, a toolkit for building, evaluating, and deploying agents with guardrails and a connector registry. It arrived alongside new ways to embed chat experiences and enterprise controls. The next day, October 7, OpenAI published a public threat report cataloging disruptions of malicious networks and the enforcement mechanics behind them. Read together, these moves mark a turn from research alignment to something more concrete: operational governance.

The shift makes sense when you think in the language of borders. AgentKit and its surrounding components are the customs desks for data and tools, the patrol officers that spot abuse, and the administrative law that tells models how to behave. Research alignment asks what a model ought to do in theory. Operational governance decides who is allowed to cross, what they can carry, and how disputes get resolved at scale.

Why this felt like a border moment

- Customs for data and tools now live in product. A connector to a file store or an accounting system is an import lane that needs manifests, permissions, and inspection rules.

- Policing functions have public teeth. Threat intelligence, reason codes, and account level enforcement are being surfaced, not just implied.

- Administrative law is becoming machine readable. Policies, evals, and guardrails form a practical rulebook for behavior rather than a white paper alone.

Two announcements crystallized that pivot. The product launch on October 6 established a programmable border, documented in OpenAI's "Introducing AgentKit" announcement. The enforcement narrative on October 7 showed the patrol function in action, summarized in OpenAI's October threat report. Put simply, tools and rules now ship together.

Customs for the model economy

Start with the customs desk because that is where most developer experience now sits.

Manifests and connectors

A connector registry is more than a convenience. It is a declaration form. When an enterprise admin enables a connector, they are declaring a shipment of data classes and tool scopes. A good manifest states what is being imported, the intended use, any retention rules, and how access is revoked. In practice, that means:

- Scoping fields like customer identifiers, financial records, or internal code snippets.

- Enumerating tool calls that the agent is allowed to make, such as sending emails, creating tickets, or posting payments.

- Defining rate limits and time to live so that access does not quietly become permanent.
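
To make the declaration form concrete, here is a minimal sketch of a manifest as a data structure. The `ConnectorManifest` class and every field name are hypothetical, chosen to mirror the items listed above; none of this is taken from AgentKit or any published schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ConnectorManifest:
    """Hypothetical declaration form for one connector (not a real AgentKit type)."""
    connector: str
    data_classes: frozenset[str]    # what is being imported
    allowed_tools: frozenset[str]   # tool calls the agent may make
    intended_use: str
    retention_days: int
    max_calls_per_minute: int
    issued_at: datetime
    ttl: timedelta                  # access lapses unless renewed

    def expires_at(self) -> datetime:
        return self.issued_at + self.ttl

    def permits(self, tool: str, data_class: str, now: datetime) -> bool:
        """Allow a call only if it is in scope and the manifest is still live."""
        return (
            now < self.expires_at()
            and tool in self.allowed_tools
            and data_class in self.data_classes
        )


issued = datetime(2025, 10, 6, tzinfo=timezone.utc)
manifest = ConnectorManifest(
    connector="accounting-system",
    data_classes=frozenset({"financial_records"}),
    allowed_tools=frozenset({"create_ticket", "send_email"}),
    intended_use="month-end reconciliation",
    retention_days=30,
    max_calls_per_minute=60,
    issued_at=issued,
    ttl=timedelta(days=90),
)
```

With this manifest, a `post_payment` call is refused because it was never declared, and every call is refused once the 90 day time to live has passed. The expiry check is what keeps access from quietly becoming permanent.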

This mindset dovetails with our argument that assistants are the new platform gateways. If distribution and data routing move into agents, the control plane must follow. For a deeper platform view, see how assistants as native gateways create new policy choke points that matter for both speed and safety.

App review as import licensing

The new app model converts conversational actions into mini applications that live in a marketplace. To get listed, a developer follows content standards, privacy disclosures, and design checks. That is import licensing by another name. A clean review process brings two benefits:

- Predictable time to market because the checklist is known upfront.

- Fewer incidents in production because risky patterns are flagged before release.

Cargo scanning and preflight checks

Before an agent touches sensitive content, it should pass through detectors for personal data exposure, jailbreak patterns, and unsafe operations. That is cargo scanning. You get a green lane if you pass, an inspection queue if you trip a rule, and a hard stop if you violate a bright line policy. The result is faster shipping for known safe classes and clearer remediation for the rest.
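
The three lanes can be sketched as a single preflight function. This is an illustration under loud assumptions: the regular expressions, the `BRIGHT_LINE_TOOLS` set, and the reason strings are invented for the example, and real detectors would be far more robust than two patterns.

```python
import re
from enum import Enum


class Lane(Enum):
    GREEN = "green"      # passed every check
    INSPECT = "inspect"  # tripped a rule, queue for review
    STOP = "stop"        # bright line violation


# Illustrative patterns only (assumptions, not production detectors).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
JAILBREAK = re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)
BRIGHT_LINE_TOOLS = {"wire_funds_unapproved"}  # hypothetical tool name


def preflight(text: str, requested_tool: str) -> tuple[Lane, list[str]]:
    """Route a request to a lane and return the reasons that put it there."""
    if requested_tool in BRIGHT_LINE_TOOLS:
        return Lane.STOP, ["bright_line_tool"]
    reasons = []
    if EMAIL.search(text):
        reasons.append("possible_personal_data")
    if JAILBREAK.search(text):
        reasons.append("jailbreak_pattern")
    return (Lane.INSPECT if reasons else Lane.GREEN), reasons
```

Returning the reasons alongside the lane is the design choice that matters here. It is what turns an inspection queue into clear remediation rather than an unexplained delay.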

With these ingredients, the border stack looks familiar: registry, review, scanning, and audit trail. The message to builders is clear. You can ship faster if you treat data access and tool use like import flows with manifests and receipts. The message to buyers is even clearer. You will get switchboard control over what enters your environment and logs you can hand to an auditor.

Police and public intelligence

If customs handles intake, policing handles misuse. OpenAI’s report on October 7 offered three signals.

- The lab is willing to describe attacker tactics at a level that helps the ecosystem without publishing a cookbook.

- Enforcement is account based and policy bound, not vibe based. Reason codes and pattern descriptions make the rules legible.

- The lab coordinates with peers and, where appropriate, public bodies. That is how you get containment within hours rather than weeks.

That posture matters for market confidence. Enterprises want to know that if a botnet starts laundering prompts through public endpoints, someone is on duty, alarms will fire, and cross platform containment can happen quickly. The report also offered a useful boundary claim: most threat actors seem to bolt models onto old playbooks to move faster rather than inventing wholly new exploits. That sets expectations without either dismissing risk or overstating novelty.

Administrative law for behavior

The third pillar is administrative law. Models already operate under behavior policies. Labs publish usage rules, prohibited content lists, and allowed use carveouts. Evals turn those texts into testable criteria. Guardrails encode them into machine checks. Reinforcement tuning adjusts behavior based on graded outcomes.

This is how modern administrative states work. Legislatures set high level mandates. Agencies write and enforce rules that make daily life legible. In this analogy, lab policies are the rules, evals are the hearing record, guardrails are the inspectors, and model updates are amended rulings. The open questions are legibility and portability:

- Legibility is the difference between a policy that reads well and a policy that can be inspected by someone other than the author.

- Portability is the difference between a developer writing one set of tests that works across labs and a developer maintaining bespoke logic everywhere.

These questions connect directly to compliance. If models shape decisions that touch regulated data or consumer outcomes, we will need auditable change logs and repeatable tests. For a complementary perspective, see why enterprises are asking for auditable model 10-Ks that describe behavior, risk controls, and incident history with financial grade rigor.

Why the pivot matters now

Research alignment is necessary. It asks what good behavior is in general. Operational governance is different. It defines jurisdiction and enforces rules while products ship. That creates new power centers, new responsibilities, and new pressure to interoperate.

- Power centers. Whoever controls the connector registry and app directory controls distribution and access. That gate decides what tools models can use and under what terms.

- Responsibilities. If you run the border, you must staff it. That means abuse detection, incident response, and clear appeal paths when developers feel their app was misclassified or throttled.

- Interoperability. The more your borders matter, the more other actors will demand standards for crossing them. That is how the web converged on transport security, cookie rules, and spam filters.

A concrete proposal for Border APIs

Acceleration without hand waving requires shared plumbing. Here is a pragmatic blueprint for a cross lab Border API. It does not require pausing feature work. It simply turns policies you already maintain into interfaces others can read.

Customs endpoints

- Declare. A developer or enterprise posts a connector or app manifest that lists data classes, tools, scopes, time to live, and intended model calls.

- Inspect. The platform returns a machine readable checklist of required guardrails, rate limits, and human review steps for the declared scopes.

- Approve. The platform issues a signed token with the final scope, expirations, and audit hooks.

- Revoke. The platform can invalidate the token with a reason code and remediation steps.
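
The four customs steps above can be sketched as plain functions. Everything here is an assumption made for illustration: the function names, the checklist items, the token layout, and the use of a shared HMAC key. A real platform would more likely use asymmetric signatures and managed keys.

```python
import base64
import hashlib
import hmac
import json

SECRET = b"demo-signing-key"   # placeholder key for the sketch only
REVOKED: dict[str, str] = {}   # token id -> reason code


def inspect(manifest: dict) -> list[str]:
    """Return the checklist required for the declared scopes."""
    checklist = ["rate_limit"]
    if "financial_records" in manifest["data_classes"]:
        checklist.append("human_review")
    if "send_email" in manifest["tools"]:
        checklist.append("user_approval_step")
    return checklist


def approve(token_id: str, manifest: dict, expires_at: int) -> str:
    """Issue a signed token carrying the final scope and expiry."""
    payload = json.dumps(
        {"id": token_id, "scope": manifest, "exp": expires_at}, sort_keys=True
    ).encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + sig


def revoke(token_id: str, reason_code: str) -> None:
    """Invalidate a token and record why."""
    REVOKED[token_id] = reason_code


def check(token: str, now: int) -> tuple[bool, str]:
    """Verify signature, revocation, and expiry; return (ok, reason)."""
    encoded, _, sig = token.rpartition(".")
    payload = base64.urlsafe_b64decode(encoded)
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return False, "bad_signature"
    claims = json.loads(payload)
    if claims["id"] in REVOKED:
        return False, REVOKED[claims["id"]]
    if now >= claims["exp"]:
        return False, "expired"
    return True, "ok"


# Declare: the manifest a developer would post (hypothetical shape).
manifest = {"data_classes": ["financial_records"], "tools": ["create_ticket"]}
token = approve("tok-1", manifest, expires_at=1_800_000_000)
```

After `revoke("tok-1", "scope_misuse")`, `check` fails and returns the reason code instead of a bare denial, which is the behavior the Revoke step calls for.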

Police endpoints

- Alert. The lab emits near real time events for abuse detections with privacy safe identifiers and reason codes.

- Contain. Enterprise customers can push backpressure signals or request temporary blocks based on their own risk posture.

- Report. The lab publishes signed quarterly reports with case categories, response times, and enforcement counts. These should be readable by humans and digestible by machines.

- Appeal. Developers and enterprises submit structured appeals with evidence. Labs return status updates with clear timers and outcomes.
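
As a rough sketch of the Alert and Appeal shapes, the code below models an abuse event with a privacy safe identifier and an appeal with a visible timer. The class names, the status values, and the five day review window are all assumptions. A truncated salted hash is also only a stand-in for whatever pseudonymization a lab would actually adopt.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


def privacy_safe_id(account_id: str, salt: str) -> str:
    """Salted hash so consumers can correlate events without seeing raw IDs."""
    return hashlib.sha256((salt + ":" + account_id).encode()).hexdigest()[:16]


@dataclass
class AbuseAlert:
    subject: str           # privacy safe identifier
    reason_code: str       # e.g. "prompt_laundering" (illustrative)
    detected_at: datetime


@dataclass
class Appeal:
    alert: AbuseAlert
    evidence: list[str]
    filed_at: datetime
    review_window: timedelta = timedelta(days=5)   # assumed response timer
    status: str = "received"
    history: list[str] = field(default_factory=lambda: ["received"])

    def due_by(self) -> datetime:
        return self.filed_at + self.review_window

    def advance(self, new_status: str) -> None:
        """Move the appeal forward, rejecting transitions that skip a step."""
        allowed = {
            "received": {"under_review"},
            "under_review": {"upheld", "overturned"},
        }
        if new_status not in allowed.get(self.status, set()):
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        self.status = new_status
        self.history.append(new_status)


now = datetime(2025, 10, 7, tzinfo=timezone.utc)
alert = AbuseAlert(privacy_safe_id("acct-42", "s1"), "prompt_laundering", now)
appeal = Appeal(alert, ["log-excerpt"], filed_at=now)
appeal.advance("under_review")
appeal.advance("overturned")
```

The explicit transition table gives an appeal "clear timers and outcomes": each status change is recorded in `history`, and `due_by` tells the appellant when a response is owed.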

Administrative law endpoints

- Policy. Labs publish model behavior policies in a formal schema with references to norms. Each rule is addressable by an identifier.

- Test. Labs and third parties submit eval suites against rules. Results include false positive and false negative rates, as well as coverage metrics.

- Update. When a model or policy revision changes behavior, the lab issues a diff that downstream systems can interpret.
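
The Policy, Test, and Update endpoints all depend on rules being addressable by identifier. The sketch below assumes a hypothetical `Rule` shape and shows two things a downstream system might compute: a diff between policy versions and the error rates for one rule's eval suite. The identifiers and rule text are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str   # stable, addressable identifier
    text: str
    severity: str  # "block" or "review" in this sketch


def diff_policies(old: dict[str, Rule], new: dict[str, Rule]) -> dict[str, list[str]]:
    """Summarize added, removed, and changed rule IDs between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


def error_rates(results: list[tuple[bool, bool]]) -> dict[str, float]:
    """results holds (should_flag, was_flagged) pairs for one rule's eval suite."""
    fp = sum(1 for want, got in results if not want and got)
    fn = sum(1 for want, got in results if want and not got)
    negatives = sum(1 for want, _ in results if not want)
    positives = sum(1 for want, _ in results if want)
    return {
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
    }


v1 = {
    "R-001": Rule("R-001", "No payment without user approval", "block"),
    "R-002": Rule("R-002", "Mask customer identifiers", "review"),
}
v2 = {
    "R-001": Rule("R-001", "No payment without user approval", "block"),
    "R-002": Rule("R-002", "Mask customer identifiers", "block"),
    "R-003": Rule("R-003", "Log every outbound email", "review"),
}
changes = diff_policies(v1, v2)
```

Here `changes` reports `R-003` as added and `R-002` as changed, so a downstream team knows which eval suites to rerun without rereading the whole policy.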

Safety telemetry and attestation

Standardized telemetry should cover guardrail triggers by category, cross tool call chains, model confidence where available, user approval events, and data class touches. Privacy constraints are non negotiable, so telemetry must be scoped, retained for a defined period, and gated behind role based access for auditors. Border tokens should include signed attestations that list which checks ran, which rules apply, and who graded them. Independent auditors can stamp a trust mark with scope and expiry, which procurement systems can verify at build or release time.
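
A procurement system's verification step might look something like the following sketch. The claim names (`checks_run`, `graded_by`, `expires_at`, and so on) are assumptions rather than an existing attestation format. The shared HMAC key is a simplification; an open format would more plausibly rely on public key signatures so that verifiers never hold signing material.

```python
import hashlib
import hmac
import json

AUDITOR_KEY = b"demo-auditor-key"  # placeholder shared secret for the sketch


def _canonical(claims: dict) -> bytes:
    """Serialize claims deterministically so signatures are reproducible."""
    return json.dumps(claims, sort_keys=True, separators=(",", ":")).encode()


def sign_attestation(claims: dict) -> dict:
    sig = hmac.new(AUDITOR_KEY, _canonical(claims), hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": sig}


def verify_attestation(att: dict, required_checks: set[str], now: int) -> list[str]:
    """Return a list of problems; an empty list means the build may proceed."""
    expected = hmac.new(AUDITOR_KEY, _canonical(att["claims"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(att["signature"], expected):
        return ["signature_mismatch"]
    problems = []
    claims = att["claims"]
    if now >= claims["expires_at"]:
        problems.append("expired")
    missing = required_checks - set(claims["checks_run"])
    if missing:
        problems.append("missing_checks:" + ",".join(sorted(missing)))
    return problems


att = sign_attestation({
    "checks_run": ["pii_scan", "jailbreak_eval"],
    "rules": ["R-001"],
    "graded_by": "auditor-a",
    "scope": "connector:accounting-system",
    "expires_at": 1_800_000_000,
})
```

Because the scope and expiry sit inside the signed claims, widening the scope after the fact invalidates the signature, which is the property a trust mark needs if it is to be checked at build or release time.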

What changes for builders in 2026

The developer experience will change in three visible ways.

- Build against the border. Every agent or app ships with a manifest that declares required data classes, allowed tools, and enforced guardrails. A continuous integration step runs required evals and attaches the results to the build.

- Local to lab parity. Local runs simulate border responses. If a connector requires masking rules or a tool call requires user approval, you will see that in development exactly as it will appear in production.

- Cross provider portability. A shared border schema lets teams bring the same app to multiple providers with only policy deltas to resolve. That rewards designs that minimize vendor specific hacks.
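
The first item, building against the border, implies a continuous integration gate. Here is one way such a step could look, assuming a hypothetical manifest with `version` and `enforced_guardrails` fields and eval results reduced to pass or fail per guardrail.

```python
import json


def ci_gate(manifest: dict, eval_results: dict[str, bool]) -> dict:
    """Fail the build if any guardrail the manifest enforces lacks a passing eval."""
    required = manifest["enforced_guardrails"]
    # A guardrail with no recorded result counts as a failure.
    failures = [g for g in required if not eval_results.get(g, False)]
    return {
        "manifest_version": manifest["version"],
        "evals": {g: eval_results.get(g, False) for g in required},
        "passed": not failures,
        "failures": failures,
    }


manifest = {
    "version": "1.4.0",
    "data_classes": ["financial_records"],
    "allowed_tools": ["create_ticket", "post_payment"],
    "enforced_guardrails": ["pii_masking", "payment_approval"],
}
report = ci_gate(manifest, {"pii_masking": True, "payment_approval": False})
print(json.dumps(report, indent=2))  # attach this report to the build artifact
```

Treating a missing eval result as a failure is a deliberate choice in this sketch: a guardrail that was declared but never tested should block the release rather than pass silently.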

Teams that move fastest will treat customs data like another dependency. They will version their manifests, keep evals in the repo, and teach product managers to read inspection checklists. The payoff is less friction in app review and fewer surprises in incident response. For context on how this interacts with product surface, revisit the idea of conversational OS governance where chat, approvals, and actions blend into a single flow.

What to do next

Labs

- Publish a Border API roadmap with draft specs for Declare, Inspect, Approve, Revoke, Alert, Contain, Report, Appeal, Policy, Test, and Update.

- Expose safety telemetry behind role based access and start a pilot with two independent auditors and three large enterprise customers.

- Convert at least half of your policy documents into machine readable schemas by the end of the first quarter of 2026.

Enterprises

- Treat connectors as import lanes. Require manifests with data classes, tool scopes, and retention plans for every new agent.

- Integrate border events into your security operations center. Abuse alerts and revocation notices should open tickets like malware detections do today.

- Fund an internal red team for agents and route findings into the same appeal channel you use with app stores.

Developers

- Keep evals in the repo. Make them part of continuous integration and display results in your release notes.

- Design for user approval moments. If an agent takes an action on the user’s behalf, include a clear confirm step with a readable summary of the tool call.

- Build with portability in mind. Treat policy and guardrail configuration as data so you can move between providers when needed.

Auditors

- Develop shared rubrics for guardrail coverage, false positive and false negative rates, and incident response times.

- Offer live certification with telemetry hooks rather than one off reports.

- Advocate for an open attestation format so trust seals can be machine checked.

Interoperability with public law

Labs cannot and should not replace the state. They operate jurisdictions inside their platforms. National law still sets outer bounds. The challenge is translation.

- Map rules to statutes. Labs should publish how each platform rule maps to legal obligations where they operate. If a connector rule implements a privacy requirement in California or a data transfer restriction in Europe, that link should be explicit in the policy schema.

- Respect local sovereignty without fragmenting the platform. The border should allow local overlays rather than forks. If a country requires extra disclosures for financial data, the border should flag and enforce that locally without breaking global portability.

- Share threat intelligence responsibly. Public reporting should continue with standardized redaction and reason codes so that public bodies can ingest the data and act without compromising investigations or user privacy.
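
The overlay idea in the second item above can be illustrated with a small merge function. The policy keys and the region code "XX" are placeholders, not references to any real jurisdiction's requirements.

```python
# Global baseline policy, keyed by data class (hypothetical shape).
BASE_POLICY = {
    "financial_data": {"disclosures": ["purpose"], "retention_days": 30},
}

# Local overlays add or tighten fields; they never copy the whole policy.
OVERLAYS = {
    "XX": {"financial_data": {"disclosures": ["purpose", "local_regulator_notice"]}},
}


def effective_policy(base: dict, overlays: dict, region: str) -> dict:
    """Apply a region's overlay on top of the base without mutating either."""
    merged = {data_class: dict(rules) for data_class, rules in base.items()}
    for data_class, overrides in overlays.get(region, {}).items():
        merged.setdefault(data_class, {}).update(overrides)
    return merged


local = effective_policy(BASE_POLICY, OVERLAYS, "XX")
```

A region with no overlay gets the base policy unchanged. A region with an overlay inherits everything it does not override, so the retention rule still applies in "XX". That inheritance is the difference between an overlay and a fork.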

This is not politics by proxy. It is plumbing that makes it easier for public enforcers to see what is happening and for private actors to comply without slowing down.

Competition, compliance, and market dynamics

Borders will become competitive surfaces.

- Fast track lanes. Labs will compete on how quickly they can inspect and approve safe classes of apps and connectors. That pushes toward better tooling and clearer rules because speed and clarity win.

- Policy transparency. Providers that explain rules in machine readable form will earn more enterprise adoption. Security teams do not only want a white paper. They want endpoints that map policy to events inside their own systems.

- Audit ecosystems. Independent auditors will consume telemetry and evals and sell certifications with live checks. Expect cloud marketplaces to integrate these marks so procurement can trust what it buys.

Compliance will change as well. Rather than static checklists, regulators will ask for live feeds that show whether border controls are working. Think of it like emissions testing for agents. You provide periodic lab results and the inspector can request a spot check at any time. The result is faster shipping with fewer catastrophic surprises because the border runs as part of the product, not as an afterthought.

A road map to 2026

Here is a grounded forecast.

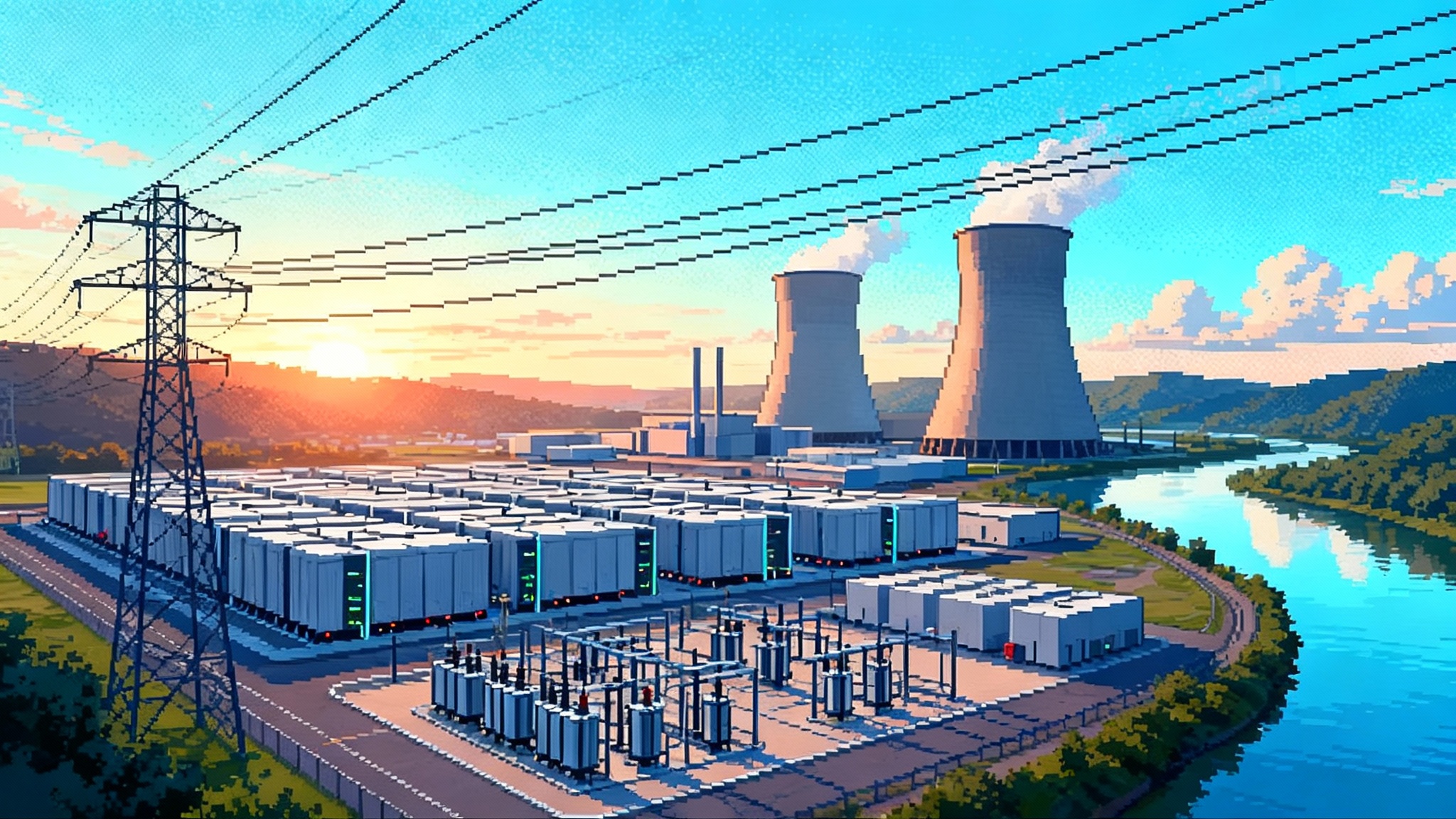

- Border APIs begin to converge by mid 2026. Two labs publish compatible specs for customs and policy endpoints. A third joins by year end.

- App directories become real channels. Developers see meaningful traffic from in chat app suggestions and adjust their roadmaps accordingly. The workflows to pass review feel closer to mobile app stores, but with clearer, machine readable rules.

- Enterprise buyers require border telemetry in procurement. If a vendor cannot stream basic guardrail triggers and revocation events, they do not clear security review.

- Regulators plug into standard feeds. Financial and health regulators begin pilots that consume safety telemetry from large platforms and use it to target audits.

- New developer tools emerge. Continuous integration plugins simulate inspections, attach attestations to builds, and warn when a change would trigger a revocation in a target market.

The net effect is not slower progress. Borders reduce chaos when they are predictable, inspectable, and interoperable, and that predictability is what lets teams ship faster.

Closing thought

AI needs borders because useful software touches real data and real users. The choice is not between freedom and control. It is between opaque gates and transparent protocols. By pairing a build stack for agents with public threat reporting on October 6 and 7, 2025, OpenAI signaled where the industry is heading. Treat the platform like a micro sovereign with customs, police, and administrative law, then expose that machinery as interfaces that others can use. Do that, and the next wave of agents will cross borders quickly, safely, and with receipts that anyone can read.