GitHub Agent HQ: Mission Control for Multi‑Vendor Agents

GitHub just put third-party coding agents inside your normal branches, pull requests, and reviews. Learn how Agent HQ runs best-of-N workflows, enforces governance by default, and gives platform teams a single control plane.

Breaking: GitHub turns agents into a first-class workflow

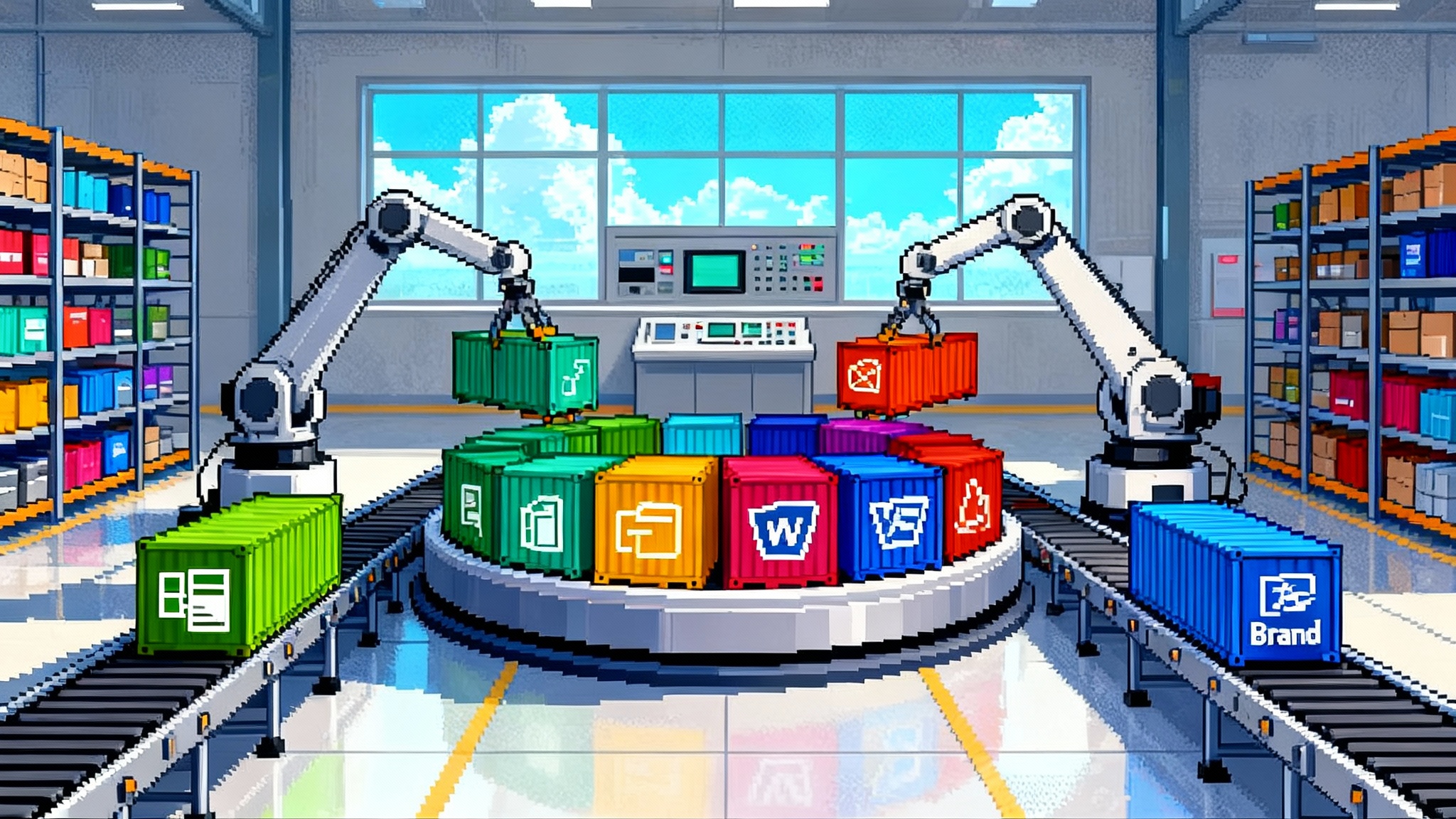

On October 28, 2025, at Universe in San Francisco, GitHub unveiled Agent HQ, a mission control that brings third-party coding agents from OpenAI, Anthropic, Google, xAI, and Cognition directly into GitHub. In GitHub’s words, it is a single place to assign work, run multiple agents in parallel, compare their outputs, and land the winning change through the familiar flow of branches, pull requests, and reviews. For GitHub’s own framing of the announcement, see the Universe recap overview.

If you have ever kept several browser tabs open to ping different assistants, Agent HQ feels like plugging those tabs into the conveyor belt that already runs your software factory. Instead of bouncing between tools, the work stays inside GitHub where identity, permissions, branch protections, CodeQL scanning, and GitHub Actions already enforce how code gets made, checked, and shipped.

Why multi-agent best-of-N matters

Think of a pit crew. One crew member changes the front-left tire, another handles the rear-right, a third refuels, and a fourth spots mistakes. Each is there because of what they do best. Agent HQ applies the same idea to agents, with one twist: you can assign the same task to several specialized agents at once and pick the best result. That best-of-N approach matters because large language models and agent planners have strengths that vary by language, framework, and task shape. The vendor strengths below are hypothetical illustrations, not benchmark results:

- OpenAI might excel at TypeScript ergonomics.

- Anthropic might be strongest at test scaffolding and safety-first edits.

- Google’s agent could shine in Java refactoring.

- xAI’s tool might be the fastest at tracing long dependency chains.

- Cognition’s agent may bring end-to-end autonomy for full feature work.

Best-of-N transforms agents from a single roll of the dice into a parallel race with a review gate. Instead of trusting one answer, you orchestrate multiple attempts, watch the plans they generate, and compare diffs before anything merges. The result is faster exploration with a human in the loop at the decision point.
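To make the "parallel race with a review gate" concrete, here is a minimal Python sketch. It is not Agent HQ's API: the `Candidate` fields, the agent labels, and the idea of a numeric rubric score are all assumptions for illustration. It drops any attempt that failed required checks, ranks the rest, and hands a short list to a human for the final decision.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    agent: str           # hypothetical agent label
    checks_passed: bool  # True when every required check on the branch is green
    rubric_score: float  # score assigned by a human reviewer (assumed scale)


def shortlist(candidates, keep=2):
    """Filter out attempts that failed the gate, then rank the survivors.

    The function only narrows the field; a human still picks what merges.
    """
    eligible = [c for c in candidates if c.checks_passed]
    ranked = sorted(eligible, key=lambda c: c.rubric_score, reverse=True)
    return ranked[:keep]


candidates = [
    Candidate("agent-a", True, 3.5),
    Candidate("agent-b", False, 4.8),  # highest score, but failed a required check
    Candidate("agent-c", True, 4.2),
]

for c in shortlist(candidates):
    print(c.agent, c.rubric_score)
```

Note that `agent-b` never reaches the reviewer despite its score: the automated gate runs before the human comparison, which is the ordering the workflow above describes.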

Mission control meets governance by default

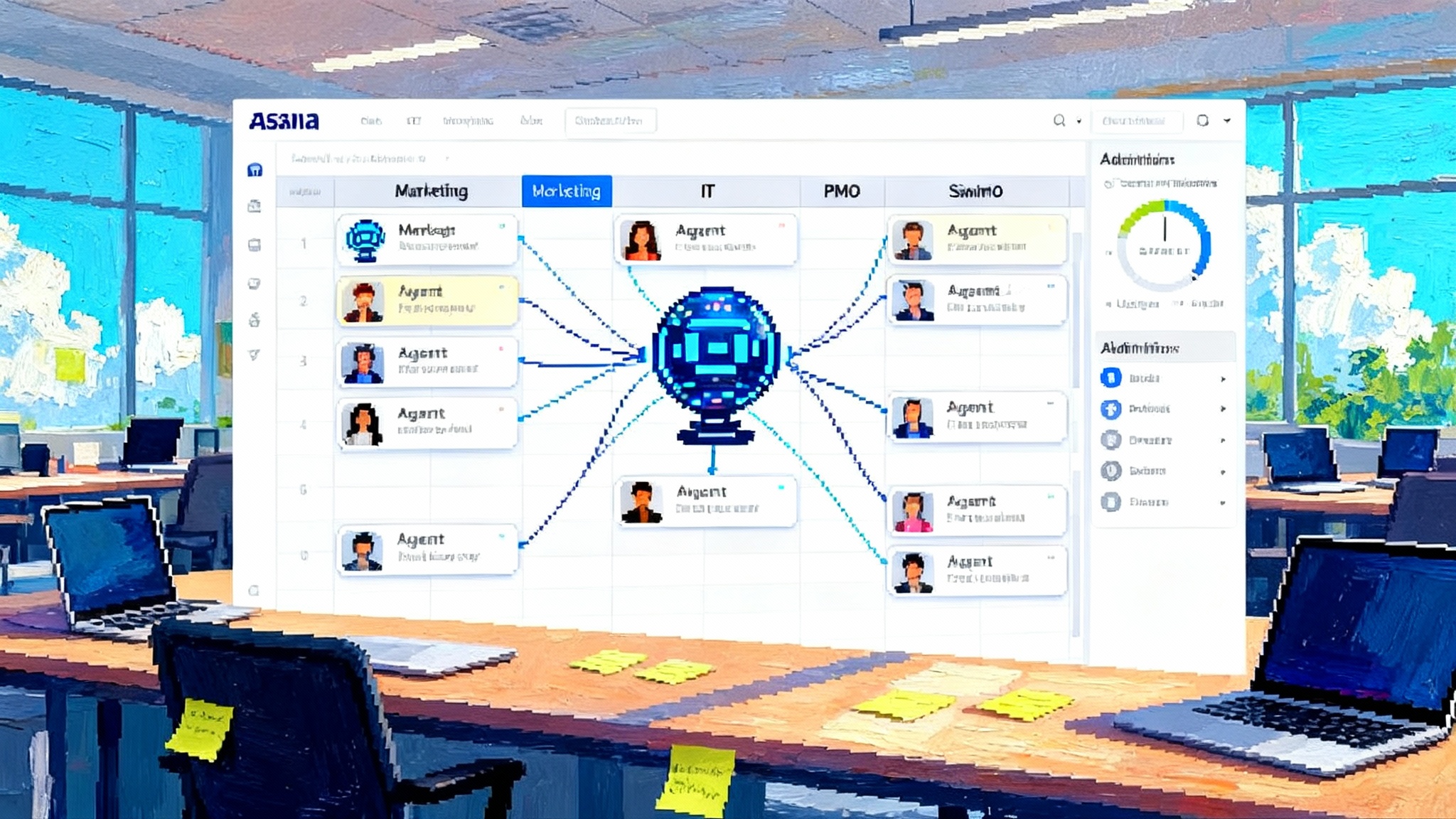

Agent HQ is not a separate application. It shows up where developers already live: the GitHub web interface, Visual Studio Code, mobile, and the command line. The benefit is that governance controls do not need reinvention.

- Identity and access: Agents act as named identities with specific permissions. You can scope their access to particular repositories or branches and use the same single sign-on, role mapping, and audit trails you apply to humans. If your organization is formalizing identity for machine workers, see how agents as first-class identities changes reviewability and compliance.

- Branch protections: Required status checks, linear history, signed commits, and restrictive merge policies continue to apply. Agent commits do not bypass them.

- Reviews and code owners: Pull requests filed by agents route to human reviewers through CODEOWNERS. Required reviewers and minimum approval counts keep your control points in place.

- Code scanning and policies: CodeQL and secret scanning run just as they do on human commits. You can add checks that fire only on agent-authored branches.

- Actions and environments: Agents trigger the same GitHub Actions pipelines, including environment protection rules and deployment approvals.

Put simply, Agent HQ meets the enterprise where it already audits. For a taste of how the control page looks and what you can monitor without context switching, GitHub provides a guide to the centralized agent management interface.

What a day in Agent HQ looks like

Imagine a payments team that needs to add a new fraud check. The team lead creates an issue that describes the rule, sample transactions, and edge cases. From Agent HQ, the lead assigns the task to three agents at once:

- An agent tuned for TypeScript application code

- An agent tuned for unit and integration tests

- An agent tuned for database schema migrations and performance

Agent HQ fans out the work. Each agent proposes a plan, asks clarifying questions in the issue thread, then opens a draft pull request. CodeQL flags a potential null dereference in one branch and a risky substring match in another. Only one agent’s plan accounts for rate limits from an external service. The lead promotes that plan to primary, adds a comment that sets a policy flag to require two senior reviews, and Agent HQ routes the request to the code owners.

The change merges after two approvals and a green Actions run. The other two agent branches are automatically marked as superseded, but their diffs and logs stay in the audit trail. Nothing left the governance envelope.

The mechanisms that make it faster and safer

- Parallel plans reduce waiting. Best-of-N replaces sequential trial and error with simultaneous exploration. This compresses lead time for changes that need significant reasoning.

- Reviews stay human, checks stay automated. Agents propose work. Humans approve. CodeQL and Actions continue to be the objective gate for security and quality, catching issues even when agents miss them.

- Context boundaries reduce blast radius. Because agents operate within GitHub permissions and branch protections, you can confine risky edits to sandboxes, short-lived branches, or protected environments.

- Observable sessions. Each agent’s session log and plan make it clear why a change was made. That supports incident review and compliance without new logging infrastructure.

A 30-60-90 day adoption playbook

The fastest adoptions start small, measure, and expand based on evidence. Use this sequence as a template and adapt to your regulatory requirements.

Day 0 to 30: Prove value on a narrow front

- Pick three repositories with clear boundaries: one library, one service, one automation repo. Avoid your most critical path at the start.

- Define gate policies. Require CodeQL, dependency review, and secret scanning to pass on any agent branch. Require at least one human owner to approve agent pull requests.

- Establish a best-of-N rubric. For the trial, run two agents in parallel per task. Score outputs on correctness, tests produced, and clarity of plan. Capture scores in pull request labels.

- Assign owners for the pilot. One platform engineer, one security partner, and two senior developers per repo.

- Start with tasks that are low risk and recurring: test generation, small refactors, doc updates, dependency bumps.

- Instrument metrics. Record lead time per change, review time per pull request, and post-merge defects found within 30 days. Use lightweight dashboards tied to labels and branch names.
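One way to capture rubric scores in pull request labels is to encode each criterion as its own label string. The sketch below is an assumption-laden illustration: the three criteria mirror the rubric above, while the 1 to 5 scale and the `rubric/<criterion>:<score>` naming scheme are hypothetical conventions, not anything GitHub prescribes.

```python
# Criteria taken from the trial rubric; the 1-5 scale is an assumed convention.
RUBRIC = ("correctness", "tests", "plan-clarity")


def rubric_labels(scores, prefix="rubric"):
    """Convert {criterion: score} into label strings, rejecting bad input."""
    labels = []
    for criterion in RUBRIC:
        if criterion not in scores:
            raise ValueError(f"missing score for {criterion!r}")
        value = scores[criterion]
        if not 1 <= value <= 5:
            raise ValueError(f"{criterion!r} score must be between 1 and 5")
        labels.append(f"{prefix}/{criterion}:{value}")
    return labels


print(rubric_labels({"correctness": 4, "tests": 3, "plan-clarity": 5}))
```

Because the scores live in labels, a dashboard can later filter and aggregate pull requests by label without a separate database.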

Day 31 to 60: Expand scope and raise the bar

- Add two more agent vendors where licensing allows. Keep best-of-N at two or three agents per task to avoid reviewer overload.

- Introduce planning reviews. Require human approval of an agent’s plan before it begins editing on medium-risk tasks.

- Move up the complexity curve. Allow agent work on non-critical features behind flags, internal endpoints, or dark deployments.

- Add policy tiers. Define sandbox branches where agents can run freely, and protected branches where agents require explicit human kickoff and two approvals.

- Tune CodeQL and Actions pipelines. Add custom queries that target patterns you see in agent diffs. Add a job that fails if the agent did not update or add tests.

- Begin cost and throughput tracking. Attribute Actions minutes and agent consumption to cost centers using tags or repository naming conventions.

Day 61 to 90: Institutionalize and federate

- Create reusable custom agents. Publish task-specific agents for tests, documentation, dependency upgrades, and observability. Store agent profiles in a shared internal repository.

- Make best-of-N the norm for medium-risk edits. For high-risk changes, require human spike solutions or design docs the agent must follow.

- Standardize review aids. Auto-attach checklists to agent pull requests. Include areas touched, assumptions the agent made, and a list of files without tests.

- Integrate with incident workflows. Include the agent session link in incident tickets. Require a human post-merge review on agent changes touching authentication, billing, or data access paths.

- Publish the scorecard. Share the metrics weekly with engineering leadership and procurement. Use the numbers to adjust vendor mix and budgets.

How to measure what matters

Pick a small set of metrics that capture speed, flow, and quality. Make them visible to the teams that can act.

- Lead time for change: Time from task creation to merge. Track separately for agent-authored pull requests and human-authored pull requests.

- Pull request review throughput: Reviews completed per reviewer per day. Watch for increases without a drop in review quality.

- Defect escape rate: Post-merge defects per thousand lines changed by agents, measured within 30 days of merge. Compare to the same metric for human changes.

- Test delta ratio: Number of new or modified tests per line of production code changed by an agent. Low ratios suggest missing coverage.

- Rework rate: Percentage of agent pull requests that require more than two human review cycles or are superseded by another approach.

- Policy violation count: Number of times an agent triggered a policy block, such as missing required checks or editing restricted paths.

- Time to first meaningful diff: Minutes from task assignment to the first reviewable change. This reveals plan thrash or tooling friction.

These metrics are actionable. If defect escape rises, tighten CodeQL, expand test requirements, or constrain the types of tasks agents can touch. If time to first diff is slow, pre-warm environments or reduce the number of tools an agent can call.

Procurement checklist for an enterprise agent fleet

Your procurement and vendor management partners can move faster if you give them a crisp list.

- Licensing model: Seat-based, usage-based, or hybrid. Support for bursty parallel runs under best-of-N scenarios.

- Data handling: Where prompts, code, and logs are stored. Data residency options for the United States and other regions. Retention periods and deletion guarantees.

- Training and privacy: Whether vendor models train on your code or prompts. Contract language that prohibits training on customer data.

- Identity integration: Support for single sign-on, multi-factor authentication, and SCIM provisioning. Ability to represent agents as first-class identities with traceable actions.

- Audit and compliance: Support for immutable logs, export to your security information and event management tool, and evidence for SOC 2, ISO 27001, and if required, FedRAMP.

- Policy controls: Branch scoping, path restrictions, environment access rules, and the ability to disable dangerous tools at the tenant level.

- Security posture: Clear vulnerability response timelines, penetration testing summaries, coordinated disclosure, and a software bill of materials (SBOM) for the agent integrations.

- Support and roadmap: Named technical contacts, service-level objectives, and a public changelog. A commitment to publish deprecations with upgrade paths.

- Pricing governance: Tags or headers that make it possible to attribute agent usage to cost centers. Rate limits with hard caps to prevent runaway spend.
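For the pricing governance item, the attribution itself is straightforward once usage records carry a cost center tag. The sketch below assumes a hypothetical export format (dictionaries with `cost_center` and `cost_usd` keys) and hypothetical cap values; no vendor's billing API is implied.

```python
from collections import defaultdict


def spend_by_cost_center(usage_records):
    """Sum agent spend per cost center from tagged usage records."""
    totals = defaultdict(float)
    for record in usage_records:
        totals[record["cost_center"]] += record["cost_usd"]
    return dict(totals)


def over_cap(totals, caps):
    """Return cost centers whose spend exceeds their cap (no cap means no limit)."""
    return sorted(
        center for center, total in totals.items()
        if total > caps.get(center, float("inf"))
    )


usage = [  # invented sample records
    {"cost_center": "payments", "cost_usd": 12.5},
    {"cost_center": "platform", "cost_usd": 4.0},
    {"cost_center": "payments", "cost_usd": 7.5},
]
caps = {"payments": 15.0, "platform": 10.0}

totals = spend_by_cost_center(usage)
print(totals)
print(over_cap(totals, caps))
```

A report like this only detects overruns after the fact. The hard caps in the checklist still need to be enforced by the vendor or platform so that a runaway parallel run is stopped, not just flagged.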

Security checklist to tighten the envelope

Security teams should formalize controls that match how Agent HQ works.

- Branch protections: Require status checks, signed commits, and linear history on all agent branches. Block direct pushes to protected branches by agent identities.

- Required reviewers: Enforce CODEOWNERS and minimum reviewer counts on any pull request authored by an agent.

- CodeQL and secret scanning: Treat agent branches like untrusted input. Turn on CodeQL for all languages present. Require secret scanning and dependency review to pass.

- Runner hygiene: Use ephemeral self-hosted runners for agent work if you require on-premises compute. Lock network egress to only required endpoints.

- Environment protections: For deployments, require approvals, wait timers, or manual gates before production. Use environment-level secrets with least privilege.

- Path and tool restrictions: Prevent agents from editing sensitive directories, such as authentication code, cryptography, or database migrations, without human kickoff.

- Logging and alerting: Ship agent session logs and pull request metadata to your security information and event management tool. Alert on unusual edit patterns, such as mass rewrites across unrelated domains.

- Prompt security: Sanitize context files and generated docs to avoid prompt injection. Keep the agent’s tool list small to narrow attack surface.
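The path restriction control can be prototyped as a check over the files an agent changed. This is a minimal sketch, not a GitHub feature: the patterns, directory names, and the `human_kickoff` flag are assumptions. It uses `fnmatchcase` from Python's standard library, whose `*` also matches across `/`, so `src/auth/*` covers nested files.

```python
from fnmatch import fnmatchcase

# Hypothetical sensitive locations; replace with your own layout.
RESTRICTED = ("src/auth/*", "*/crypto/*", "db/migrations/*")


def restricted_edits(changed_files, patterns=RESTRICTED):
    """List the changed files that fall under a restricted pattern."""
    return [
        path for path in changed_files
        if any(fnmatchcase(path, pattern) for pattern in patterns)
    ]


def allow_agent_change(changed_files, human_kickoff: bool) -> bool:
    """Allow the change if a human started it or no restricted path is touched."""
    return human_kickoff or not restricted_edits(changed_files)


changed = ["src/auth/oauth/token.py", "docs/setup.md"]
print(restricted_edits(changed))
print(allow_agent_change(changed, human_kickoff=False))
print(allow_agent_change(changed, human_kickoff=True))
```

Wired into a required status check, a `False` result would block the agent's pull request until a human explicitly kicks off the work.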

Running best-of-N without drowning your reviewers

Parallelism is powerful, but unmanaged parallelism creates noise. These practices balance exploration with reviewer attention.

- Cap parallel agents at two or three per task for day-to-day work. Use more only for high-stakes changes.

- Approve a plan before edits begin for non-trivial changes. Plans should declare files to touch, tests to add, and assumptions.

- Score outputs with a short rubric: correctness, tests, safety. Tag pull requests with the scores.

- Let automation cull weak branches. If a branch fails CodeQL or policy checks twice, auto-close it and capture the learning in a label.

- Rotate vendor mix by task type. Keep a record of which vendor performed best on tests, refactors, or documentation. Use evidence to route future work.
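Two of these practices are easy to automate: culling branches after repeated failures and routing work by recorded performance. The sketch below assumes a hypothetical history of `(task_type, vendor, score)` tuples and placeholder vendor names. It illustrates the bookkeeping only, not any Agent HQ feature.

```python
from collections import defaultdict


def should_close(failed_runs: int, max_failures: int = 2) -> bool:
    """Cull a branch once it has failed checks the allowed number of times."""
    return failed_runs >= max_failures


def best_vendor_by_task(history):
    """Map each task type to the vendor with the highest mean rubric score."""
    scores = defaultdict(lambda: defaultdict(list))
    for task_type, vendor, score in history:
        scores[task_type][vendor].append(score)
    return {
        task: max(vendors, key=lambda v: sum(vendors[v]) / len(vendors[v]))
        for task, vendors in scores.items()
    }


history = [  # invented sample scores
    ("tests", "vendor-a", 4),
    ("tests", "vendor-b", 3),
    ("refactor", "vendor-a", 2),
    ("refactor", "vendor-b", 5),
    ("tests", "vendor-a", 5),
]

print(should_close(1), should_close(2))
print(best_vendor_by_task(history))
```

With only a handful of scores per vendor the averages are noisy, so treat the routing table as a starting point for assignment and keep occasional head-to-head runs to refresh the evidence.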

What Agent HQ changes for platform teams

Platform engineering gets a standard way to govern third-party agents without building a parallel control plane. Instead of asking each vendor for its own audit feeds, you can use GitHub’s identity, logging, and policy engine to keep a single source of truth. That reduces your overhead and makes security reviews less brittle. If you are also exploring cloud-level primitives for agents, it is useful to compare how AgentOps as a cloud primitive changes the control plane boundary.

Meanwhile, developer experience teams can package repeatable custom agents that carry your coding standards, testing patterns, and internal tool know-how. A team that dreads writing performance tests can call the performance agent. A team that struggles with dependency hygiene can call the upgrade agent. You are not just buying agents. You are building a fleet. If your organization is trying to standardize agent scaffolding, the pattern of agents from demo to deployable platform will help you define handoffs and policies.

What to pilot first

Start with tasks that amplify human attention rather than replace it.

- Test expansion and flakiness fixes

- Documentation alignment and example generation

- Small refactors that carry low business risk

- Observability additions such as metrics and traces

- Dependency bumps and configuration cleanups

These areas are measurable, have a low blast radius, and are rich in repetition. They create a fast feedback loop that builds trust with stakeholders.

The road ahead

Agent HQ signals a simple truth: the future of coding is collaborative. Humans define intent and boundaries. Agents explore options. Governance keeps both honest. By making agents first-class participants in GitHub’s normal flow, Microsoft and GitHub remove the scaffolding that once made enterprise adoption brittle.

Here is the practical next step. Pick three repositories, set strict gates, and run a best-of-N trial for 30 days. Publish the numbers, adjust the vendor mix, and then scale. With a mission control in the middle and a fleet at the edge, your software factory can move faster while staying inside the lines.