The Deliberation Economy: Time Becomes AI's Currency

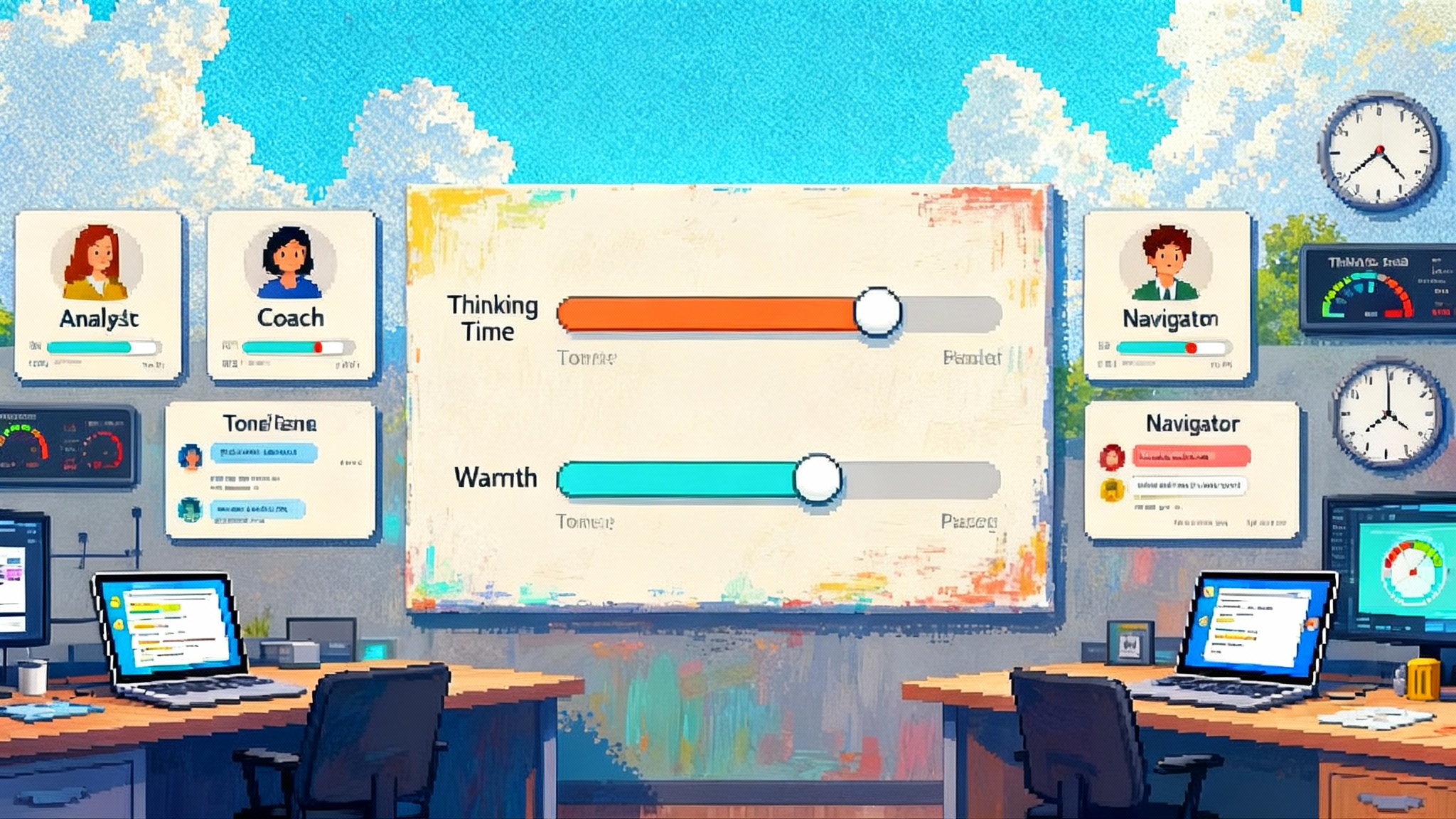

A new wave of controllable thinking modes makes time the real unit of AI. When you can dial how long a model thinks, product defaults, SLAs, routing, and UX all change. The real question is who sets the budget.

Breaking: AI budgets its own thinking

Something subtle but important is happening in modern AI systems. Product teams can now control how long a model thinks before answering. That knob changes the feel of every feature, from instant chat replies to complex code reviews. It also shifts what we measure, what we promise to customers, and how we design interfaces.

For years, we treated tokens as the atomic unit of intelligence. Tokens still matter for cost and context, but they do not capture what users actually experience. Users feel latency and reliability. When you can allocate deliberate time on demand, latency becomes purposeful. You are not just waiting for a server. You are investing attention the way a camera gathers light.

Time becomes the primary unit of intelligence

Think through a few everyday tasks:

- Summarizing a brief email. Extra deliberation rarely helps beyond a quick pass.

- Drafting a policy update. A bit more thinking adds structure, clarity, and risk checks.

- Refactoring a complex codebase or validating a tricky proof. More time pays real dividends as the system explores alternatives, runs tools, and self-corrects.

In each case, the optimal thinking time is different. That is the essence of the Deliberation Economy. We allocate attention where it yields the highest return, task by task.

Teams that embrace this mindset stop asking only which model is smarter and start asking a more practical question: how much thinking is worth it for this specific request. Once you make that shift, everything from architecture to pricing has to adapt.

From tokens to budgets: adjustable effort in the wild

What used to be research jargon like test-time scaling has turned into a product surface. Many teams now expose developer parameters or user-facing modes that trade speed for depth. The common pattern is programmable cognition: make the model think longer when the problem is hard, and pull back when it is not.

This is not a cosmetic setting. It is a budget line, like battery on a phone or bandwidth on a network. When you can control it, you redesign software around it. The most effective products move beyond a one-size-fits-all latency and instead treat thinking time as a resource to route, meter, and explain.

Why controllable deliberation rewires product strategy

When time becomes the unit, four practical questions appear in every roadmap meeting:

-

What should be the default cognitive latency for this feature? You need a defensible number. For a reply suggestion in support, 400 to 700 milliseconds may be the sweet spot. For high-stakes reconciliation, two to five seconds can repay itself if reliability rises by a few percentage points. Defaults become a strategy choice, not an accident of infrastructure.

-

What is our reliability per millisecond Service Level Agreement? Treat reliability as a curve rather than a single score. Chart accuracy at p50, p90, and p99 latency, and share the frontier with customers. A promise like this is concrete: within 800 milliseconds the system equals the old baseline, and after 2 seconds it reaches a higher tier.

-

How do we route by task difficulty in real time? Easy examples should take the fast path with minimal thinking. Ambiguous or high-impact cases should get more time or a stronger model. That requires a triage step that estimates difficulty or risk before the main call.

-

How do we present graded cognition in the interface? Users should understand when the system is thinking on purpose and what they gain by waiting. That might be a progress cue that shows which checks are running, or a staged answer that improves over time.

New UX patterns for graded cognition

This shift breaks the old spinner that implied a server was simply busy. The indicator now communicates investment. Expect several patterns to become common:

- Tap to deepen. The system returns a quick answer, then offers an Improve with more thinking button that refines reasoning, adds citations, or runs extra tests.

- Progressive solutions. For coding, the assistant proposes a plan, then a diff, then optional test runs, each stage buying more reliability with a clear time trade. For analytics, it sketches a chart, then reruns with outlier checks and alternative models.

- Reasoning receipts. Users do not need raw chain of thought. They need a concise log that explains how time was spent, such as which checks ran and what tools executed.

- Time sliders and presets. Light, Standard, and Heavy are useful in consumer chat, but enterprise apps will ship task-specific presets like Reconcile thorough with an estimated time and expected reliability uplift.

Great UX does not force the user to become a scheduler. It offers transparent choices and sensible defaults, and it lets users reverse decisions quickly when circumstances change.

Engineering for the Deliberation Economy

Delivering controllable deliberation takes more than a parameter tweak. It suggests a concrete architecture:

- Difficulty estimator. Before calling the main model, run a cheap classifier to estimate task hardness or risk. Signals may include input length, ambiguity heuristics, domain, and prior failure rates. Use that to set the initial budget.

- Budget controller. Start minimal on easy cases, upgrade to medium if confidence is low, and escalate to high or a stronger model if uncertainty remains after a second pass. Encode these policies centrally so product teams do not reinvent logic per feature.

- Reliability monitor. Track reliability-per-millisecond metrics by task type. Build dashboards that show the curve moving as models improve. Optimize for area under the frontier, not just a top-end score.

- Interruptible thinking. Allow the user or system to stop early, cache partial work, and resume. Checkpoint the process so the model does not start from scratch when the user taps Deepen.

- Tool-aware deliberation. Spend time where tools can amplify it. For example, allocate extra seconds to run a static analyzer or unit tests rather than more tokens of free-form reflection.

- Replayable receipts. Log thought summaries and tool traces so teams can audit and refine policies without exposing raw internal thoughts to end users.

An effective implementation treats time as a first-class resource, the same way we treat CPU, memory, or bandwidth.

SLAs for reliability per millisecond

Traditional service levels promise uptime and fixed response times. Adjustable thinking suggests a new shape of promise. Imagine a document analysis API with the following targets:

- By 500 milliseconds: minimal reasoning, 92 percent accuracy on entity extraction.

- By 1.5 seconds: medium reasoning, 96 percent accuracy and fewer false positives.

- By 4 seconds: high reasoning, 98 percent accuracy plus consistency checks.

Such a contract lets buyers match spend and patience to outcomes. Vendors can price tiers differently, and customers can pick per endpoint which tier they can afford. Security tools, underwriting, and coding copilots will often buy more milliseconds when the stakes are high. Marketing previews and casual chat will spend less most of the time, then spike when the content is customer facing.

Publishing these curves also sharpens internal decisions. Teams can see whether their scarce milliseconds are lifting reliability where it matters, rather than polishing easy wins.

Routing by task difficulty is now table stakes

Once thinking time is a budget, routing becomes an economic decision. The router asks two questions: how hard is this and how much is it worth to get it right. A ticket classifier that is 80 percent certain a case is a simple password reset should choose the fast path. If the same classifier flags a messy escalation with legal terms, route to slower thinking and perhaps a different model.

A pragmatic blueprint:

- Pre-classify every request into low, medium, or high difficulty using lightweight models.

- Start minimal or low on low difficulty, medium on medium, high on high.

- Watch confidence. If it drops below a threshold, increase effort or switch models.

- Cap maximum thinking per request based on business value and user patience.

- Cache results and reuse thought summaries to avoid paying for the same reasoning twice.

Do not guess thresholds. Measure them. Build an offline harness that replays a week of traffic across effort levels, then plot accuracy against latency. Move the budget where it counts.

Defaults for cognitive latency

Defaults are values and incentives embedded in code. If you set the default to extended thinking everywhere, users will feel drag and you will waste compute. If you set it too low, you will ship confident errors. Good defaults match the task’s inherent variance.

Helpful guidelines:

- One default per feature. Then expose a visible Deepen button that is one tap away.

- Treat the default as a target, not a floor. Finish early when confidence crosses a threshold.

- Show expected benefit in plain language. For example, Another 1.5 seconds will run a linter and unit tests.

Often the best default is not a point, but a pair: a fast initial answer plus a staged deeper pass that users can see and control.

Ethics and governance: who sets the thinking budget

Adjustable cognition introduces new power dynamics. Providers can set defaults that shape outcomes before any user decides. If a platform quietly lowers default thinking time in moderation, it could reduce accuracy on edge cases and change who gets flagged. If a coding assistant tightens budgets during peak hours to save compute, it could ship more defects.

Used well, these same knobs can reduce unfairness. You can devote more time to harder cases, which are often where bias hides. You can publish reliability-per-millisecond SLAs so customers know what they are buying. You can give organizations policy controls that set minimum budgets for critical flows like healthcare triage or financial approvals.

Transparency helps. Thought summaries let people see what checks ran without exposing raw chain of thought. Admins can log the budget used and the confidence achieved. In consumer apps, users should be told when they are on a fast path with lower reliability so they can opt in to deeper thinking when needed.

Market access matters as well. If robust thinking modes are gated behind premium plans, a cognitive divide can emerge where only some users get thorough reasoning. Product teams should test defaults across plans so free users are not stuck with brittle fast answers. In regulated sectors, minimum thinking budgets may become part of certification and audit trails.

Pricing and the rise of attention allocators

If time is the unit, pricing will follow. Expect providers to blend per-token and per-second charges, or to bundle thinking budgets into tiers. Observability and routing platforms will add features like budget control, difficulty estimation, and receipts. Benchmarks will adapt, publishing reliability at specific latency points rather than a single top-line score.

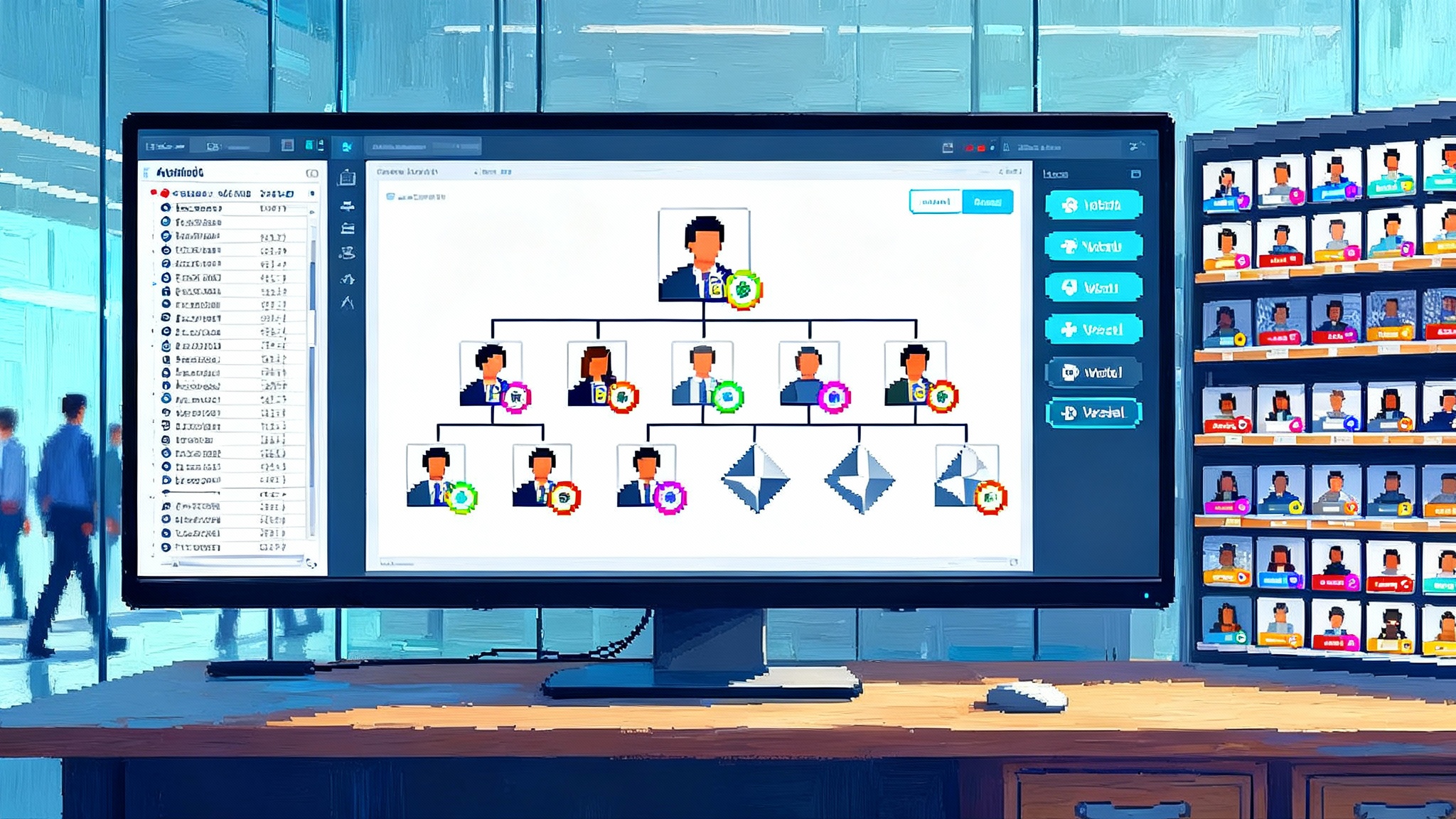

One likely outcome is the rise of attention allocators. These services sit between users and models and spend cognitive time shrewdly. They arbitrate latency and quality, choose when to pay for more thinking, and learn per customer what the best tradeoffs look like. Some will be developer libraries embedded in backends. Others will be dashboards with dials that business teams understand.

What builders should do now

- Instrument for reliability per millisecond. Record latency and outcome quality for every request and plot the frontier.

- Add a difficulty estimator and budget controller. Start simple. Even a rule-based triage improves average experience.

- Create UX for graded cognition. Offer a quick answer plus a visible deepening path with time and benefit estimates.

- Define cognitive SLAs. Publish targets by feature so customers know when waiting longer helps and by how much.

- Treat budgets as policy. In regulated flows, set minimum budgets and require receipts. In low-stakes flows, allow fast defaults but give users control.

- Experiment with routing. Compare minimal, low, medium, and high effort on real traffic. Lock in the mix that maximizes value for your domain.

Beyond chat: a philosophical break

Chat interfaces taught us to think about words. The Deliberation Economy teaches us to think about attention. Adjustable thinking turns AI into a manager of its own time. It reframes model comparisons as time-quality tradeoffs. It turns UX into a set of levers that let people control cognition. It invites regulators to look not only at what a model says, but also at how long it was allowed to think.

Two years from now, we will likely look back at this era as the moment when thinking stopped being a black box and became a setting. The teams that learn to budget thought with care will build products that feel both faster and smarter, because they spend time where it counts. The rest will keep paying, one slow spinner at a time.

Related reading on AgentsDB

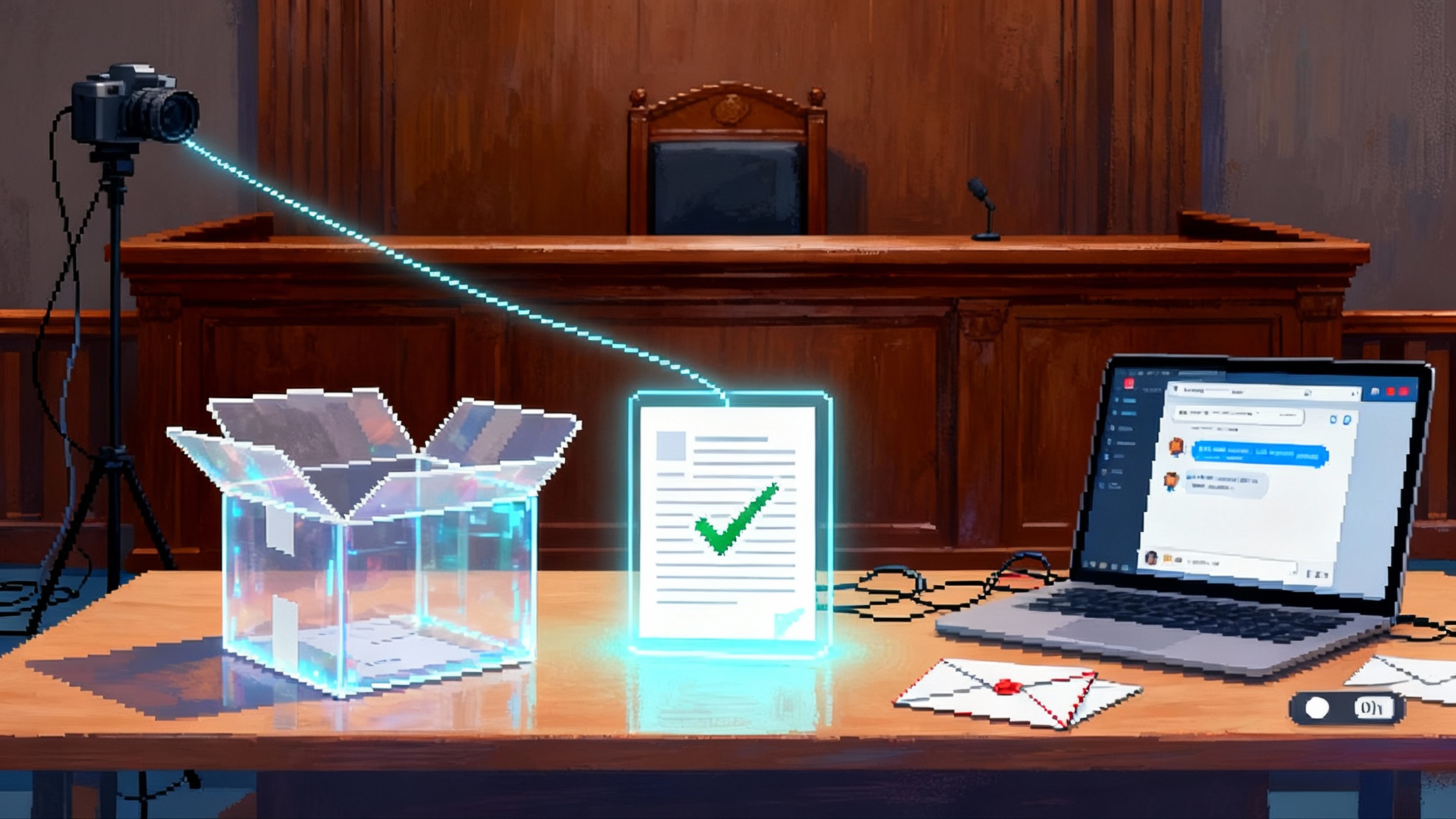

- Read how interface choices harden into power in GUI AIs rewire institutions.

- See why infrastructure thinking beats prompt hacking in intelligence as utility, not prompts.

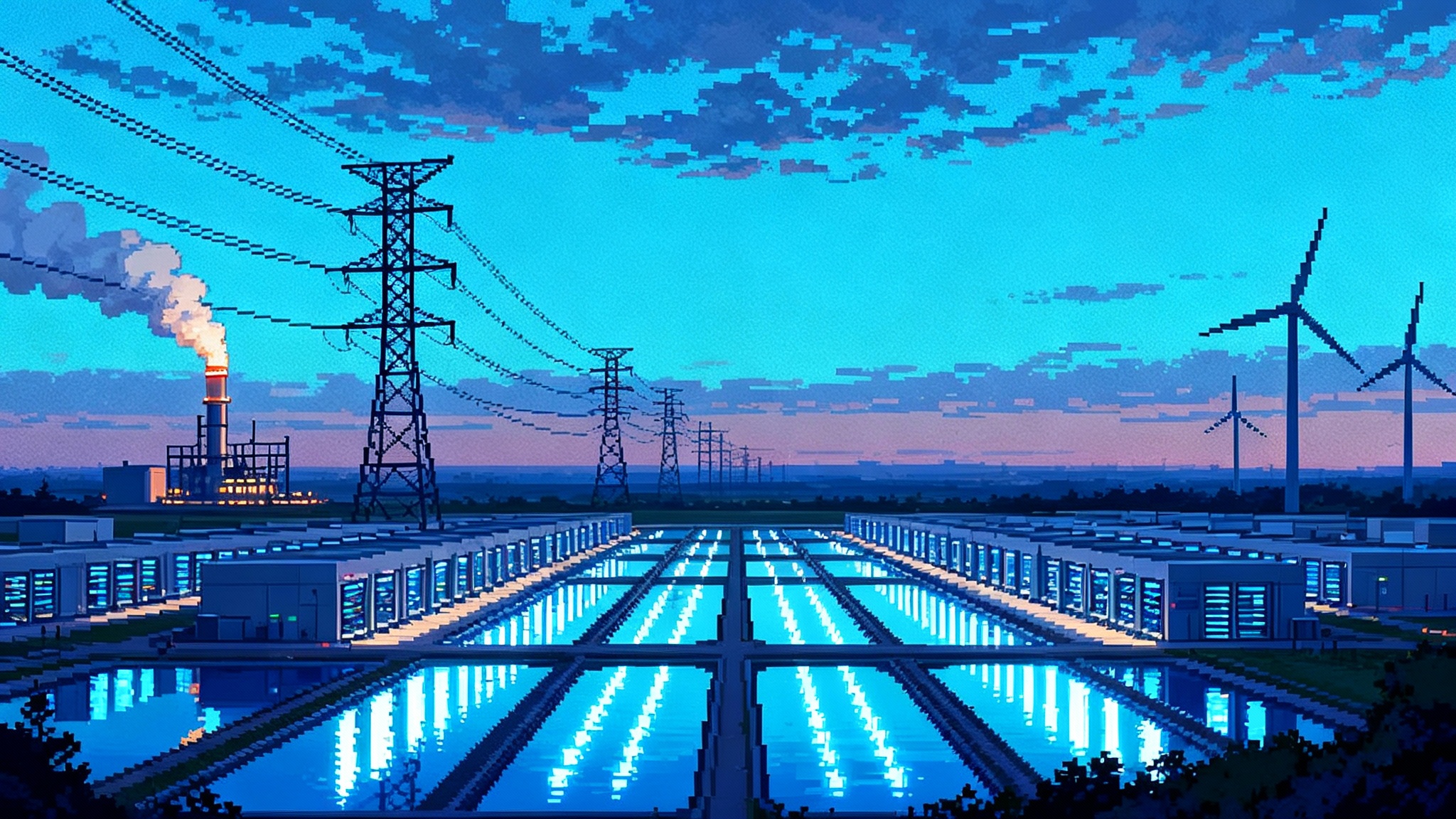

- Explore how energy constraints shape AI strategy in AI’s grid treaty and capacity limits.