The Time Constant of AI: Why Long-Horizon Agents Win

In late September 2025 Anthropic shipped Claude Sonnet 4.5 together with a first party Agent SDK, and about a week later IBM announced an enterprise IDE anchored on Claude. The real race is not over tokens or context size but over how long an agent stays smart across time.

Breaking news: the week time became a feature

On September 29, 2025, Anthropic released Claude Sonnet 4.5 and, in the same breath, shipped a first party Agent SDK alongside major updates to Claude Code. The announcement framed a clear new direction: building agents that keep working, keep their bearings, and keep improving over many hours, not just many tokens. Anthropic’s note reads like a product spec for stamina, with memory management across long runs, permissioned autonomy, and coordination among subagents packaged for developers in a single kit. The center of gravity is shifting from a single clever answer to a durable line of work. See the official Claude Sonnet 4.5 release.

About a week later, the enterprise world signaled that this is not just a lab dream. On October 7, 2025, IBM announced that Claude will anchor a new enterprise integrated development environment in private preview, and the companies published guidance to build secure enterprise agents using the Model Context Protocol. The headline was a workflow claim rather than a new benchmark: a developer partner that can carry state through modernization, testing, and deployment while meeting governance requirements. That is an agent that survives time, interruptions, and audits. Read the IBM enterprise integration announcement.

These are not isolated press notes. Taken together, they mark a line we can draw through the current moment: the next competitive frontier is sustained algorithmic attention over wall clock time.

The time constant of AI

Every system has a time constant, a measure of how quickly it responds and how long it holds a state before decay. Thermostats have one. Supply chains have one. The new class of agentic models now needs one as well. For long-horizon agents, the time constant is the duration over which the agent can maintain coherent goals, a stable working set, and reliable progress despite interruptions, tool failures, and environmental drift.
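
To make the analogy concrete, here is a small worked relation. It assumes, purely for illustration, that an agent's plan coherence decays like a first-order system; real agents may not follow this curve. The symbols c(t) (coherence at time t), c_0 (initial coherence), and τ (the time constant) are introduced here and do not come from any vendor material.

```latex
c(t) = c_0 \, e^{-t/\tau},
\qquad
c(t_{1/2}) = \tfrac{1}{2}\, c_0
\;\Longrightarrow\;
t_{1/2} = \tau \ln 2 \approx 0.693\, \tau
```

Under that assumption, an agent with a time constant of 30 hours would lose half of its coherence after roughly 20.8 hours, which is why a time constant and a half life describe the same decay from two angles.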

Context windows are capacity. Token prices are cost. The time constant is stamina. In practice that means the difference between an assistant that gives a crisp answer and a colleague that spends a weekend migrating your codebase, files weekly updates, and resumes on Monday morning exactly where it left off.

Through a business lens, stamina maps to how much validated work an agent can push across the line per hour while keeping control of state and intent. This is why the winning teams will treat time as the scarce cognitive resource and design products to preserve it.

From responses to processes

Call this shift temporal agency. Intelligence stops being a response and becomes a process. An agent with temporal agency can:

- Hold a multi day plan, not just a multi step chain of thought.

- Protect its attention, so it does not forget what it is doing when the calendar changes, a login expires, or a teammate edits the spreadsheet.

- Recover gracefully after an interruption, instead of rerunning brittle steps from scratch.

- Keep a consistent style of reasoning, so progress today looks like a continuation of yesterday rather than a restart.

Imagine a claims processing agent facing a quarter end spike. On Friday it triages 8,000 documents, leaves itself checkpoints and pointers, and pauses. Over the weekend, the data warehouse changes a table name, an authentication token expires, and two folders are renamed by an administrator. On Monday, the agent rehydrates its memory, rolls forward through checkpoints, notices schema drift, fixes its queries, and resumes processing without a human re-prompt. That is temporal agency.

For teams thinking about the accounting of cognition, temporal agency naturally connects to the idea of treating memory as a balance sheet asset. We unpack that frame in our piece on memory as neural capital.

New metrics for long-horizon agents

The industry has been grading models with single shot accuracy and one off leaderboard wins. Those still matter, but they are not enough. For agents that live across time, we need metrics that measure endurance, recovery, and cost of progress.

- Throughput per hour: Completed units per hour, where a unit is defined by the business. For code migration, a unit might be a module moved and tests passing. For invoice triage, a unit might be a document classified and reconciled. Track both net throughput and rework adjusted throughput.

- Interruption recovery time: The mean time to recovery when a run is interrupted by a system event, an expired token, a meeting, or a permission prompt. Measure from the moment of interruption to the first successful resumption checkpoint with forward progress.

- Agency half life: The time it takes for an agent’s plan coherence to decay to half its initial value under real workplace noise. Coherence can be scored with a rubric: goal alignment, state consistency, and dependency correctness. Run the same plan over a 30 hour window with scheduled distractions, then plot decay.

- Drift sensitivity index: The fraction of runs that survive environment drift without human intervention. Drift events include schema changes, path renames, and access token renewals. The index tracks how many of these a plan can absorb before failing.

- Cost of progress: Dollars per validated unit over time, not per million tokens. Costs should include tool usage, retries, and human approvals. The metric that matters to a business is not cheap tokens; it is cheap progress.

- Long run stability score: Percentage of runs that finish within a budget of time and money while meeting acceptance tests. This is the new reliability badge for agent deployments.
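
Several of the metrics above reduce to simple arithmetic once a run is summarized. The sketch below is one possible way to compute throughput, cost of progress, and agency half life. The `RunSummary` fields, the function names, and the choice of linear interpolation between coherence samples are all assumptions made for illustration, not an established standard.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class RunSummary:
    """Hypothetical per-run totals; field names are illustrative assumptions."""
    hours: float            # wall clock duration of the run
    units_completed: int    # units that passed acceptance tests
    units_reworked: int     # completed units that later had to be redone
    total_cost_usd: float   # tokens + tool usage + retries + human approvals


def net_throughput(run: RunSummary) -> float:
    """Completed units per wall clock hour."""
    return run.units_completed / run.hours


def rework_adjusted_throughput(run: RunSummary) -> float:
    """Completed units per hour after subtracting units that needed rework."""
    return (run.units_completed - run.units_reworked) / run.hours


def cost_of_progress(run: RunSummary) -> float:
    """Dollars per validated unit (completed units that did not need rework)."""
    validated = run.units_completed - run.units_reworked
    if validated <= 0:
        raise ValueError("no validated units; cost of progress is undefined")
    return run.total_cost_usd / validated


def half_life_hours(samples: Sequence[Tuple[float, float]]) -> Optional[float]:
    """Estimate agency half life from (hour, coherence score) samples.

    Returns the first time the score falls to half its initial value,
    linearly interpolated between samples, or None if it never does.
    """
    _, initial = samples[0]
    target = initial / 2
    for (t_a, c_a), (t_b, c_b) in zip(samples, samples[1:]):
        if c_a > target >= c_b:
            frac = (c_a - target) / (c_a - c_b)
            return t_a + frac * (t_b - t_a)
    return None
```

A run that never drops to half its starting coherence inside the test window returns `None`, which is itself a useful result to report alongside the decay curve.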

These metrics change the shape of evaluation. Instead of a thousand disjoint prompts, you run a week long scenario with checkpoints, access controls, and live tools. You graph a timeline, not just a confusion matrix.

For organizations that care about governance and external reporting, these durability metrics complement the push for auditable model reporting. If an agent can explain what it did over 30 hours, under supervision, with cost and drift controls, it is by definition more auditable.

Architectures for continuous cognition

Long-horizon agents demand different product designs. Start with these patterns:

- Durable working memory: Give the agent a structured memory that survives process death, redeploys, and scheduled pauses. Use an append only event log for actions and observations, a small key value store for the working set, and a document store for artifacts. Keep the working set small and ruthlessly pruned to protect attention.

- Checkpoints as first class citizens: Agents should snapshot plan state, tool handles, and key artifacts on a cadence. Writing and restoring a snapshot must be idempotent so that rollback and replay are safe. Treat checkpoints like save points in a game, not like a hidden cache.

- Time aware planning: Plans should contain timeboxes, heartbeats, and dependency gates. Heartbeats assert liveness and allow supervisors to nudge or pause. Timeboxes keep an agent from getting stuck in loops by triggering review or escalation when a step overruns.

- Supervisor and subagents: Use a simple supervisor that cares about goals and budgets, and separate subagents for roles like research, tooling, and review. Subagents can fail fast without losing the mission thread.

- Idempotent tools: Tool adapters should be written as if they will be called twice after a network hiccup. Create replay tolerant operations with request identifiers and side effect journals. The goal is progress without duplication.

- Guarded autonomy: Permissions should be scoped to tasks, not to the model. A permission prompt is a checkpoint, not a nuisance. The agent should ask for the least power that completes the step and release it when done.

- Human in the loop at the boundaries: Put approvals at step boundaries where value concentrates. For example, approve a migration plan and a set of test results, not every file edit.

- Cold start ritual: On rehydration, the agent should load the last checkpoint, run self tests, and produce a brief status memo that a human can skim. Make this memo the default opening move after downtime.
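
The durable memory, checkpoint, and cold start patterns above can be sketched together in a few lines. This is a minimal illustration using only the Python standard library, not a production design. `RunStore`, `cold_start`, the file names, and the shape of the state dictionary are all hypothetical, and the self test step of the ritual is left out for brevity.

```python
import json
import os
import tempfile
from pathlib import Path


class RunStore:
    """Hypothetical on-disk store: an append only event log plus atomic checkpoints."""

    def __init__(self, run_dir: str) -> None:
        self.dir = Path(run_dir)
        self.dir.mkdir(parents=True, exist_ok=True)
        self.log_path = self.dir / "events.jsonl"
        self.checkpoint_path = self.dir / "checkpoint.json"

    def append_event(self, kind: str, payload: dict) -> None:
        # Append only: events are never rewritten, so the log can be replayed.
        with self.log_path.open("a", encoding="utf-8") as f:
            f.write(json.dumps({"kind": kind, "payload": payload}) + "\n")

    def save_checkpoint(self, state: dict) -> None:
        # Write to a temp file and rename it into place, so a crash
        # cannot leave a half-written checkpoint behind.
        fd, tmp = tempfile.mkstemp(dir=self.dir, suffix=".tmp")
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(state, f)
        os.replace(tmp, self.checkpoint_path)
        self.append_event("checkpoint", {"step": state.get("step")})

    def load_checkpoint(self) -> dict:
        # With no checkpoint on disk, start from an empty state.
        if not self.checkpoint_path.exists():
            return {"step": 0, "working_set": {}}
        return json.loads(self.checkpoint_path.read_text(encoding="utf-8"))


def cold_start(store: RunStore) -> str:
    """Rehydrate from the last checkpoint and return a short status memo."""
    state = store.load_checkpoint()
    store.append_event("rehydrated", {"step": state["step"]})
    items = len(state["working_set"])
    return f"Resuming at step {state['step']} with {items} items in the working set."
```

Saving the same state twice leaves the same checkpoint file on disk, which is the property that makes rollback and replay safe in this sketch.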

These patterns are not theoretical. They are the difference between a lab demo and a production colleague. Teams that adopt them will see sharper recovery, higher throughput, and a calmer operator experience.
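
As one example, the idempotent tools pattern can be sketched as a thin wrapper that remembers which request identifiers it has already served. `IdempotentTool` and its in-memory journal are hypothetical names chosen for this illustration. A real adapter would need a durable journal and, ideally, a downstream service that also honors the request identifier, because a crash between the action and the journal write could still cause a duplicate.

```python
from typing import Callable, Dict


class IdempotentTool:
    """Wraps a side-effecting action so a replayed request does not run twice."""

    def __init__(self, action: Callable[[dict], dict]) -> None:
        self._action = action
        # Side effect journal: request id -> recorded result.
        # In-memory for this sketch; a real journal must survive restarts.
        self._journal: Dict[str, dict] = {}

    def call(self, request_id: str, args: dict) -> dict:
        # A replay with a known request id returns the recorded result
        # instead of repeating the side effect.
        if request_id in self._journal:
            return self._journal[request_id]
        result = self._action(args)
        self._journal[request_id] = result
        return result
```

Calling `tool.call("req-1", args)` twice with the same identifier runs the underlying action once and hands back the recorded result the second time.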

Observability for agents that do not stop

If an agent is going to work for 10 to 30 hours, you need to see it work. Observability shifts from isolated traces to a living timeline.

- Hierarchical traces: Capture spans for plans, steps, tool calls, and subagent conversations. A single run should read like a book with chapters and scenes. Link traces across restarts using a stable run identifier.

- Timeline dashboards: Show progress percentage, units completed, budget burn, and risk flags on a time axis. Add markers for interrupts, approvals, and recoveries. If a product manager cannot tell what happened overnight, the timeline is incomplete.

- Reasoning visibility without leakage: Retain short summaries of the model’s thinking at each checkpoint for audit. The summaries should be scrubbed of sensitive content and stored in a way that cannot be used to attack the model later.

- Anomaly detectors: Train simple detectors on plan regularity. If tool calls spike or step durations trend upward, raise an alert, not a page. The point is to catch drift early without flooding humans.

- Replay and diff: Make it trivial to replay a run from any checkpoint, then diff outcomes. This is how you diagnose whether a fix to a prompt, a tool, or a permission actually helped.
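
A simple detector of the kind described in the list above does not need a trained model to get started. The sketch below flags steps whose duration sits far above a rolling baseline. It catches sudden spikes rather than slow trends. The function name, window size, threshold, and the 5 percent floor on the standard deviation are all assumptions picked for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def duration_alerts(
    durations: Sequence[float], window: int = 5, threshold: float = 3.0
) -> List[int]:
    """Return indexes of steps whose duration is far above the recent baseline."""
    alerts = []
    for i in range(window, len(durations)):
        baseline = durations[i - window:i]
        mu = mean(baseline)
        # Floor sigma so a perfectly flat baseline does not divide by zero
        # or turn tiny wobbles into alerts.
        sigma = max(pstdev(baseline), 0.05 * mu, 1e-9)
        if (durations[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts
```

The returned indexes can feed the alert channel rather than the pager, in keeping with the goal of catching drift early without flooding humans.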

Observability is where temporal agency becomes legible. It is also where platform strategy shows up. The operating environment that lets agents work in long, supervised sessions becomes the control point for distribution and trust, as we argued in our piece on apps, agents, and governance.

Error budgets that speak time

Traditional Site Reliability Engineering uses error budgets to balance velocity and reliability. Agents need similar contracts, but the budget is temporal.

- Continuity Service Level Objective: For every 24 hours of scheduled work, a run must spend at least 21 hours in forward progress. Pauses for approvals or nightly maintenance count as forward progress; crashes do not.

- Recovery Service Level Objective: After a planned or unplanned interruption, an agent must resume within 10 minutes with zero loss of accepted work. The default path is to load the last checkpoint and replay the minimal steps to rehydrate.

- Drift Service Level Objective: For a standard library of drift events, 95 percent of runs complete without human help. The library should include credential rotation, schema rename, and a changed folder structure.

- Cost Service Level Objective: Keep dollars per validated unit within a corridor over the run’s duration. If the agent begins thrashing, the cost curve will give you the earliest signal.
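
The four objectives above translate directly into checks a monitor could run once a day. The sketch below encodes the thresholds from the list (21 of 24 hours, 10 minutes, 95 percent) against a hypothetical `DayReport` rollup. The field names and the idea of a single daily report are assumptions for illustration, and the cost corridor bounds are supplied by the caller because the right values depend on the workload.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class DayReport:
    """Hypothetical 24 hour rollup for one agent run; field names are illustrative."""
    forward_progress_hours: float   # includes approved pauses, excludes crash time
    worst_recovery_minutes: float   # slowest resumption after any interruption
    accepted_work_lost: int         # accepted units lost across all recoveries
    drift_runs: int                 # runs exercised against the drift library
    drift_runs_unassisted: int      # of those, runs completed without human help
    cost_per_unit_usd: float        # dollars per validated unit


def check_slos(r: DayReport, cost_low: float, cost_high: float) -> Dict[str, bool]:
    """Return pass/fail for each objective."""
    return {
        "continuity": r.forward_progress_hours >= 21.0,
        "recovery": r.worst_recovery_minutes <= 10.0 and r.accepted_work_lost == 0,
        # With no drift runs recorded, the objective is treated as met.
        "drift": r.drift_runs == 0
        or r.drift_runs_unassisted / r.drift_runs >= 0.95,
        "cost": cost_low <= r.cost_per_unit_usd <= cost_high,
    }
```

For instance, a day with 37 of 40 drift runs completing unassisted (92.5 percent) would fail the drift objective even if continuity, recovery, and cost all pass.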

Put these in a runbook, then enforce them with monitors that speak the same language. The agent either stayed on plan, recovered fast, absorbed change, and kept costs steady, or it did not. There is no shortcut to durable reliability.

Where long-horizon agents land first

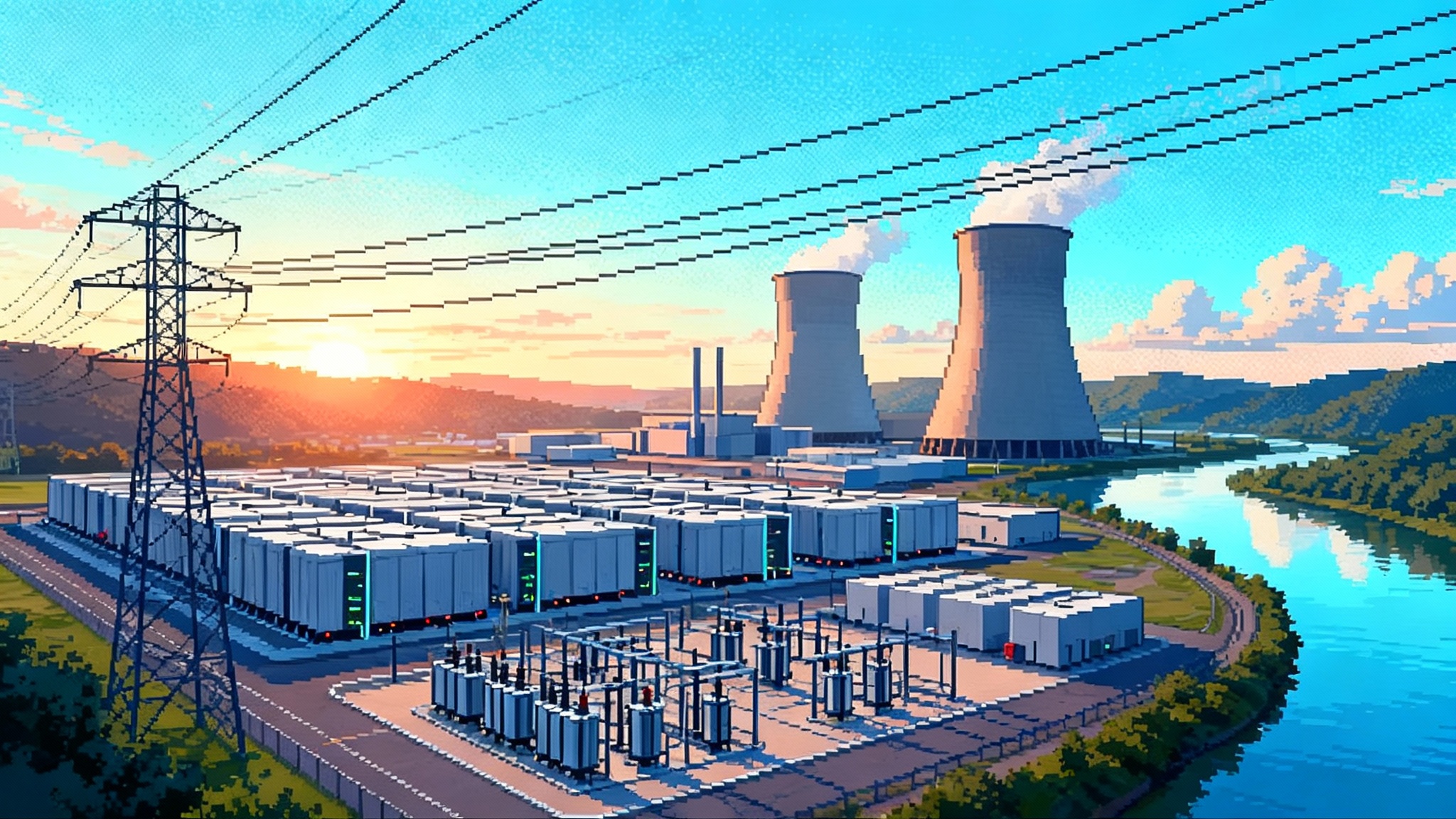

Back office time will be colonized first because the stakes are high in volume and low in real time risk. Expect wins in code modernization, data migrations, regulatory filings, invoice reconciliation, contract abstraction, and customer record cleanup. These jobs are made of predictable steps, noisy systems, and long stretches where nobody is watching. They reward stamina.

The frontier will then expand into live operations. Network operations centers will run agents that triage alerts for hours at a stretch and stage fixes behind approvals. Customer service will promote overnight responders into daytime collaborators that carry the case across shifts. Sales operations will assign pipeline grooming to an agent that preserves context across quarters. The common theme is a clock, a budget, and a supervisor.

To move responsibly, run in shadow mode first. Let the agent propose actions for a week while a human executes. Compare throughput per hour, recovery time, and plan coherence. Only then grant write permissions. Temporal agency is a capability that earns trust by surviving time, not by passing a demo.

Strategy: treat time as the scarce resource

Winners in this phase will manage time as the primary scarce resource of cognition. Here is a practical checklist to adopt now:

- Choose time dense problems. If your team measures the work in hours rather than single answers, you have a good candidate. Start with a backlog that a human hates to babysit, like code upgrades, test generation, or large data reconciliations.

- Budget time before tokens. Give every run a timebox and a heartbeat. Track dollars and minutes per unit. If the agent learns to hit the time budget, cost often follows.

- Protect attention in memory design. Keep a tight working set, prune aggressively, and favor small stable pointers over large context dumps. Attention is a scarce cognitive asset; do not waste it on retrieve and forget cycles.

- Make checkpoints unavoidable. Require a checkpoint after risky steps and before approvals. If your logs do not show save points, the agent has no past to return to.

- Build idempotent tool adapters. Design every external action to be safe on replay. Assign request identifiers, write side effect journals, and plan for retries. The goal is resilience without human cleanup.

- Assign an agent reliability engineer. Someone should own runbooks, SLOs, and drift libraries. This role is the bridge between product, security, and operations.

- Use supervisor patterns. Keep one simple overseer that understands goals and budgets, and keep role agents narrow. Complexity belongs in the toolchain, not in the mission thread.

- Measure agency half life quarterly. Run a standard 30 hour scenario with interruptions and drift, then publish the decay curve. Put it next to your conversion funnel and your latency graph.

- Practice hot restarts. Treat every restart like an airline turnaround. Load, test, announce status, and push back. The craft of restarts is where temporal agency is either won or lost.
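
The advice in the checklist above to give every run a timebox and a heartbeat can be sketched as a small step runner. This is a cooperative version: it checks the clock between work items, so it will not interrupt an item that hangs. `run_step`, `TimeboxExceeded`, and the heartbeat callback signature are hypothetical names for this illustration. The `clock` parameter is injectable so the behavior can be exercised without waiting on real time.

```python
import time
from typing import Callable, List


class TimeboxExceeded(Exception):
    """Raised when a step overruns its timebox and should be escalated for review."""


def run_step(
    work_items: List[Callable[[], None]],
    timebox_seconds: float,
    heartbeat: Callable[[int, float], None],
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Run work items in order, emitting a heartbeat after each one.

    Returns the number of items completed. Raises TimeboxExceeded if the
    time budget is used up before all items have started.
    """
    start = clock()
    done = 0
    for item in work_items:
        elapsed = clock() - start
        if elapsed > timebox_seconds:
            raise TimeboxExceeded(
                f"{done}/{len(work_items)} items done after {elapsed:.1f}s"
            )
        item()
        done += 1
        # Heartbeat reports liveness: items completed and seconds elapsed.
        heartbeat(done, clock() - start)
    return done
```

A supervisor can treat a missing heartbeat as a liveness failure and a `TimeboxExceeded` exception as the trigger for review or escalation.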

The playbook above aligns with what the market is telling us. Models are getting faster, context windows are huge, and prompts are polished. The bottleneck is no longer tokens or one off accuracy. The bottleneck is the clock.

What the Anthropic and IBM moves tell us

The September release aligned the model, the coding surface, and the Agent SDK into one story: make it easy to build long running agents that keep their head. The October enterprise push showed how this lands in real software lifecycles, with governance and security at the center, and with an IDE that expects agents to sit in the chair for the whole shift. The calendar is doing some of the talking. Product teams are tying progress to continuity, auditability, and cost per validated unit, not just a clever reply.

A closing forecast

Temporal agency will feel mundane before it feels magical. The first great products will look like nothing more than a reliable colleague who never forgets to save, always writes a status note, and picks up right where they left off. Back offices will get quieter as time sinks disappear. Then live operations will absorb the same habits.

When the market looks back on this season, we will not praise the biggest window or the cheapest token. We will ask a simpler question: whose agent made the most trusted progress per hour, kept going when the lights flickered, and treated time with respect. The companies that answer that question well will compound faster than the rest because they converted clock time into compounding work. In this new race, the stopwatch is mightier than the prompt.