After SMS, AI Search Flips From Scrape to Consent Economy

The UK’s decision to grant Google Search strategic market status resets the incentives of AI search. Expect provenance rails, compensation markets, and agent ranking protocols that reward trusted, licensed knowledge.

The hinge that moved the market

On October 10, 2025, the United Kingdom’s Competition and Markets Authority (CMA) confirmed that Google Search holds Strategic Market Status (SMS). That single designation shifts the center of gravity for AI search from scrape-first to license-by-default. Search is no longer only about relevance and speed; it is now also about provenance, permission, and the economics of reuse. In other words, the rules now explicitly consider who gets to say what is true, who gets paid when that truth is reused, and how agents choose among competing sources. See the CMA’s official announcement confirming Google Search’s SMS designation.

If the 2010s optimized for harvesting the open web and ranking it, the mid-2020s are writing a new social contract. Assistants that summarize, answer, and act must prove where knowledge came from, what the owner allows, and what price clears the reuse. That is epistemic antitrust in action.

From Napster knowledge to Spotify knowledge

The last decade of indexing looked like music before streaming. Content was abundant, rights were ambiguous, and value pooled with aggregators. With Strategic Market Status now in force for general search, the industry is rewriting its playbook. The new baseline is that important content carries credentials, signals how it can be reused, and can be licensed on metered terms. AI search can still feel instant, but under the hood it must behave more like a clearinghouse than a scrape farm.

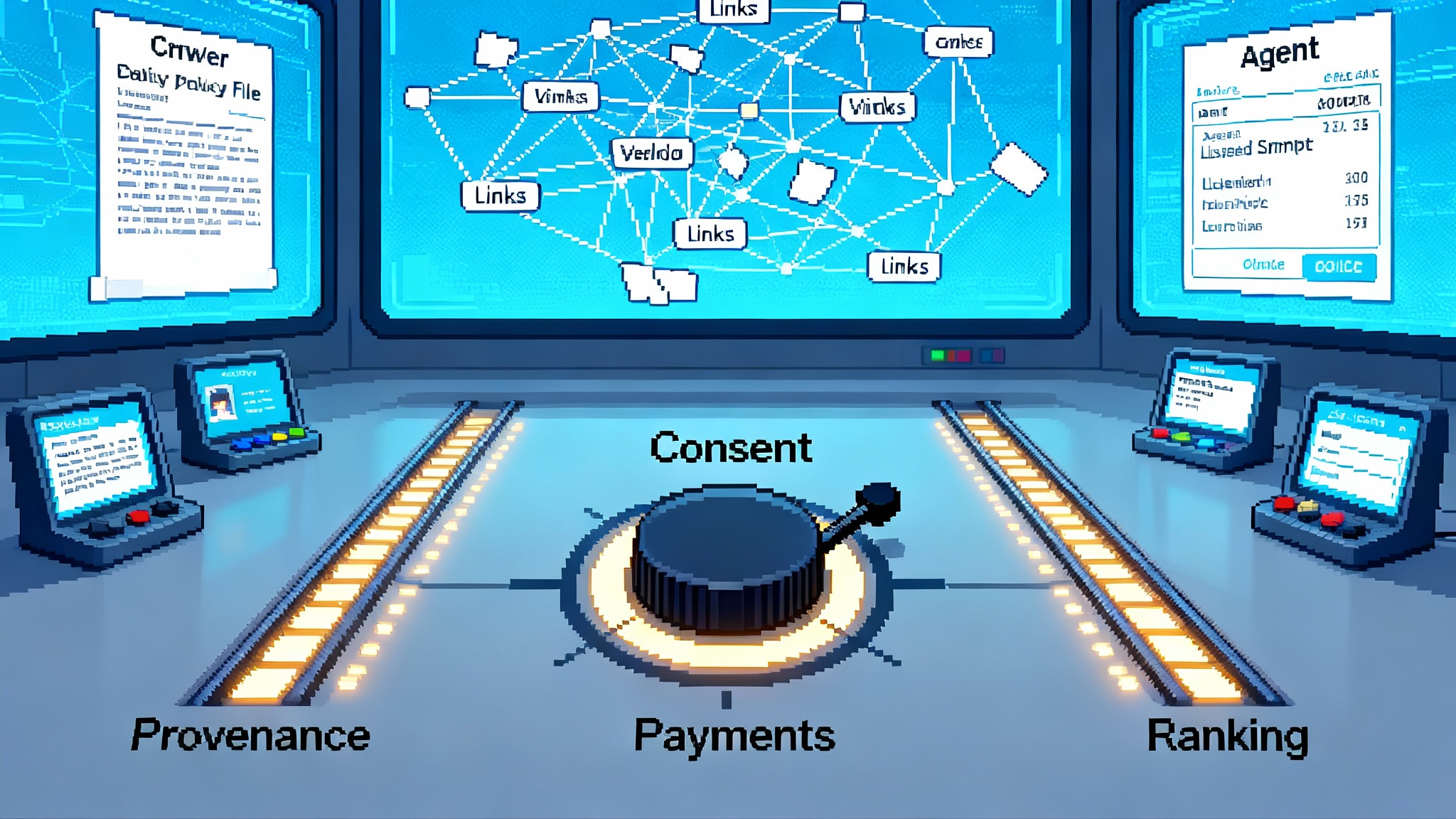

Music streaming won when provenance was reliable and licensing pipes were predictable. AI search is on the same trajectory. A three-layer stack is taking shape.

The new epistemic stack

1) Provenance rails

Provenance rails make it cheap to prove source, intent, and permission. The ingredients are already here: portable content credentials that travel with media, extended robots-style rules that are machine-readable at the fragment level, and account-level preferences that move across products. Instead of a coarse allow or disallow at the site level, publishers will express policies that map to model behavior. Snippet reuse, derivative training, question answering, and agent actions can each get separate flags with optional price tags.
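
To make the idea concrete, here is a minimal Python sketch of a fragment-level policy with a separate flag and optional price for each use. The action names (`snippet`, `training`, `qa`, `agent_action`), the `FragmentPolicy` class, and the prices are hypothetical and do not follow any published standard’s schema; treat it as an illustration, not a spec.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical action vocabulary mirroring the uses named in the text.
ACTIONS = ("snippet", "training", "qa", "agent_action")

@dataclass(frozen=True)
class ActionRule:
    allowed: bool
    price_usd: Optional[float] = None  # None means no charge when allowed

@dataclass
class FragmentPolicy:
    selector: str  # which fragment of the page this policy covers
    rules: Dict[str, ActionRule] = field(default_factory=dict)

    def decide(self, action: str) -> Tuple[bool, Optional[float]]:
        """Return (allowed, price). Actions the publisher did not list are denied."""
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        rule = self.rules.get(action)
        if rule is None or not rule.allowed:
            return False, None
        return True, rule.price_usd or 0.0

# Example: free snippets, paid question answering, no training.
policy = FragmentPolicy(
    selector="table.key-stats",
    rules={
        "snippet": ActionRule(allowed=True),
        "qa": ActionRule(allowed=True, price_usd=0.002),
        "training": ActionRule(allowed=False),
    },
)
```

Unlisted actions such as `agent_action` default to denied, which is the shift from implied to expressed reuse in code form.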

Three concrete changes follow:

- Content ships with cryptographic signatures and tamper-evident history. Publishers can attach an origin stamp, a set of permitted uses, and links to license endpoints. When an assistant ingests or cites content, it checks the stamp in milliseconds and caches the decision in a consent log.

- Robots rules evolve into “robots plus.” Policies become user-agent-aware, action-scoped, and time-limited. A news site might allow one-sentence summaries during breaking-news windows, require payment for longer extracts, and disallow training on paywalled sections, enforced at the path and selector level.

- Consent becomes portable. An organization sets defaults and overrides them per series, author, or dataset. A creator can choose to be discoverable by consumer assistants but not by developer models, or the reverse. Assistants relay these choices across surfaces so users avoid dashboard overload.
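
The check-then-cache flow in the first item can be sketched as follows, under loud assumptions: real content credentials such as C2PA manifests use public-key signatures, whereas this example substitutes a shared-secret HMAC from Python’s standard library so it stays self-contained. The stamp fields (`content_sha256`, `permitted_uses`, `license_endpoint`) and the `ConsentLog` class are hypothetical.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Stand-in key: real content credentials use public-key signatures, not a
# shared secret. HMAC keeps this sketch stdlib-only.
PUBLISHER_KEY = b"hypothetical-shared-secret"

def canonical(stamp: dict) -> bytes:
    """Serialize the stamp deterministically so signer and verifier hash the same bytes."""
    return json.dumps(stamp, sort_keys=True, separators=(",", ":")).encode("utf-8")

def sign(stamp: dict, key: bytes = PUBLISHER_KEY) -> str:
    return hmac.new(key, canonical(stamp), hashlib.sha256).hexdigest()

class ConsentLog:
    """Caches each verification decision and forces a recheck after ttl_seconds."""

    def __init__(self, ttl_seconds: float = 3600.0):
        self.ttl = ttl_seconds
        self.entries = {}  # (content_hash, signature, use) -> (decision, checked_at)

    def check(self, content: bytes, stamp: dict, signature: str, use: str,
              now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        content_hash = hashlib.sha256(content).hexdigest()
        key = (content_hash, signature, use)
        cached = self.entries.get(key)
        if cached is not None and now - cached[1] < self.ttl:
            return cached[0]
        decision = (
            hmac.compare_digest(sign(stamp), signature)        # stamp was not altered
            and stamp.get("content_sha256") == content_hash     # content matches the stamp
            and use in stamp.get("permitted_uses", [])          # requested use is permitted
        )
        self.entries[key] = (decision, now)
        return decision

body = b"Base rate cut to 4.00%"
stamp = {
    "origin": "publisher.example",
    "content_sha256": hashlib.sha256(body).hexdigest(),
    "permitted_uses": ["snippet", "qa"],
    "license_endpoint": "https://publisher.example/license",
}
sig = sign(stamp)
```

Keying the cache on content hash, signature, and use means an edited document or a different stamp misses the cache and is re-verified, while the TTL forces periodic revalidation of consent.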

Together, these rails turn provenance from a courtroom exercise into a protocol. The web stays open, but reuse shifts from implied to expressed.

2) Compensation markets

If provenance confirms who owns what, compensation markets decide how money moves. We already see revenue-share deals for snippets and metered licenses for training access. The next phase will look more like a bidding market than a static contract.

A single conversational answer can blend ten to fifty sources. In a licensed model, those sources compete to be quoted at a given price and under policies the assistant can accept. That pushes value back into the link economy in two ways:

- Links become priced access points. A high-trust medical publisher sets a per-answer fee for reuse of a diagram and a per-thousand-impression rate for extractive summaries. An assistant routes to the most cost-effective, highest-trust source that satisfies the query, similar to an ad exchange routing bids in real time.

- Publishers gain new product surfaces. Beyond paywalls and display ads, they can sell authoritative snippets, verified facts, and structured updates. A finance site licenses a daily feed of credit card rate changes. A sports publisher licenses injury updates with strict freshness windows. Payment clears when the assistant serves, not only when a user clicks.
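
A toy version of the exchange-style routing described above, assuming each publisher quotes either a per-answer fee or a per-thousand-impression (CPM) rate, and that the assistant already has its own 0 to 1 trust score for each source. `Offer`, `route`, the publisher names, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

@dataclass(frozen=True)
class Offer:
    publisher: str
    trust: float                  # 0..1, assumed to come from the assistant's own scoring
    per_answer_fee: float = 0.0   # USD each time the fragment is used in an answer
    cpm: float = 0.0              # USD per 1,000 impressions of an extractive summary

    def cost(self, impressions: int) -> float:
        """Total cost of using this fragment in one answer shown `impressions` times."""
        return self.per_answer_fee + self.cpm * impressions / 1000

def route(offers: Iterable[Offer], min_trust: float, impressions: int) -> Optional[Offer]:
    """Cheapest offer that clears the trust bar; ties go to the more trusted source."""
    eligible = [o for o in offers if o.trust >= min_trust]
    if not eligible:
        return None
    return min(eligible, key=lambda o: (o.cost(impressions), -o.trust))

offers = [
    Offer("medical-journal", trust=0.95, per_answer_fee=0.01),
    Offer("hospital-network", trust=0.90, cpm=4.0),
    Offer("health-blog", trust=0.60, cpm=0.5),
]
```

With one impression, the CPM offer costs $0.004 and beats the flat $0.01 fee; at five impressions it costs $0.02, so the flat fee wins. The low-trust blog never clears a 0.8 bar, however cheap it is.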

Compensation markets reduce legal fog and raise the average order value of content. They will not end scraping, but they change the default. If taking without asking adds risk and asking is cheap, optimizers will learn to ask first.

3) Agent-facing ranking protocols

Traditional ranking is a private art. Agents, however, are personal interfaces that will face utility-style expectations in certain contexts. Expect choice screens for default knowledge partners, negotiated snippet lengths by vertical, and clear logs of why a source appeared in an answer.

Here is how this plays out:

- Choice screens shift from engines to knowledge partners. During setup you might select a news bundle, a health bundle, and a science bundle. Each bundle names publishers and specialized models, with visibility into fees and data policies.

- Negotiated snippets become a policy variable. A news provider may allow one sentence for free, two for a nominal fee, and three with an attribution card. For evergreen explainers, a publisher allows longer quotes in exchange for persistent citation and a higher per-answer price. Agents embed these rules into ranking and layout.

- Explanations are standardized. When an answer blends six sources, the agent shows which sentence came from which publisher, with a clear price and timestamp. In a world where regulators care about gatekeeping, traceable ranking is proof of fair dealing.
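
Negotiated snippet tiers and standardized explanations fit together naturally: the agent picks the longest tier it can afford, then emits one explanation record per quoted sentence. The tier structure follows the news example above; the fee amounts, the even split of price across sentences, and every name in the code are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Sequence

@dataclass(frozen=True)
class SnippetTier:
    max_sentences: int
    price_usd: float
    attribution_card: bool = False

# The news example from the text; the fee amounts are invented for illustration.
NEWS_TIERS = [
    SnippetTier(1, 0.0),
    SnippetTier(2, 0.001),
    SnippetTier(3, 0.001, attribution_card=True),
]

def choose_tier(tiers: Sequence[SnippetTier], wanted: int,
                budget_usd: float) -> Optional[SnippetTier]:
    """Longest affordable tier that does not exceed the sentences the answer needs."""
    fits = [t for t in tiers if t.max_sentences <= wanted and t.price_usd <= budget_usd]
    return max(fits, key=lambda t: t.max_sentences, default=None)

@dataclass(frozen=True)
class Explanation:
    sentence: str
    publisher: str
    price_usd: float
    timestamp: str          # ISO 8601, UTC
    attribution_card: bool

def explain(sentences: Sequence[str], publisher: str, tier: SnippetTier) -> List[Explanation]:
    """One record per quoted sentence; the tier price is split evenly across them."""
    used = list(sentences[: tier.max_sentences])
    if not used:
        return []
    stamp = datetime.now(timezone.utc).isoformat()
    share = tier.price_usd / len(used)
    return [Explanation(s, publisher, share, stamp, tier.attribution_card) for s in used]
```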

How this reprices links

Search engines turned links into attention flows priced by auctions. Agents will turn links into licensed fragments priced by usefulness and risk. That reprices underlying content as follows:

- Freshness and exclusivity earn premiums. If your newsroom certifies a statistic was updated in the last hour, the assistant can pay more to use it early. Once the number is ubiquitous, the price falls.

- Precision beats volume. Broad coverage without provenance will be cheap and often ignored. Tight, well-structured facts with clear ownership will be expensive and frequently reused.

- Context sells. A courtroom transcript is data. A court reporter’s curated summary is a product. Agents pay more for the product because it compresses user time and reduces hallucination risk.

For publishers, one high-trust table can earn more than a hundred undifferentiated posts. For assistants, the tradeoff becomes explicit: spend one cent for the pristine number, or risk the cheaper, fuzzier aggregate.
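
That tradeoff can be written as an expected cost: license price plus the probability of a wrong value times the cost of serving it. The sketch pairs this with a freshness premium that decays with a half-life as a number becomes ubiquitous. The half-life model, error rates, and error costs are illustrative assumptions, not measured figures.

```python
from dataclasses import dataclass
from typing import Sequence

def freshness_price(base_usd: float, premium_usd: float, age_hours: float,
                    half_life_hours: float) -> float:
    """Base price plus a freshness premium that halves every half_life_hours."""
    return base_usd + premium_usd * 0.5 ** (age_hours / half_life_hours)

@dataclass(frozen=True)
class SourceOption:
    name: str
    price_usd: float
    error_rate: float   # estimated probability the value is wrong or stale

def expected_cost(option: SourceOption, error_cost_usd: float) -> float:
    """License price plus the expected cost of serving a wrong answer."""
    return option.price_usd + option.error_rate * error_cost_usd

def best_source(options: Sequence[SourceOption], error_cost_usd: float) -> SourceOption:
    return min(options, key=lambda o: expected_cost(o, error_cost_usd))

options = [
    SourceOption("pristine", price_usd=0.01, error_rate=0.01),
    SourceOption("aggregate", price_usd=0.001, error_rate=0.10),
]
```

At an error cost of $0.50, expected costs are $0.015 for the pristine source and $0.051 for the aggregate, so the one-cent number is the better buy. At $0.05 they are $0.0105 and $0.006, and the aggregate wins. With a $0.002 base, a $0.008 premium, and a one-hour half-life, the price falls from $0.010 when fresh to $0.006 after one hour and $0.004 after two.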

What this does to SEO

Search engine optimization grew around click incentives. In an agent world, the objective function changes. The new discipline looks like SLO, or supply licensing optimization. You still care about discoverability and relevance, but you also optimize for license clarity, snippet quality, and agent friendliness.

A modern checklist reads differently:

- Publish machine-readable rights at the fragment level. Make policies easy to fetch and cache.

- Ship structured facts and short, self-contained explanations. Think paragraph-grade atomic units that can be reused without distorting your work.

- Maintain eval-ready benchmarks. Provide test sets or accuracy bars so assistants can measure how your content improves answer quality.

- Track price elasticity. If your snippets never win, your price is wrong or your structure is weak. If you are always the cheapest, you may be underpricing trust.
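
The price-elasticity check in the last item can start as simply as this, assuming the publisher receives per-request outcomes from assistants: whether its snippet was used and the cheapest rival offer. That reporting feed is an assumption, and the `Auction` record and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Sequence

@dataclass(frozen=True)
class Auction:
    my_price: float            # what this publisher asked for the snippet
    won: bool                  # whether the assistant used it
    lowest_rival_price: float  # cheapest competing offer the assistant saw

def win_rate_by_price(auctions: Sequence[Auction]) -> Dict[float, float]:
    """Win rate at each asking price: a crude read on price elasticity."""
    wins, totals = defaultdict(int), defaultdict(int)
    for a in auctions:
        totals[a.my_price] += 1
        wins[a.my_price] += a.won
    return {p: wins[p] / totals[p] for p in sorted(totals)}

def diagnose(auctions: Sequence[Auction]) -> str:
    """Apply the two rules of thumb from the checklist."""
    if not auctions:
        return "no data"
    win_rate = sum(a.won for a in auctions) / len(auctions)
    if win_rate == 0:
        return "never wins: price too high or structure too weak"
    if all(a.my_price < a.lowest_rival_price for a in auctions):
        return "always cheapest: may be underpricing trust"
    return f"win rate {win_rate:.0%}"
```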

As these practices spread, ranking signals will absorb them. Assistants will learn which publishers deliver clean, reliable fragments that play well with agents. Those publishers will see sustained, more predictable revenue even if raw pageviews flatten. For a broader view of how distribution power shifts, see how assistants become native gateways.

Why vertical, trustworthy AI search accelerates under clearer rules

Regulatory clarity rarely speeds up innovation, but this time it might. With SMS in place for a core gateway, suppliers and startups can design against a stable set of constraints. That reduces compliance unknowns and frees teams to focus on products.

Vertical search benefits first. Health, finance, law, travel, and education all have strong notions of provenance and liability. When licensing and permissions are explicit, vertical assistants can justify paying for better sources and build features around them. A travel agent that guarantees real-time seat maps, a health assistant that cites current treatment guidelines, and a finance agent that binds to a licensed feed of corporate actions can launch without guessing whether rules will shift beneath them. This also complements the reality that long-horizon agents win when they can rely on durable, trusted knowledge.

Trust becomes easier to demonstrate. When answers carry durable credentials and a receipt for licensed reuse, users can see the trail. When ranking decisions are documented, regulators can audit fairness. When compensation ties to measurable quality improvements, the content market starts to look less like a fight over crumbs and more like a tiered catalog.

Near term plays for startups

Startups have a window to build the picks and shovels of the consent economy. Three plays stand out.

1) Consent aware crawlers

Build crawlers that negotiate by default. They read policies beyond robots.txt, authenticate where needed, and record every fetch as a consent event. They retry when policies change and update the cache when licenses expire. The business model is straightforward: sell access logs, policy mapping, and managed license enforcement to assistants and publishers who do not want to operate their own fleets.

Execution details:

- Implement a policy language that describes actions, scopes, durations, and prices. Map site-level rules to fragment-level behavior using selectors and templates.

- Add resolver plugins that talk to common license endpoints. Support payment rails and escrow. Handle retries and timeouts so assistants can fail soft without violating policy.

- Provide a publisher console that previews how an assistant sees content and simulates revenue under different settings.
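
A minimal sketch of such a policy language, covering actions, path and selector scopes, validity windows, and prices, with every decision recorded as a consent event. Glob matching via Python’s `fnmatch` stands in for real selector and template mapping; the rule fields, action names, and the `ExampleBot` user agent are hypothetical. The example rules encode the news-site policy described under provenance rails.

```python
import fnmatch
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Rule:
    user_agent: str                 # glob over crawler names, e.g. "*" or "ExampleBot*"
    path: str                       # glob over URL paths, e.g. "/news/*"
    selector: str                   # fragment selector, "*" for the whole page
    action: str                     # hypothetical vocabulary: "summary", "extract", "train"
    allow: bool
    price_usd: float = 0.0
    valid_from: Optional[datetime] = None
    valid_until: Optional[datetime] = None

    def matches(self, user_agent: str, path: str, selector: str, action: str,
                at: datetime) -> bool:
        in_window = ((self.valid_from is None or at >= self.valid_from)
                     and (self.valid_until is None or at < self.valid_until))
        return (in_window and self.action == action
                and fnmatch.fnmatchcase(user_agent, self.user_agent)
                and fnmatch.fnmatchcase(path, self.path)
                and fnmatch.fnmatchcase(selector, self.selector))

@dataclass(frozen=True)
class ConsentEvent:
    at: datetime
    user_agent: str
    path: str
    selector: str
    action: str
    allowed: bool
    price_usd: float

def evaluate(rules: List[Rule], user_agent: str, path: str, selector: str, action: str,
             at: datetime, log: List[ConsentEvent]) -> Tuple[bool, float]:
    """First matching rule wins; no match means deny. Every decision becomes a consent event."""
    allowed, price = False, 0.0
    for rule in rules:
        if rule.matches(user_agent, path, selector, action, at):
            allowed, price = rule.allow, (rule.price_usd if rule.allow else 0.0)
            break
    log.append(ConsentEvent(at, user_agent, path, selector, action, allowed, price))
    return allowed, price

# Free summaries during a six-hour breaking-news window, paid paragraph
# extracts, and no training on paywalled paths.
breaking = datetime(2025, 10, 10, 8, 0, tzinfo=timezone.utc)
rules = [
    Rule("*", "/premium/*", "*", "train", allow=False),
    Rule("*", "/news/*", "*", "summary", allow=True,
         valid_from=breaking, valid_until=breaking + timedelta(hours=6)),
    Rule("*", "/news/*", "article p", "extract", allow=True, price_usd=0.005),
]
```

Because no match means deny, the crawler fails closed when a policy is silent. A production crawler would still need the retry, timeout, and license-resolver pieces from the list above.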

2) Payable knowledge graphs

Turn verified, structured facts into a metered product. Ingest public records, standards, and publisher feeds. Assign provenance down to each edge. Sell query results that come with guarantees about origin and freshness. When an agent needs a safety recall date, a medication dosage range, or a corporate officer change, it asks your graph, pays cents for the answer, and receives the number with proof.

Execution details:

- Build deterministic pipelines that convert documents into graph edges with auditable lineage. Store hashes and timestamps alongside values.

- Design pricing per field, per query, and per freshness window. Offer a free discovery tier with older data; charge for current, guaranteed outputs.

- Ship an evaluator that shows how adding your graph reduces hallucinations and response time for baseline models.
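
The first two execution steps might look like this in miniature: each edge stores the hash of the document it came from plus an observation timestamp, and its price depends on how fresh it is. The `Edge` fields, the separator-joined lineage digest, and the price windows are illustrative assumptions.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass(frozen=True)
class Edge:
    subject: str
    predicate: str
    value: str
    source_sha256: str      # hash of the source document the value was extracted from
    observed_at: datetime   # when the pipeline observed the value

    def lineage_hash(self) -> str:
        """Deterministic digest over value and source; anyone holding the inputs can recompute it."""
        parts = [self.subject, self.predicate, self.value, self.source_sha256,
                 self.observed_at.isoformat()]
        # Join with the ASCII unit separator so field boundaries stay unambiguous.
        return hashlib.sha256("\x1f".join(parts).encode("utf-8")).hexdigest()

def edge_from_document(doc: bytes, subject: str, predicate: str, value: str,
                       observed_at: datetime) -> Edge:
    return Edge(subject, predicate, value, hashlib.sha256(doc).hexdigest(), observed_at)

# Hypothetical freshness windows: (maximum age, price per query in USD).
PRICE_WINDOWS = [
    (timedelta(hours=1), 0.02),
    (timedelta(days=1), 0.005),
]

def quote(edge: Edge, now: datetime) -> float:
    """Fresher answers cost more; anything older than the last window is in the free tier."""
    age = now - edge.observed_at
    for max_age, price in PRICE_WINDOWS:
        if age <= max_age:
            return price
    return 0.0

t0 = datetime(2025, 10, 1, 9, 0, tzinfo=timezone.utc)
recall = edge_from_document(b"Recall notice text", "Example Model X", "recall_date",
                            "2025-09-30", t0)
```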

3) Evals as markets

Turn evaluation into a clearing price. Create standardized tests for specific question types and verticals. Let publishers and data providers plug in content, run the evals, and see how their inclusion moves agent accuracy and user satisfaction. Pay providers from a pool allocated by assistants or advertisers in proportion to measured lift.

Execution details:

- Build open test harnesses with reproducible prompts and metrics. Allow blind runs so providers cannot game tests.

- Offer a neutral clearing service that verifies eval integrity and triggers payouts. Keep a public leaderboard and a private console with deeper diagnostics.

- Create vertical packs that help startups in regulated categories demonstrate compliance benefits. For the governance angle, align your reporting with auditable model 10-Ks.
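
Paying "in proportion to measured lift" reduces to a small calculation once a reproducible harness produces accuracy numbers. This sketch assumes exact-match accuracy on a fixed test set and one accuracy figure per provider; a real harness would likely track several metrics, and the function names are hypothetical.

```python
from typing import Dict, Sequence

def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Exact-match accuracy over a fixed, reproducible test set."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("need equal-length, non-empty prediction and gold lists")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)

def lift_payouts(baseline: float, with_provider: Dict[str, float],
                 pool_usd: float) -> Dict[str, float]:
    """Split the pool in proportion to each provider's accuracy lift over the baseline.
    Providers with zero or negative lift receive nothing."""
    lifts = {p: max(0.0, acc - baseline) for p, acc in with_provider.items()}
    total = sum(lifts.values())
    if total == 0:
        return {p: 0.0 for p in with_provider}
    return {p: pool_usd * lift / total for p, lift in lifts.items()}
```

With a 0.70 baseline, providers that reach 0.76 and 0.73 lift accuracy by 0.06 and 0.03, so a $900 pool splits into $600 and $300; a provider that lowers accuracy to 0.69 gets nothing.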

Near term plays for publishers

Publishers should not wait for perfect standards or perfect deals. Practical steps can increase revenue and resilience now.

- Ship credentials and policies. Attach content credentials at publication and publish a clear policy file that names what assistants may do at different price points.

- Atomize your best facts. Package high value tables, timelines, and glossaries as addressable fragments with version numbers. Great fragments win repeatedly in agent answers.

- Instrument snippet performance. Track where your content appears in agent answers, how often it is chosen, and at what price. Use the data to revise structure and licensing.

- Offer bundles. Group your health coverage, your explainers, and your data graphics into a single license with simple tiers. Agents prefer fewer, cleaner integrations.

- Negotiate choice screen presence. If you lead a vertical, secure a branded slot in setup flows. Visibility at setup is worth more than a million anonymous inclusions later.
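
Addressable, versioned fragments can be as simple as an ID plus a version number, with a content hash deciding when a new version is warranted. The `name@vN` address format and the `FragmentStore` class are hypothetical conventions for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class FragmentStore:
    """Keeps every published version of an addressable fragment such as a rates table."""
    history: Dict[str, List[Tuple[int, str, dict]]] = field(default_factory=dict)

    def publish(self, fragment_id: str, body: dict) -> str:
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
        versions = self.history.setdefault(fragment_id, [])
        if versions and versions[-1][1] == digest:
            return f"{fragment_id}@v{versions[-1][0]}"  # unchanged content keeps its version
        version = len(versions) + 1
        # Store a snapshot so later mutation of `body` cannot rewrite history.
        versions.append((version, digest, json.loads(canonical)))
        return f"{fragment_id}@v{version}"

    def get(self, address: str) -> dict:
        fragment_id, _, version = address.rpartition("@v")
        for number, _digest, body in self.history.get(fragment_id, []):
            if number == int(version):
                return body
        raise KeyError(address)
```

Republishing identical content, even with keys in a different order, keeps the same address, so an agent citing `card-rates@v1` keeps pointing at the numbers it actually quoted.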

Practical implications for product teams

If you build assistants or search products, your retrieval and ranking pipeline needs new terms of trade.

- Add a budget variable to your reranker so it can choose among sources on price as well as relevance and trust.

- Add a provenance gate that fails closed when policies are missing or ambiguous. The default should be: do not reuse unless rights are clear.

- Add an explanation bundle that shows users and auditors the why behind every answer, including which snippets were eligible and which were priced out.

- Add license-aware caching and expiry so you can revalidate consent on schedule and avoid serving stale rights.

- Add evaluation hooks that measure how each source changes correctness, latency, and user satisfaction. Pay in proportion to lift.
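
Several of these additions can be combined in one selection step. The sketch ranks candidates on relevance and trust, treats price as a hard budget, fails closed when a policy is missing, and records why each source was selected, priced out, or excluded. It is a greedy heuristic rather than an optimal knapsack solution, and the scoring weight, field names, and `select_sources` function are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Candidate:
    source: str
    relevance: float             # 0..1, from the existing reranker
    trust: float                 # 0..1
    price_usd: Optional[float]   # None means the policy is missing or ambiguous

def select_sources(candidates: List[Candidate], budget_usd: float, k: int = 3,
                   trust_weight: float = 0.5
                   ) -> Tuple[List[Candidate], float, List[Tuple[str, str]]]:
    """Greedy, budget-constrained selection with a fail-closed provenance gate.
    Returns the chosen sources, total spend, and a per-source explanation."""
    ranked = sorted(candidates, key=lambda c: c.relevance + trust_weight * c.trust,
                    reverse=True)
    chosen: List[Candidate] = []
    spent = 0.0
    explanation: List[Tuple[str, str]] = []
    for c in ranked:
        if c.price_usd is None:
            explanation.append((c.source, "excluded: rights unclear"))
        elif len(chosen) >= k:
            explanation.append((c.source, "eligible, not needed"))
        elif spent + c.price_usd > budget_usd:
            explanation.append((c.source, "priced out"))
        else:
            chosen.append(c)
            spent += c.price_usd
            explanation.append((c.source, f"selected at ${c.price_usd:.3f}"))
    return chosen, spent, explanation
```

The explanation list doubles as the audit trail the third item asks for: it names both the snippets that were eligible and the ones that were priced out or blocked.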

These practices line up with the regulator’s early plans, previewed in the mid-year consultation. For context, review the CMA roadmap of potential actions that preceded the October decision.

What to watch next

Three signals will tell you the consent economy is sticking.

- Choice screens appear in agent onboarding outside of mobile operating systems. When users pick knowledge bundles up front, licensing becomes a first class design variable.

- Publisher dashboards start to look like ad servers. Expect frequency caps for snippet reuse, dayparting for price, and flags for freshness windows.

- Developer kits add consent primitives. Retrieval libraries include policy resolvers and payment hooks as standard components, not as bolt-ons.

The opportunity hidden inside regulation

Rules can feel like brakes, but sometimes they are the curves that let us go faster. With Strategic Market Status in view, the market can define lanes for provenance, compensation, and ranking. Builders can target clear problems. Publishers can price their best work. Users can see where answers come from and decide who deserves their trust.

We are moving from a world where the best crawler wins to a world where the best contract, the best provenance, and the best evaluation win. That future is not only fairer, it is more legible. When knowledge has a receipt, agents can act boldly. When ranking has a reason, regulators can judge fairly. When creators get paid for what they know, the web gets sharper. The consent economy is not a detour from speed. It is the road that lets AI search go the distance.