Agent Bricks turns your lakehouse into production agents

Databricks Agent Bricks promises a measured path from lakehouse data to production AI agents. Here is what shipped, why it matters, a week-by-week playbook, and how to launch with governance, observability, and cost control.

The breakthrough, in plain English

At the Data + AI Summit in San Francisco on June 11, 2025, Databricks introduced Agent Bricks, a workflow for building enterprise AI agents that are optimized on your own governed data. The pitch is direct: describe the job you want an agent to perform, point it at approved lakehouse data, and let the platform generate task-aware evaluations, fill data gaps with safe synthetic samples, and search the design space for the best combination of model, prompts, retrieval, tools, and guardrails. The goal is a production-grade agent positioned on a visible cost and quality frontier, not a fragile prototype. Databricks summarized this in its official press release for Agent Bricks.

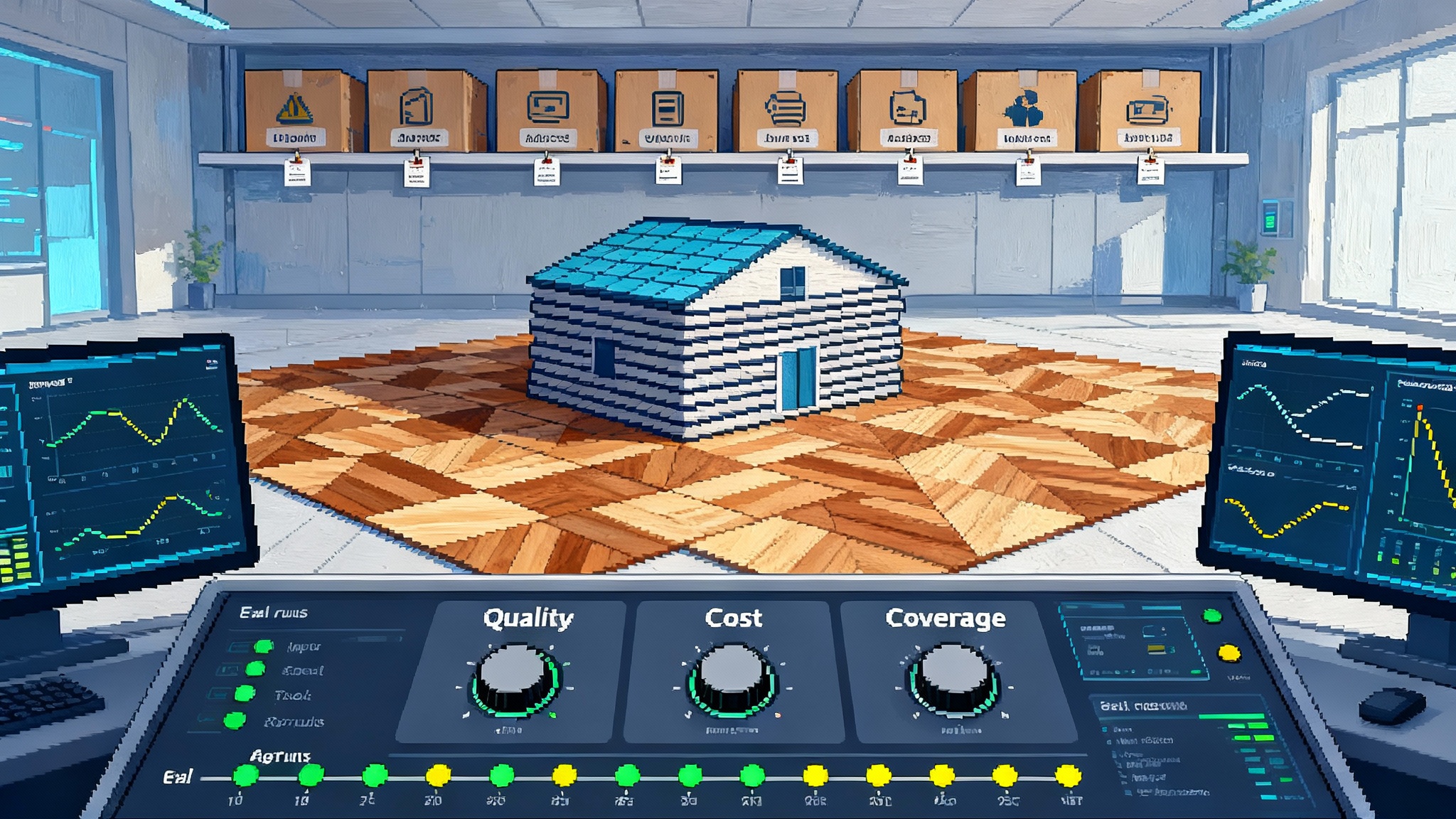

Why it matters right now: most enterprise agent projects stall for three reasons. First, there is no reliable yardstick for quality beyond spot checks. Second, teams lack enough task-specific data to make improvements without risking data leakage or privacy violations. Third, what looks fast and cheap in a notebook becomes slow and expensive under real traffic. Agent Bricks attacks all three with built-in evals, domain-tuned synthetic data, and optimization that treats cost and quality as first-class design variables.

Think of it like a wind tunnel for AI agents. Instead of hand tuning prompts on a dozen test inputs, you run the agent through a controllable tunnel that simulates realistic airflow. The tunnel measures lift, drag, and stability under many conditions. Then it recommends a design that meets your constraints, whether you care most about accuracy, latency, or unit cost.

What actually shipped

Agent Bricks is not a single button. It is a workflow that stitches together components many teams have been assembling by hand:

- Task-aware evaluations. The system generates domain-specific evals and LLM judges that score outputs against goals you define. Evals are code: they are versioned, reproducible, and auditable.

- Synthetic data generation. When labeled examples are limited, Agent Bricks creates synthetic inputs that mimic your data distribution. This lets you probe edge cases without moving sensitive records off-platform.

- Cost and quality optimization. The workflow searches over models, prompts, retrieval strategies, and tool configurations to find Pareto-optimal variants. You choose a point along a visible cost-quality curve.

- Multi-agent orchestration. The workflow supports patterns in which narrow agents coordinate, such as an extractor plus a verifier plus a summarizer. Orchestrations are packaged like other assets and can be versioned and redeployed.

- MLflow-style observability. Traces, evaluations, feedback, and versions are tracked end-to-end. You can reproduce a problematic output weeks later and see the exact prompt, tools, and data snapshot that produced it. Databricks positions this as part of MLflow 3.0; see the MLflow 3.0 unified observability overview.
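Agent Bricks runs its own search, and its internals are not described here. To make "Pareto-optimal" concrete, here is a minimal Python sketch, assuming each candidate variant has already been scored on cost per unit and eval quality. The `Variant` type, variant names, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    """A candidate agent configuration with its measured eval results (hypothetical)."""
    name: str
    cost_per_unit: float  # e.g. USD per document; lower is better
    quality: float        # e.g. eval pass rate in [0, 1]; higher is better


def dominates(a: Variant, b: Variant) -> bool:
    """True if a is no worse than b on both axes and strictly better on at least one."""
    no_worse = a.cost_per_unit <= b.cost_per_unit and a.quality >= b.quality
    strictly_better = a.cost_per_unit < b.cost_per_unit or a.quality > b.quality
    return no_worse and strictly_better


def pareto_frontier(variants: list[Variant]) -> list[Variant]:
    """Keep the variants that no other variant dominates, ordered from cheapest."""
    frontier = [v for v in variants if not any(dominates(o, v) for o in variants)]
    return sorted(frontier, key=lambda v: v.cost_per_unit)


candidates = [
    Variant("large-model", 0.050, 0.97),
    Variant("small-model+tools", 0.012, 0.95),
    Variant("small-model", 0.010, 0.88),
    Variant("mid-model", 0.030, 0.94),  # costlier and worse than small-model+tools
]
frontier = pareto_frontier(candidates)
```

Here `mid-model` drops out because `small-model+tools` is both cheaper and better; the remaining three are the real choices, and picking among them is a business decision about how much each extra point of quality is worth.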

If you are already on the lakehouse, the fit is pragmatic. Unity Catalog governs data, credentials, prompts, and agent artifacts. Jobs schedule evals, regression runs, and red teams. Lakehouse Federation and Lakebase can bring in operational tables when needed. The emphasis is less on a model menu and more on a measured path from intent to a monitored, reproducible agent.

Where it shines in the near term

Do not picture a sci-fi general intelligence. Picture specific wins that most enterprises can achieve this quarter:

- Structured extraction at scale. Emails, contracts, claims, purchase orders, bills of lading, and clinical notes. Outputs land in tables with confidence scores and trace links.

- Knowledge assistance with receipts. Grounded answers that cite which rows or documents were used, with a verifier agent that flags low confidence spans.

- Text transformation with policy. Sensitive data redaction, tone transformation for customer replies, and regulatory formatting for disclosures.

- Case routing and triage. Agent clusters that classify, draft actions, and escalate with human-in-the-loop checkpoints.

Each case benefits from task-aware evals and a cost-quality curve that lets you pick the right tradeoff for production rather than for a demo.

How Agent Bricks compares to other stacks

The production agent market is converging on similar principles: eval-first development, strong governance, and observable traces. Databricks leans into lakehouse-native integration. If you are evaluating options, compare these patterns:

- Google emphasizes a builder flow and policy checks that help teams cross the last mile to deployment. For a complementary perspective, see our take on Google's Agent Builder playbook.

- Salesforce focuses on the front office, packaging agents directly into CRM workflows. For go-to-market teams, review the Salesforce Agentforce 360 blueprint.

- Many teams deploy agents at the edge for latency, cost, and data control. If that is your priority, read our analysis of Cloudflare's edge deployment path.

Agent Bricks is best suited when your critical data already lives under Unity Catalog and your teams rely on MLflow for lineage, governance, and experimentation.

A week-by-week playbook from prototype to production

The plan below assumes you have data in the lakehouse and Unity Catalog in place. Adjust the scope to your team size, but hold the cadence. The theme is eval-first development: treat evals like the test suite for a software service and make them the gate for every change.

Week 0: alignment and guardrails

- Define the business task, the unit of work, and the agent’s boundary of responsibility. A unit might be one email processed, one invoice extracted, or one customer reply drafted.

- Agree on a starting service level objective (SLO). For example: 95 percent field-level accuracy on five required fields, a median latency under 2 seconds, and a cost under 2 cents per document.

- Inventory governed data sources and decide which columns or fields are allowed for grounding. Map retention and masking rules. Reserve a private holdout slice.
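Writing the Week 0 SLO down as an executable check keeps it from drifting into a slide. A minimal Python sketch, assuming each eval case yields a per-document record; the `DocResult` shape is hypothetical, and "under 2 cents per document" is interpreted here as a batch mean, which is an assumption you may want to replace with a percentile.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Slo:
    """Starting SLO from the Week 0 example."""
    min_field_accuracy: float = 0.95    # share of required fields extracted correctly
    max_median_latency_s: float = 2.0   # strict upper bound on median latency
    max_cost_per_doc_usd: float = 0.02  # strict upper bound on mean cost per document


@dataclass(frozen=True)
class DocResult:
    """Eval outcome for one document (hypothetical record shape)."""
    fields_correct: int
    fields_required: int
    latency_s: float
    cost_usd: float


def check_slo(results: list[DocResult], slo: Slo) -> dict[str, bool]:
    """Return pass/fail per SLO dimension over a batch of eval results."""
    accuracy = sum(r.fields_correct for r in results) / sum(r.fields_required for r in results)
    median_latency = median(r.latency_s for r in results)
    mean_cost = sum(r.cost_usd for r in results) / len(results)
    return {
        "accuracy": accuracy >= slo.min_field_accuracy,
        "latency": median_latency < slo.max_median_latency_s,
        "cost": mean_cost < slo.max_cost_per_doc_usd,
    }


batch = [
    DocResult(5, 5, 1.2, 0.011),
    DocResult(4, 5, 1.8, 0.015),
    DocResult(5, 5, 2.6, 0.019),
]
status = check_slo(batch, Slo())
```

On this toy batch, latency and cost pass, but field-level accuracy (14 of 15 fields) misses the 95 percent target, so the agent is not yet within its SLO.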

Week 1: eval first foundation

- Create seed evals that mirror production tasks. Start with 50 to 200 real examples if you have the necessary consent and permissions; otherwise, use templated examples that capture structure and tricky cases. Tag each case with scenario labels such as language variant, document subtype, and length.

- Use Agent Bricks to autogenerate domain-specific judges that score results. Make the fail conditions explicit. For example: a wrong tax ID is a hard fail even if every other field matches.

- Establish a simple baseline agent: a single model with a plain prompt, minimal retrieval, and no tools yet. Run the baseline against the evals and record its initial cost and quality.
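Agent Bricks generates LLM judges for you, but the idea of an explicit fail condition is easiest to see in a deterministic, code-based check. The Python sketch below is not the platform's judge format; the field names, the `HARD_FAIL_FIELDS` set, and the 0.8 pass threshold are all hypothetical.

```python
HARD_FAIL_FIELDS = frozenset({"tax_id"})  # hypothetical: a mismatch here fails the case outright
MIN_FIELD_SCORE = 0.8                     # hypothetical pass threshold for the remaining fields


def normalize(value: str) -> str:
    """Trim whitespace and case so trivial formatting differences do not count as errors."""
    return value.strip().lower()


def judge_extraction(expected: dict[str, str], predicted: dict[str, str]) -> dict:
    """Score one extraction case field by field, with explicit hard-fail fields."""
    mismatched = [
        name for name, want in expected.items()
        if normalize(predicted.get(name, "")) != normalize(want)
    ]
    hard_fail = any(name in HARD_FAIL_FIELDS for name in mismatched)
    score = (len(expected) - len(mismatched)) / len(expected)
    return {
        "score": score,
        "mismatched": mismatched,
        "hard_fail": hard_fail,
        "passed": not hard_fail and score >= MIN_FIELD_SCORE,
    }


expected = {
    "vendor": "Acme Corp",
    "tax_id": "12-3456789",
    "total": "1040.00",
    "currency": "USD",
    "invoice_date": "2025-06-11",
}
wrong_tax_id = {**expected, "tax_id": "12-3456780"}
wrong_date = {**expected, "invoice_date": "2025-06-12"}
```

`judge_extraction(expected, wrong_tax_id)` fails even though four of five fields match, while `judge_extraction(expected, wrong_date)` passes with a score of 0.8. That asymmetry is exactly what an explicit fail condition encodes.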

Week 2: synthetic data and frontier finding

- Generate synthetic data to broaden coverage. Focus on tail cases from Week 1 errors. Add multilingual variants, rare document layouts, and adversarial inputs that trigger hallucinations.

- Let Agent Bricks search the design space. Enable retrieval variations, different models, and prompt templates. Constrain the budget so you explore dozens of variants, not thousands.

- Choose a candidate on the Pareto frontier. Document the tradeoff and set it as the current best. Lock the eval suite and point a nightly job at it.
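"Explore dozens of variants, not thousands" amounts to a fixed search budget over the design space. The Python sketch below uses uniform random sampling from a full grid, a deliberately simple stand-in; the platform's actual search strategy may differ. The option names in `DESIGN_SPACE` are hypothetical.

```python
import itertools
import random

# Hypothetical design space; real option names depend on your models and prompts.
DESIGN_SPACE = {
    "model": ["small", "medium", "large"],
    "prompt": ["terse", "few_shot", "checklist"],
    "retrieval_k": [0, 4, 8, 16],
    "verifier": [False, True],
}


def sample_variants(space: dict[str, list], budget: int, seed: int = 0) -> list[dict]:
    """Enumerate the full grid, then draw at most `budget` distinct configurations."""
    keys = sorted(space)
    grid = [dict(zip(keys, combo)) for combo in itertools.product(*(space[k] for k in keys))]
    rng = random.Random(seed)  # fixed seed so the same run can be reproduced later
    return rng.sample(grid, min(budget, len(grid)))


variants = sample_variants(DESIGN_SPACE, budget=24)
```

The full grid here has 3 x 3 x 4 x 2 = 72 configurations; a budget of 24 keeps the run to dozens, and the fixed seed means the same candidates can be re-evaluated when the eval suite changes.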

Week 3: tool use and multi agent orchestration

- Introduce tools that reduce mistakes. Common wins are a schema validator, a policy checker, or a calculator for totals and taxes. Tools can cut cost by allowing a smaller core model.

- Add a verifier agent. Let one agent do the extraction, then have a second agent check consistency and either fix the output or flag it for review. This pattern can lift accuracy without doubling cost.

- Expand evals to capture tool-specific failure modes, including broken links, timeouts, and malformed tool outputs. Give the eval runner a chaos mode that randomly degrades a tool to simulate outages.
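A chaos mode can be as small as a wrapper around each tool. The Python sketch below is one way to do it under stated assumptions: tools are plain callables returning dicts, and `check_totals` is a hypothetical calculator tool, not a Databricks API. It injects both a timeout and a malformed payload, the two failure modes named above.

```python
import random
from typing import Any, Callable

Tool = Callable[..., dict[str, Any]]


def chaos_wrap(tool: Tool, failure_rate: float, rng: random.Random) -> Tool:
    """Wrap a tool so that a `failure_rate` share of calls simulate an outage.

    Half of the injected failures raise TimeoutError; the other half return a
    malformed payload, so both failure modes show up in eval runs.
    """
    def wrapped(*args: Any, **kwargs: Any) -> dict[str, Any]:
        roll = rng.random()
        if roll < failure_rate / 2:
            raise TimeoutError(f"chaos: simulated timeout in {tool.__name__}")
        if roll < failure_rate:
            return {"error": "malformed"}  # missing the fields callers expect
        return tool(*args, **kwargs)
    return wrapped


def check_totals(line_items: list[float], stated_total: float) -> dict[str, Any]:
    """Hypothetical calculator tool: does the stated total match the line items?"""
    return {"ok": abs(sum(line_items) - stated_total) < 0.005}


flaky_check = chaos_wrap(check_totals, failure_rate=0.2, rng=random.Random(7))
```

Point the eval runner at `flaky_check` instead of `check_totals`, then assert that the agent retries, degrades gracefully, or flags the case rather than emitting a confident answer.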

Week 4: grounding loops and human in the loop

- Add a grounding feedback loop. Store production traces with inputs, retrieved context, tool calls, outputs, latency, and cost. Sample a slice each day and feed it back into the eval set.

- Create a review queue for low confidence or high risk cases. Use MLflow metrics to set thresholds. Measure reviewer load and time to resolution.

- Red-team the agent. Author adversarial tests that try to push the agent into policy violations, and add them as permanent evals with zero tolerance.
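The review-queue rule can be stated in a few lines, which also makes reviewer load measurable before launch. A minimal Python sketch, assuming each agent output carries a calibrated confidence score and optional risk tags; the tag names, the `AgentOutput` shape, and the thresholds are hypothetical, and in practice you would set the threshold from metrics tracked in MLflow.

```python
from dataclasses import dataclass

HIGH_RISK_TAGS = frozenset({"refund_over_limit", "legal_hold"})  # hypothetical tags


@dataclass(frozen=True)
class AgentOutput:
    case_id: str
    confidence: float                    # hypothetical calibrated score in [0, 1]
    risk_tags: frozenset[str] = frozenset()


def route(output: AgentOutput, min_confidence: float) -> str:
    """Send high-risk or low-confidence cases to human review; auto-apply the rest."""
    if output.risk_tags & HIGH_RISK_TAGS:
        return "review"
    if output.confidence < min_confidence:
        return "review"
    return "auto"


def review_rate(outputs: list[AgentOutput], min_confidence: float) -> float:
    """Share of cases routed to reviewers, a direct proxy for reviewer load."""
    routes = [route(o, min_confidence) for o in outputs]
    return routes.count("review") / len(routes)


day = [
    AgentOutput("c1", 0.95),
    AgentOutput("c2", 0.70),
    AgentOutput("c3", 0.99, frozenset({"legal_hold"})),
    AgentOutput("c4", 0.90),
]
```

Raising the threshold from 0.85 to 0.95 moves this toy batch from 50 to 75 percent review. That is the load-versus-risk tradeoff the threshold controls, and it is worth tracking alongside time to resolution.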

Week 5: governance, SLOs, and preproduction

- Promote prompts, datasets, tools, and agents to Unity Catalog with versioned lineage. Require approvals for changes to anything that affects user-facing outputs.

- Define error budgets for cost and quality and wire alerting. For example: if median cost per unit rises more than 20 percent for a day, page the owner. If the quality score drops below 94 percent on the daily run, block deployment.

- Launch a preproduction canary. Mirror a small percentage of live traffic to the agent and compare decisions to the current process. Measure drift between preproduction and evals.
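The two example error-budget rules translate directly into code. A minimal Python sketch, assuming today's numbers are compared against a baseline such as the trailing week's median (a hypothetical choice); wiring to a pager or deployment gate is out of scope, so the function only returns action names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DailyRun:
    median_cost_per_unit: float  # USD
    quality_score: float         # daily eval score in [0, 1]


def budget_actions(
    baseline: DailyRun,
    today: DailyRun,
    max_cost_rise: float = 0.20,
    min_quality: float = 0.94,
) -> list[str]:
    """Apply the example rules: page on a >20% cost rise, block below 94% quality."""
    actions = []
    cost_rise = (today.median_cost_per_unit - baseline.median_cost_per_unit) / baseline.median_cost_per_unit
    if cost_rise > max_cost_rise:
        actions.append("page_owner")
    if today.quality_score < min_quality:
        actions.append("block_deployment")
    return actions


baseline = DailyRun(median_cost_per_unit=0.015, quality_score=0.96)
```

A day at 0.019 USD per unit (about a 27 percent rise) with quality at 0.955 pages the owner but does not block deployment; a day at 0.016 USD with quality at 0.93 does the reverse.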

Week 6: production launch and rollback drills

- Go live behind a feature flag. Start at 5 percent of traffic, ramp to 25 percent, then 75 percent, then 100 percent. Hold a rollback playbook and practice it. You should be able to revert to the previous agent version in minutes.

- Publish an owner’s manual. Document the unit cost, SLOs, known failure modes, escalation paths, and how to file feedback. Treat the agent like a service with on-call ownership.

- Archive a golden run. Freeze an eval set and versions for a verifiable snapshot. This becomes the audit anchor if a regulator or partner asks how the system behaved on a specific date.
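The 5, 25, 75, 100 percent ramp needs a stable assignment of traffic to the new agent. A common technique behind a feature flag is deterministic hash bucketing, sketched below in Python; nothing here is an Agent Bricks API, and the salt and unit IDs are hypothetical.

```python
import hashlib

RAMP_STEPS = (5, 25, 75, 100)  # percent of traffic at each rollout stage


def bucket(unit_id: str, salt: str = "agent-v2") -> int:
    """Map a unit of work to a stable bucket in [0, 100) using a salted hash."""
    digest = hashlib.sha256(f"{salt}:{unit_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_new_agent(unit_id: str, percent: int, salt: str = "agent-v2") -> bool:
    """True if this unit goes to the new agent at the current rollout percentage.

    Setting percent to 0 is the rollback: every unit returns to the previous version.
    """
    return bucket(unit_id, salt) < percent
```

Because buckets are stable, every unit in the 5 percent cohort stays in the 25 percent cohort, so widening the ramp never flips a unit back and forth, and a rollback is a single configuration change.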

Week 7 and beyond: continuous improvement

- Refresh evals weekly from production traces. Keep a private holdout that never enters the training or synthesis loop.

- Schedule quarterly red-team exercises for new failure modes, new languages, and policy updates. Update kill switches for tool outages and model degradations.

- Explore multi-agent specializations. Split the task into narrower subtasks and let a router dispatch each input. Compare the result to the single-agent baseline on cost and quality.
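A router for specialist agents can stay small if it also records which route handled each input, since that label is what lets you compare each specialist against the single-agent baseline. A minimal Python sketch; the keyword classifier and lambda agents are hypothetical stand-ins for model-backed components.

```python
from typing import Callable

Agent = Callable[[str], str]


def make_router(
    classify: Callable[[str], str],
    specialists: dict[str, Agent],
    fallback: Agent,
) -> Callable[[str], tuple[str, str]]:
    """Build a router that dispatches each input to a specialist agent by label.

    Unknown labels go to the fallback (for example, the single-agent baseline).
    The route name is returned with the output so cost and quality can be
    compared per route against the baseline.
    """
    def route(doc: str) -> tuple[str, str]:
        label = classify(doc)
        if label in specialists:
            return label, specialists[label](doc)
        return "fallback", fallback(doc)
    return route


# Hypothetical toy classifier and agents, standing in for model-backed components.
def classify(doc: str) -> str:
    text = doc.lower()
    if "invoice" in text:
        return "invoice"
    if "contract" in text:
        return "contract"
    return "other"


router = make_router(
    classify,
    specialists={
        "invoice": lambda d: "invoice fields extracted",
        "contract": lambda d: "contract clauses extracted",
    },
    fallback=lambda d: "baseline agent output",
)
```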

Guardrails, governance, and observability

The subtle shift with Agent Bricks is that it treats evals, judges, and traces as first-class artifacts tied to Unity Catalog. That creates a clean path for auditability and change management. MLflow 3.0 extends this with richer traces, judge definitions, and feedback capture, so you can answer questions like these: which version of the prompt produced this output, which tables were read, which tool timed out, and how much did this decision cost? For a deeper overview, see the MLflow 3.0 unified observability announcement.
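MLflow 3.0 records traces in its own schema, which is not reproduced here. As a hypothetical illustration only, the flattened Python record below shows the minimum a trace has to carry to answer those four questions; field names, version strings, and table names are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    name: str
    latency_s: float
    timed_out: bool


@dataclass(frozen=True)
class TraceRecord:
    """Hypothetical flattened trace: enough to answer the four audit questions."""
    trace_id: str
    agent_version: str
    prompt_version: str                 # which prompt produced this output
    tables_read: tuple[str, ...]        # which tables were read
    tool_calls: tuple[ToolCall, ...]    # which tool timed out
    cost_usd: float                     # what this decision cost

    def timed_out_tools(self) -> list[str]:
        return [call.name for call in self.tool_calls if call.timed_out]


trace = TraceRecord(
    trace_id="tr-0001",
    agent_version="invoice-agent:7",
    prompt_version="extract-prompt:12",
    tables_read=("finance.ap.invoices", "finance.ap.vendors"),
    tool_calls=(
        ToolCall("schema_validator", 0.04, False),
        ToolCall("tax_lookup", 5.00, True),
    ),
    cost_usd=0.0138,
)
```

The record is frozen on purpose: an audit trail that can be edited after the fact does not answer anything.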

A practical tip: treat every change to prompts, tool routing, and retrieval constraints like a code change in a regulated service. Require review. Pin versions. Attach a ticket number. If something goes wrong at 2 a.m., the incident commander should be able to reconstruct the exact state and roll back within minutes.

Risks you should plan for and how to mitigate them

- Eval drift. Your eval suite stops reflecting real traffic. Quality looks stable in tests but drops for users. Mitigation: refresh evals weekly from production traces, keep a private holdout set, and monitor a drift score between live inputs and eval distributions. Add shadow evals that are never used during optimization.

- Governance gaps. Prompts, tools, and routing logic change without approvals. A helpful workaround becomes a dependency. Mitigation: store everything as versioned assets in Unity Catalog. Require code review for prompt and tool changes. Use deployment jobs that block promotion unless eval thresholds are met.

- Cost overruns. A model change or vendor latency spike raises unit cost. A background job starts hammering the agent. Mitigation: set unit cost budgets and alerts. Add rate limits and concurrency caps. Prefer tool-assisted smaller models when quality is equal. Consider a fallback mode that skips expensive tools when they are not strictly needed.

- LLM judge bias and brittle evals. Automated judges drift or learn to pass a pattern rather than the underlying task. Mitigation: mix automated judges with a small, rotating panel of human reviewers. Calibrate judges on a gold set. Randomize prompt formats to discourage overfitting.

- Privacy and leakage. Synthetic data or traces leak sensitive details, or agents over-retrieve sensitive columns. Mitigation: use masking and retention rules at the table level. Only allow retrieval from approved columns. Separate noisy sandbox runs from production and scrub traces before sharing.

- Vendor and model drift. External model providers update behavior and silently change outputs. Mitigation: pin model versions, run daily regression evals, and rehearse rollback to a previous agent build. Keep a second independent model as a dark launch fallback for critical tasks.
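The "drift score between live inputs and eval distributions" under eval drift can take many forms. One widely used option for categorical features such as the scenario labels from Week 1 is the population stability index (PSI), sketched here in Python; choosing PSI, and the smoothing constant `eps`, are assumptions rather than anything Agent Bricks prescribes.

```python
import math
from collections import Counter
from collections.abc import Iterable


def psi(eval_labels: Iterable[str], live_labels: Iterable[str], eps: float = 1e-4) -> float:
    """Population stability index between two categorical distributions.

    Each category contributes (q - p) * ln(q / p), where p is its share in the
    eval set and q its share in live traffic; `eps` avoids log(0) for
    categories missing from one side.
    """
    p_counts, q_counts = Counter(eval_labels), Counter(live_labels)
    n_p, n_q = sum(p_counts.values()), sum(q_counts.values())
    score = 0.0
    for category in set(p_counts) | set(q_counts):
        p = max(p_counts[category] / n_p, eps)
        q = max(q_counts[category] / n_q, eps)
        score += (q - p) * math.log(q / p)
    return score


eval_langs = ["en"] * 80 + ["de"] * 20
live_langs = ["en"] * 50 + ["de"] * 50
drift = psi(eval_langs, live_langs)
```

Here the eval set is 80/20 English/German while live traffic is 50/50, which gives a PSI of about 0.42. A commonly cited rule of thumb treats values above 0.25 as a significant shift, but calibrate the alert threshold on your own history.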

What buyers should ask on day one

- Show the cost and quality frontier for this task, with models and prompts listed.

- Show the evals and the gold holdout set. How often are they refreshed from production traces, and who approves changes?

- Show a trace for a failed case and the exact versions of prompts, tools, and data used.

- Show the rollback drill. How fast can we revert to a previous agent?

- Show how access to data sources is governed. Which fields are permitted for retrieval?

If a platform cannot demonstrate these five, it is not production ready.

A practical checklist to ship with confidence

- One task, one metric. Pick a single business task and define a unit of work and a clear score.

- Evals as the gate. Treat the suite like tests that must pass before deployment.

- Start narrow. Use a small model with tools and a verifier agent before jumping to a heavyweight model.

- Budget the unit cost. Agree on a ceiling and alert well before hitting it.

- Version everything. Prompts, routes, tools, datasets, and evals live under governance.

- Drill rollback. Practice the revert until it is muscle memory.

Closing

Agent Bricks will not write your business strategy. It will not remove the need for domain expertise or careful change management. What it offers is the missing rigging for enterprise agents: a measurable way to tune on your own data, a clear cost and quality dial, and observability that makes root cause analysis and audits routine. The companies that win with agents over the next year will not be the ones who ship the flashiest demos. They will be the ones who ship the most boring dashboards. Dashboards that show eval coverage, drift, cost per unit, and error budgets holding steady while traffic climbs.

If you adopt one mindset from this launch, make it this: treat agents like services. Give them tests, budgets, owners, and versioned change logs. With that discipline, the promise of lakehouse-native agents moves from pitch deck to reliable production system. Once that foundation is in place, your internal agent app store in 2026 becomes a question of when, not if.