Cloudflare’s Edge Turns MCP Agents Into Real Services

Cloudflare just turned the edge into an agent runtime. With a remote MCP server, Workflows GA, and a free Durable Objects path, teams can ship authenticated, stateful agents that run close to users.

Breaking: the edge becomes the agent runtime

On April 7, 2025, Cloudflare announced three pieces that click together like a kit: a remote Model Context Protocol server, Workflows graduating to general availability, and a free path for Durable Objects. The first two turn the network itself into an agent runtime. The third makes it inexpensive to try. If you have been waiting for the moment when agents stop living in a single developer terminal and start living on a global platform, this is it. See Cloudflare’s own framing in the April 7 press release on remote MCP.

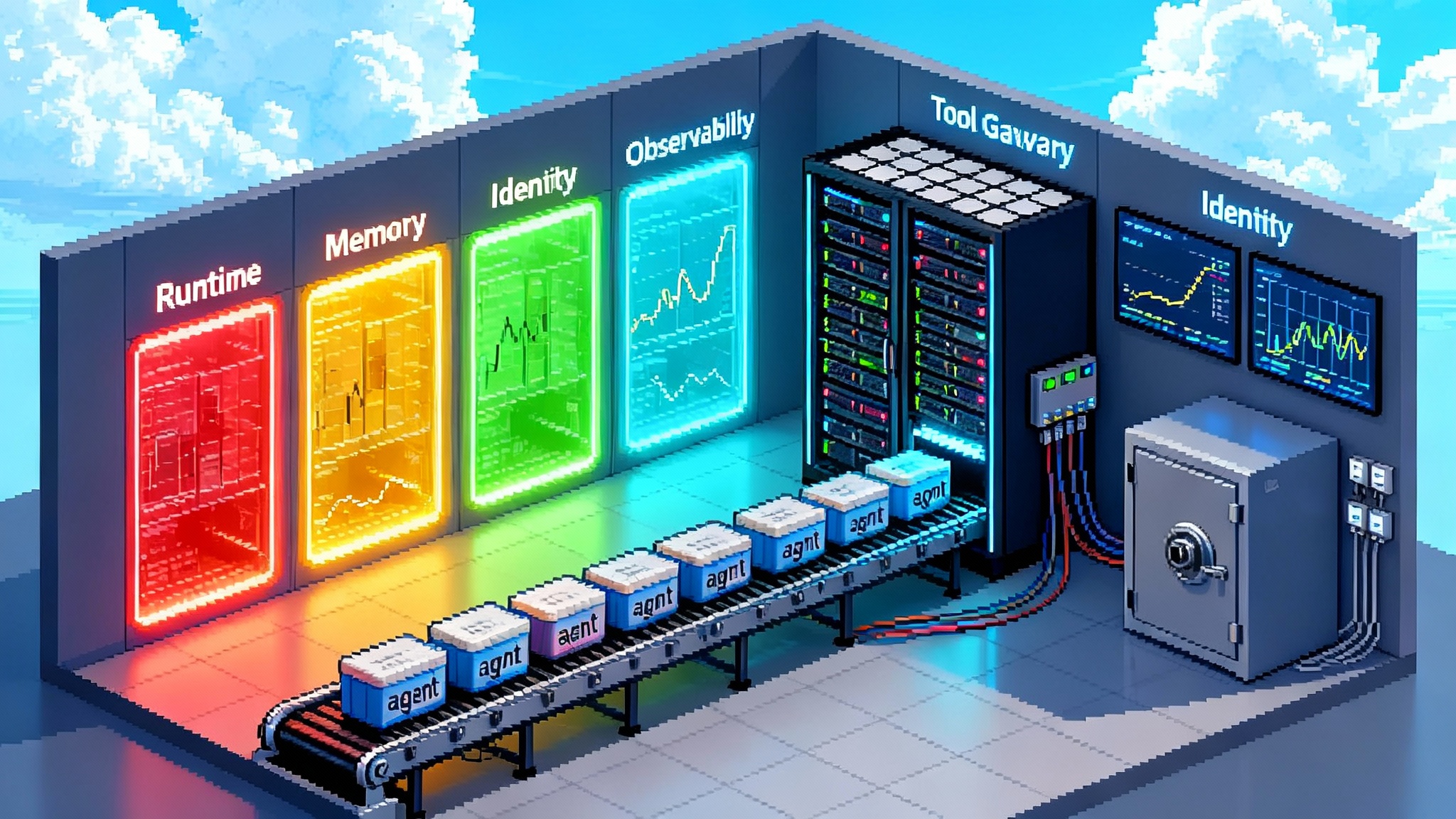

What makes this different is the choice to run the Model Context Protocol as a secure, internet reachable service that sits on Cloudflare’s edge. Add Workflows for long running, reliable steps, and Durable Objects for per user or per resource memory, and you have the three ingredients that agents were missing: delegated tool access, durable execution, and state.

From fragile demos to services with global reach

Model Context Protocol is the wiring that lets an agent call tools. Think of MCP as a power strip for capabilities. You plug in adapters for email, calendars, repositories, data stores, or payment systems. Historically, those adapters ran on a developer machine. That was fine for demos but painful for the real world. If your laptop sleeps, your agent sleeps. If you change networks, callback URLs break. If you need enterprise single sign on, you end up hand rolling brittle OAuth flows.

Cloudflare’s remote MCP server flips that model. You publish your agent’s tools as a Cloudflare service that any compliant agent can discover and call. Because the service runs on a global network, your tools execute near users and can be reached from anywhere your customers work. Because it is not tied to a single machine, it can scale and fail over. And because it sits behind a mature authentication front door, you can offer clean, delegated access without building it from scratch.

In practical terms, your calendar tool becomes a real endpoint. Your code deploy tool becomes a safe, auditable action. Your knowledge base lookup becomes a low latency call that does not cross an ocean just to parse a page.

What running MCP at the edge unlocks now

- Delegated authentication that enterprises accept. Cloudflare called out partnerships with providers like Auth0, Stytch, and WorkOS. This matters because agent actions should use the user’s permission, not a shared super token. With delegated auth, your agent can request scoped permission to send an email, commit to a repository, or file an expense. Users see exactly what will happen and can revoke access later. Administrators can enforce policies and audit trails.

- Persistent memory without bolting on a database. Durable Objects let you colocate compute and storage for a given identity or resource. Treat each user, team, chat session, or project as a single addressable object that holds state. You can maintain conversation history, preference profiles, tool credentials, or work queues without managing servers. Cloudflare also introduced a free plan path for Durable Objects that uses a SQLite backed store, which lowers the barrier to ship.

- Human in the loop by design, not as a hack. In September 2025 Cloudflare shipped an Agents SDK update that added tool confirmation detection and smoother streaming primitives. That gives teams a first class way to pause an agent before a consequential action, surface a clear review prompt, and continue after approval. The details are in the September Agents SDK announcement.

- Sub 100 millisecond interaction paths for common tools. Cloudflare states that its network already runs code within roughly 50 milliseconds of 95 percent of the online population. Moving tools to the edge removes an extra round trip to a central region. For agents that read a calendar, check a cache, or write a small record, you can often keep the full request under 100 milliseconds. That is the difference between a chat that feels present and one that feels distant.

Why this reshapes the agent platform race

Before this spring, teams choosing an agent platform were stuck between two unsatisfying options. You could run a demo stack on a laptop with great local tooling, or you could use a cloud functions style setup where long running steps and memory felt bolted on. Cloudflare’s move makes the edge, not a region, the default execution model.

- The runtime advantage. Agents call tools frequently and unpredictably. The best place to run those calls is close to the people clicking buttons. The edge does that by default. You do not need to tune regions or add a content delivery layer around your own service just to shave latency.

- The memory advantage. Stateful agents need to remember threads, decisions, and outcomes. Durable Objects give each user or resource a stable home that behaves like an actor. You get serialized updates, natural backpressure, and local storage. It is a mental model that matches what agents do: keep context, act, and learn.

- The reliability advantage. Workflows give you durable execution for minutes, hours, or days. Retries and waits are not homegrown loops. A trip booking agent can start a search, sleep until a price drops, then buy and notify. A procurement agent can wait for a manager approval event, then continue and file a receipt.

- The cost and distribution advantage. The free Durable Objects path means teams can prototype without a purchase order. Paying only for what you use aligns with agent traffic patterns, which are spiky and user driven. And because a large share of the web already runs through Cloudflare’s front door, you inherit routing and protection that are hard to assemble on your own.
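To make the reliability advantage concrete, here is a toy model of durable execution. It is not Cloudflare’s Workflows API: `DurableRun`, `step`, the in-memory journal, and the fare value are all hypothetical, and a real engine would persist the journal. The sketch shows only the core idea, which is that each named step records its result so a rerun after a crash replays completed steps instead of executing them again.

```typescript
// Toy model of durable execution (hypothetical names, not the Workflows API).
// Results of completed steps are journaled so a rerun skips them.
class DurableRun {
  private journal: Map<string, unknown>;

  constructor(journal: Map<string, unknown>) {
    this.journal = journal; // in memory here; a real engine persists this
  }

  // Run `fn` once per step name; later calls return the journaled result.
  step<T>(stepName: string, fn: () => T): T {
    if (this.journal.has(stepName)) {
      return this.journal.get(stepName) as T;
    }
    const result = fn();
    this.journal.set(stepName, result);
    return result;
  }
}

let searchCalls = 0;
let purchaseCalls = 0;

// A trip booking flow with an optional simulated crash between two steps.
function bookTrip(run: DurableRun, crashBeforePurchase: boolean): string {
  const fare = run.step("search", () => {
    searchCalls += 1;
    return 420; // assumed fare, for illustration only
  });
  if (crashBeforePurchase) {
    throw new Error("simulated crash");
  }
  return run.step("purchase", () => {
    purchaseCalls += 1;
    return `booked at ${fare}`;
  });
}

const sharedJournal = new Map<string, unknown>();
let firstAttemptFailed = false;
try {
  bookTrip(new DurableRun(sharedJournal), true);
} catch {
  firstAttemptFailed = true;
}
// The second attempt reuses the journal: "search" is replayed, not re-executed.
const bookingResult = bookTrip(new DurableRun(sharedJournal), false);
```

The second attempt finishes the purchase without repeating the search, which is the property that lets a long running agent survive restarts.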

This shift also rhymes with a broader pattern across the stack. On the client side the browser has started to look like a lightweight agent host, a trend we covered in Atlas Agent Mode as runtime. On the data side, operational platforms are moving from analytics to action, as seen in our Cortex Agents Go GA analysis. And at the hardware level, longer contexts and better memory maps change what is practical for tool use, highlighted in our piece on the NVIDIA Rubin CPX context breakthrough. Cloudflare’s edge hosted MCP slots neatly into that arc by making the network itself the place where tools, state, and orchestration meet.

A reference architecture you can ship this quarter

Here is a concrete path to go from idea to production in one quarter. The focus is a narrow, valuable workflow that your team already does weekly. Replace meetings with mechanized clicks.

1) Choose a job with real value and bounded risk

Examples: support triage, marketing draft generation with data joins, internal release notes, expense classification with policy checks. Avoid open ended assistants. Aim for agents that read, decide, and act against two or three systems.

2) Define the tool surface as MCP endpoints

List exactly which actions the agent can take, which inputs are required, and which outputs you will record. For a support triage agent: read ticket, look up customer entitlement, summarize context, file an internal handoff, and reply with a holding note. Implement each as a tool in your remote MCP server.

Tips for tool design:

- Prefer verbs over nouns. Tools should do one clear thing.

- Keep inputs small and structured to ease approval prompts.

- Emit structured outputs so Workflows can branch deterministically.
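As a minimal sketch of those tips, the example below defines one verb named tool with a small structured input and a structured output. It does not use any MCP SDK; `fileHandoff`, `nextStep`, the team names, and the 280 character limit are assumptions made for illustration.

```typescript
// One verb-named tool with a small structured input and a structured output.
// All names and limits are hypothetical; no MCP SDK is used here.
type HandoffInput = {
  ticketId: string;
  team: "billing" | "platform";
  summary: string;
};

type HandoffOutput =
  | { ok: true; handoffId: string }
  | { ok: false; reason: "invalid_input" | "summary_too_long" };

const MAX_SUMMARY_CHARS = 280; // assumed limit that keeps approval prompts short

function fileHandoff(input: HandoffInput): HandoffOutput {
  if (input.ticketId.trim() === "" || input.summary.trim() === "") {
    return { ok: false, reason: "invalid_input" };
  }
  if (input.summary.length > MAX_SUMMARY_CHARS) {
    return { ok: false, reason: "summary_too_long" };
  }
  // A real tool would call the help desk API at this point.
  return { ok: true, handoffId: `handoff-${input.ticketId}` };
}

// An orchestrator can branch on the structured result without parsing text.
function nextStep(result: HandoffOutput): string {
  return result.ok ? "reply_with_holding_note" : `ask_for_fix:${result.reason}`;
}
```

Because the failure reasons are a closed set, the branching logic stays deterministic and an approval prompt can show the whole input at a glance.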

3) Wire in delegated auth

Use an identity provider to request user consent for each system. Keep scopes tight. Store per user tokens inside that user’s Durable Object, not in a shared registry. Add an admin only route to revoke or rotate credentials. In your tool code, always check that the granted scopes cover the requested operation.
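The scope check in that last sentence can be sketched as follows. The tool names and scope strings are invented for illustration and do not come from any identity provider; the point is only that every tool call is compared against the scopes the user actually granted, and that unknown tools are denied by default.

```typescript
// Map each tool to the scopes it needs, then refuse calls the grant does not cover.
// Tool names and scope strings are hypothetical examples.
const REQUIRED_SCOPES = new Map<string, string[]>([
  ["readTicket", ["tickets:read"]],
  ["fileHandoff", ["tickets:read", "handoffs:create"]],
]);

type UserGrant = { userId: string; scopes: string[] };
type ScopeCheck = { allowed: boolean; missing: string[] };

function checkScopes(toolName: string, grant: UserGrant): ScopeCheck {
  const required = REQUIRED_SCOPES.get(toolName);
  if (required === undefined) {
    // Unknown tools are denied rather than allowed by default.
    return { allowed: false, missing: [] };
  }
  const missing = required.filter((scope) => grant.scopes.indexOf(scope) === -1);
  return { allowed: missing.length === 0, missing };
}
```

Returning the missing scopes, not just a boolean, gives the consent screen something specific to ask for.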

4) Give each user or resource a Durable Object

Model memory explicitly. Examples: a per user object that stores a preference map and recent interactions, or a per account object that manages a shared queue of pending actions. Use the object to enforce idempotency by recording a task identifier before you call an external system. If a retry arrives, you will see the duplicate and skip.
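Here is a small sketch of that idempotency pattern. `TaskLedger` stands in for state held inside a per user Durable Object; the class, its method, and the in-memory map are assumptions for illustration, not the Durable Objects API.

```typescript
// Record the task identifier before the external call so a retry is detected.
// `TaskLedger` is a hypothetical stand-in for per-user Durable Object state.
type LedgerEntry = { state: "started" | "done"; result: string | null };
type RunOutcome = { skipped: boolean; result: string | null };

class TaskLedger {
  private entries = new Map<string, LedgerEntry>();

  runOnce(taskId: string, externalCall: () => string): RunOutcome {
    const existing = this.entries.get(taskId);
    if (existing !== undefined) {
      // Duplicate delivery: do not call the external system again.
      return { skipped: true, result: existing.result };
    }
    // Write first, then act.
    this.entries.set(taskId, { state: "started", result: null });
    const result = externalCall();
    this.entries.set(taskId, { state: "done", result });
    return { skipped: false, result };
  }
}
```

An entry left in the `started` state marks an attempt whose outcome is unknown. In this sketch a retry skips it, so a production version would need a reconciliation step for those cases.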

5) Orchestrate long steps with Workflows

Create a Workflow definition for multi step tasks. Include retries with backoff, event waits for approvals, and compensating actions for partial failures. Keep your Workflow logic focused on orchestration. Keep your tools small and deterministic. Emit structured events from both sides.
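Two of those ingredients, backoff and compensation, can be sketched without any platform code. The functions below are a toy orchestrator with hypothetical names, not the Workflows API: `backoffDelaysMs` computes a capped exponential schedule, and `runSaga` undoes completed steps in reverse order when a later step fails.

```typescript
// Capped exponential backoff schedule plus compensation for partial failures.
// A toy orchestrator with hypothetical names, not the Workflows API.
function backoffDelaysMs(attempts: number, baseMs: number, capMs: number): number[] {
  const delays: number[] = [];
  for (let i = 0; i < attempts; i++) {
    delays.push(Math.min(capMs, baseMs * 2 ** i));
  }
  return delays;
}

type SagaStep = { label: string; run: () => void; compensate: () => void };
type SagaOutcome = { completed: string[]; compensated: string[] };

// Run steps in order; on failure, undo the completed steps in reverse order.
function runSaga(steps: SagaStep[]): SagaOutcome {
  const done: SagaStep[] = [];
  try {
    for (const current of steps) {
      current.run();
      done.push(current);
    }
    return { completed: done.map((s) => s.label), compensated: [] };
  } catch {
    const undone: string[] = [];
    for (const finished of done.slice().reverse()) {
      finished.compensate();
      undone.push(finished.label);
    }
    return { completed: done.map((s) => s.label), compensated: undone };
  }
}
```

Keeping the compensation next to each step makes partial failures reviewable: every action that can be taken has a declared way to take it back.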

6) Add human in the loop at the moment of consequence

Use the Agents SDK tool confirmation pattern to pause before anything that spends money, sends messages to customers, or changes code. Surface the proposed action with the minimal data needed for confidence, the exact tool and inputs, and a clear approve or reject choice. Record the approver and timestamp in the Durable Object.
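The shape of that gate can be sketched in a few lines. This is not the Agents SDK confirmation API; `gate`, the list of consequential tools, and the record fields are assumptions chosen to mirror the paragraph above.

```typescript
// Pause consequential tools until a human decides, and keep who decided and when.
// Hypothetical names; this is not the Agents SDK confirmation API.
type ProposedAction = { tool: string; inputs: Record<string, string> };
type Decision = { approver: string; approved: boolean; decidedAt: string };
type GateResult =
  | { state: "executed"; output: string; decision: Decision | null }
  | { state: "awaiting_approval"; prompt: string }
  | { state: "rejected"; decision: Decision };

// Assumed list of tools that spend money, message customers, or change code.
const CONSEQUENTIAL_TOOLS = new Set<string>(["sendCustomerEmail", "issueRefund", "mergeCode"]);

function gate(
  action: ProposedAction,
  decision: Decision | null,
  execute: (a: ProposedAction) => string,
): GateResult {
  if (!CONSEQUENTIAL_TOOLS.has(action.tool)) {
    return { state: "executed", output: execute(action), decision: null };
  }
  if (decision === null) {
    // Show the exact tool and inputs so the reviewer sees what will run.
    const prompt = `${action.tool} ${JSON.stringify(action.inputs)}`;
    return { state: "awaiting_approval", prompt };
  }
  if (!decision.approved) {
    return { state: "rejected", decision };
  }
  return { state: "executed", output: execute(action), decision };
}
```

The returned `decision` is what you would persist in the Durable Object, so the approver and timestamp travel with the action they authorized.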

7) Optimize for sub 100 millisecond tool calls

Co locate tools and state. Cache common reads in memory inside the Durable Object. Prefer incremental updates over full document writes. For external services, use regional endpoints that are close to your expected users. Measure tail latency, not just averages.
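To see why tail latency deserves its own measurement, consider a nearest rank percentile over recorded tool latencies. The helper names below are hypothetical and the sample values in the usage note are invented for illustration.

```typescript
// Nearest-rank percentile over recorded latencies, to compare tails with the mean.
function latencyPercentile(samplesMs: number[], p: number): number {
  if (samplesMs.length === 0) {
    throw new Error("no samples");
  }
  const sorted = samplesMs.slice().sort((a, b) => a - b);
  // Nearest-rank method: 1-based rank = ceil(p * n / 100).
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

function latencyMean(samplesMs: number[]): number {
  if (samplesMs.length === 0) {
    throw new Error("no samples");
  }
  return samplesMs.reduce((sum, v) => sum + v, 0) / samplesMs.length;
}
```

With nineteen calls at 40 milliseconds and one at 900 milliseconds, the mean is 83 milliseconds and looks healthy, while the 99th percentile is 900 milliseconds. One user in that batch waited almost a second, and the average hid it.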

8) Build observability that matches a service, not a demo

Log every tool invocation with a task identifier, the triggering user or workflow instance, the inputs, the success or failure, and the duration. Sample prompts and model outputs for quality review, with sensitive fields redacted. Add a runbook for every tool that describes failure modes and safe manual overrides.
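A sketch of such a log record follows. The field names, the list of sensitive keys, and the injected clock are assumptions for illustration; the sketch shows one structured record per invocation with redaction applied before anything is written.

```typescript
// Build one structured log record per tool invocation, redacting sensitive inputs.
// Field names and the sensitive-key list are assumptions for illustration.
type ToolLogRecord = {
  taskId: string;
  actor: string; // triggering user or workflow instance
  tool: string;
  inputs: Record<string, string>;
  ok: boolean;
  durationMs: number;
};

const SENSITIVE_KEYS = ["email", "token", "cardNumber"];

function redactInputs(inputs: Record<string, string>): Record<string, string> {
  const out: Record<string, string> = {};
  for (const key of Object.keys(inputs)) {
    out[key] = SENSITIVE_KEYS.indexOf(key) === -1 ? inputs[key] : "[REDACTED]";
  }
  return out;
}

// `now` is injected so the duration can be tested without a real clock.
function logToolCall(
  taskId: string,
  actor: string,
  tool: string,
  inputs: Record<string, string>,
  run: () => void,
  now: () => number,
): ToolLogRecord {
  const startedAt = now();
  let ok = true;
  try {
    run();
  } catch {
    ok = false; // failures are recorded, not swallowed silently
  }
  const durationMs = now() - startedAt;
  return { taskId, actor, tool, inputs: redactInputs(inputs), ok, durationMs };
}
```

In a Worker you might pass `Date.now` as the clock and emit the record as a single JSON line so it can be queried by task identifier later.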

9) Ship behind a narrow rollout gate

Start with a single team or geography. Put a kill switch in configuration, not code. Add a per user rate limit to catch loops. Track three numbers each day: tasks attempted, tasks completed, and tasks that needed human correction. Aim to reduce the third without allowing silent failures.
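The kill switch and the per user rate limit can share one small check. The sketch below uses a fixed one minute window; `RolloutConfig`, `UserRateLimiter`, and the limit values are hypothetical, and a production limiter would keep its counters in durable state instead of process memory.

```typescript
// A config-driven kill switch and a fixed-window per-user rate limit.
// Names and limits are assumptions for illustration.
type RolloutConfig = { enabled: boolean; maxCallsPerMinute: number };

const WINDOW_MS = 60000;

class UserRateLimiter {
  private windows = new Map<string, { windowStart: number; count: number }>();

  allow(userId: string, nowMs: number, config: RolloutConfig): boolean {
    if (!config.enabled) {
      return false; // the kill switch lives in configuration, not code
    }
    const windowStart = Math.floor(nowMs / WINDOW_MS) * WINDOW_MS;
    let current = this.windows.get(userId);
    if (current === undefined || current.windowStart !== windowStart) {
      current = { windowStart, count: 0 }; // new window: reset the counter
      this.windows.set(userId, current);
    }
    if (current.count >= config.maxCallsPerMinute) {
      return false; // likely a loop; stop before it spreads
    }
    current.count += 1;
    return true;
  }
}
```

Because the configuration is passed in on every call, flipping `enabled` to false takes effect on the next request without a deploy.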

10) Write your decommission story now

Every new agent increases blast radius if it goes wrong. Define a way to disable tools, freeze state, and export audit logs. Practice that drill before you scale up.

A week long case study: support triage you can feel

Imagine a support triage agent for a software company. The goal is not to replace people. It is to shorten the time from ticket creation to a high quality first response and a clean handoff.

- Day 1: Tools. Implement tools for reading a ticket, fetching entitlement from your billing system, summarizing the context, creating an internal handoff, and drafting a reply. Deploy them to a remote MCP server on the edge.

- Day 2: Memory. Create a Durable Object keyed by the support account. Store a recent ticket digest, common preferences, and a short list of known hot topics. Keep a rolling window of the last 50 actions for audit.

- Day 3: Auth. Use delegated auth to request permissions to the help desk and billing systems for each human support agent. Request scopes only for read and create, not delete or admin. Save tokens per human agent in the Durable Object, and rotate them automatically if refresh tokens are present.

- Day 4: Orchestration. Build a Workflow that starts on ticket creation, branches based on plan tier, waits up to two hours for a human confirmation if the draft reply contains a risky clause, then continues. Retries of external calls use exponential backoff and give up cleanly with a task note.

- Day 5: Human in the loop. The agent presents a proposed first response with a confidence score and the exact tool calls it plans to make. A human agent can approve, edit, or send back for revision. Every decision is logged.

- Day 6: Latency tuning. Measure end to end time from ticket to first response, and per tool latency. Cache entitlement lookups for five minutes. Collapse multiple reads into a single batched call where the upstream API allows it. Target under 400 milliseconds for the full draft generation and under 100 milliseconds for each tool call.

- Day 7: Rollout. Ship to 10 percent of incoming tickets. Monitor escalations and customer satisfaction. Keep a standing review to examine misfires and update prompts or tools. If the graph of corrections per day goes down, expand coverage.
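The five minute entitlement cache from Day 6 is small enough to sketch in full. `EntitlementCache` and the lookup function passed to it are hypothetical stand-ins for your billing system call, and the current time is passed in so expiry is easy to test.

```typescript
// Cache entitlement lookups for five minutes, as in Day 6.
// The lookup function is a hypothetical stand-in for a billing system call.
const FIVE_MINUTES_MS = 5 * 60 * 1000;

class EntitlementCache {
  private entries = new Map<string, { value: string; expiresAt: number }>();
  private lookup: (accountId: string) => string;

  constructor(lookup: (accountId: string) => string) {
    this.lookup = lookup;
  }

  get(accountId: string, nowMs: number): string {
    const hit = this.entries.get(accountId);
    if (hit !== undefined && nowMs < hit.expiresAt) {
      return hit.value; // fresh entry: no upstream call
    }
    const value = this.lookup(accountId);
    this.entries.set(accountId, { value, expiresAt: nowMs + FIVE_MINUTES_MS });
    return value;
  }
}
```

Held inside the account’s Durable Object, a cache like this turns most entitlement reads into memory lookups while bounding how stale a plan change can be.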

That one week sprint is not a fantasy schedule. It works because the platform pieces do the heavy lifting. You are not building an auth layer, a durable execution system, and a state store in parallel. You are composing them.

Practical guardrails and gotchas

- Prefer verbs over nouns in tool design. Tools should do one thing. Avoid omni tools that accept dozens of optional parameters. Small tools chain well in Workflows and are easier to review in human approvals.

- Make idempotency a first class concept. Generate a task identifier at the first user intent. Pass it through every tool. Record it in the Durable Object before external calls. On retry, check and skip.

- Keep secrets and tokens per user, not per app. Store tokens in each user’s Durable Object. Never share a super token. Rotate regularly. Record the scopes you asked for and the scopes you actually received.

- Measure tail latency and budget for it. Sub 100 millisecond averages are great, but p95 and p99 matter for perceived quality. Put explicit budgets in your Workflows and add backpressure in your Durable Objects to avoid overload.

- Test failure paths, not just happy paths. Simulate timeouts, partial writes, and duplicate events. Your Workflows should clean up after themselves, and your Durable Objects should tolerate being restarted with state intact.

- Build a paper trail that security teams will like. For each action, capture who asked, what was proposed, who approved, what happened, and where the artifacts live. Keep those records for the period your auditors expect.
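One guardrail above is easy to skip in practice: recording the scopes you asked for next to the scopes you actually received. A minimal sketch, with hypothetical names and scope strings:

```typescript
// Compare the scopes requested with the scopes the provider actually granted.
// Type and function names are hypothetical.
type ScopeRecord = {
  requested: string[];
  granted: string[];
  missing: string[]; // asked for but not received
  unexpected: string[]; // received but never asked for
};

function recordScopes(requested: string[], granted: string[]): ScopeRecord {
  return {
    requested,
    granted,
    missing: requested.filter((s) => granted.indexOf(s) === -1),
    unexpected: granted.filter((s) => requested.indexOf(s) === -1),
  };
}
```

A non-empty `missing` list explains later tool failures, and a non-empty `unexpected` list is worth flagging to whoever reviews the audit trail.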

What to watch next

- A marketplace of remote MCP tools. The obvious next step is a registry of vetted tools with clear scopes and pricing. Expect popular software vendors to publish official MCP adapters that you can drop into your service without custom code.

- Better cross vendor patterns for delegated auth. Early partnerships are a signal. The next wave will be standardized scopes for common actions like send email, create calendar event, or open a ticket across providers.

- State that learns. Today, Durable Objects give you clean state. Tomorrow, expect tighter patterns that combine that state with small model adapters, so the agent can learn preferences per user without shipping data off platform.

- Observability that explains, not just records. You will want traces that show why an agent chose a tool, not just which tool it chose. Expect richer, inspectable reasoning traces to become a first class feature.

The bottom line

Agents get real when they run where your users are, remember what matters, ask for permission, and wait when they should. Cloudflare’s remote MCP server, Workflows, and Durable Objects supply those properties out of the box. Add the September Agents SDK upgrade for clean review prompts and you have a stack that feels like software engineering, not stagecraft. If you have been experimenting at the edges of your product, start one production agent now. Keep the surface small. Give it real tools with narrow scopes. Persist memory where it belongs. Put a human in the loop at the moment of consequence. Measure the right latencies. Ship behind a gate, then expand. The edge just became the agent runtime. It is time to build like it.