Please provide a topic and angle to craft your feature

Great features start long before the first paragraph. This guide shows how to choose a topic and sharpen an angle that turns AI news into a story readers finish and share, with checklists, examples, and pitfalls to avoid.

Why topic and angle decide whether readers stay

Most writers open a new doc and start typing. The best ones start by choosing a topic and sharpening an angle. Those two choices determine whether your feature feels urgent, useful, and trustworthy. They drive your interviews, your structure, and the takeaways you promise in the first six sentences.

In the fast-moving world of AI and agents, the difference is dramatic. A vague topic like "model releases" becomes a clear story when reframed as a question with stakes. For example, instead of covering yet another agent platform announcement, you can ask how it changes developer economics, what teams must do this quarter, and which specific risks rise as a result.

This playbook gives you the tools to make those choices quickly and well. Use it to frame a feature, pressure test your thesis, and ship a story readers save and share.

Topic vs. angle, made simple

- Topic is the concrete subject or event. It answers what happened and who is involved.

- Angle is the lens or claim that gives the story meaning now. It answers why this matters, for whom, and what to do next.

Think of topic as the location and angle as the route. A good route turns a busy city into an unforgettable walk.

The three angle families that work

You can produce a strong angle fast by picking from three families. Each one includes a promise to the reader and a test you can run before writing.

- Change first

  - Promise: explain what just changed and why now.

  - Good when: a release, policy, or price move shifts the default choice teams make.

  - Test: can you name a before state, an after state, and a trigger that caused the shift?

- Stakes first

  - Promise: spell out winners, losers, and immediate actions.

  - Good when: the move creates clear tradeoffs across cost, speed, and risk.

  - Test: can you list three near-term consequences, and a counterfactual if teams ignore them?

- Implementation first

  - Promise: show how to adopt the change in a small number of steps.

  - Good when: readers need a pattern, reference stack, or decision tree more than a hot take.

  - Test: can you outline a four-step plan with a measurable checkpoint for each step?

Turn news into a story with the nut graf

Once you pick an angle, write a nut graf: the short paragraph that states your thesis, who it affects, and what the reader will get by the end. Keep it to three to five sentences. If you need a refresher, see the practical overview in the Poynter guide to nut grafs.

A strong nut graf includes:

- The change: what is new right now

- The stakes: why readers should care today

- The path: the sections and takeaways you will deliver

Write it before you draft your lede. Then test every paragraph against that promise.

Build your sourcing plan before you type

Angles decide sources. Map the people and artifacts you need to verify claims and fill gaps.

- Primary sources: company docs, release notes, pricing pages, policy texts, and data tables.

- Decision makers: leaders who own adoption, budget, or risk.

- Practitioners: engineers, designers, analysts, and operators who live with the tradeoffs.

- Independent experts: researchers, auditors, or integrators who see many teams.

Use a simple grid: claim, source, verification step, and potential counterpoint. For sensitive claims, follow the practical checks in Nieman Lab on verification practices.
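If you keep the grid in a script instead of a spreadsheet, a minimal sketch might look like the following. The `ClaimRow` class, its field names, and the sample rows are illustrative assumptions, not a standard tool; the only idea taken from the grid is that a claim with an empty column is not ready to publish.

```python
from dataclasses import dataclass


@dataclass
class ClaimRow:
    """One row of the sourcing grid (hypothetical structure for illustration)."""
    claim: str
    source: str = ""
    verification_step: str = ""
    counterpoint: str = ""

    def missing_fields(self) -> list[str]:
        """Return the names of grid columns that are still empty."""
        return [name for name, value in vars(self).items() if not value.strip()]


def unverified(rows: list[ClaimRow]) -> list[ClaimRow]:
    """Rows that still have at least one blank column."""
    return [row for row in rows if row.missing_fields()]


# Sample rows are invented for the example.
grid = [
    ClaimRow(
        claim="The release lowers tail latency for support workflows",
        source="Vendor release notes",
        verification_step="Ask a practitioner for before and after numbers",
        counterpoint="Gains may only hold for small workloads",
    ),
    ClaimRow(claim="Teams will switch from build to buy this quarter"),
]

for row in unverified(grid):
    print(f"{row.claim!r} still needs: {', '.join(row.missing_fields())}")
```

Run it before you draft: any claim it prints is a reporting task, not a sentence.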

A five-part outline that rarely fails

You can adapt this outline to most AI features without feeling templated.

- Set the scene

  - Lede anchored in a real moment: a release, a customer shift, or a number that changed.

  - One data point or quote that shows stakes.

- What just changed

  - Describe the change in plain language.

  - Name the old default and the new default.

- Why it matters now

  - Map effects on cost, speed, quality, compliance, and control.

  - Show contrasts across company sizes or industries.

- How to act

  - Provide a four- to six-step plan with checkpoints.

  - Include metrics, budget cues, and risk mitigation.

- What to watch next

  - Give readers a short list of signals that will prove or disprove your thesis.

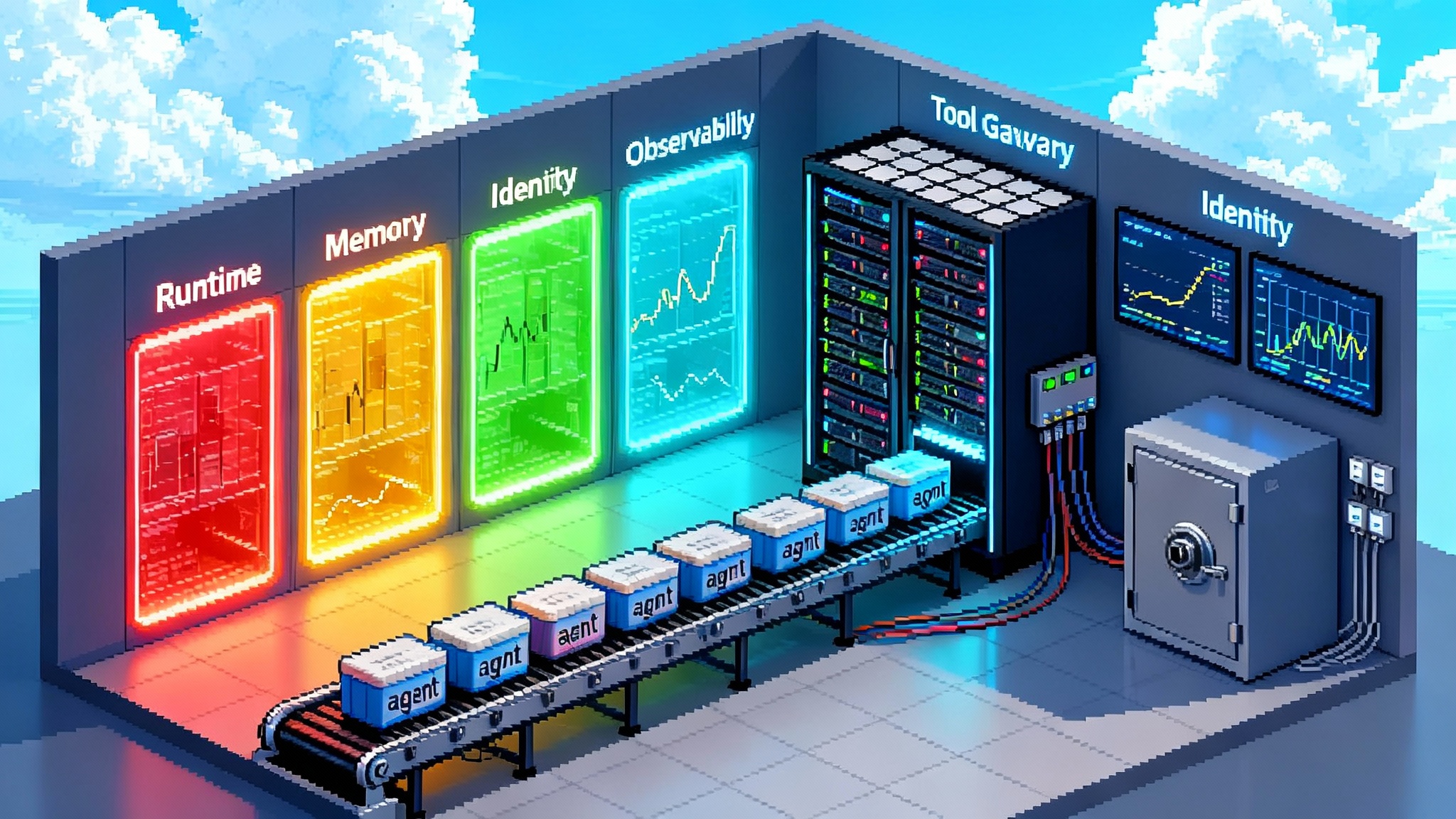

Concrete examples from the agent stack

These example angles show how to transform a broad topic into a focused story.

- Topic: New runtime for agents at the edge

  Angle: Edge runtimes turn agents from demos into services by improving reliability and latency, with clear changes to deployment and monitoring. See how we framed this in our analysis of Cloudflare’s edge turning MCP agents into real services.

- Topic: Tooling that converts prototypes into production agents

  Angle: Developer kits now bridge the gap between an idea and a durable system, changing roles and workflow in product teams. Compare the implications we outlined in OpenAI AgentKit turning agent ideas into production reality.

- Topic: Hardware that expands context for autonomous workflows

  Angle: Memory and context length change what an agent can hold and coordinate in one pass, which alters cost curves and evaluation. We explored this theme in NVIDIA Rubin CPX breaking the context wall for agents.

Use these as patterns, not templates. The angle is always shaped by the evidence you can gather this week.

The angle pressure test

Before you draft, run your angle through these stress checks. If it fails any, sharpen or switch.

- Falsifiability: what evidence would prove the angle wrong next month?

- Specificity: can you state the change, the who, and the action in one sentence?

- Contrasts: can you name three tradeoffs that a smart skeptic would raise?

- Timeliness: what happens if the story waits two weeks?

- Reader benefit: can you list two decisions a reader can make faster after reading?

If you cannot answer in writing, you do not have an angle. You have a topic.

Make the thesis measurable

Readers trust numbers. Promise a result you can quantify, then deliver the math.

- Cost: expected savings in dollars per thousand requests, or hours saved in triage.

- Quality: accuracy or resolution rates tied to a clear test set or KPI.

- Speed: cycle time reductions from ticket to fix, or from brief to production.

Do not drown the reader in decimals. Pick a single yardstick and report it consistently.
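To make "deliver the math" concrete, here is a small sketch of the cost yardstick: dollars per thousand requests, before and after a change. The function names and every number are invented for illustration; swap in figures from your own primary sources.

```python
def cost_per_thousand(total_cost_usd: float, requests: int) -> float:
    """Normalize a bill to dollars per 1,000 requests."""
    if requests <= 0:
        # A rate without a denominator is not a number worth publishing.
        raise ValueError("requests must be positive")
    return total_cost_usd / requests * 1000


def savings_per_thousand(
    before_cost_usd: float,
    before_requests: int,
    after_cost_usd: float,
    after_requests: int,
) -> float:
    """Positive result means the 'after' state is cheaper per 1,000 requests."""
    return cost_per_thousand(before_cost_usd, before_requests) - cost_per_thousand(
        after_cost_usd, after_requests
    )


# Illustrative figures only, not real pricing data.
before = cost_per_thousand(420.0, 120_000)
after = cost_per_thousand(390.0, 150_000)
saved = savings_per_thousand(420.0, 120_000, 390.0, 150_000)

print(f"before: ${before:.2f} per 1k requests")
print(f"after:  ${after:.2f} per 1k requests")
print(f"saved:  ${saved:.2f} per 1k requests")
```

Normalizing both periods to the same unit is the point: raw bills are not comparable when request volume changed between them.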

Reporting fast without cutting corners

Speed and rigor can coexist if you set constraints.

- Limit interviews to three voices who represent different roles.

- Pull only two external data sources and reconcile differences.

- Log every claim with a timestamp, a link, and whether it is confirmed or provisional.

- Write quotes last. Paraphrase until the structure is solid, then add the strongest lines.

When time is tight, write the nut graf, the "How to act" section, and the "What to watch next" section first. Those parts force clarity and reveal missing evidence.

Write with structure the reader can feel

Formatting is a service to your reader, not a decoration. Use it deliberately.

- Short paragraphs that contain one idea.

- Descriptive subheads that preview the point.

- Bulleted lists for steps, risks, and signals.

- Bold sparingly to highlight definitions and actions.

If a sentence does not move the argument forward, cut it. If a paragraph repeats a point, combine them. Clean writing signals clean thinking.

The four-step adoption play you can reuse

When your angle is implementation first, this reusable play gives readers a path.

- Scope the job to be done

  - Define the decision or workflow the change affects.

  - Name the success metric and the boundary conditions.

- Pick a reference stack

  - Choose the minimum viable tools to test the claim.

  - State one reason for each choice and one known limitation.

- Run a controlled pilot

  - Add a start and end date, a budget cap, and two exit criteria.

  - Record baseline metrics for an apples-to-apples comparison.

- Decide and scale

  - Adopt, modify, or kill based on the metric you declared.

  - If you scale, document new risks and controls.

The play works for releases, pricing moves, and policy shifts. It works because it forces a decision rather than a vague sentiment.
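One way to show readers what "forces a decision" means is to write the pilot down as data and the decision as a rule. The sketch below is an assumption-heavy illustration: the `Pilot` fields, the two exit criteria (budget cap exceeded, no improvement over baseline), and the rule that higher metric values are better are all hypothetical choices, not part of any real framework.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Pilot:
    """A controlled pilot, declared before it starts (hypothetical structure)."""
    metric: str
    baseline: float        # value recorded before the pilot
    target: float          # value that would justify adoption
    start: date
    end: date
    budget_cap_usd: float


def decide(pilot: Pilot, observed: float, spent_usd: float) -> str:
    """Return 'adopt', 'modify', or 'kill' at the end of the pilot.

    Assumes higher metric values are better.
    """
    # Exit criterion 1 (assumed): the pilot blew through its budget cap.
    if spent_usd > pilot.budget_cap_usd:
        return "kill"
    # Exit criterion 2 (assumed): no improvement over the recorded baseline.
    if observed <= pilot.baseline:
        return "kill"
    if observed >= pilot.target:
        return "adopt"
    # Better than baseline but short of target: adjust and rerun.
    return "modify"


# Invented example values.
pilot = Pilot(
    metric="task success rate",
    baseline=0.62,
    target=0.75,
    start=date(2025, 3, 3),
    end=date(2025, 3, 28),
    budget_cap_usd=5_000.0,
)

print(decide(pilot, observed=0.71, spent_usd=3_200.0))
```

Because the thresholds are declared up front, the outcome cannot drift into "promising but inconclusive" after the fact.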

Common traps and how to avoid them

- Kitchen sink background: if your background section is longer than your analysis, you are reciting, not reporting. Cut half and move on.

- Vendor ventriloquism: if your angle can be pasted into a press release, it is not yours. Add independent evidence or a dissenting voice.

- Shallow numbers: if you cannot explain the denominator, do not publish the percentage.

- Passive outcomes: if readers cannot act within a week, your angle is not practical enough.

A live example, step by step

Imagine a company ships a new agent orchestration feature that promises lower latency and higher task success. Here is how a strong feature would form.

- Topic: a release that changes how agents plan and act inside existing data platforms.

- Angle: the release reduces tail latency and increases end-to-end task success for customer support workflows, shifting the build vs. buy decision for data teams this quarter.

Nut graf draft:

Customer teams finally have a way to reduce agent stalls while keeping costs in check. A new orchestration layer moves planning closer to the data and cuts retries that waste tokens. For data teams, the change tilts the build vs. buy equation for support workflows. This feature explains what changed, how it alters cost and reliability, and the four steps to test it safely this week.

Outline:

- Set the scene: a real support workflow, the wait time pain, a single number.

- What changed: the new orchestration primitives and how they differ from the old chain.

- Why it matters: reductions in retries, clearer routing, fewer hallucinations.

- How to act: a four-step pilot with guardrails.

- What to watch: model drift, tool limits, governance handoffs.

The scenario is invented, but the pattern is not. We see similar patterns across releases analyzed in our pieces on edge runtimes, developer kits, and hardware context. The structure holds even as the names change.

Checklists you can paste into your doc

Angle checklist

- One-sentence thesis that names a change, a stakeholder, and an action.

- Three concrete effects within this quarter.

- A counterargument that a smart skeptic would make.

- One falsifiable signal you will watch after publication.

Sourcing checklist

- Two primary documents and one independent expert.

- One current customer or practitioner.

- One informed skeptic with no vendor ties.

Editing checklist

- Nut graf fulfills the promise and sets scope.

- Each section header previews the point in plain language.

- Metrics are defined once and used consistently.

- Links are descriptive, relevant, and few.

Style rules that build trust

- Prefer short words to long ones. Prefer active voice to passive voice.

- Define acronyms on first use and do not assume domain knowledge.

- Avoid hype. Use numbers tied to a method rather than adjectives.

- Attribute uncertainty. Say what you do not know yet.

These rules are not decoration. They reduce cognitive load, which is a gift to the reader.

How to pick a headline that carries weight

Your headline is a contract. Make it specific, forward-looking, and useful.

- Name the outcome or the audience.

- Avoid clever wordplay that hides the point.

- Keep it within a tight character range so it displays well on mobile.

If you can pair a clear headline with a subhead that states the benefit, your click-through rate and completion rate are more likely to rise together.

Publish with a forward view

Your story should not end at publication. Readers trust writers who revisit their own claims.

- Add a calendar reminder to check your falsifiable signal in 30 and 90 days.

- When the signal moves, append a brief update at the top of the story.

- If the result contradicts your thesis, explain what you missed and what changed.

Nothing builds loyalty like a writer who grades their own work.
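If you would rather compute the check-in dates than count days on a calendar, a short sketch like this works. The function name and the sample publication date are assumptions made for the example; the 30 and 90 day offsets come from the list above.

```python
from datetime import date, timedelta


def follow_up_dates(published: date, offsets_days: tuple[int, ...] = (30, 90)) -> list[date]:
    """Return the dates on which to re-check the falsifiable signal."""
    return [published + timedelta(days=offset) for offset in offsets_days]


# Hypothetical publication date.
for check_in in follow_up_dates(date(2025, 1, 15)):
    print(check_in.isoformat())
```

Paste the printed dates into whatever reminder tool you already use.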

A closing template for your next feature

Feel free to copy this and fill it in before your next draft.

- Topic: the event, release, or policy

- Angle: the change, the audience, and the action

- Nut graf: three to five sentences that promise value

- Sources: two primary docs, one practitioner, one skeptic

- Outline: scene, change, stakes, action, signals

- Metric: the single number you will track

- What to watch: two signals that will confirm or contradict your thesis

Commit to that plan, and your piece will write itself. You will spend less time fighting the draft and more time serving your reader with clarity and courage.