AgentKit turns demo bots into shippable enterprise agents

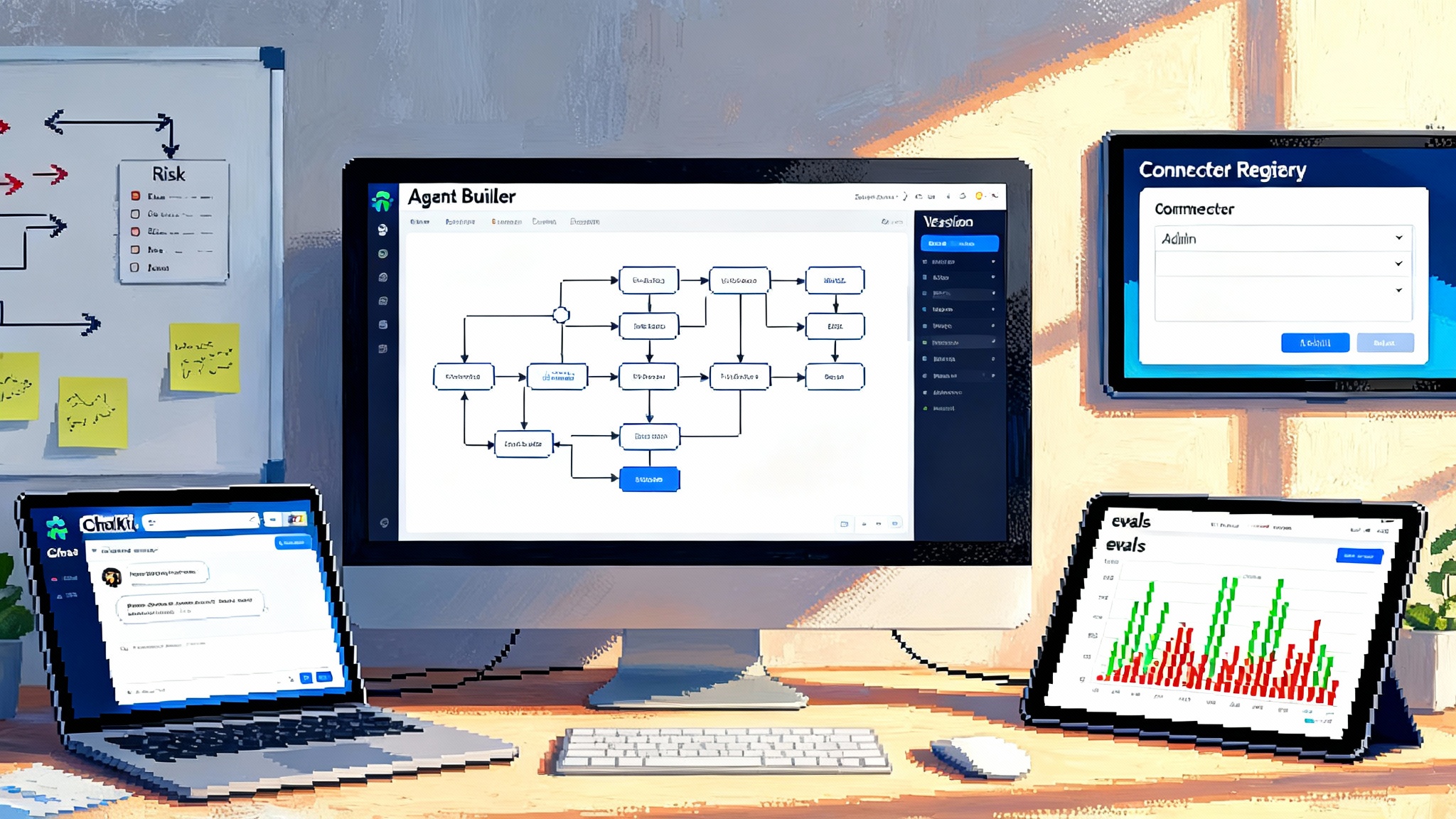

OpenAI just turned agents into real software. AgentKit adds a visual builder, embeddable ChatKit UI, trace graded evals, and an enterprise connector registry so teams can design, ship, and govern agents at scale.

The DevDay moment when agents grew up

The era of novelty chatbots is ending. At DevDay 2025, OpenAI introduced AgentKit, a set of building blocks that treats agents like real software you can design, ship, govern, and improve. The pitch is direct and practical: bring agents out of slideware and into production. On October 6, 2025, OpenAI laid out the core components and published details in its own announcement, which you can read in Introducing AgentKit from OpenAI. The claim is simple. If you want to move from experiments to outcomes, you need shared parts, clear controls, and measurable quality.

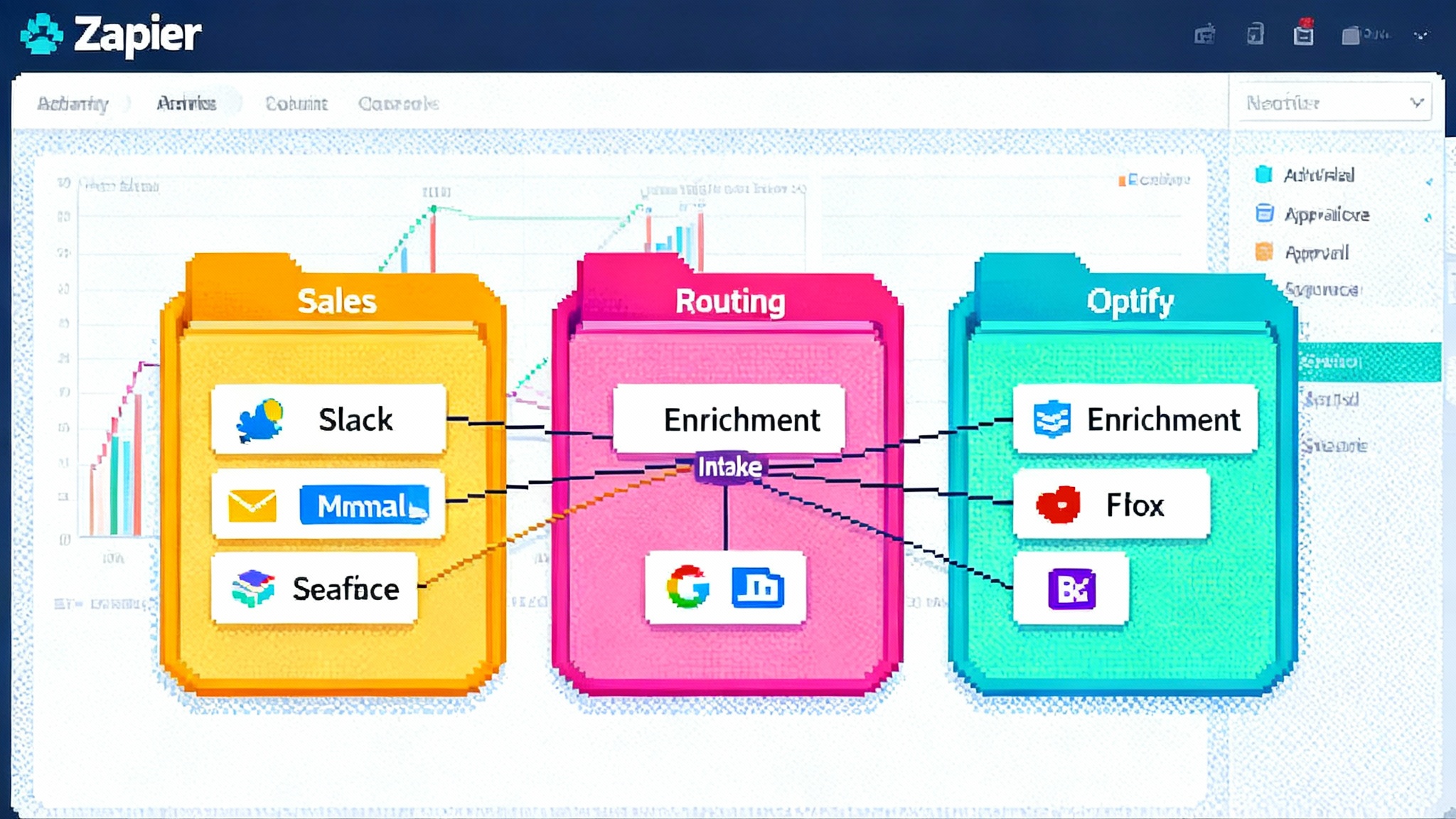

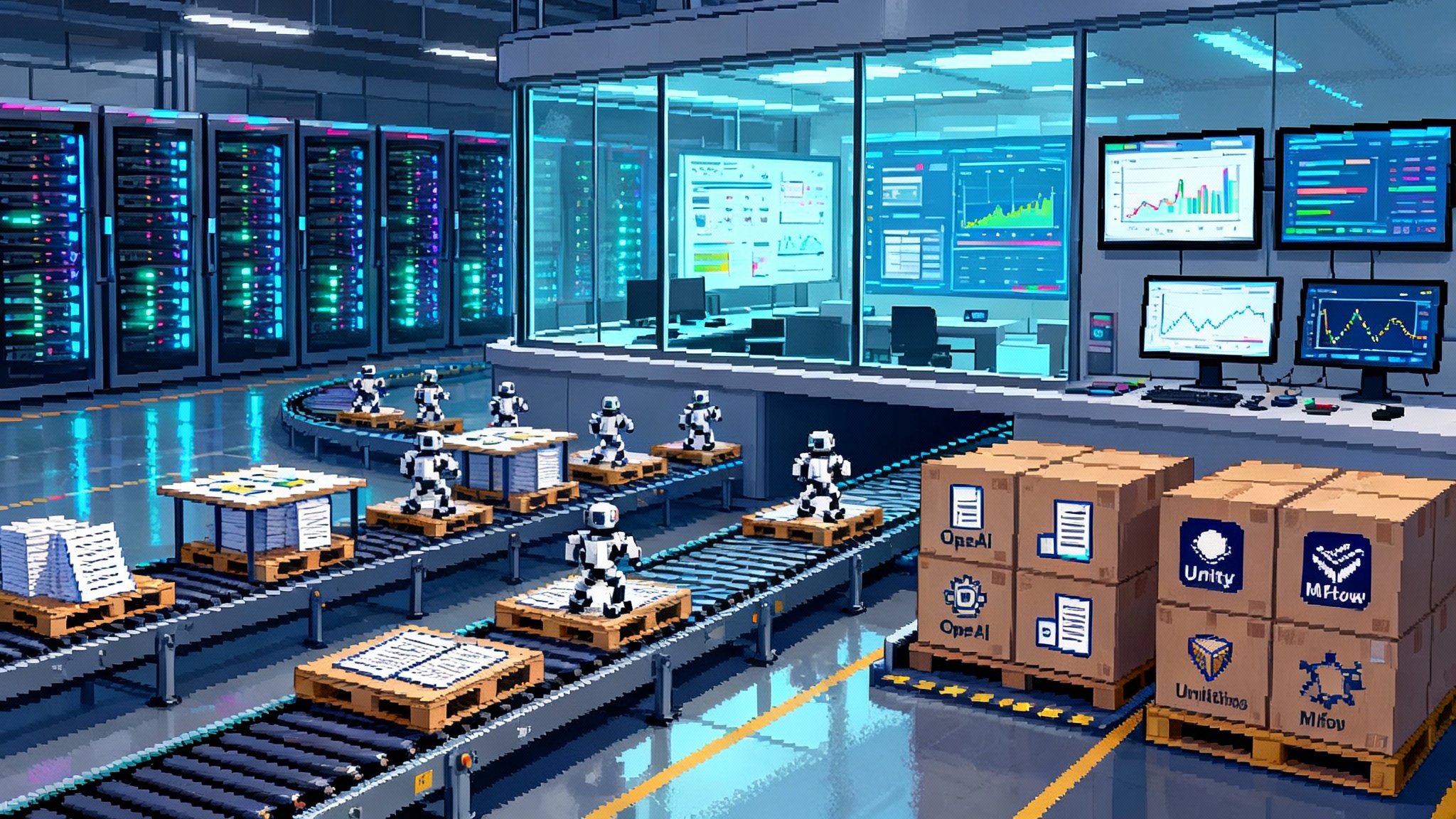

Think of AgentKit like a factory floor for agents. Instead of wiring together brittle scripts, one team assembles the workflow on a visual canvas, another drops in a production ready chat interface, a third defines success criteria, and compliance approves which connectors an agent can touch. You get a common set of parts and a shared set of guardrails. The result is not a demo. It is a shippable system.

What AgentKit includes in plain terms

AgentKit is a product suite with a clear division of labor:

- Agent Builder helps you design and version multi step workflows on a visual canvas. It supports drag and drop nodes, preview runs, and guardrails.

- ChatKit is a front end toolkit to embed customizable chat based agent experiences in your product without months of one off user interface work.

- Evals expands to include datasets, trace grading across full runs, automated prompt optimization, and support for evaluating third party models.

- Connector Registry gives administrators a single place to control which data sources and tools agents can use across an organization.

OpenAI also maintains an overview of the platform that summarizes goals and expected benefits. For a structured tour of capabilities, see the OpenAI agent platform overview.

Agent Builder: from whiteboard sketch to versioned workflow

Every production agent starts as a sketch. Historically that sketch became a patchwork of prompts, scripts, and webhooks spread across different repositories with no single source of truth. Agent Builder brings that sketch onto one canvas. You lay out nodes for retrieval, tool calls, policy checks, and human handoffs. You connect them with lines that declare how data flows and where decisions get made. You press run to preview a flow, bake in an inline evaluation, and then version the entire workflow so product and legal can review what changed.

A helpful way to picture this is a film storyboard. Each panel shows a scene in the agent’s journey: gather context, decide which tool to call, draft a response, run a policy check, and ask for human approval when needed. Storyboards help everyone discuss the same movie. Agent Builder gives teams the same shared artifact for agents and turns vague intent into a reproducible program.

Two implications follow immediately:

- Shorter build loops. A developer and a subject matter expert can build together in the same interface, so product decisions happen where the work is visible.

- An audit trail you can trust. Versioning means you can answer the hard question after an incident or a failure: exactly what changed and why.

If your organization is already mapping the rise of platform level agent runtimes, this slots into the larger story we covered in our look at the agent runtime wars. A visual builder is quickly becoming table stakes, but maturity shows in how you version, test, and approve each change.

ChatKit: shipping a conversation, not just an API

Many promising agents never launch because teams underestimate the front end. You need a chat surface that looks on brand, supports streaming updates, handles grounded citations, and deals with authentication, roles, and session recovery. You usually also need accessibility that meets company standards. Teams easily spend weeks recreating the same patterns.

ChatKit is a set of building blocks for that surface. It gives you a customizable chat user interface that slots into your application and speaks the same events as your agent. It supports common interaction patterns like follow up questions, suggestions, and tool results that render as structured cards. It focuses on the chores that stall launches so your team can focus on the domain specific parts users actually care about.

If you are a startup, this matters because your first version has to feel real in a browser, not only in a terminal. If you are a Fortune 500 company, this matters because you want consistent interfaces across products with fewer custom forks per business unit. Treat OpenAI’s time savings claims as a benchmark to beat in your own rollout plan.

Evals with trace grading: measure the whole run

Calling an agent successful because it answered five questions correctly in a sandbox does not move the needle in production. You need evaluation that tracks behavior over hundreds or thousands of real traces and grades the middle steps, not just the outcome. That is where trace grading comes in.

OpenAI’s expanded Evals add four practical capabilities:

- Datasets let you build and grow a bank of real tasks over time. You do not need to design a perfect benchmark on day one.

- Trace grading scores an entire run. You set pass criteria and let graders evaluate the last 100 or 1,000 executions to find regressions and drift.

- Automated prompt optimization proposes improved prompts based on grader outputs and human annotations so you close the loop faster.

- Third party model support lets you compare OpenAI models with other providers in one place, which is useful when procurement wants options.

A concrete example: imagine a customer support agent that indexes manuals and calls internal tools to issue refunds. Your pass criteria might include four checks. Did the agent retrieve the correct policy section. Did it select the correct refund tool. Did it fill parameters accurately. Did it present a compliant final explanation. Trace grading lets you score those checks across a week of traffic. If a new prompt improves final answers but increases tool misuse, you will see it immediately.

Connector Registry: enterprise plumbing for trust and speed

Most large organizations already allow agents to touch shared drives, ticketing systems, and messaging tools. The problem is that every team wires those connections differently, which creates policy drift. The Connector Registry gives administrators a central place to define which connectors exist, which scopes they expose, and which workspaces can use them. The early list includes Dropbox, Google Drive, Microsoft SharePoint, and Microsoft Teams, along with Model Context Protocol servers.

This matters for two reasons. First, it prevents the common launch delay where security only discovers a rogue connector during final review. Second, it reduces the rework cost when a vendor rotates credentials or changes an application programming interface. Change it once in the registry and all dependent agents pick up the update.

Guardrails as a first class building block

Agent Builder supports a guardrails layer that masks or flags personally identifiable information, detects jailbreak attempts, and applies policy checks before an agent proceeds. Because guardrails are modular and open source friendly, teams can standardize a baseline set of protections across business units and still add domain specific rules when needed. In practice, this allows you to route certain tasks into a human in the loop workflow while letting low risk tasks flow straight through.

Here is a simple mental model. Treat guardrails like airport security. Everyone goes through a basic scanner. If something triggers a rule, pull that bag aside for a manual check. Do not shut down the airport. Keep the line moving while adding scrutiny where it matters.

What is available now and how to plan

As of October 6, 2025, OpenAI states that ChatKit and the new Evals capabilities are generally available. Agent Builder is in beta, and the Connector Registry is rolling out in beta to customers with a Global Admin Console. OpenAI positions all of these as included with standard application programming interface model pricing. That pricing claim is important for planning. It suggests you can modernize how you build agents without a separate platform contract. Review your current usage forecasts and rerun your cost model to confirm the totals for your case.

If your organization practices staged rollouts, treat AgentKit like any other platform change. Start with one agent and one business unit. Prove the cycle time reduction in a measured way. Then templatize your approach and scale the pattern to adjacent teams. For more on how this consolidates into a coordinated map of systems, see our take on the enterprise AI control plane.

Reinforcement fine tuning to push the ceiling

OpenAI is also offering reinforcement fine tuning that targets model behavior in agent contexts. In practice this helps with two pain points. First, teaching an agent to call the right tool at the right time rather than relying only on prompts. Second, setting custom graders for what your organization values most, such as regulatory phrasing or latency targets. Fine tuning will not replace a clear workflow or a good user interface, but it can raise the ceiling of what your agent can reliably do within that workflow.

For teams that already run offline evaluator jobs, plan to compare three versions on a fixed dataset. Baseline prompts only, prompts plus reinforcement fine tuning, and prompts plus fine tuning plus updated guardrails. This turns an expensive debate into a measurable tradeoff between performance, reliability, and cost.

How startups can use AgentKit this quarter

A founder with a dozen people cannot afford a multi quarter platform project. The advantage of AgentKit is that you can commit to tight, specific milestones.

- Pick one workflow with a clear economic signal. For example, lead qualification, onboarding, or scheduling. Avoid the temptation to build a general assistant.

- Build the first pass in Agent Builder and use a template to cut setup time. Wire only the connectors you need and keep scopes narrow.

- Use ChatKit to ship a real interface fast. Set an internal service level objective for response time and track it.

- Create a 50 task dataset that reflects your real traffic. Use trace grading to find where the agent fumbles. Fix the top two failure modes, then retest.

- Ship to 5 percent of users as a canary. Watch regressions in trace grading. Roll forward if clean. Roll back if not. Keep logs so you can explain every change to customers.

By week four you should know if the agent saves money or time. If not, kill it and pick a narrower use case. For context on how the broader ecosystem is industrializing around this idea, revisit our analysis of how agent factories arrive.

How large enterprises can standardize without slowing down

Enterprises often have dozens of teams building agents in parallel. Without a shared platform, the result is redundant code, scattered policy, and inconsistent interfaces. AgentKit offers a way to harmonize your efforts without stopping the program.

- Establish a small platform group that owns the Connector Registry and guardrails. Their job is to prevent policy drift, not to micromanage every workflow.

- Create a reference implementation using Agent Builder. Publish versioned templates for common patterns like retrieval augmented generation, human review, or multi tool orchestration.

- Mandate trace grading with organization level pass criteria. Let teams add domain specific checks, but keep a shared baseline.

- Standardize on ChatKit as the default interface unless a product has a documented reason to diverge. This saves you a month per project in user interface work and unifies training and help content.

- Review reinforcement fine tuning for a small set of high value workflows. Use a fixed evaluation dataset and treat compute spend like any other budget line item.

Measured this way, the benefit of AgentKit is not only speed. It is the ability to show executives and auditors a clean map of what is live, what is changing, and how quality is measured.

Risks, gaps, and how to mitigate them

No platform decision is free. Anticipate these issues and plan accordingly.

- Beta components. Agent Builder and the Connector Registry are in beta, which means features and interface details can shift. Keep a changelog and pin versioned templates so you can roll back if a change breaks a workflow.

- Vendor concentration. AgentKit tightens your linkage to OpenAI services. Mitigate with evaluation support for third party models and by keeping your domain logic in versioned code or templates you control.

- Evaluation blind spots. Trace grading is only as good as the checks you define. Start simple. Expand the rubric as you observe new failure modes in production.

- Connectors and least privilege. A central registry reduces risk, but scopes can still sprawl. Adopt a quarterly access review the same way you would for identity and access management.

- Human in the loop cost. Adding approvals everywhere will kill your gains. Use guardrails to narrow the paths that require review and let low risk tasks flow through.

A 10 day starter plan

Day 1: Pick a single high value workflow and define a success metric such as tickets resolved without escalation or minutes to complete a task.

Day 2: Build the minimum viable workflow in Agent Builder. Include a single connector and a single guardrail.

Day 3: Use ChatKit to embed the agent in a test environment. Invite internal users from product, support, and legal.

Day 4: Draft a 50 task dataset for Evals and define pass criteria for trace grading. Include at least one policy check.

Day 5: Run 100 executions. Fix the top two issues. Rerun the evals.

Day 6: Expand the dataset to 100 tasks. Add a second guardrail if needed. Do not add more connectors yet.

Day 7: Launch to a 5 percent canary. Monitor trace grading results. Track user feedback in a shared document.

Day 8: If clean, raise to 20 percent. If not, roll back and fix.

Day 9: Prepare a one page summary with metrics, a screenshot of the Agent Builder version, and a list of guardrails and connectors in use.

Day 10: Decide. Ship to everyone, iterate for another week, or stop and choose a smaller problem.

What changes next

AgentKit does not invent the idea of agents. It standardizes the messy parts that kept them from shipping at scale. Visual workflows cut coordination cost. A shared user interface removes weeks of repetitive work. Trace graded evals turn stories into data. A connector registry gives security and compliance a real handle on risk.

Startups gain a faster path to product proof. Enterprises gain a map and a control plane. In both cases, the goal is the same: move from experiments that excite to systems customers can trust. When you can design, ship, measure, and govern agents in one place, you spend less time stitching tools and more time building value. That is how prototype season ends and production season begins.