AI takes the mic: MeetGeek agents attend your meetings

MeetGeek has launched AI Voice Agents that join Zoom, Google Meet, and Microsoft Teams as real attendees. They speak, take turns, and update your CRM or ticketing tools in real time. This guide shows how to run a focused 30-day pilot and measure its ROI.

Breaking: AI shows up to your meeting

On October 31, 2025, MeetGeek announced AI Voice Agents that join Zoom, Google Meet, and Microsoft Teams as real participants. These agents do not just take notes. They listen, speak, follow instructions in real time, and push updates into your systems while people are still talking. The company framed the launch as a jump from passive transcription to active participation, with integrations across enterprise tools. For the top-line claims and compliance posture, see the official launch announcement.

For the past few years, meeting software was a silent scribe. It captured audio, produced transcripts, and generated summaries. Useful, yes, but the conversation still relied on a human to turn words into action. MeetGeek’s move is a shift toward agents as attendees, not just observers. It is a notable moment in the march from chatbots to workbots, a path we explored in our analysis of how the browser becomes the agent.

From silent scribe to speaking teammate

A speaking agent changes two stubborn bottlenecks that summaries never solved.

- It improves the meeting while the meeting is happening. The agent can nudge toward missing details, clarify a date or quantity, and correct misunderstandings before they spread.

- It turns talk into action without waiting for a recap. Records, tickets, tasks, and follow-ups get created as facts are spoken, not hours later.

Picture a sales discovery call. The AI notices that budget was never discussed. It waits for a natural pause, then says, “Before we wrap, can we confirm a budget range so we can qualify next steps correctly?” That subtle prompt fills a field that would otherwise require another email. On a support triage call, the agent gathers the product version, the reproduction steps, and the exact error wording, then opens a ticket with a clean summary. When a specialist joins, everyone is already fifteen minutes ahead.

In operations, the agent can handle routine verification calls. It confirms a tax identification number, cross-checks a shipping address, summarizes the results, and logs a checklist directly in an ERP. That is no longer a calendar event. It is a background task that completes reliably.

Why voice and turn taking matter

Speaking changes the physics of a meeting. Three mechanics matter most.

- Turn taking. Humans trade the floor using silence, breath, intonation, and eye contact. A competent agent must detect those cues, decide when to take a turn, and yield gracefully when interrupted. Without this skill, the agent is either a wallflower or a bulldozer. Millisecond-level timing separates helpful from annoying.

- Real-time understanding. A note taker can wait for post-processing. A speaking agent cannot. It needs streaming recognition, incremental intent detection, and a way to recover from a misheard name or number. If it is unsure about a shipping code, it should restate the code as a question, not as a fact.

- Fast synthesis. Good teammates speak briefly. To do that, the agent must compress context, pick the most important question, and keep turns short unless asked to elaborate. Short, precise turns earn trust.
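The three mechanics above translate into a small decision policy. The sketch below is a minimal illustration, not MeetGeek's implementation: it assumes an upstream voice activity detector and intent model supply the `FloorState` flags, and the 700 ms pause threshold is a hypothetical value chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    WAIT = "wait"    # keep listening
    SPEAK = "speak"  # take (or keep) the floor
    YIELD = "yield"  # stop talking immediately


@dataclass
class FloorState:
    agent_speaking: bool      # is the agent's audio currently playing?
    human_speaking: bool      # does voice activity detection hear a human?
    silence_ms: int           # milliseconds since a human last spoke
    addressed_to_agent: bool  # was the last utterance a direct question to the agent?


# Hypothetical pause length; real systems tune this per platform and language.
PAUSE_THRESHOLD_MS = 700


def decide_turn(state: FloorState, has_something_to_say: bool) -> Action:
    """Pick the agent's next move using three simple turn-taking rules."""
    # Rule 1: while the agent talks, any human speech makes it stop at once.
    if state.agent_speaking:
        return Action.YIELD if state.human_speaking else Action.SPEAK
    # Never start a turn while a human holds the floor, or with nothing to add.
    if state.human_speaking or not has_something_to_say:
        return Action.WAIT
    # Rule 2: a direct question invites an immediate reply.
    if state.addressed_to_agent:
        return Action.SPEAK
    # Rule 3: otherwise take the floor only after a natural pause.
    return Action.SPEAK if state.silence_ms >= PAUSE_THRESHOLD_MS else Action.WAIT
```

Putting the interruption check first is the design choice that matters: no other rule can keep the agent talking over a person.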

The practical effect is a new meeting dynamic. You can let the agent handle the cold open on a candidate screen, then a recruiter joins for the last ten minutes to assess nuance. You can run three discovery calls in parallel with an agent qualifying each lead against the same checklist, then a salesperson jumps into the one that matches your ideal customer profile. For a deeper view on evaluating agent behavior, see how teams are adopting agent-to-agent QA methods.

Under the hood, in plain language

If the agent is a teammate, what is inside the suit? Four layers tell the story.

- Ears. The platform streams audio to a low-latency speech recognizer that outputs words as they are spoken. It also diarizes, which means it labels who said what, so the agent replies to the right person and logs the right names.

- Brain. A planning model turns partial words into intent. Did the customer answer the budget question indirectly? Did they give a number? Should the agent ask a follow-up or move on? The plan updates as people speak.

- Voice. Text-to-speech converts the response into audio with natural cadence. For turn taking, the agent should start speaking only when invited by silence or a direct question, and it should stop the instant a human interrupts.

- Hands. The agent writes to systems like Salesforce, HubSpot, Jira, Notion, or a homegrown database through connectors or webhooks. This is the leap from summaries to action. When a call ends, tasks and records already exist.
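To make the "brain" layer concrete, here is a minimal sketch of how diarized segments could feed a qualification checklist, mirroring the budget example earlier. Everything in it is hypothetical: `CHECKLIST`, `Segment`, and `CallState` are illustrative names, and keyword matching stands in for the intent model a real platform would use.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical qualification checklist: CRM field -> question the agent asks.
# Dict order is the order in which missing fields get asked about.
CHECKLIST: Dict[str, str] = {
    "budget": "Before we wrap, can we confirm a budget range so we can qualify next steps correctly?",
    "timeline": "When are you hoping to have this in place?",
    "decision_maker": "Who else will be involved in the decision?",
}


@dataclass
class Segment:
    speaker: str  # diarization label from the "ears" layer
    text: str     # words recognized for this stretch of speech


@dataclass
class CallState:
    # field name -> (what was said, who said it), so the log names the right person
    captured: Dict[str, Tuple[str, str]] = field(default_factory=dict)


def extract_fields(segment: Segment, state: CallState) -> None:
    """Toy "brain" step: keyword matching stands in for a real intent model."""
    lowered = segment.text.lower()
    rules = {
        "budget": ("budget", "$"),
        "timeline": ("quarter", "month", "week"),
        "decision_maker": ("decision", "sign off"),
    }
    for name, keywords in rules.items():
        if any(k in lowered for k in keywords):
            # Keep the first answer; later mentions do not overwrite it.
            state.captured.setdefault(name, (segment.text, segment.speaker))


def next_question(state: CallState) -> Optional[str]:
    """Return the first checklist question whose field is still missing."""
    for name, question in CHECKLIST.items():
        if name not in state.captured:
            return question
    return None
```

In a live call, `extract_fields` would run on each segment as it streams in, and `next_question` would only be consulted when the turn-taking policy allows the agent to speak.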

MeetGeek’s own positioning emphasizes this stack and the operational outcomes it unlocks. The company describes assigning agents to specific meeting types, running multiple calls in parallel, and syncing outcomes into connected tools. A high-level feature map is on the Voice Agents product page.

What changes for sales, support, and ops today

Here is how agents as attendees move the needle this quarter.

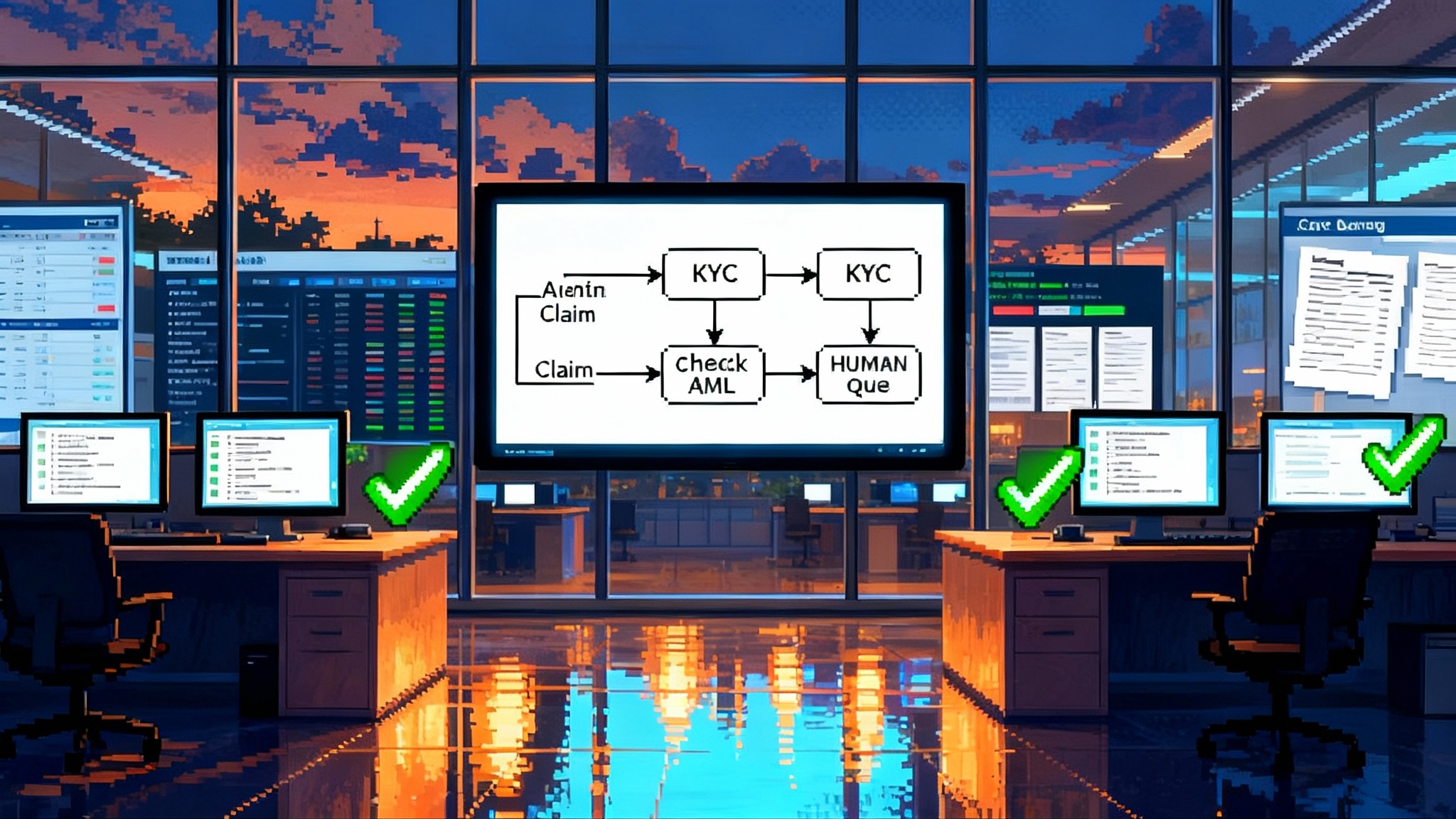

- Sales discovery and demos. The agent follows a clear qualification script, logs answers to your CRM, and prompts for missing data. It can draft a summary in your email client before you leave the call. You can measure time saved per rep per week and the percentage of discovery fields completed on the first call.

- Inbound triage for support. The agent collects environment details, reproduces steps, and captures error messages with exact wording. It opens a ticket with reproduction notes and priority. A human specialist joins only when needed, which shortens queues and improves first contact resolution.

- Recruiting screens. The agent runs consistent first-pass interviews with the same five questions, the same rating scale, and structured notes. Once a candidate passes, the recruiter focuses on motivation and team fit instead of basic facts.

- Operations and compliance calls. Think vendor verification, routine policy acknowledgments, or checklist-driven inspections. The agent handles the script and writes the outcome to the right repository. The benefit is predictability and clean audit trails.

If you are already exploring automated back office workflows, compare this approach with our coverage of AI office manager automation. The common thread is moving from after-the-fact summaries to in-the-moment actions that stick.

Why startups may outrun the suites

Incumbent suites are powerful, yet startups have three edges in this race.

- Incentives. A suite that already owns meetings, email, and documents has little reason to disrupt its own interfaces mid-quarter. A startup wins only by changing the experience, so it takes bets on new patterns like agents as attendees.

- Velocity. A small team can ship a new interrupt rule, a new escalation phrase, or a new integration in a week. Turn taking is an art, not a checklist. The teams that tune quickly will feel more human more often.

- Vertical focus. Startups can build for a narrow job. A screening agent for a healthcare clinic sounds different and asks different questions than an agent for enterprise software discovery. Even if both use the same base models, the prompts, guardrails, and integrations are specialized.

This does not mean the suites will miss the moment. It means the first wave of revenue-producing agents may come from companies that assemble best-of-breed components and ship faster, then plug into larger ecosystems once patterns stabilize.

Guardrails that earn trust

Autonomy without care breaks trust. Buyers should insist on these controls and test them in pilots.

- Clear, audible consent. Every participant must know an AI is present and speaking. Put the agent’s name in the invite, show it as a tile in the meeting grid, and have it introduce itself in one sentence that states its purpose. If anyone withdraws consent, the agent should leave the meeting.

- Recording and logging transparency. The agent should announce what it will store, for how long, and where. The system should produce searchable logs that show what the agent did, when, and why. That matters for audits and for learning.

- Access control and redaction. Limit who can see transcripts and audio, and redact sensitive fields like payment card numbers. Give administrators retention and deletion controls that match company rules.

- Certifications and readiness. Look for SOC 2 Type 2 certification. Ask how the vendor meets HIPAA obligations when relevant. Confirm whether the vendor supports GDPR requirements and regional data hosting in the United States or the European Union.

- Escalation and human override. An agent should recognize when it is out of its depth and invite a human to take the floor. Write this into the instructions, and test it during the pilot.

- Platform quirks and beta limits. Early agents may limit speaking duration or barge-in behavior, or may require specific host permissions on certain meeting platforms. Plan for these constraints and write a runbook.
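Redacting payment card numbers is one control you can test directly during a pilot. Here is a minimal sketch, assuming transcripts are available as plain text. It finds runs of 13 to 19 digits and masks only those that pass the Luhn checksum used by card numbers, so most other long IDs survive. This is an illustrative heuristic, not any vendor's redaction feature, and it will miss numbers spoken as words.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def redact_cards(text: str) -> str:
    """Replace Luhn-valid card numbers with a mask that keeps the last four digits."""

    def mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        if not luhn_valid(digits):
            return match.group()  # leave order numbers and other IDs untouched
        return "[CARD ****" + digits[-4:] + "]"

    return CARD_PATTERN.sub(mask, text)
```

Running rehearsal transcripts through a check like this is a quick way to confirm the vendor's own redaction catches what yours catches.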

The 30 day pilot playbook

You do not need an overhaul to test this. You need a narrow problem, a clear metric, and a month of focused execution.

Week 0: scoping and success metrics

- Pick one workflow that repeats at least five times per week. Sales discovery and support triage are strong candidates. Avoid strategic reviews or board meetings.

- Define one primary metric and one secondary metric. For sales, a primary metric could be discovery field completeness on the first call. A secondary metric could be time to next step scheduled. For support, a primary metric could be time to first full ticket with reproduction steps. A secondary metric could be customer satisfaction on the first reply.

- Write a consent and disclosure script of one or two sentences that the agent will say at the start. Keep it clear and friendly.
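For the sales example, both metrics can be computed from call records you already have. A minimal sketch: the field names in `REQUIRED_FIELDS` and the `CallRecord` shape are hypothetical placeholders for your own CRM schema. Using the median for time to next step keeps one slow deal from skewing the result.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Dict, List, Optional

# Hypothetical required discovery fields; substitute your own CRM fields.
REQUIRED_FIELDS = ("budget", "timeline", "decision_maker", "pain_point")


@dataclass
class CallRecord:
    fields: Dict[str, Optional[str]]  # field name -> value captured on the call
    ended_at: datetime                # when the discovery call ended
    next_step_at: Optional[datetime]  # when a next step was scheduled, if ever


def completeness(call: CallRecord) -> float:
    """Primary metric: share of required fields filled on the first call."""
    filled = sum(1 for name in REQUIRED_FIELDS if call.fields.get(name))
    return filled / len(REQUIRED_FIELDS)


def median_hours_to_next_step(calls: List[CallRecord]) -> Optional[float]:
    """Secondary metric: median hours from call end to a scheduled next step."""
    waits = [
        (c.next_step_at - c.ended_at).total_seconds() / 3600
        for c in calls
        if c.next_step_at is not None
    ]
    return median(waits) if waits else None
```

Calls with no next step are excluded from the median, so report their count alongside it.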

Week 1: setup and rehearsal

- Create a dedicated agent profile for the chosen workflow, not a general-purpose bot. Give it a specific name, role, and tone that match your brand.

- Write a prompt that includes goals, the exact questions to ask, information to capture, when to yield to a human, and what to log where. Specify how to confirm key facts. Add a rule to restate numbers and codes to avoid mishearing.

- Connect the right systems. Tie the agent into your CRM, ticketing, or documentation tools. Use a sandbox first and verify field mappings end to end.

- Rehearse with internal volunteers. Run five calls where teammates try to confuse the agent. Interrupt it mid-sentence. Speak quickly. Use acronyms. See how it recovers. Update prompts and escalation rules after each test.
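The rule to restate numbers and codes is easy to encode and easy to rehearse. A minimal sketch, assuming the speech recognizer reports a confidence score for each captured value; `CONFIRM_BELOW`, `spell_out`, and `confirmation_turn` are hypothetical names. Reading a code one character at a time reduces the chance that “47X2” is heard back as “forty-seven X two”.

```python
# Hypothetical threshold below which the agent asks instead of asserting.
CONFIRM_BELOW = 0.9


def spell_out(code: str) -> str:
    """Read a code one character at a time, dropping separators like '-'."""
    return ", ".join(ch for ch in code if ch.isalnum())


def confirmation_turn(label: str, value: str, confidence: float) -> str:
    """Always restate a heard number or code; phrase it as a question when unsure."""
    if confidence < CONFIRM_BELOW:
        return f"Just to confirm, the {label} is {spell_out(value)}?"
    return f"Got it, the {label} is {spell_out(value)}."
```

Volunteers in the rehearsal calls can deliberately mumble codes to check that low-confidence values come back as questions.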

Week 2: limited production

- Invite the agent to real calls for the chosen workflow with one small team. Keep a human present and instruct them to let the agent lead unless it falters.

- Track the primary and secondary metrics daily. Also capture qualitative notes from humans in the room. Where did the agent help? Where did it stumble?

- Tighten the instructions. If the agent asks a question that has already been answered, add a rule to check for that field before speaking. If it misses a necessary follow-up, add a rule to ask it whenever a trigger phrase appears.
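Both tightening rules can be expressed as checks the agent runs before it speaks. A minimal sketch: the trigger phrases, questions, and field names are hypothetical examples of rules a team might add during week 2, and plain substring matching stands in for whatever intent detection the platform provides.

```python
from typing import Dict, List, Set

# Hypothetical trigger rules: phrase heard -> follow-up the agent must ask.
TRIGGER_FOLLOW_UPS: Dict[str, str] = {
    "security review": "Who runs your security review, and how long does it usually take?",
    "another vendor": "Which other vendors are you evaluating?",
}

# Hypothetical questions, keyed by the CRM field each one fills.
FIELD_QUESTIONS: Dict[str, str] = {
    "budget": "Can we confirm a budget range?",
    "timeline": "When do you need this in place?",
}


def pending_questions(utterance: str, captured: Dict[str, str], already_asked: Set[str]) -> List[str]:
    """Questions the agent may still ask after hearing `utterance`."""
    questions = []
    lowered = utterance.lower()
    # Rule: ask the follow-up whenever a trigger phrase appears (once per call).
    for phrase, follow_up in TRIGGER_FOLLOW_UPS.items():
        if phrase in lowered and follow_up not in already_asked:
            questions.append(follow_up)
    # Rule: check that a field is still empty before asking for it.
    for field_name, question in FIELD_QUESTIONS.items():
        if not captured.get(field_name) and question not in already_asked:
            questions.append(question)
    return questions
```

Triggered follow-ups are listed first because they respond to what was just said; missing-field questions can wait for the next natural pause.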

Week 3: automation and integrations

- Turn on automatic actions after the call. For sales, that might be sending a follow-up draft and logging a next step. For support, that might be opening a ticket with correct labels and a one-sentence summary.

- Add a supervisor review queue. Humans approve outbound emails and key updates for a few days. If accuracy holds, loosen the supervision.

- Start measuring meeting time saved and parallel capacity gained. For certain workflows, the agent can run a call without a human present until the last few minutes. Quantify that change.
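The supervisor review queue needs an explicit rule for when to loosen supervision. One way to sketch it, assuming each reviewed action is marked as either approved unchanged or edited: keep humans in the loop until a rolling window of recent reviews clears an accuracy bar. `ReviewGate`, the window size, and the threshold are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ReviewGate:
    """Route agent actions to humans until recent accuracy clears a bar."""

    window: int = 50          # number of recent reviewed actions to consider
    threshold: float = 0.95   # share approved unchanged needed to loosen supervision
    history: deque = field(default_factory=deque)

    def record_review(self, approved_unchanged: bool) -> None:
        self.history.append(approved_unchanged)
        if len(self.history) > self.window:
            self.history.popleft()  # keep only the most recent `window` reviews

    def accuracy(self) -> float:
        return sum(self.history) / len(self.history) if self.history else 0.0

    def needs_review(self) -> bool:
        # Stay supervised until the window is full and accuracy holds.
        return len(self.history) < self.window or self.accuracy() < self.threshold
```

Because the window rolls, a run of new mistakes puts the agent back under review automatically.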

Week 4: evaluation and decision

- Compare the pilot team’s metrics before and after. Did discovery completeness rise? Did time to next step fall? Did support tickets include reproduction steps more often? Use numbers, not vibes.

- Debrief with customers or candidates who spoke to the agent. Ask whether the conversation felt clear and respectful. Ask what felt robotic or repetitive.

- Decide on graduation criteria. If the agent met the primary metric, expand to a second workflow. If it fell short, identify the top three failure modes and schedule a second pass with specific fixes. Share results with stakeholders and document the runbook.
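Graduation criteria work best when they are written down before the pilot ends. A minimal sketch of the comparison, assuming one baseline value and one pilot value per metric. The function names and the target percentage are hypothetical, and a real evaluation should also consider how many calls sit behind each number before trusting a percent change.

```python
from typing import Dict


def relative_change(before: float, after: float) -> float:
    """Percent change from the baseline period to the pilot period."""
    if before == 0:
        raise ValueError("baseline is zero; report the absolute change instead")
    return (after - before) / before * 100


def graduation_decision(
    before: Dict[str, float],
    after: Dict[str, float],
    primary: str,
    target_pct: float,
    higher_is_better: bool = True,
) -> str:
    """Apply a pre-agreed target to the primary metric only."""
    change = relative_change(before[primary], after[primary])
    # For metrics like time to next step, a decrease is the improvement.
    improvement = change if higher_is_better else -change
    if improvement >= target_pct:
        return "expand to a second workflow"
    return "fix top three failure modes, then rerun"
```

Passing `higher_is_better=False` covers metrics where a drop is the goal, such as hours to next step or ticket queue age.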

How to prepare your stack

A successful pilot depends on solid plumbing and clear rules of engagement.

- Identity and access. Use least privilege. Give the agent a service identity with only the fields and objects it needs. Turn on logging at the integration layer so you can trace writes and deletes.

- Data hygiene. Standardize picklists, field names, and validation rules before the pilot. The agent can only write cleanly if the data model is predictable.

- Error handling. Decide what the agent should do when something fails. Should it retry? Should it pause and ask for human help? Put those rules in writing, then encode them.

- Human factors. Teach the team how to speak with an agent in the room. Acknowledge it. Use natural pauses. If you interrupt, the agent should stop. These social rules make a larger difference than most settings screens.
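The error-handling rules above can be encoded as retry-then-escalate. A minimal sketch, assuming the connector exposes each write as a plain callable. `write_with_retry` and `EscalateToHuman` are hypothetical names, and the exponential backoff schedule (0.5 s, then 1 s) is one common choice rather than a requirement. Every attempt is appended to an audit log so writes can be traced later.

```python
import time
from typing import Callable, List


class EscalateToHuman(Exception):
    """Raised when the agent should pause and ask a person for help."""


def write_with_retry(
    write: Callable[[], None],
    audit_log: List[str],
    attempts: int = 3,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Try a write a few times with backoff, logging each attempt, then escalate."""
    for attempt in range(1, attempts + 1):
        try:
            write()
            audit_log.append(f"write succeeded on attempt {attempt}")
            return
        except Exception as exc:  # in practice, retry only transient errors (timeouts, 5xx)
            audit_log.append(f"attempt {attempt} failed: {exc!r}")
            if attempt < attempts:
                sleep(base_delay_s * 2 ** (attempt - 1))  # exponential backoff
    raise EscalateToHuman(f"write failed after {attempts} attempts")
```

Injecting `sleep` keeps the function testable without real delays.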

How to measure ROI without hand-waving

Leaders will ask for proof. Set up measurement like you would for any new system.

- Time saved per role. Have reps and human support agents log minutes per call for a baseline week before the pilot and throughout the pilot itself. Be conservative. If the agent saves five minutes on a twelve-minute discovery call, that compounds fast over a week.

- Quality lift. Measure discovery field completeness, ticket reproduction quality, or the structure of interview notes. These are leading indicators that reduce rework.

- Conversion and throughput. Watch meetings per rep per day, time to first next step, and queue age for support tickets. These lag a bit but matter to the business.

- Error rate. Track corrections required by humans on outbound emails, ticket labels, or CRM entries. This prevents lipstick-on-a-pig accounting.
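Time saved and error rate combine into one honest weekly number. A minimal sketch with hypothetical names; `minutes_per_correction` is an assumption you would estimate from your own time logs. Subtracting correction time from gross savings is what keeps the ROI figure from overstating the agent's value.

```python
from dataclasses import dataclass


@dataclass
class PilotWeek:
    calls: int                     # calls the agent attended this week
    minutes_saved_per_call: float  # conservative figure from the time logs
    actions_written: int           # emails, tickets, CRM entries the agent produced
    actions_corrected: int         # how many of those a human had to fix


def weekly_minutes_saved(w: PilotWeek) -> float:
    """Gross minutes saved across all calls in the week."""
    return w.calls * w.minutes_saved_per_call


def error_rate(w: PilotWeek) -> float:
    """Share of agent actions that needed a human correction."""
    return w.actions_corrected / w.actions_written if w.actions_written else 0.0


def net_minutes_saved(w: PilotWeek, minutes_per_correction: float) -> float:
    """Gross savings minus the human time spent fixing the agent's mistakes."""
    return weekly_minutes_saved(w) - w.actions_corrected * minutes_per_correction
```

With illustrative numbers: 20 calls at five minutes saved each is 100 gross minutes; if humans correct 6 of 80 agent actions at about four minutes apiece, net savings drop to 76 minutes and the error rate is 7.5 percent.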

Where this is heading

Agents will grow from single-purpose assistants into orchestration hubs that coordinate several systems at once. Expect richer task graphs, smarter recovery when people talk over the agent, and better multi-speaker awareness that tracks who has agreed to what. Expect more controls too, including per-meeting disclosures, granular access rights, and default data residency options by region.

We also expect the boundary between meeting agents and browser agents to blur. An attendee that can speak and a browser that can operate websites are converging into one class of worker. As we argued in our analysis of how the browser becomes the agent, the interface is just another surface for an agentic workflow. Voice adds speed and trust when done well.

The takeaway

An AI can attend, speak, and do the work. MeetGeek’s Voice Agents are a clear signal that the market has crossed from note taking to action taking. If you run sales, support, or operations, a 30-day pilot is not a science project. It is a practical way to deliver cleaner data, faster handoffs, and fewer dropped balls before the quarter ends. Start narrow, measure honestly, and graduate from summaries to action. Teams that learn this skill early will talk about work more clearly and see more work get done while the room is still talking.