AI That Clicks and Types, and the New Software Social Contract

Google just put agents on the same screens people use. With Gemini Computer Use and Gemini Enterprise, automation shifts from hidden pipes to visible clicks and keystrokes, creating a safer, auditable path to speed.

The browser just became the civic commons of software

In October 2025, Google made two moves that quietly redrew the map of automation. First, it unveiled a specialized model that can operate software the way people do. Rather than calling hidden interfaces, it clicks, types, and drags across visible screens. In its own announcement, Google framed this as a developer preview for user interface control and showed it working with a real cursor and keystrokes. If you want the primary source, see how Google introduces the Computer Use model.

Two days later, Google announced Gemini Enterprise, a managed platform for deploying these capabilities in the workplace with governance, identity, and administrative controls. The pitch is direct: organizations can create and run agents that work across the tools employees already use, with policy and oversight built in. Read the strategic positioning in Sundar Pichai outlines Gemini Enterprise.

At first glance, Computer Use looks like a feature release. Look closer and you will see a move from closed interfaces to public screens, and with it a new social contract. An agent that can do the job on the same surface a human sees is no longer asking for privileged backend access. It is earning trust in plain sight. That creates a shared, auditable space between people and machines, and it can accelerate capability while improving safety if we encode the right norms into the interface layer.

From APIs to public screens

For a decade, automation relied on application programming interfaces. If a service provided clean endpoints, you could integrate. If not, you were blocked. That model made service owners the gatekeepers of what could be automated. Robotic process automation tried to bridge the gap by scripting clicks, but scripts were brittle and narrow in scope.

Screen-native agents are different in two ways.

- They perceive the full screen. A capable model can read text, detect visual structure, reason about forms and tables, and decide which element is the right one to interact with. It does not memorize coordinates. It interprets context.

- They act with human primitives. The agent opens a tab, types into a field, scrolls a pane, drags a file, and clicks submit. There are no secret back doors. These are the same public roads a person uses.

This interface-first approach changes what is possible in practice.

- Reach: If a task is possible for a person with a browser, it is in scope for an agent. You do not need the vendor to publish a new endpoint or grant special keys.

- Transparency: Actions can be logged at the user interface layer. A recording of the cursor and keystrokes is legible to managers, auditors, and customers.

- Portability: Reasoning about patterns like nav bars, tables, filters, and checkouts makes agents more resilient when a page changes its exact layout.

When agents operate through the same surface as people, screens become a civic commons where etiquette, safety, and accountability must be negotiated.

The civic user interface guideline

A civic commons works when rules of the road are visible and easy to follow. Driving is possible because roads have lanes, speed limits, and signals. We need the equivalent for user interfaces if agents and people are going to share the same streets.

Call this a civic user interface guideline. It is not a formal standard issued by a standards body. It is a practical set of patterns and defaults that make agent behavior safe, debuggable, and respectful of human users.

Here is a pragmatic first edition.

- Human-visible identity: When an agent is active in a browser session, show a clear presence indicator. A small banner in the window chrome with agent name, current task, and who authorized it is enough. No overlays that block interaction. A subtle but persistent marker wins.

- Rate and rhythm etiquette: Default to human-like pacing with configurable ceilings. For example, allow no more than one form submission per second and no more than three concurrent tabs per site unless the site declares higher limits. Predictable rhythm avoids load spikes.

- Respect for occupancy: When a human is typing in a field, agents should wait. If a person's cursor is in a form, the agent can observe but not act. This prevents race conditions and surprises.

- Visibility into intent: Before risky actions, present a short, plain-language confirmation card that a person can read or that can be stored in a log. Example: I am about to transfer 4,000 dollars from account A to account B using the Q3 vendors template.

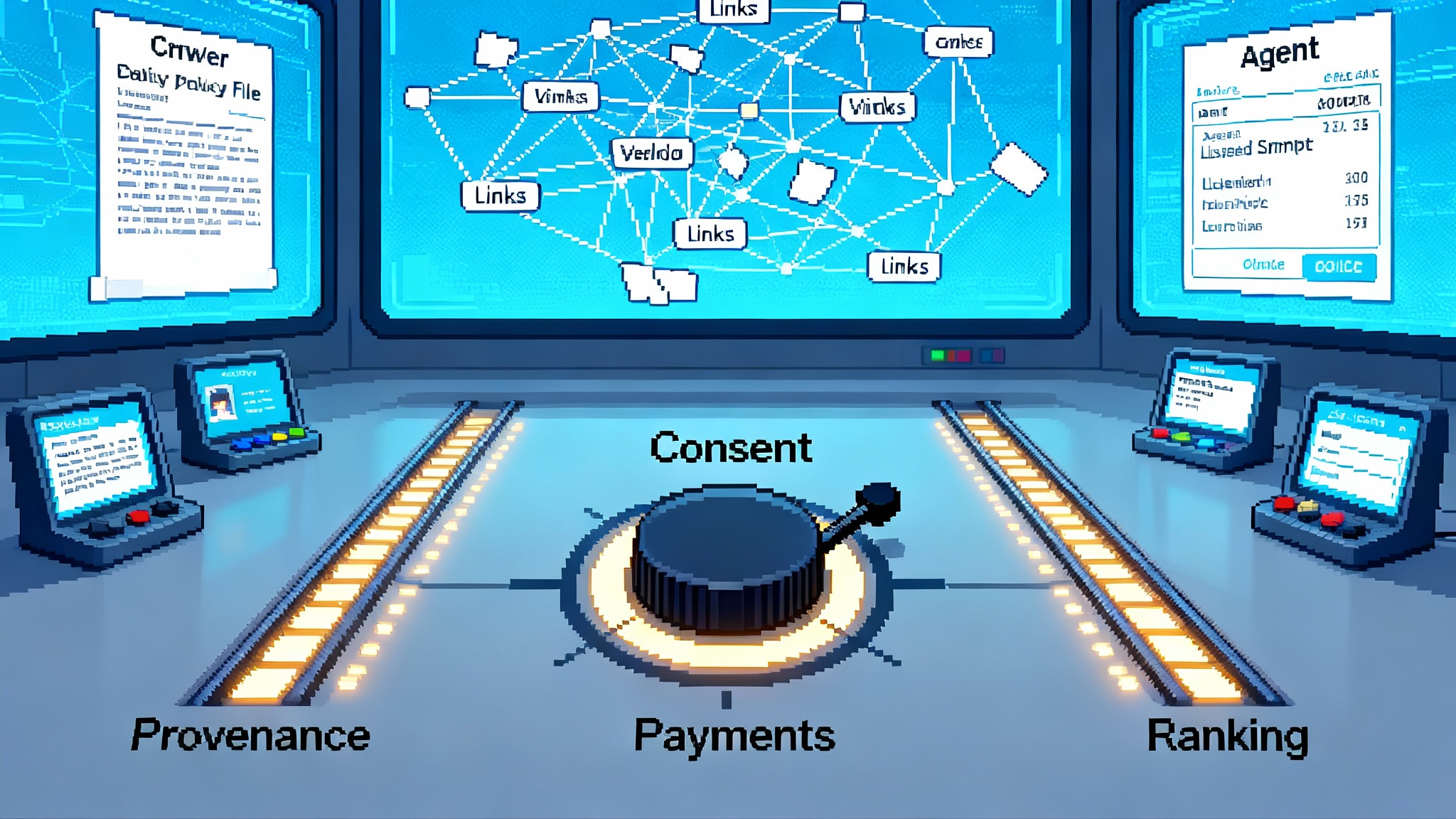

- Consent receipts: When an agent logs in to a site, record who authorized access, which scopes are in use, and when authorization expires. Store the receipt in the agent platform so it is auditable even if the site does nothing special.
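To make the consent receipt bullet concrete, here is a minimal sketch in Python that models a receipt as an immutable record with an expiry check. The class name, field names, and scope strings such as `invoices:submit` are hypothetical illustrations, not part of any Google, Gemini, or browser API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Set


@dataclass(frozen=True)
class ConsentReceipt:
    """Hypothetical record of who let an agent into a site, and on what terms."""
    agent_name: str
    site: str
    authorized_by: str
    scopes: FrozenSet[str]
    issued_at: datetime
    expires_at: datetime

    def allows(self, scope: str, now: datetime) -> bool:
        # Valid only inside the authorization window and only for granted scopes.
        return self.issued_at <= now < self.expires_at and scope in self.scopes


def issue_receipt(agent_name: str, site: str, authorized_by: str,
                  scopes: Set[str], ttl: timedelta, now: datetime) -> ConsentReceipt:
    """Create a receipt that expires `ttl` after issuance."""
    return ConsentReceipt(agent_name, site, authorized_by,
                          frozenset(scopes), now, now + ttl)


now = datetime(2025, 10, 9, 9, 0, tzinfo=timezone.utc)
receipt = issue_receipt("ap-agent", "vendor-portal.example", "j.doe@corp.example",
                        {"invoices:read", "invoices:submit"},
                        ttl=timedelta(hours=8), now=now)

print(receipt.allows("invoices:submit", now + timedelta(hours=1)))  # True
print(receipt.allows("payments:send", now + timedelta(hours=1)))    # False: scope never granted
print(receipt.allows("invoices:submit", now + timedelta(hours=9)))  # False: expired
```

Because the receipt lives in the agent platform rather than on the site, an auditor can check it later even when the site itself records nothing special.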

These guarantees can live lower in the stack, not just in websites. The browser can enforce pacing. The agent runner can enforce consent receipts. The organization can enforce identity and logging. You do not need every site to agree before the norms work in practice.
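Here is a minimal sketch of pacing enforced below the site, for example in a browser layer or agent runner, using the default ceilings from the rate and rhythm bullet: one submission per second and three concurrent tabs per site unless the site declares more. The `PolitenessPolicy` class and its methods are hypothetical; a real browser would wire this into its own request scheduling.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, Tuple


class PolitenessPolicy:
    """Hypothetical per-domain pacing enforced below the site.

    Defaults follow the guideline: at most one form submission per second and
    at most three concurrent tabs per site, unless the site declares more.
    """

    def __init__(self, min_submit_interval_s: float = 1.0, max_tabs: int = 3,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.default_limits = (min_submit_interval_s, max_tabs)
        self.clock = clock  # injectable so the policy can be tested without sleeping
        self.declared: Dict[str, Tuple[float, int]] = {}
        self.last_submit: Dict[str, float] = {}
        self.open_tabs: Dict[str, int] = defaultdict(int)

    def declare_limits(self, domain: str, min_submit_interval_s: float, max_tabs: int) -> None:
        """Record higher limits that a site advertises for itself."""
        self.declared[domain] = (min_submit_interval_s, max_tabs)

    def _limits(self, domain: str) -> Tuple[float, int]:
        return self.declared.get(domain, self.default_limits)

    def try_open_tab(self, domain: str) -> bool:
        _, max_tabs = self._limits(domain)
        if self.open_tabs[domain] >= max_tabs:
            return False
        self.open_tabs[domain] += 1
        return True

    def close_tab(self, domain: str) -> None:
        self.open_tabs[domain] = max(0, self.open_tabs[domain] - 1)

    def try_submit(self, domain: str) -> bool:
        """True if a submission may go out now; False means wait and retry."""
        interval, _ = self._limits(domain)
        now = self.clock()
        last = self.last_submit.get(domain)
        if last is not None and now - last < interval:
            return False
        self.last_submit[domain] = now
        return True


# Usage with a fake clock so the pacing is visible without real waiting.
fake_now = [0.0]
policy = PolitenessPolicy(clock=lambda: fake_now[0])
print(policy.try_submit("shop.example"))  # True
fake_now[0] = 0.5
print(policy.try_submit("shop.example"))  # False: under one second since the last one
fake_now[0] = 1.2
print(policy.try_submit("shop.example"))  # True
print([policy.try_open_tab("shop.example") for _ in range(4)])  # [True, True, True, False]
```

The limits are tracked per domain, so a slow site never throttles a fast one, and the injectable clock keeps the behavior testable.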

Default logging at the interface layer

Default logging makes some teams nervous. It should make them feel safer.

API integrations often provide structured logs for free. Screen integrations do not. That does not mean structure is out of reach. You can design for it.

A minimally useful default log for agent actions in the browser includes:

- Timestamped actions: click, type, select, upload, submit. Include a representation of the target element that blends text content, semantic role, and a selector path, hashed for privacy.

- Screen thumbnails at checkpoints: capture before a form is filled, after it is filled, and after submission. Store them at low resolution, with on-device redaction for sensitive fields like passwords and account numbers.

- Intent and outcome in natural language: what the agent intended to do and what happened. Example: Intended to file invoice 88314 for 1,120 dollars. Outcome was success. Confirmation number 44719.

- Error captures: there is rarely a stack trace at the user interface layer. Capture the visible error message, the element clicked right before the error, and the agent's plan for its next step. This speeds triage.
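A minimal sketch of one such log record, assuming a JSON-lines store. It hashes a blend of the element's visible text, semantic role, and selector path with SHA-256, so the log can show whether two actions touched the same element without storing the raw selector. The field names and the `log_action` helper are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

ACTIONS = {"click", "type", "select", "upload", "submit"}


def element_fingerprint(text: str, role: str, selector: str) -> str:
    """Blend visible text, semantic role, and selector path, then hash the result."""
    blended = "\x1f".join([text.strip().lower(), role, selector])
    return hashlib.sha256(blended.encode("utf-8")).hexdigest()[:16]


@dataclass
class ActionLogEntry:
    timestamp: str                       # ISO 8601, UTC
    action: str                          # one of ACTIONS
    target: str                          # hashed element fingerprint, not raw text
    intent: str                          # what the agent meant to do, in plain language
    outcome: Optional[str] = None        # what happened, in plain language
    visible_error: Optional[str] = None  # error text shown on screen, if any

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def log_action(action: str, text: str, role: str, selector: str, intent: str,
               outcome: Optional[str] = None, visible_error: Optional[str] = None,
               now: Optional[datetime] = None) -> ActionLogEntry:
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    ts = (now or datetime.now(timezone.utc)).isoformat()
    return ActionLogEntry(ts, action, element_fingerprint(text, role, selector),
                          intent, outcome, visible_error)


entry = log_action(
    "submit", "File invoice", "button", "form#invoice button[type=submit]",
    intent="File invoice 88314 for 1,120 dollars",
    outcome="Success. Confirmation number 44719",
    now=datetime(2025, 10, 9, 14, 30, tzinfo=timezone.utc),
)
print(entry.to_json())
```

A plain SHA-256 of a short button label can be reversed by guessing. If that matters, a keyed hash such as `hmac.new(key, data, hashlib.sha256)`, with the key held by the agent platform, is a stronger choice.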

With structure like this, you get a ledger that people can actually read. A supervisor can answer questions in minutes. Did the agent cancel the wrong shipment, or did the site reject a duplicate request because the order was already fulfilled? You do not need to reconstruct intent from server logs because the intent is recorded in plain language.

If you care about privacy, you control it with scope, redaction, and retention. Scope limits which sessions are logged. Redaction strips sensitive fields before storage. Retention windows make sure records age out unless a case is open.
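The three controls can be sketched in a few lines of Python, assuming field values arrive as a dictionary before storage. The workflow names, the sensitive field list, and the eight-digit rule for masking numbers in free text are hypothetical policy choices, not recommendations.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Dict

# Hypothetical policy values; each organization would set its own.
LOGGED_WORKFLOWS = {"accounts_payable", "claims"}                  # scope
SENSITIVE_FIELDS = {"password", "account_number", "card_number"}   # redaction
DIGIT_RUN = re.compile(r"\b\d{8,}\b")  # long numbers in free text, e.g. account numbers


def in_scope(workflow: str) -> bool:
    """Scope: only sessions for listed workflows are logged at all."""
    return workflow in LOGGED_WORKFLOWS


def redact(values: Dict[str, str]) -> Dict[str, str]:
    """Redaction: strip sensitive values before anything reaches storage."""
    return {
        name: "[REDACTED]" if name.lower() in SENSITIVE_FIELDS
        else DIGIT_RUN.sub("[REDACTED]", value)
        for name, value in values.items()
    }


def should_purge(created_at: datetime, retention: timedelta,
                 case_open: bool, now: datetime) -> bool:
    """Retention: records age out after the window unless a case is open."""
    return not case_open and now - created_at > retention


print(redact({"vendor": "Acme", "account_number": "12345678",
              "memo": "ref 9876543210"}))
# {'vendor': 'Acme', 'account_number': '[REDACTED]', 'memo': 'ref [REDACTED]'}
```

Redaction runs before storage, so nothing sensitive ever needs to be deleted later; retention then only has to age out what was already safe to keep.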

For a deeper treatment of why logs themselves can be a teaching tool for agents, see our take on post incident logs teach AI.

What actually changed in October 2025

Agents have manipulated websites for years in demos and in production. The shift in October was formalization. Google created an official, supported path for building agents that operate on screens with mainstream tooling and cloud support, not research code or a fragile plug-in. Then it wrapped those capabilities in an enterprise offering that organizations can buy, govern, and support. One piece teaches the agent to drive. The other issues the license, sets speed limits, and installs the dashcam.

Together they shift integration power from closed interfaces to public screens. If a site is usable by a person, it is more likely in scope for a well-designed agent. That is a meaningful redistribution of capability.

From RPA to generalist interface coworkers

Robotic process automation automates repetitive tasks by mimicking human actions. It has extracted value from legacy systems and brittle workflows. Its weaknesses are equally well known. Scripts break when a page changes. Branching logic is painful to maintain. Exceptions bounce the entire process back to humans.

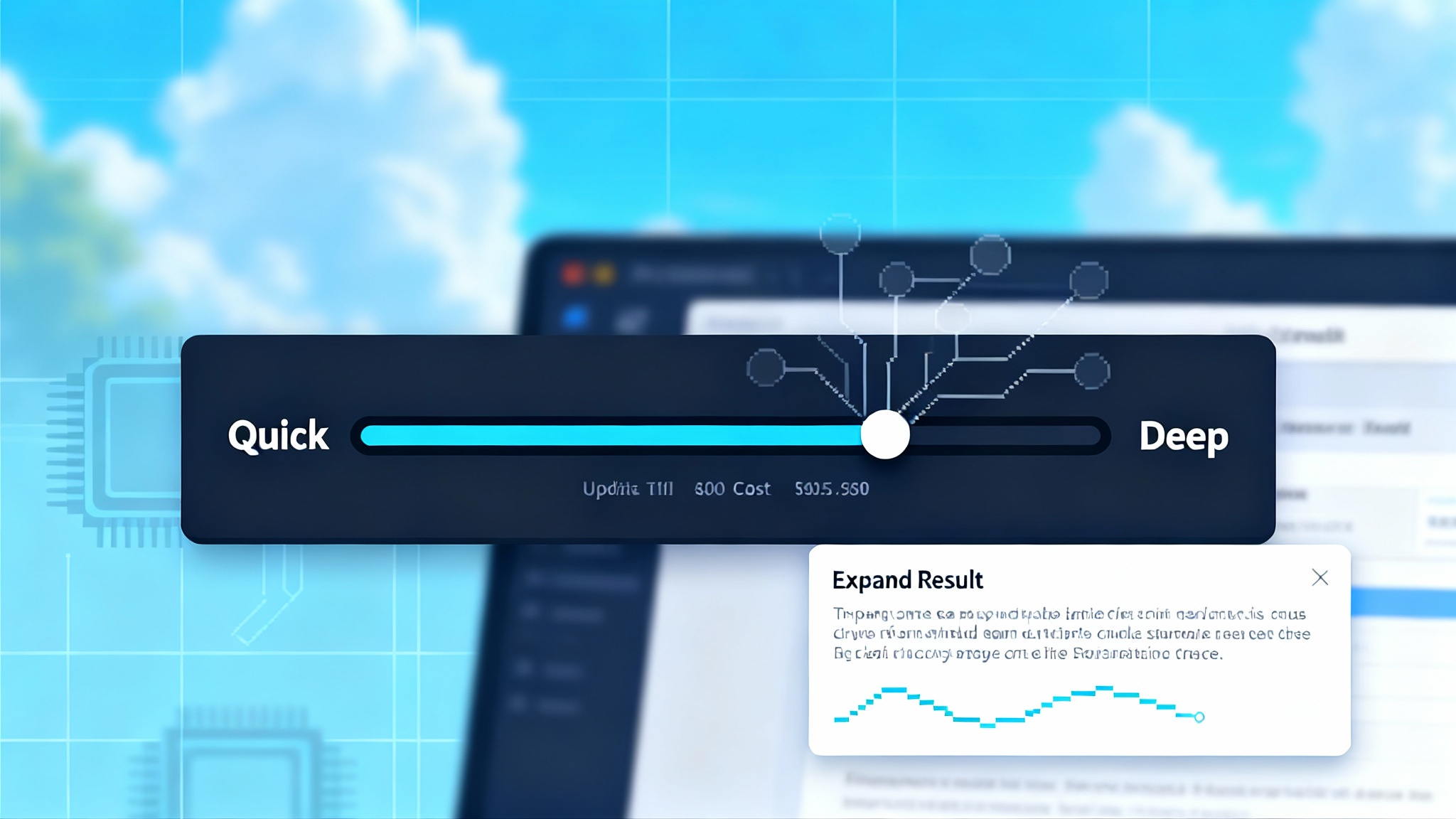

Generalist interface coworkers are different. They can read and infer. Give one a goal like "reconcile this purchase order with the supplier invoice," and it can open the right systems, search for the right number, export a copy, compare line items, ask a clarifying question, and file the result.

Expect three labor shifts.

- From doers to directors: People will specify goals, constraints, and checkpoints, then supervise agents during execution. The skill shifts toward orchestration and judgment.

- Exception handling as frontline work: Agents will funnel edge cases to specialists who can decide and teach the agent what to do next time. It looks like customer support quality assurance, but for internal workflows.

- Nontechnical teams gain leverage: With prompt patterns and reusable playbooks, a finance analyst can maintain steps for vendor onboarding or card reconciliation without writing code, so long as goals and constraints are clear.

If you run operations, define three roles right now, even part time.

- Agent supervisor: owns outcomes for a set of processes, reviews logs daily, approves risky actions, and tunes playbooks.

- User interface wrangler: maintains selectors, visual anchors, and page hints for target applications so agents have stable signposts.

- Safety steward: sets rate limits, approves consent scopes, and audits logs for privacy and fairness.

These titles map to concrete capabilities. They determine whether this technology makes teams faster or creates a mess.

Design implications for teams that build software

If you ship a browser based product, assume agents will use it. Help them do it safely.

- Publish an automation etiquette file: Add a small machine-readable manifest at a well-known path that declares rate limits, preferred selectors for critical actions, and rules for off-hours traffic. Think of it as robots.txt for actions rather than crawling. Keep it human-readable too.

- Stabilize semantic anchors: Give clear labels and roles to critical buttons and fields. Keep anchors stable across releases. Avoid auto-generated identifiers on pay, submit, and delete controls.

- Create an agent sandbox account type: Let enterprises provision agent accounts with explicit scopes and short-lived tokens. Treat these accounts as first-class objects in the admin console with clear audit trails.

- Preview irreversible actions: Before destructive changes, render a precommit summary that is easy to capture in logs so supervisors can review it later.

These steps help humans and agents. Accessibility improves when labels are clear. Reliability improves when identifiers are stable. Support costs drop when questions can be answered from a standard log. For the policy frame behind permissions and approvals, our view in the constitution of agentic AI is a useful companion.
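No standard for an automation etiquette file exists today, so this is only a sketch under assumptions: the path `/.well-known/agent-etiquette.json`, every key name, and the `parse_etiquette` helper are hypothetical. It shows how an agent runner might read such a file, fall back to conservative defaults (one submission per second, three tabs) when a key is missing, and tighten limits during declared off hours.

```python
import json
from dataclasses import dataclass, field
from datetime import time
from typing import Dict, Optional, Tuple

# Hypothetical well-known location; no such path is registered today.
ETIQUETTE_PATH = "/.well-known/agent-etiquette.json"

EXAMPLE_MANIFEST = """
{
  "rate_limits": {"submissions_per_second": 2, "max_concurrent_tabs": 5},
  "preferred_selectors": {
    "pay": "[data-action='pay']",
    "submit": "[data-action='submit']",
    "delete": "[data-action='delete']"
  },
  "off_hours": {"start": "22:00", "end": "06:00", "max_concurrent_tabs": 1}
}
"""


def etiquette_url(site: str) -> str:
    return f"https://{site}{ETIQUETTE_PATH}"


def parse_hhmm(text: str) -> time:
    hours, minutes = text.split(":")
    return time(int(hours), int(minutes))


def in_window(t: time, start: time, end: time) -> bool:
    if start <= end:
        return start <= t < end
    return t >= start or t < end  # window wraps past midnight


@dataclass
class Etiquette:
    submissions_per_second: float = 1.0  # conservative defaults when a site says nothing
    max_concurrent_tabs: int = 3
    preferred_selectors: Dict[str, str] = field(default_factory=dict)
    off_hours: Optional[Tuple[time, time]] = None
    off_hours_max_tabs: int = 1

    def tab_limit_at(self, t: time) -> int:
        if self.off_hours and in_window(t, *self.off_hours):
            return min(self.max_concurrent_tabs, self.off_hours_max_tabs)
        return self.max_concurrent_tabs


def parse_etiquette(raw: str) -> Etiquette:
    data = json.loads(raw)
    limits = data.get("rate_limits", {})
    off = data.get("off_hours")
    return Etiquette(
        submissions_per_second=float(limits.get("submissions_per_second", 1.0)),
        max_concurrent_tabs=int(limits.get("max_concurrent_tabs", 3)),
        preferred_selectors=dict(data.get("preferred_selectors", {})),
        off_hours=(parse_hhmm(off["start"]), parse_hhmm(off["end"])) if off else None,
        off_hours_max_tabs=int(off.get("max_concurrent_tabs", 1)) if off else 1,
    )


rules = parse_etiquette(EXAMPLE_MANIFEST)
print(etiquette_url("shop.example"))    # https://shop.example/.well-known/agent-etiquette.json
print(rules.tab_limit_at(time(14, 0)))   # 5
print(rules.tab_limit_at(time(23, 30)))  # 1
print(rules.preferred_selectors["pay"])  # [data-action='pay']
```

Taking the minimum during off hours means the off-hours rule can only tighten the daytime limit, never loosen it.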

What browsers and agent platforms should do next

If screens are the new commons, lower layers should carry more of the burden.

- Provide a standard identity surface: Reserve a small, consistent space in the window chrome to declare who is driving. This avoids a mess of overlays and reduces spoofing.

- Offer built in consent receipts: Expose a simple panel that lists the sites an agent is using in this session, who authorized it, and the scopes. This makes spot checks easy for humans.

- Bake in politeness defaults: Throttle outbound requests and submissions per domain, with a way for sites to advertise allowed bursts. Everyone benefits during peak hours.

- Support human handover: Provide a universal key or button that pauses the agent and returns control instantly without breaking state, plus a clear resume gesture. Shared control must feel safe.
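A minimal sketch of human handover, combined with the occupancy rule from the guideline that an agent waits while a person is typing. The `HandoverControl` class and `run_plan` loop are hypothetical. The key design choice is that pausing only blocks the next action: the plan position goes back to the caller, so resuming continues from the same step instead of restarting.

```python
import threading
from typing import Callable, List, Optional, Sequence


class HandoverControl:
    """Shared control between a person and an agent (hypothetical sketch)."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._paused = False
        self._human_typing = False

    def pause(self) -> None:
        with self._cond:
            self._paused = True

    def resume(self) -> None:
        with self._cond:
            self._paused = False
            self._cond.notify_all()

    def set_human_typing(self, typing: bool) -> None:
        # Occupancy: while a person is typing, the agent may observe but not act.
        with self._cond:
            self._human_typing = typing
            self._cond.notify_all()

    def _allowed(self) -> bool:
        return not self._paused and not self._human_typing

    def wait_until_allowed(self, timeout: Optional[float] = None) -> bool:
        """Block until the agent may act; False if the timeout expires first."""
        with self._cond:
            return self._cond.wait_for(self._allowed, timeout=timeout)


def run_plan(steps: Sequence[str], control: HandoverControl,
             act: Callable[[str], None], start: int = 0,
             timeout: float = 0.1) -> int:
    """Run steps in order; return the index of the first step not yet done."""
    i = start
    while i < len(steps):
        if not control.wait_until_allowed(timeout):
            return i  # paused or occupied: keep our place and hand back control
        act(steps[i])
        i += 1
    return i


plan = ["open form", "fill amount", "submit"]
control = HandoverControl()
done: List[str] = []
control.pause()
position = run_plan(plan, control, done.append)
print(position, done)  # 0 []
control.resume()
position = run_plan(plan, control, done.append, start=position)
print(position, done)  # 3 ['open form', 'fill amount', 'submit']
```

A pause that arrives while an action is already running takes effect before the next step. A production runner would also recheck immediately before any irreversible action.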

A governance model that fits the interface era

Regulators and auditors have struggled to follow automation that moves through closed, server side pipes. The interface era is easier to govern because it is visible.

A practical oversight model can be simple and effective.

- Require default logging at the interface: For regulated tasks, log agent sessions at the user interface layer with redaction for sensitive fields.

- Define retention windows by risk: For example, keep high-risk action logs for one year and low-risk logs for 90 days.

- Mandate precommit previews and approvals: Require supervisor approval for irreversible actions over a threshold value.

- Encourage etiquette files: Reward compliant vendors with reduced audit frequency or faster certifications.
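A minimal sketch of a precommit preview with an approval gate, assuming the threshold is a configured dollar value. The `PlannedAction` type, the `execute` helper, and the callback signatures are hypothetical. The preview reuses the confirmation card example from the guideline, and the card is logged whether or not approval is needed, so supervisors can review it later.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, List


@dataclass(frozen=True)
class PlannedAction:
    verb: str            # e.g. "transfer"
    amount: Decimal      # value at stake, in dollars
    details: str         # plain-language specifics
    irreversible: bool


def preview_card(a: PlannedAction) -> str:
    """Short, plain-language confirmation card for a person or a log."""
    return f"I am about to {a.verb} {a.amount:,.2f} dollars {a.details}."


def requires_approval(a: PlannedAction, threshold: Decimal) -> bool:
    # Signoff only where it changes risk: irreversible and above the threshold.
    return a.irreversible and a.amount > threshold


def execute(a: PlannedAction, threshold: Decimal,
            approve: Callable[[str], bool],
            perform: Callable[[PlannedAction], None],
            log: List[str]) -> bool:
    card = preview_card(a)
    log.append(card)  # the preview is stored even when no approval is needed
    if requires_approval(a, threshold) and not approve(card):
        log.append("declined by supervisor")
        return False
    perform(a)
    log.append("performed")
    return True


transfer = PlannedAction("transfer", Decimal("4000"),
                         "from account A to account B using the Q3 vendors template",
                         irreversible=True)
log: List[str] = []
print(execute(transfer, Decimal("1000"), approve=lambda card: False,
              perform=lambda a: None, log=log))  # False
print(log[0])
```

Using `Decimal` rather than floats keeps the threshold comparison exact for currency amounts.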

The goal is not a new compliance maze. It is a handful of clear norms that prevent after-the-fact confusion.

Why interface first can speed responsible capability

Putting more action at the surface sounds risky. Done correctly, it increases safety.

- Debuggability: When an agent fails, a person can see what it saw and what it tried. You do not need privileged backend traces to understand what happened.

- Social legibility: Managers and customers can watch the same screen a machine uses. Behavior is less abstract, which builds trust.

- Aligned incentives: Sites have reasons to declare etiquette to protect capacity and reputation. Agents have reasons to follow it to avoid throttling and bans. Enterprises have reasons to enforce it to speed audits.

All three forces push toward clarity at the surface. That is how complex systems get safer while still getting faster.

How to adopt this responsibly in your company

You do not need a moonshot. Start small and visible, then grow with intention.

- Pick a browser-native task with medium risk: Updating supplier addresses, creating calendar holds with agenda templates, or filing warranty claims under a set dollar limit are good candidates.

- Write the playbook in plain language: Specify starting conditions, goals, allowed actions, constraints, and stop rules. Produce a one-page version that an agent and a human can both follow.

- Turn on default logging from day one: Capture the action list, the thumbnails, and the intent and outcome messages. Review every run for a week.

- Add human checkpoints where they change outcomes: Require approval for payments over a threshold or for irreversible deletions. Do not add signoffs that do not affect risk.

- Expand scope deliberately: When stable, raise the dollar limit, add one more site, or allow after-hours runs. Change one variable at a time.
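One way to enforce "change one variable at a time" is to keep the playbook as versioned data and refuse any expansion that alters more than one field. A minimal sketch follows; the `Playbook` fields and the `expand` and `permits` helpers are hypothetical, and the warranty example mirrors one of the candidate tasks in the list.

```python
from dataclasses import dataclass, fields, replace
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Playbook:
    goal: str
    allowed_sites: FrozenSet[str]
    allowed_actions: FrozenSet[str]
    dollar_limit: int
    after_hours: bool = False
    stop_rules: Tuple[str, ...] = ()


def changed_fields(old: Playbook, new: Playbook) -> List[str]:
    return [f.name for f in fields(Playbook) if getattr(old, f.name) != getattr(new, f.name)]


def expand(old: Playbook, **changes) -> Playbook:
    """Return a new playbook version, allowing at most one field to change."""
    new = replace(old, **changes)
    diff = changed_fields(old, new)
    if len(diff) > 1:
        raise ValueError(f"change one variable at a time, got: {diff}")
    return new


def permits(pb: Playbook, site: str, action: str, amount: int) -> bool:
    """Constraint check the runner applies before every action."""
    return (site in pb.allowed_sites
            and action in pb.allowed_actions
            and amount <= pb.dollar_limit)


v1 = Playbook(
    goal="File warranty claims for defective units",
    allowed_sites=frozenset({"warranty.vendor.example"}),
    allowed_actions=frozenset({"click", "type", "upload", "submit"}),
    dollar_limit=500,
    stop_rules=("Stop and escalate if the portal shows an error banner",),
)
v2 = expand(v1, dollar_limit=750)  # one variable: accepted
print(permits(v2, "warranty.vendor.example", "submit", 600))  # True
try:
    expand(v2, after_hours=True, dollar_limit=1000)  # two variables: rejected
except ValueError as err:
    print(err)
```

Because each version is immutable, the history of playbooks doubles as a record of exactly which variable changed when a run started behaving differently.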

Treat adoption like onboarding a new teammate. Watch closely, give clear feedback, and widen the remit as confidence grows. If you are planning for marketplaces, read our view on the agent app store moment to see how templates and interface-native norms will meet in practice.

Three quick vignettes

- Claims processing: An insurer uses an agent to prefill claims from scanned documents, then routes edge cases to a specialist. The agent operates through the vendor portal with visible identity and standard rate limits. Logs show which fields the agent filled and which ones a human corrected. Reviewers use those logs to teach the agent new edge patterns.

- Research sprints: A product manager defines a research brief, and the agent opens competitor docs, extracts pricing pages, fills a comparison table, and writes a Markdown summary. The agent throttles requests per site and pauses whenever the manager starts typing notes in the same tab. The final packet includes a screenshot ledger so findings are easy to audit.

- Accounts payable: A finance team allows the agent to approve invoices under 1,000 dollars. Every action is logged, and the precommit preview shows vendor name, amount, and purchase order link. If something looks off, a supervisor hits pause, fixes the data, and resumes. Exceptions become updates to the playbook.

Do not wait for an agent app store

Marketplaces for agent templates will be useful, but the main event is already in your browser. Screens are now the meeting place between people and machines, and October’s releases turned that meeting place into a first-class platform.

If you build products, publish your etiquette file and stabilize your anchors. If you run operations, appoint a supervisor, a wrangler, and a steward, and turn on default logging. If you build browsers and agent platforms, ship identity surfaces, consent receipts, politeness defaults, and instant handover.

This is the new social contract of software. We can grow autonomy and accountability together if we teach our agents the interface and we write our norms into the streets they drive. The browser is ready. Now it is our turn to make it a good city.