Mind Meets Matter: How Custom AI Chips Rewrite Cognition

OpenAI and Broadcom are co-designing custom AI chips, a shift that binds reasoning patterns to interconnects, memory, and packaging. As models learn the dialect of silicon, the frontier moves from raw scale to smarter choreography.

Breaking news and a bigger story

On October 13, 2025, OpenAI and Broadcom announced a multiyear plan to co-develop and deploy custom artificial intelligence processors, with a capacity target of 10 gigawatts and a first phase slated for the second half of 2026. The systems emphasize Ethernet fabrics over specialty interconnects, and they will power both OpenAI and partner data centers. The headline sounds operational. The implications are cognitive.

The current wave of custom chips is not only about cheaper tokens or more energy efficient data centers. It is about the mind of a model adapting to the matter beneath it. As labs co-design silicon, boards, memory stacks, and network topologies with training objectives and inference patterns, the substrate begins to shape the character of machine intelligence. The architecture ceases to be a neutral stage. It becomes part of the script.

From neutral accelerators to cognitive instruction sets

Most developers still picture accelerators as beefy calculators. You hand them tensor operations and they crunch away. But the new custom chips are tuned for specific sequences of thinking. Interconnects favor certain communication patterns. Memory hierarchies reward some forms of attention and punish others. Even the layout of on-chip scratchpads, cache, and high bandwidth memory recasts what a model can do quickly, cheaply, and at scale.

Call these emergent preferences cognitive instruction sets. Not instruction sets in the classic sense of opcodes, but recurring reasoning moves that hardware encourages.

- A fabric optimized for all-to-all traffic invites mixture-of-experts gating and rapid expert exchange.

- A fabric optimized for localized neighborhoods favors routing trees and sparse attention over global softmax.

- A memory hierarchy that can pin large key value caches next to compute encourages long context summarization and retrieval heavy dialogue.

- A hierarchy that prefers streaming bursts encourages chunked planners and staged lookups.
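The first bullet is easy to make concrete. Below is a minimal sketch, assuming top-k routing on raw router scores and experts laid out contiguously across devices (both illustrative assumptions; `all_to_all_traffic` is a hypothetical helper, not a library API), of how mixture-of-experts gating turns into an all-to-all traffic matrix that the fabric has to carry at every expert layer.

```python
import numpy as np


def all_to_all_traffic(logits: np.ndarray, k: int, experts_per_device: int,
                       token_device: np.ndarray) -> np.ndarray:
    """Count token copies each device must send to each other device.

    logits:       [num_tokens, num_experts] router scores (hypothetical input).
    token_device: [num_tokens] device that currently holds each token.
    Returns traffic[src, dst]; off-diagonal entries cross the fabric.
    """
    _, num_experts = logits.shape
    num_devices = num_experts // experts_per_device
    # The k highest-scoring experts per token (order inside the top k is irrelevant).
    top_k = np.argpartition(-logits, k - 1, axis=1)[:, :k]
    # Assumed layout: experts are placed contiguously, experts_per_device per device.
    dst = (top_k // experts_per_device).ravel()
    src = np.repeat(token_device, k)
    traffic = np.zeros((num_devices, num_devices), dtype=int)
    np.add.at(traffic, (src, dst), 1)  # unbuffered add handles repeated (src, dst) pairs
    return traffic


logits = np.array([[4, 3, 0, 0],
                   [0, 0, 4, 3],
                   [4, 0, 3, 0],
                   [0, 4, 0, 3]], dtype=float)
traffic = all_to_all_traffic(logits, k=2, experts_per_device=2,
                             token_device=np.array([0, 0, 1, 1]))
print(traffic)  # [[2, 2], [2, 2]]: 4 of the 8 token copies cross devices
```

With D devices and roughly uniform routing, about (D-1)/D of token copies leave their home device, which is why this pattern leans so heavily on all-to-all bandwidth.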

Over time, models learn to think in the dialect that silicon makes easy.

The physics underneath the thought

Three forces make this more than rhetoric.

- Interconnects decide who talks, and how often. Whether a cluster relies on Ethernet with congestion control, vendor specific links, or custom optical fabrics, the network is a policy. If collective operations are cheap and predictable, you get different training regimes than if they are expensive and jittery. Sharded optimizers, expert dispatch, and speculative decoding all live or die on collective bandwidth and latency.

- Memory decides what a model can remember, and where. High bandwidth memory stacks, in-package SRAM, and host memory with compression form a ladder of costs. If you can park large key value caches or retrieval indexes near compute, you can keep context around and reason over it. If you cannot, you must discard, summarize, or fetch remotely. That choice changes outputs.

- Packaging decides how big a thought can be. Advanced packaging joins many smaller dies into a single logical device. Chiplets stitched by interconnect schemes such as Universal Chiplet Interconnect Express let designers mix and match compute and memory. This is not a footnote. It sets the ceiling for batch sizes, expert counts, and the size of sparse matrices that can live on a single logical accelerator.
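The memory bullet can be put in numbers. Here is a back-of-envelope sketch, assuming a decoder-only transformer with grouped-query attention and 16-bit cache entries; the model dimensions and the 48 GiB free-memory figure are hypothetical, not any particular lab's model or chip.

```python
GiB = 2**30


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Size of the key value cache for a decoder-only transformer."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch * bytes_per_elem


def max_context_tokens(free_bytes: int, num_layers: int, num_kv_heads: int,
                       head_dim: int, bytes_per_elem: int = 2) -> int:
    """How many cached tokens fit in the memory left after weights and activations."""
    per_token = kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len=1,
                               bytes_per_elem=bytes_per_elem)
    return free_bytes // per_token


# Hypothetical model: 32 layers, 8 KV heads of width 128, 16-bit cache entries.
cache = kv_cache_bytes(32, 8, 128, seq_len=131_072)
print(cache / GiB)  # 16.0 GiB for one 128k-token session
print(max_context_tokens(48 * GiB, 32, 8, 128))  # 393216 tokens if 48 GiB are free
```

Multiply by the number of concurrent sessions and it becomes clear why the tier a cache lives in, not just its size, decides whether a model keeps context or has to summarize it away.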

These are not hypothetical constraints. Google’s sixth generation Tensor Processing Unit, Trillium, doubles high bandwidth memory capacity and bandwidth, as well as interchip interconnect bandwidth, over the previous generation, and it includes a third generation SparseCore that speeds up very large embeddings, which in turn changes what is practical for ranking and retrieval heavy workloads. With Trillium, Google describes a scale out path that ties model graphs to a building scale network, which makes communication patterns a first class design variable.

Why the OpenAI Broadcom move matters

The OpenAI and Broadcom plan is public proof that top labs will not just buy racks; they will shape them. Reading between the lines, the collaboration signals three bets.

- Bet one: inference first designs will relieve pressure on scarce general purpose graphics processors. Training will still need peak flexibility, but many real world sessions consist of retrieval, bursty attention, and short planning steps. Those patterns deserve their own datapaths and memory layouts.

- Bet two: standard Ethernet is good enough when you control the full stack. If you can bring scheduling, congestion control, and operator fusion into alignment with the network fabric, you can match or beat proprietary interconnects for many workloads. That is not a universal truth but a workload specific choice, which is the point.

- Bet three: models are now a systems problem. You can no longer talk about models without talking about placement, collectives, and hot data. The mental model shifts from a function call to an itinerary through silicon.

A slightly accelerationist case for co-design

Size still helps, but bigger alone is running into diminishing returns when the bottleneck is coordination. The next leaps will come from reorganizing how models use time and space.

- Sequence as a resource. Many large models underuse the network in long stretches and then spike traffic at layer boundaries. Co-designed kernels that keep communication steady and predictable can unlock higher utilization. Think of it like metering green lights for tensor traffic across a city.

- Memory as habitat, not a bucket. When the memory hierarchy becomes programmable, you can treat the act of remembering as a learned behavior. Retrieval is not a single operator; it is a routine that spans on-chip caches, high bandwidth memory, and local storage. Co-design turns that routine into a cheap reflex instead of an expensive excursion.

- Reasoning as choreography. Mixture-of-experts, speculative decoding, and planner executor loops all depend on how fast the cluster can agree. If the interconnect can commit small consensus decisions cheaply, you can afford more branching and backtracking. If not, you prune aggressively. The chip teaches the model how to dance.
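The point about steady versus spiky communication can be illustrated with a toy two-stage pipeline model. It is a sketch under stated assumptions: each layer's collective can overlap with the next layer's compute (as gradient all-reduces can during a backward pass), only one collective is in flight at a time, and the millisecond figures are made up.

```python
def step_time(compute_ms: list[float], comm_ms: list[float], overlap: bool) -> float:
    """Time for one pass over the layers under a toy two-stage pipeline model.

    compute_ms[i] and comm_ms[i] are per-layer compute and communication times.
    With overlap, layer i's collective runs while layer i+1 computes, but only
    one collective is in flight at a time.
    """
    if not overlap:
        return sum(compute_ms) + sum(comm_ms)
    compute_done = 0.0
    comm_done = 0.0
    for c, m in zip(compute_ms, comm_ms):
        compute_done += c
        # A collective starts once its layer has computed and the link is free.
        comm_done = max(compute_done, comm_done) + m
    return comm_done


compute = [4.0, 4.0, 4.0]
steady = [4.0, 4.0, 4.0]   # same total traffic, spread across layers
spiky = [0.0, 0.0, 12.0]   # same total traffic, all at the final boundary
print(step_time(compute, steady, overlap=True))   # 16.0
print(step_time(compute, spiky, overlap=True))    # 24.0
print(step_time(compute, steady, overlap=False))  # 24.0
```

Both schedules move the same total traffic, but the steady one finishes in 16 ms instead of 24 ms, raising compute utilization from 50% to 75% in this toy model.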

The argument is not that hardware determines thought. It is that hardware shapes the cost landscape, and models evolve toward the lowlands. Co-design makes the lowlands the places we want minds to linger.

Openness will look different

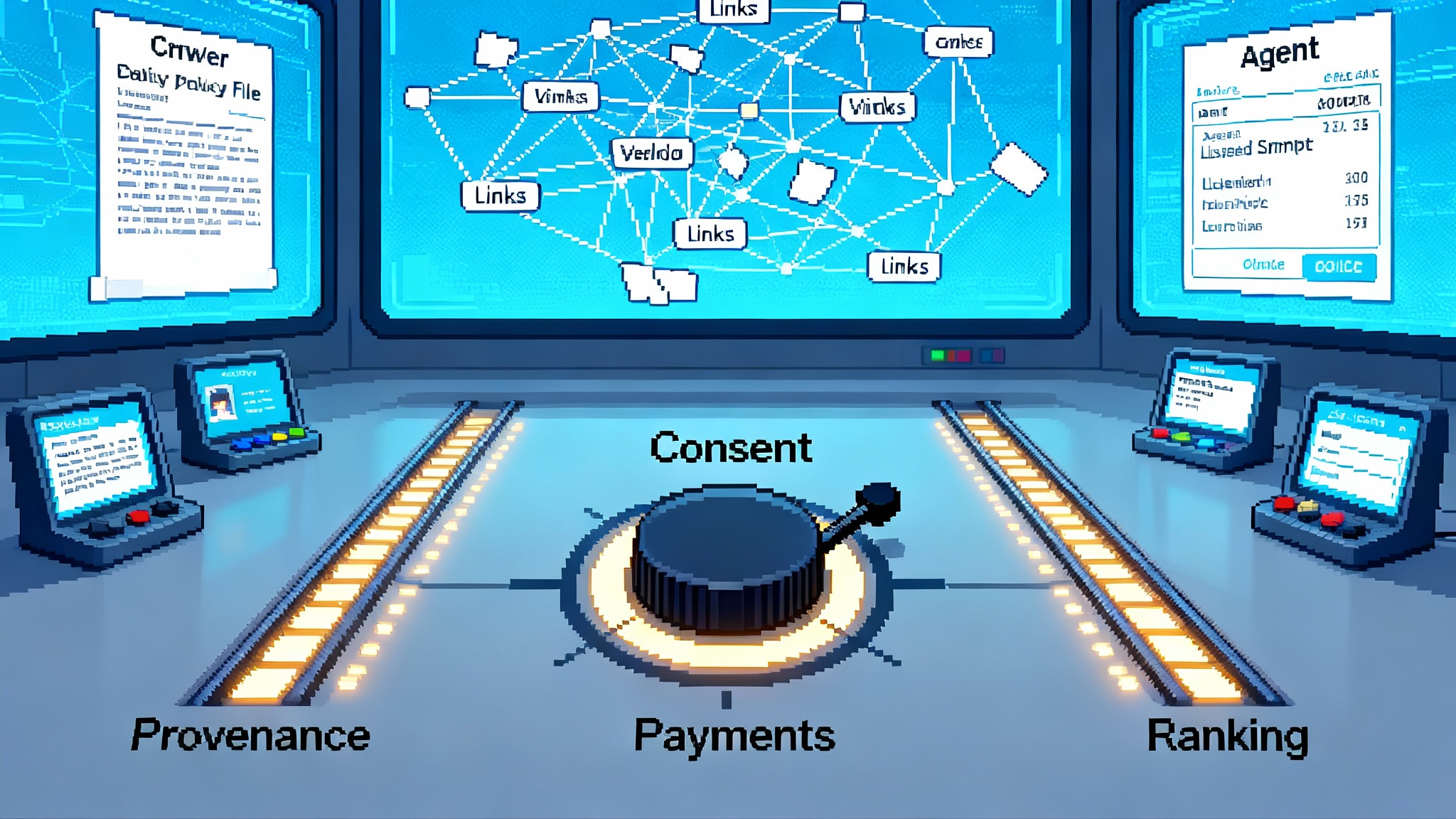

Open research usually means open weights, open code, or open papers. As hardware co-design becomes the frontier, openness will include three new artifacts.

- Hardware intent. A short, precise spec that describes the intended cognitive instruction set. It should include the preferred communication patterns, caching strategies, and operator fusions. Think of it as the model’s native habitat description. This dovetails with governance ideas we explored in the constitution of agentic AI.

- Placement and scheduling traces. Reproducibility will depend on how jobs were placed in the cluster and how collectives were scheduled. Sharing anonymized traces will let peers validate speedup claims without disclosing every vendor detail.

- Memory maps. Researchers should disclose how key value caches, retrieval stores, and intermediate activations were laid out across the hierarchy. Without that, benchmarks are not portable and claims about context handling are not comparable.
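As one possible shape for these artifacts, here is a sketch of a machine-readable hardware intent record that also carries a memory map. The schema, field names, and example values are hypothetical; no such standard exists today, and a real format would need community agreement.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class HardwareIntent:
    """Hypothetical schema for a published hardware intent and memory map.

    Field names are illustrative; no standard format like this exists yet.
    """
    model_name: str
    preferred_collectives: list[str]      # e.g. ["all_to_all", "reduce_scatter"]
    max_collective_group: int             # largest group size the model assumes
    fused_ops: list[str] = field(default_factory=list)
    # Memory map: which tier each class of tensor was placed in.
    memory_map: dict[str, str] = field(default_factory=dict)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


intent = HardwareIntent(
    model_name="example-moe",  # hypothetical model
    preferred_collectives=["all_to_all"],
    max_collective_group=64,
    fused_ops=["attention+rope", "gate+dispatch"],
    memory_map={"kv_cache": "hbm", "retrieval_index": "host", "activations": "sram"},
)
print(intent.to_json())
```

Serializing to plain JSON with sorted keys keeps the record diffable and vendor neutral, which is the portability property the neutral formats discussed here would need.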

Expect a cultural split. Some labs will publish most or all of this metadata and win with reproducibility and community tools. Others will hold it back and compete with end to end performance. Both strategies are rational. The opportunity for the ecosystem is to build neutral formats so that portability does not require the same vendor every time.

Competition beyond Nvidia takes shape

Nvidia remains the center of gravity, but the competitive map is getting richer as new players emphasize distinct cognitive instruction sets.

- Broadcom and lab partners will tune for inference heavy workloads and Ethernet centric scaling. That points toward fast expert dispatch, efficient memory bound tasks, and predictable latency across commodity networks.

- Google’s TPU line leans into compiler driven specialization, tight collective operations, and specialized engines for embeddings. That makes retrieval centric and recommendation workloads natural citizens.

- Microsoft’s Maia program focuses on cloud native integration and algorithmic co-design across cooling, power, and compilers. Expect performance to emerge from systems thinking, not only raw flops.

- Amazon’s Trainium family optimizes price performance and vertical integration with its cloud stack. That rewards developers who design for Neuron compiler idioms and NeuronLink communication.

- Meta’s MTIA focuses on ranking and recommendation paths at web scale, a reminder that not every breakthrough comes from pure language models.

- Startups in reconfigurable fabrics and optical interconnects are pushing in-network compute and ultra low latency collectives. If they hit, reasoning patterns that look like database query plans will speed up materially.

This is not a horse race for a single crown. It is a garden with different soil types. Seeds grow differently depending on where you plant them.

The developer mental model is changing

For years, the safe abstraction was an API that hid hardware behind a familiar list of operations. That is no longer sufficient. To get the most out of the new chips, developers should think like systems choreographers.

- Target reasoning patterns, not just operators. Instead of asking whether a given accelerator supports FlashAttention, ask what it wants for long context reading. Does it prefer chunking with local recompute, or wide caches with sparse refreshes? Match your chain of thought to the chip’s memory grammar.

- Design for the fabric. If your interconnect handles many small collectives well, choose expert routing and speculative decoding schemes that fragment work into small agreements. If it prefers large, scheduled collectives, batch your decisions and fuse them into phases.

- Treat caches as first class citizens. Persist per session memory near compute when you can. If the hardware supports fast on package key value stores, restructure prompts to maximize cache hits and minimize remote retrievals.

- Co-design with the compiler. Modern compilers like XLA and vendor toolchains are not afterthoughts. They decide whether your elegant operator graph becomes a series of cache thrashes or a smooth pipeline. Profile for memory stalls and collective wait time, not only floating point rates.

- Align data with packaging. Chiplet boundaries often mirror bandwidth cliffs. Align tensor partitions and expert placements with those edges. You will gain more from that alignment than from chasing theoretical flops.
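The advice on small versus large collectives follows from the classic latency-bandwidth (alpha-beta) cost model. Below is a sketch for a ring all-reduce; the 10 microsecond latency, 50 GB/s bandwidth, and 8-rank group are hypothetical placeholders, not measurements of any real fabric.

```python
def ring_allreduce_s(n_bytes: float, ranks: int, alpha_s: float, beta_bps: float) -> float:
    """Alpha-beta estimate for a ring all-reduce of n_bytes across `ranks` devices.

    A ring all-reduce takes 2*(ranks-1) steps; each step pays the per-message
    latency alpha_s and moves n_bytes/ranks bytes at bandwidth beta_bps.
    """
    steps = 2 * (ranks - 1)
    return steps * alpha_s + steps * (n_bytes / ranks) / beta_bps


def split_vs_fused(total_bytes: float, pieces: int, ranks: int,
                   alpha_s: float, beta_bps: float) -> tuple[float, float]:
    """Cost of `pieces` separate all-reduces versus one fused all-reduce."""
    split = pieces * ring_allreduce_s(total_bytes / pieces, ranks, alpha_s, beta_bps)
    fused = ring_allreduce_s(total_bytes, ranks, alpha_s, beta_bps)
    return split, fused


# Hypothetical fabric: 10 microseconds per message, 50 GB/s per link, 8 ranks.
split, fused = split_vs_fused(100e6, pieces=100, ranks=8, alpha_s=10e-6, beta_bps=50e9)
# Prints "100 small collectives: 17.50 ms, one fused: 3.64 ms"
print(f"100 small collectives: {split * 1e3:.2f} ms, one fused: {fused * 1e3:.2f} ms")
```

With these numbers, splitting 100 MB into 100 collectives spends most of its time paying per-message latency. On a fabric where alpha is much smaller, the gap shrinks and fine-grained agreements become affordable; where alpha is large, batching decisions into phases is the better fit.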

These practices tie directly to economics. If you want to understand how latency budgets translate into dollars, revisit our note on dialing up thinking time, which explains why paying for more deliberation can be cheaper when the stack is aligned.

What to build next

If you run a lab, schedule co-design sprints that include model architects, compiler engineers, and network operators. Give them a single end to end metric, such as tokens per joule at a fixed latency percentile for a target workload. Put the metric on the wall. Ship a new kernel or a new routing policy every two weeks. Treat hardware feedback as gradient information, not as an after the fact constraint.
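A sketch of how such a metric might be computed from request logs, assuming per-request energy can be attributed (in practice it is usually metered per node and apportioned) and using a nearest-rank percentile. The `Request` type and the function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    tokens: int        # tokens generated for this request
    latency_s: float   # end to end latency
    energy_j: float    # energy attributed to this request (attribution method assumed)


def nearest_rank_percentile(values: list[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def tokens_per_joule_at(requests: list[Request], q: float, budget_s: float) -> Optional[float]:
    """Tokens per joule, reported only if the q-th percentile latency meets the budget."""
    tail = nearest_rank_percentile([r.latency_s for r in requests], q)
    if tail > budget_s:
        return None  # the configuration misses its latency target, so the score is void
    return sum(r.tokens for r in requests) / sum(r.energy_j for r in requests)


requests = [Request(100, 0.1, 50.0)] * 9 + [Request(100, 2.0, 50.0)]
print(tokens_per_joule_at(requests, q=90, budget_s=0.5))  # 2.0
print(tokens_per_joule_at(requests, q=99, budget_s=0.5))  # None: one slow request breaks p99
```

Returning no score when the tail latency misses its budget keeps a team from buying efficiency with slower answers.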

If you run a startup, pick a habitat. Decide whether you are building for Ethernet centric clusters, proprietary interconnects, or hybrid topologies. Then shape your product around the cognitive instruction set that habitat encourages. You will move faster with deep fit than by aiming for universal portability.

If you build tools, create interpretable telemetry. Developers need timelines that show when the model was thinking, when it was waiting to talk to its peers, and when it was fetching memory. Put these next to the token stream. Let people see the choreography behind each answer.
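As a sketch of the raw material for such timelines, assume the runtime emits spans tagged with the output token they served and one of three hypothetical categories. Aggregating them per token gives the numbers to draw beside each token; the `Span` type and category names are illustrative, not an existing tracing format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Span:
    token: int       # index of the output token this span contributed to
    kind: str        # hypothetical categories: "compute", "collective_wait", "memory_fetch"
    start_ms: float
    end_ms: float


def per_token_breakdown(spans: list[Span]) -> dict[int, dict[str, float]]:
    """Sum span durations by kind for each output token, in token order."""
    table = defaultdict(lambda: defaultdict(float))
    for s in spans:
        table[s.token][s.kind] += s.end_ms - s.start_ms
    return {tok: dict(kinds) for tok, kinds in sorted(table.items())}


trace = [
    Span(0, "compute", 0.0, 5.0),
    Span(0, "collective_wait", 5.0, 7.0),
    Span(1, "memory_fetch", 7.0, 10.0),
    Span(1, "compute", 10.0, 12.0),
]
for tok, kinds in per_token_breakdown(trace).items():
    print(tok, kinds)
```

Keeping waiting and fetching as separate categories, rather than folding them into one "idle" bucket, is what lets a developer tell a fabric problem from a memory placement problem.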

If you are a researcher, publish hardware intent, placement traces, and memory maps alongside weights and code. It will raise the bar for your own papers and save your reviewers time. It will also make your results useful on more than one cluster.

If you are a developer, learn to read a roofline chart and a network topology diagram. Neither is exotic. Both tell you where to spend your next month of engineering. Many of the biggest gains come from cutting wait time on memory and collectives, not from inventing a new layer type. And if you are planning deployments, be mindful of siting, permitting, and grid realities. The real world constraints discussed in our piece on power, land, and local consent will shape what is possible as much as any compiler flag.
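The roofline chart reduces to one line of arithmetic. This sketch uses a hypothetical accelerator (1e15 FLOP/s peak, 3e12 bytes/s of memory bandwidth) and the usual approximation that batch-1 decoding behaves like a matrix-vector product over 16-bit weights.

```python
def attainable_flops(peak_flops: float, mem_bw_bytes_s: float, intensity: float) -> float:
    """Roofline model: throughput is capped by compute or by memory bandwidth.

    intensity is arithmetic intensity in FLOPs per byte moved from memory.
    """
    return min(peak_flops, intensity * mem_bw_bytes_s)


def ridge_point(peak_flops: float, mem_bw_bytes_s: float) -> float:
    """Intensity above which a kernel stops being memory bound."""
    return peak_flops / mem_bw_bytes_s


# Hypothetical accelerator: 1e15 FLOP/s peak, 3e12 bytes/s of memory bandwidth.
PEAK, BW = 1e15, 3e12
# Batch-1 decoding is roughly a matrix-vector product over 16-bit weights:
# 2 FLOPs (multiply + add) per 2-byte weight, so about 1 FLOP per byte.
decode = attainable_flops(PEAK, BW, intensity=1.0)
# Prints "ridge at 333 FLOP/byte; decode reaches 0.3% of peak"
print(f"ridge at {ridge_point(PEAK, BW):.0f} FLOP/byte; decode reaches {decode / PEAK:.1%} of peak")
```

A kernel left of the ridge point is memory bound, so cutting bytes moved (batching, quantization, better cache reuse) helps more than extra FLOPs would.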

A note on energy, placement, and policy

Custom silicon is often framed as a purity game about peak efficiency, but the strategy here is broader. Ethernet centric fabrics make it easier to spread inference capacity across more locations, to blend with existing data center footprints, and to flex around local supply constraints. That matters for policy and community consent. It also reduces stranded capacity when newer chips arrive, because standard networks resist obsolescence better than exotic links.

This geographic flexibility feeds back into model design. If you know a session might span two nearby pods rather than one giant box, you will choose routing policies and cache strategies that degrade gracefully. The design center shifts from absolute peak batch to percentile latency under partial contention. That shift invites more careful thinking about how a model spends time, and it connects economic goals to engineering goals.

Evaluation needs to catch up

As the mind meets the matter, models will likely feel more situated and more resource aware. They will get better at handling long, messy context because caches live closer to compute. They will get faster at dispatching work because clusters coordinate better. They may become more modular, because experts mapped to chiplets and pods can be trained and swapped with less friction. They will also become more diverse, because different hardware habitats reward different thought styles.

Diversity is healthy. It makes the field less brittle. It also makes evaluation harder. Benchmarks need to grow beyond leaderboards that hide the hardware. We will need suites that test portability, resource elasticity, and behavior under constrained collectives. We should report not only accuracy and tokens per second, but also tokens per joule at a given tail latency percentile, cache hit rates for long context tasks, and sensitivity to collective jitter. That is how we will tell whether a claimed improvement is a real improvement, or just a format change.
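One way to report sensitivity to collective jitter is a small Monte Carlo estimate. This sketch assumes a synchronous step in which all ranks meet at a collective, so the step ends when the slowest rank arrives, and it assumes exponentially distributed per-rank delays; the metric definition and the distribution are illustrative choices, not an established benchmark.

```python
import random


def mean_step_ms(ranks: int, base_ms: float, jitter_mean_ms: float,
                 steps: int = 1000, seed: int = 0) -> float:
    """Mean time of a synchronous step in which every rank waits at a collective.

    Each rank's arrival time is base_ms plus an exponentially distributed delay
    (an assumed jitter model); the step ends when the slowest rank arrives.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(steps):
        delays = [rng.expovariate(1.0 / jitter_mean_ms) if jitter_mean_ms > 0 else 0.0
                  for _ in range(ranks)]
        total += base_ms + max(delays)
    return total / steps


def jitter_sensitivity(ranks: int, base_ms: float, jitter_mean_ms: float) -> float:
    """Step-time inflation relative to a jitter-free run (1.0 means no sensitivity)."""
    return mean_step_ms(ranks, base_ms, jitter_mean_ms) / base_ms


for ranks in (8, 64, 512):
    print(ranks, round(jitter_sensitivity(ranks, base_ms=10.0, jitter_mean_ms=0.5), 3))
```

For exponential delays with mean μ, the expected maximum over n ranks is μ times the n-th harmonic number, so the inflation grows with cluster size even when each rank's jitter stays fixed. That is the kind of effect a hardware-aware benchmark should surface.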

The bottom line

The OpenAI and Broadcom deal is a milestone, but it is also a mirror. It reflects a truth that has been building for years. Intelligence is not just parameters and prompts. It is networks, memory, compilers, and packaging. When we co-design those layers with our algorithms, we are not only making the same minds faster. We are giving minds new ways to think.

The acceleration will come from that alignment. Not from the biggest model on paper, but from the best dance with the hardware underneath.