The Causality Ledger: Post-Incident Logs Teach AI Why

Europe's draft incident guidance and October threat reports are turning AI safety into an operational discipline. See how a causality ledger of logs, traces, and counterfactual replays turns compliance into speed.

Breaking: incident reporting becomes the forcing function

Something quiet but important is shifting. In fall 2025, the European Commission released a consultation that includes a template for reporting serious AI incidents. It sketches a near future where, after a harmful failure, providers must file structured accounts of what happened, how it happened, and how it was fixed. You can find the Commission’s consultation and template via the official announcement of the draft guidance and reporting template.

Days later, major labs published threat and misuse updates, including OpenAI’s October 2025 roundup of nation-state and criminal attempts to bend models to malicious ends. The point is not public relations. It is a clear signal that misuse will be investigated, disrupted, and documented at useful speed. See OpenAI’s October note on disrupting malicious uses of AI.

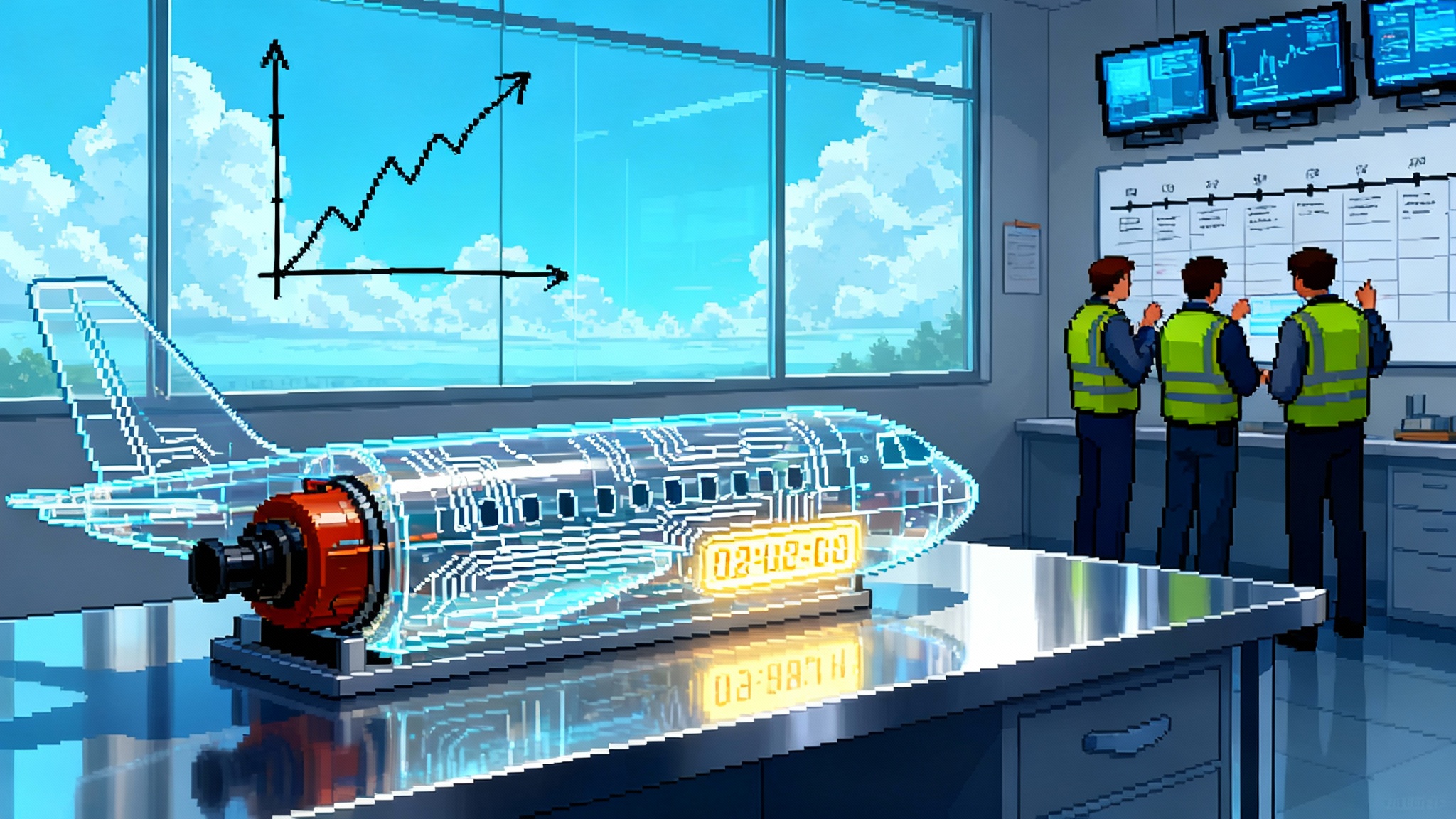

Put these together and you get a new playbook. Incident reporting moves from static ethics talk to a living discipline that forces systems to show their work. Not slogans. Not vibes. A flight recorder you can replay.

From vague ethics to operational epistemology

Operational epistemology sounds academic. In practice, it means proving how a system knows what it claims to know, using artifacts others can inspect. For artificial intelligence, those artifacts are logs, traces, test harnesses, and structured post-incident narratives. Consider three everyday moments:

- A customer agent grants a refund and records the policy rule it applied.

- An autonomous research agent issues a memo and records the sources it used and the tools it called.

- A content filter blocks a post and saves the rule, score, and context that triggered the action.

If you must file a report within days of a serious incident, you cannot rely on memory or screenshots. You need causal records that line up with reality. You need a causality ledger.

What a causality ledger contains

A causality ledger is an append-only, signed trail that makes failures legible and fixes testable. In production it looks like this:

- Flight recorder logs. Input summaries, tool and function calls, model versions, policy checks, and output signatures, all timestamped and bound to a unique run identifier.

- Standardized causal traces. Step-by-step execution graphs that show which subcomponents influenced which decisions, with stable identifiers that let auditors replay the same path.

- Counterfactual audit trails. Controlled replays that answer what would have happened if the guardrail had fired, if a different data source had been used, or if a safer policy had been in place. Store these replays alongside the original to prove that the fix addresses the real failure mode.

- Near-real-time disclosures. Short bulletins for high-severity cases that share indicators, mitigations, and whether the incident is ongoing, contained, or resolved.

All of this can respect privacy and intellectual property. Hashing, redaction, and cryptographic commitments let you commit to a specific fact without revealing the raw data, disclose it later to an authorized reviewer who can check it against the commitment, and prove that a timeline was not altered after the fact. Financial systems have long reconciled trades with cryptographic receipts. This borrows the pattern. The goal is not to expose secrets. The goal is to preserve verifiable structure.
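To make the tamper-evidence idea concrete, here is a minimal Python sketch using only the standard library. It assumes SHA-256 and two hypothetical helpers: a salted commitment that can be published now and opened later for a reviewer, and a hash chain in which each ledger entry covers the hash of the entry before it. This is not a signature scheme. A real ledger would also sign entries and anchor the latest hash with an external timestamping service, because anyone holding the whole chain could otherwise recompute it, and truncating the tail is only caught by comparing against an anchored head.

```python
import hashlib
import hmac
import json
import secrets

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def commit(value: str) -> tuple[str, str]:
    """Return (commitment, salt): publish the commitment, keep value and salt private."""
    salt = secrets.token_hex(16)  # secret random salt stops brute-forcing low-entropy values
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest, salt

def verify_commitment(commitment: str, value: str, salt: str) -> bool:
    """Check a later disclosure of (value, salt) against the published commitment."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return hmac.compare_digest(digest, commitment)

def _canonical(event: dict) -> str:
    # Canonical JSON so the same event always hashes the same way.
    return json.dumps(event, sort_keys=True, separators=(",", ":"))

def append_entry(chain: list, event: dict) -> dict:
    """Append an event whose hash covers its content and the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    entry_hash = hashlib.sha256((prev_hash + _canonical(event)).encode("utf-8")).hexdigest()
    entry = {"event": event, "prev_hash": prev_hash, "hash": entry_hash}
    chain.append(entry)
    return entry

def verify_chain(chain: list) -> bool:
    """Recompute every link; edits, removals, or reordering inside the chain are caught."""
    prev_hash = GENESIS
    for entry in chain:
        expected = hashlib.sha256((prev_hash + _canonical(entry["event"])).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

# Usage: record two steps of one run, then rewrite history.
chain: list = []
append_entry(chain, {"run_id": "run-001", "step": "tool_call", "tool": "send_email"})
append_entry(chain, {"run_id": "run-001", "step": "policy_check", "passed": False})
print(verify_chain(chain))  # True
chain[0]["event"]["tool"] = "lookup_order"  # edit an entry after the fact
print(verify_chain(chain))  # False
```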

Why mandatory filings accelerate safer deployment

Mandatory incident reporting is often framed as a tax on speed. In practice, it does the opposite when coupled with good engineering.

- Faster root cause analysis. When production agents write causal traces by default, incident teams do not need to reverse engineer behavior from scratch. They load the trace, jump to the failing step, and test candidate fixes with counterfactual replays.

- Lower change risk. When a fix is proposed, teams run it through the same replay harness across a representative set of real incidents. The result is a measured risk reduction, not a guess.

- Insurance and procurement wins. Insurers and large buyers ask two questions: how do you detect problems, and how do you recover? A causality ledger provides defensible evidence for both, which can translate into better premiums and faster vendor approvals.

- Better red teaming. Standardized traces and templated postmortems help external researchers produce precise, reproducible reports. That attracts higher quality findings and speeds remediation.

This is not a compliance burden. It is a learning loop.
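The replay loop behind lower change risk can be sketched in a few lines of Python. Everything here is hypothetical: the `RecordedCase` fields stand in for whatever a real trace stores, and both policies are toy threshold rules. What matters is the shape of the measurement: replay the same labeled incidents under the current policy and the candidate fix, then compare miss rates and false blocks instead of guessing.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class RecordedCase:
    """A replayable case rebuilt from a ledger trace (hypothetical fields)."""
    case_id: str
    classifier_score: float  # score the content classifier produced in the original run
    source_trusted: bool     # provenance tag on the retrieved context
    was_harmful: bool        # label assigned during incident review

# A policy returns True when it would have blocked the action.
Policy = Callable[[RecordedCase], bool]

def replay(cases: list, policy: Policy) -> dict:
    """Replay labeled cases under a policy and report miss and false-block rates."""
    harmful = [c for c in cases if c.was_harmful]
    benign = [c for c in cases if not c.was_harmful]
    missed = sum(1 for c in harmful if not policy(c))
    false_blocks = sum(1 for c in benign if policy(c))
    return {
        "miss_rate": missed / len(harmful) if harmful else 0.0,
        "false_block_rate": false_blocks / len(benign) if benign else 0.0,
    }

def current_policy(c: RecordedCase) -> bool:
    return c.classifier_score >= 0.9

def candidate_fix(c: RecordedCase) -> bool:
    # Hypothetical fix: a more sensitive threshold, plus blocking untrusted context.
    return c.classifier_score >= 0.6 or not c.source_trusted

cases = [
    RecordedCase("inc-1", 0.72, False, True),
    RecordedCase("inc-2", 0.95, True, True),
    RecordedCase("ok-1", 0.10, True, False),
    RecordedCase("ok-2", 0.65, True, False),
]
for name, policy in [("current", current_policy), ("candidate", candidate_fix)]:
    print(name, replay(cases, policy))
```

On these four made-up cases the candidate closes the miss on `inc-1` but starts blocking the benign `ok-2`, which is exactly the trade-off a team should see, with numbers attached, before shipping the fix.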

What the first wave of guidance gets right

The early European templates push teams to describe causal links between a system and an incident, not just the outcome. Causality demands mechanisms. It requires a plain language chain like this:

- The agent received a customer request and pulled context from three sources.

- A prompt injection in Source B bypassed a guardrail due to a parser gap.

- The model called an email tool with unescaped parameters and sent a message to the wrong distribution list.

- The safety policy did not trigger because the content classifier’s score fell below the threshold for sensitive data.

- The patch escaped the parameters, lowered the classifier threshold for this route so that similar content now triggers the policy, and added a structural guard that blocks tool calls unless the context provenance includes an approved domain.
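The structural guard in that final step might look like the Python sketch below. The `ContextDoc` type, the `approved_domains` set, and the `ToolCallBlocked` exception are hypothetical names. The guard reads the origin domain from each retrieved document’s URL, refuses the call unless that provenance includes an approved domain, and rejects line breaks in string parameters as a simple stand-in for escaping them, since line breaks are a classic email header-injection vector.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

@dataclass(frozen=True)
class ContextDoc:
    """A retrieved document with its provenance tag (hypothetical shape)."""
    doc_id: str
    source_url: str

class ToolCallBlocked(Exception):
    """Raised when a tool call fails a structural guard."""

def provenance_domains(docs: list) -> set:
    return {urlparse(d.source_url).hostname or "" for d in docs}

def guard_tool_call(tool: str, params: dict, docs: list,
                    approved_domains: set, require_all: bool = False) -> dict:
    """Allow the call only if context provenance passes and parameters are clean."""
    domains = provenance_domains(docs)
    if require_all:
        provenance_ok = bool(docs) and domains <= approved_domains
    else:
        provenance_ok = bool(domains & approved_domains)
    if not provenance_ok:
        raise ToolCallBlocked(f"{tool}: context provenance {sorted(domains)} not approved")
    for key, value in params.items():
        if isinstance(value, str) and ("\r" in value or "\n" in value):
            raise ToolCallBlocked(f"{tool}: parameter {key!r} contains a line break")
    # Return a receipt that can be stored in the trace next to the call.
    return {"tool": tool, "params": params, "provenance": sorted(domains)}

docs = [ContextDoc("A", "https://kb.example.com/refunds"),
        ContextDoc("B", "https://pastebin.example.net/raw/x")]
approved = {"kb.example.com"}
print(guard_tool_call("send_email", {"to": "support@example.com"}, docs, approved))
```

Following the wording of the patch, the default only requires that provenance include an approved domain, so an untrusted Source B can still ride along next to a trusted Source A. The `require_all=True` option is a stricter variant that blocks the call whenever any source is unapproved.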

When your template expects this level of detail, teams build to deliver it. They add provenance tags to retrieved documents, keep receipts for tool calls, and label policy decisions in the trace. The reporting tail ends up wagging the engineering dog. The system gets better.

For leaders thinking about external assurance, this aligns neatly with the case for an auditable model 10-K. Structured incident traces make financial-grade claims possible without guesswork.

The coming AI NTSB

Aviation safety changed when black boxes met independent investigators. Expect the same shape for artificial intelligence: an AI NTSB that starts as a consortium of regulators, insurers, and industry labs. Its value would not be punishment. It would be shared language.

Such a board could pilot three powers with narrow scope and strong confidentiality:

- Limited data access. Subpoena-like access to ledger entries related to critical incidents, bounded by clear rules and audited access logs.

- Anonymized factual reports. The authority to publish deidentified narratives that focus on mechanisms and failures of supervision.

- Actionable recommendations. The ability to issue safety recommendations that reference telemetry profiles and replay standards.

When five labs describe the same class of failure in five different ways, no one learns fast. An AI NTSB would turn scattered lessons into canonical playbooks.

Telemetry standards for autonomous agents

Standards do not need to be perfect to be useful. Start with a minimal schema any agent can emit:

- Run identifier. A globally unique ID per task, bound to the model build and configuration digest.

- Tool calls. A list of invocations with structured parameters, return codes, and latency, plus links to sanitized input and output summaries.

- Data provenance. Content addressed hashes for retrieved documents or data rows, including trust level and origin tag.

- Policy checkpoints. Which rules evaluated, what the scores were, and which gates passed or failed.

- Guardrail events. Triggers for abuse, privacy, or security violations, plus the disposition taken.

- Outcome signature. A compact hash of the final output and any user visible side effects.
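As a rough illustration, the six fields could map onto Python dataclasses that serialize to JSON. The class and field names are assumptions, not a published standard, and input and output summaries are stored as references (for example, IDs in a redacted store) rather than raw payloads.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field

@dataclass
class ToolCall:
    name: str
    params: dict             # structured, already-redacted parameters
    return_code: int
    latency_ms: float
    input_summary_ref: str   # link to a sanitized input summary, not the raw input
    output_summary_ref: str

@dataclass
class ProvenanceRecord:
    content_hash: str        # content-addressed hash of a retrieved document or row
    trust_level: str         # e.g. "approved" or "unverified"
    origin: str              # origin tag such as a domain or dataset name

@dataclass
class PolicyCheckpoint:
    rule: str
    score: float
    passed: bool

@dataclass
class GuardrailEvent:
    category: str            # "abuse", "privacy", or "security"
    disposition: str         # action taken, e.g. "blocked" or "escalated"

@dataclass
class AgentTrace:
    model_build_digest: str
    config_digest: str
    run_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    tool_calls: list = field(default_factory=list)
    provenance: list = field(default_factory=list)
    policy_checkpoints: list = field(default_factory=list)
    guardrail_events: list = field(default_factory=list)
    outcome_signature: str = ""  # hash of final output and user-visible side effects

    def to_json(self) -> str:
        # asdict() recurses into the nested dataclasses held in the lists.
        return json.dumps(asdict(self), sort_keys=True)

trace = AgentTrace(model_build_digest="sha256:model-build", config_digest="sha256:config")
trace.tool_calls.append(ToolCall("send_email", {"to_hash": "9f2c"}, 0, 41.7, "sum-in-1", "sum-out-1"))
trace.policy_checkpoints.append(PolicyCheckpoint("sensitive_data", 0.42, True))
print(trace.to_json())
```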

Two additions make this schema transformative:

- Counterfactual slots. For each high risk decision, a place to store replay results under alternate policies or inputs. Lightweight to store, heavy in payoff.

- Cryptographic commitments. A Merkle root for each trace, timestamped by a trusted service, so that any later change is detectable.

Standards bodies can shepherd this as a profile on existing observability formats. Vendors can ship adapters in developer kits. Cloud providers can offer managed storage with privacy filters built in. The point is not reinvention. The point is convergence on a small, practical core.

Red team marketplaces that fit AI

Bug bounties helped the web by aligning researchers and defenders. AI needs the same marketplace, tuned for safety and misuse, not only security bugs. A working market would ship with four parts:

- Test packages. Standard scenarios for jailbreaks, prompt injection, model collision, data exfiltration, and autonomy errors, each with metrics, reproduction steps, and redaction rules.

- Scoring and payouts. Clear severity ladders that pay more for systemic findings that transfer across models or products.

- Escrow and timelines. A neutral platform that holds funds and enforces disclosure deadlines so companies can patch before public release.

- Trace attachments. Submissions must include the minimal trace that reproduces the issue, which nudges companies toward the standard telemetry schema.

This is not theory. Companies already run security bounties and safety programs. The missing piece is a shared market that plugs directly into the causality ledger and rewards reproducible mechanisms over one-off stunts.

Near real time disclosure without chaos

Not every incident needs a blog post. High-severity incidents should get a status page entry and a short public bulletin within a day. A good bulletin is structured and boring:

- What changed

- What failed and why

- Who was affected and how many

- What we did and when

- What is still at risk

- What customers should watch for
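One way to keep bulletins structured and boring is to make the template a type that refuses to render while any section is blank. The Python sketch below is hypothetical, including every value in the usage example; it borrows the three status values (ongoing, contained, resolved) from the disclosure description earlier in this piece.

```python
from dataclasses import dataclass, fields
from datetime import datetime, timezone

ALLOWED_STATUS = {"ongoing", "contained", "resolved"}

@dataclass
class IncidentBulletin:
    severity: str
    status: str
    what_changed: str
    what_failed_and_why: str
    who_was_affected: str
    what_we_did_and_when: str
    what_is_still_at_risk: str
    what_to_watch_for: str
    published_at: str = ""  # ISO 8601; filled in at render time if empty

    def problems(self) -> list:
        """Names of required sections that are blank, plus an invalid status."""
        issues = [f.name for f in fields(self)
                  if f.name != "published_at" and not getattr(self, f.name).strip()]
        if self.status.strip() and self.status not in ALLOWED_STATUS:
            issues.append("status")
        return issues

    def render(self) -> str:
        issues = self.problems()
        if issues:
            raise ValueError(f"bulletin incomplete: {issues}")
        stamp = self.published_at or datetime.now(timezone.utc).isoformat(timespec="minutes")
        sections = [
            ("What changed", self.what_changed),
            ("What failed and why", self.what_failed_and_why),
            ("Who was affected and how many", self.who_was_affected),
            ("What we did and when", self.what_we_did_and_when),
            ("What is still at risk", self.what_is_still_at_risk),
            ("What customers should watch for", self.what_to_watch_for),
        ]
        header = f"[{self.severity.upper()}] Status: {self.status} (published {stamp})"
        return "\n".join([header] + [f"{label}: {text}" for label, text in sections])

bulletin = IncidentBulletin(
    severity="high",
    status="contained",
    what_changed="Support agent release added email tool access.",
    what_failed_and_why="Injected instructions in retrieved content sent an email to the wrong list.",
    who_was_affected="Recipients on one internal distribution list.",
    what_we_did_and_when="Disabled the email tool, then patched parameter handling.",
    what_is_still_at_risk="Other tools that accept free-text parameters are under review.",
    what_to_watch_for="Unexpected messages referencing refund tickets.",
    published_at="2025-10-21T19:00+00:00",
)
print(bulletin.render())
```

Writing the template as code means a half-finished bulletin fails loudly during the tabletop drill instead of during the real incident.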

The lesson from other industries is simple. Fast, factual disclosure cools speculation, deflates rumor cycles, and prevents duplicate harm. Paired with expert reviews and anonymized after action reports, it builds the sturdy kind of trust that comes from admitting error and explaining repairs.

Implementation patterns and pitfalls

Teams that adopt causality ledgers quickly discover a set of practical do’s and don’ts. The patterns below reflect real constraints in busy product environments.

- Start with high leverage routes. Do not instrument everything at once. Begin with the user journeys that combine sensitive data, tool invocation, and external actions. The content filter that can email customers is a better first target than the internal summarizer.

- Limit personal data at the source. Redact early. Use structured summaries and deterministic hashing so that traces are useful in aggregate without storing raw payloads. Set retention windows per field, not per trace.

- Avoid trace explosions. Capture step boundaries and inputs and outputs at those boundaries. Avoid logging every token or every micro function call. You want causal structure, not a firehose.

- Make replay cheap. Design your test harness so a replay feels like running a unit test. Developers should be able to load the failing trace and flip between live and counterfactual runs inside the same interface.

- Bind traces to builds. Every trace should record the model artifact digest and policy version. Otherwise you cannot tell if the fix helped or if the model simply changed.

- Separate signal from narrative. Store a machine readable trace and a human readable memo. The memo tells the story. The trace proves it.

These practices keep the ledger useful without turning it into a compliance warehouse.
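Redacting at the source and setting retention per field can start as a policy table applied before anything is written. In this hypothetical Python sketch, each field is kept, dropped, or replaced by a keyed hash (HMAC-SHA256). The keyed hash stays deterministic, so the same customer can be counted across traces, whereas a plain unkeyed hash of an email address could be reversed by hashing likely guesses. Field names, retention windows, and the hard-coded key are placeholders; a real key belongs in a secret manager.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

REDACTION_KEY = b"placeholder-key"  # hypothetical: load from a secret manager in practice

# Hypothetical per-field policy: (action, retention window).
FIELD_POLICY = {
    "customer_email": ("hash", timedelta(days=30)),
    "message_body": ("drop", timedelta(0)),
    "tool_name": ("keep", timedelta(days=365)),
    "policy_score": ("keep", timedelta(days=365)),
}

def pseudonymize(value: str) -> str:
    """Deterministic keyed hash: same input gives the same token, hard to guess without the key."""
    return hmac.new(REDACTION_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def redact(record: dict, recorded_at: datetime) -> dict:
    """Apply the field policy; unknown fields are dropped by default."""
    out = {}
    for name, value in record.items():
        action, retention = FIELD_POLICY.get(name, ("drop", timedelta(0)))
        if action == "drop":
            continue
        stored = pseudonymize(str(value)) if action == "hash" else value
        out[name] = {"value": stored, "expires_at": (recorded_at + retention).isoformat()}
    return out

def purge_expired(stored: dict, now: datetime) -> dict:
    """Remove fields whose own retention window has passed."""
    return {name: entry for name, entry in stored.items()
            if datetime.fromisoformat(entry["expires_at"]) > now}

t0 = datetime(2025, 10, 1, tzinfo=timezone.utc)
row = redact({"customer_email": "ana@example.com", "message_body": "refund please",
              "tool_name": "send_email", "policy_score": 0.42}, t0)
print(sorted(row))                                          # ['customer_email', 'policy_score', 'tool_name']
print(sorted(purge_expired(row, t0 + timedelta(days=60))))  # ['policy_score', 'tool_name']
```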

What builders can do this quarter

You do not need to wait for formal rules or a new board. Pick at least three of these five moves and implement them in the next 90 days:

- Add a flight recorder. For any production agent, record tool calls, data provenance tags, policy checkpoints, and output signatures. Store redacted samples for sensitive tasks. Bind each run to a model build digest.

- Ship a causality memo with every fix. When you patch a guardrail or prompt, include a one-page counterfactual: what would have happened before the fix and what happens now. File these memos in your knowledge base.

- Stand up a disclosure page. Define severity levels, write your first three template bulletins in advance, and do a tabletop drill. It feels like overkill until the first time it saves you.

- Join or seed a safety marketplace. Start with a private program if needed. Pay for scenario quality and transferability, not just one-off exploits.

- Tie incentives to the ledger. Reward teams that close the loop from incident to trace to counterfactual fix. Make that loop visible in performance reviews.
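A flight recorder for the first move can begin as a decorator around each tool. In the Python sketch below, `FlightRecorder`, its event fields, and the in-memory `events` list are hypothetical; a production version would write to the append-only ledger instead. Inputs and outputs are kept only as SHA-256 digests of their JSON form, in keeping with storing redacted material, and every event carries the run ID, model build digest, and policy version.

```python
import functools
import hashlib
import json
import time
import uuid

def _digest(obj) -> str:
    # default=str keeps non-JSON values from raising during serialization.
    return hashlib.sha256(json.dumps(obj, sort_keys=True, default=str).encode("utf-8")).hexdigest()

class FlightRecorder:
    """Collects events for one agent run (hypothetical in-memory sink)."""

    def __init__(self, model_build_digest: str, policy_version: str):
        self.run_id = str(uuid.uuid4())
        self.model_build_digest = model_build_digest
        self.policy_version = policy_version
        self.events: list = []

    def _append(self, event: dict) -> None:
        event.update(run_id=self.run_id, model_build_digest=self.model_build_digest,
                     policy_version=self.policy_version, ts=time.time())
        self.events.append(event)

    def tool(self, func):
        """Decorator: record every call to a tool, including failures."""
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status, result = "ok", None
            try:
                result = func(*args, **kwargs)
                return result
            except Exception as exc:
                status = f"error:{type(exc).__name__}"
                raise
            finally:
                self._append({
                    "kind": "tool_call",
                    "tool": func.__name__,
                    "input_sha256": _digest({"args": args, "kwargs": kwargs}),
                    "output_sha256": _digest(result) if status == "ok" else None,
                    "status": status,
                    "latency_ms": round((time.perf_counter() - start) * 1000, 3),
                })
        return wrapper

    def checkpoint(self, rule: str, score: float, passed: bool) -> None:
        """Record a policy decision alongside the tool calls."""
        self._append({"kind": "policy_checkpoint", "rule": rule, "score": score, "passed": passed})

recorder = FlightRecorder(model_build_digest="sha256:model-build", policy_version="refunds-v7")

@recorder.tool
def lookup_order(order_id: str) -> dict:
    return {"order_id": order_id, "amount": 42}

lookup_order(order_id="A-17")
recorder.checkpoint("refund_limit", score=0.2, passed=True)
for event in recorder.events:
    print(event["kind"], event["run_id"] == recorder.run_id)
```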

If your organization operates complex agent stacks, you will also benefit from the context in border protocols for AI labs and why long-horizon agents win. Causal traces make borders enforceable and long-horizon decisions safer to ship.

A practical forecast for 2026

- Early AI NTSB pilots launch. A multi-stakeholder board runs its first independent reviews and publishes deidentified narratives. The most-cited sections are the causal graphs and replay outcomes, not policy statements.

- Telemetry profiles converge. Observability vendors and cloud providers agree on a minimal schema for agent traces. Open source libraries make it trivial to emit compliant events from popular frameworks.

- Red team marketplaces mature. Safety bounties become normal. Researchers compete on scenario craftsmanship that breaks entire classes of systems. Vendors compete on how quickly they incorporate findings and publish counterfactual fixes.

- Compliance becomes continuous. Incident filings populate dashboards where regulators and insurers can see the pace of mitigation. Organizations with the fastest incident-to-fix cycles win enterprise deals.

None of this slows deployment. It speeds learning. Once you can explain a failure with evidence and show that the fix works under counterfactual replay, you can ship with confidence. The real friction today is not caution. It is ambiguity.

The real prize: AI that can show its work

The goal is not to generate more paperwork. It is to build systems that can show their work under stress. In the same way a pilot trusts a cockpit because each instrument is calibrated and logged, users will trust agentic systems when their decisions come with trails that engineers and investigators can verify.

The emerging mix of incident templates, public threat reports, and shared replay standards points to a new accountability stack. It blends engineering practice with institutional oversight. It rewards teams that keep ledgers, not slogans. When the next serious incident happens, the sharp question will not be who to blame. It will be how fast we can learn, prove the fix, and move forward with systems that now know how to explain themselves.