When Language Grew Hands: After Figure 03, Homes Compile

Humanoid robots just crossed from chat to chores. Figure 03 and vision language action models turn rooms into programmable runtimes. Learn how homes compile routines and why teleop data and safety will decide the winners.

The moment language touched the world

On October 9, 2025, a line moved. Figure AI unveiled a home‑oriented humanoid called Figure 03 that looks less like a factory fixture and more like something you could live with. Soft textiles, safer batteries, palm cameras, and a sensing stack shaped for household clutter signal an important shift. We are crossing from talking about tasks to doing them. The machine is built around Helix, Figure’s vision language action system, and its debut turns the home into the next software platform. For the core claims and design choices, see the announcement in Figure 03 designed for Helix and the home.

At the same time, Google DeepMind made the thesis explicit. Language is now an input to perception and motion, not just to a chat box. In March, the team introduced Gemini Robotics as a vision language action model that turns words and pixels into motor outputs. In plain terms, we just gave language hands. DeepMind’s framing is clear in Gemini Robotics brings AI into the physical world.

If the last decade taught models to chat, the next one will teach them to tidy, fetch, load, and cook. The leap is not only new hardware. It is the idea that a living room, a kitchen, and a garage are now programmable spaces.

From tokens to torque

Large language models reason in tokens. Homes demand torque. The new pipeline is tokens to trajectories to torques. You say, “Put the pot on the stove.” The model grounds your instruction in a map of the kitchen, localizes the pot with vision, plans a path around the open dishwasher, checks that the stove is off, then produces timed joint commands for a safe grasp and a stable carry. The last step runs at hundreds of control updates per second. It is nothing like a chat turn.
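The last two stages of that pipeline, trajectories to torques, can be sketched in a few lines. This is a toy illustration, not Figure’s or DeepMind’s controller. It assumes a 500 Hz loop, linear interpolation between joint waypoints, a clamped PD control law, and idealized unit-inertia joints. The names Waypoint, interpolate, pd_torque, and track are hypothetical, and the language grounding step is out of scope.

```python
from dataclasses import dataclass
from typing import List, Tuple

CONTROL_HZ = 500  # assumed rate; the text only says "hundreds" of updates per second


@dataclass(frozen=True)
class Waypoint:
    t: float                  # seconds since the motion started
    q: Tuple[float, ...]      # target joint angles in radians


def interpolate(wps: List[Waypoint], t: float) -> List[float]:
    """Linearly interpolate joint targets at time t, holding the end points."""
    if t <= wps[0].t:
        return list(wps[0].q)
    for a, b in zip(wps, wps[1:]):
        if a.t <= t <= b.t:
            s = (t - a.t) / (b.t - a.t)
            return [qa + s * (qb - qa) for qa, qb in zip(a.q, b.q)]
    return list(wps[-1].q)


def pd_torque(q_target, q, qd, kp=40.0, kd=12.0, tau_max=10.0) -> List[float]:
    """PD law per joint, clamped to tau_max as a crude force limit."""
    out = []
    for qt, qi, vi in zip(q_target, q, qd):
        tau = kp * (qt - qi) - kd * vi
        out.append(max(-tau_max, min(tau_max, tau)))
    return out


def track(wps: List[Waypoint], duration_s: float, q0: List[float]) -> List[float]:
    """Run the fixed-rate loop on toy unit-inertia joints (semi-implicit Euler)."""
    dt = 1.0 / CONTROL_HZ
    q, qd = list(q0), [0.0] * len(q0)
    for k in range(int(duration_s * CONTROL_HZ)):
        tau = pd_torque(interpolate(wps, k * dt), q, qd)
        qd = [v + tr * dt for v, tr in zip(qd, tau)]  # unit inertia: acceleration = torque
        q = [x + v * dt for x, v in zip(q, qd)]
    return q
```

With kd = 12 the joints are close to critically damped for kp = 40, so a call like track([Waypoint(0.0, (0.0, 0.0)), Waypoint(1.0, (0.5, -0.3))], 3.0, [0.0, 0.0]) settles on the final waypoint well within the three seconds. The torque clamp stands in for the force limits a real household robot would enforce.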

What changed is not just scale; it is structure. Vision language action systems fuse three things in real time: perception, world memory, and action.

- Perception handles clutter, glare, occlusion, and deformable objects.

- World memory recalls that the green sponge lives under the sink and that the top drawer sticks.

- Action converts human intent into touch, motion, and force profiles that recover when the first attempt fails.

The software stack is less like a chatbot and more like an operating system for hands.

Think of a home as a collection of typed interfaces. A drawer is an interface with detents and travel limits. A knob is an interface with rotational friction and end stops. A stretchable trash bag is an interface with elastic hysteresis. If a robot learns these types, your command is compiled against the physical constraints of your house.
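One way to read “compiled against the physical constraints of your house” is as type checking for fixtures. The sketch below is a hypothetical model, not any vendor’s API. Each fixture type carries its limits, and a command is either clamped to what the fixture allows or rejected with an error. Drawer, Knob, and ConstraintError are illustrative names, and the numbers are made up.

```python
from dataclasses import dataclass
from typing import Tuple


class ConstraintError(ValueError):
    """A command asks a fixture to do something its physics does not allow."""


@dataclass(frozen=True)
class Drawer:
    travel_mm: float                       # extension at the end stop
    detents_mm: Tuple[float, ...] = ()     # positions where the drawer clicks in

    def compile_open(self, target_mm: float) -> float:
        if target_mm < 0:
            raise ConstraintError("a drawer cannot open a negative distance")
        target = min(target_mm, self.travel_mm)          # respect the end stop
        if self.detents_mm:                              # settle into the nearest detent
            target = min(self.detents_mm, key=lambda d: abs(d - target))
        return target


@dataclass(frozen=True)
class Knob:
    min_deg: float
    max_deg: float
    friction_nm: float                     # torque needed to start the knob turning

    def compile_turn(self, current_deg: float, delta_deg: float, available_nm: float) -> float:
        if available_nm < self.friction_nm:
            raise ConstraintError("gripper cannot overcome the knob's friction")
        # Clamp the requested rotation to the end stops.
        return max(self.min_deg, min(self.max_deg, current_deg + delta_deg))
```

Under this model, asking a 450 mm drawer with detents at 0, 200, and 450 mm to open 260 mm compiles to the 200 mm detent, and asking it to open 600 mm compiles to the end stop.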

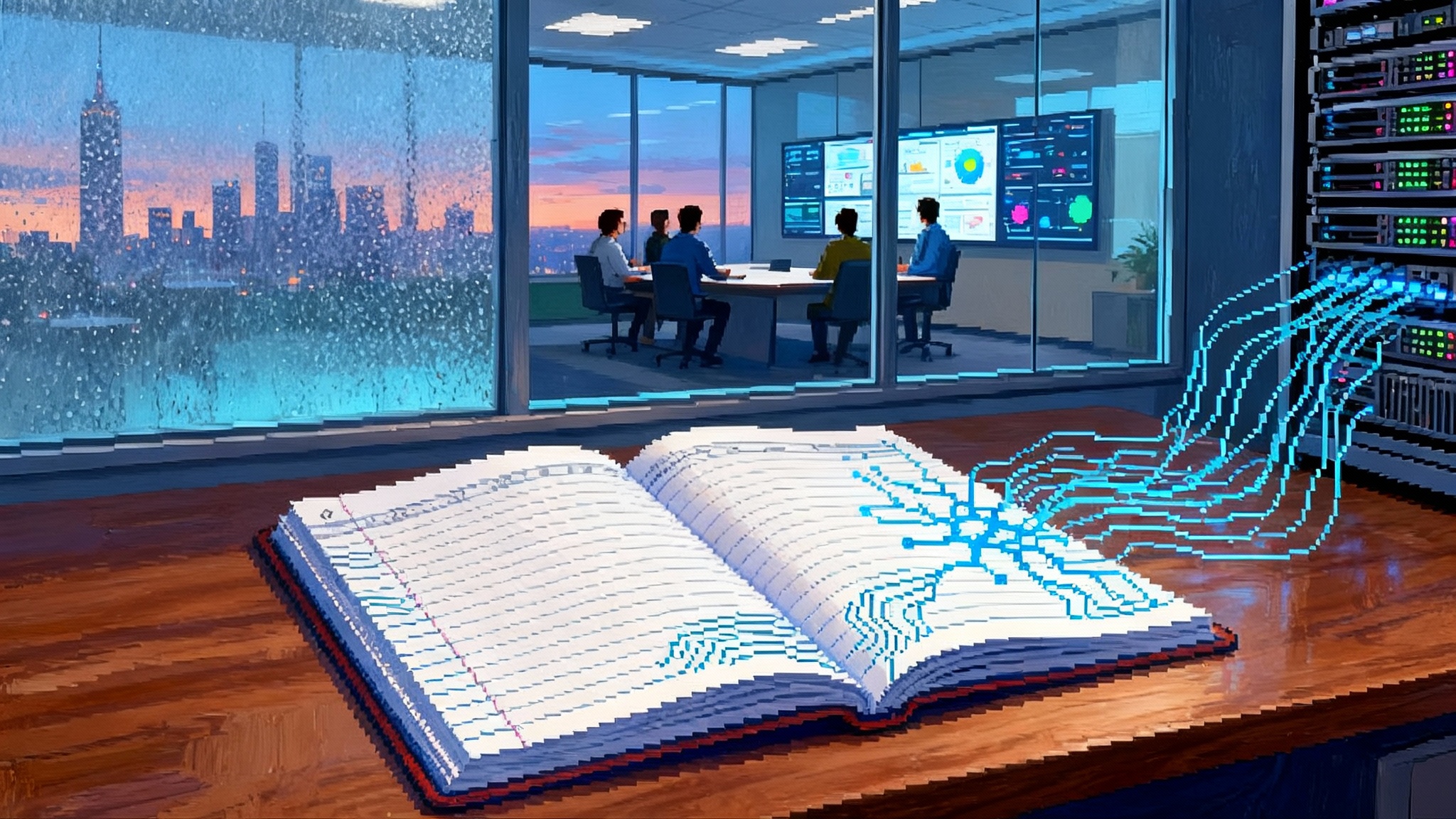

The home is a programmable runtime

The right metaphor is neither a smart speaker nor an app store. It is a runtime. The environment and the chores define the APIs. Routines become functions, rooms become namespaces, and reachable shelves become addressable spaces. A weekly routine is a cron job with real consequences: move the ladder, wipe the fan blades, pick up the dog toys so the robot vacuum can do its job.
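The runtime metaphor maps onto a small registry. In this sketch, which assumes routines are plain callables and schedules repeat weekly, rooms are namespaces and a routine becomes due on a given weekday, like a cron entry. HomeRuntime and its methods are hypothetical names for illustration.

```python
from collections import defaultdict
from datetime import date
from typing import Callable, Dict, List, Optional

Routine = Callable[[], List[str]]  # a routine returns the steps it would run


class HomeRuntime:
    """Toy registry: rooms are namespaces, routines are functions, weekdays are a schedule."""

    def __init__(self) -> None:
        self._routines: Dict[str, Routine] = {}
        self._schedule: Dict[int, List[str]] = defaultdict(list)  # weekday -> routine names

    def register(self, room: str, name: str, fn: Routine, weekday: Optional[int] = None) -> str:
        qualified = f"{room}.{name}"                 # e.g. "living_room.weekly_reset"
        if qualified in self._routines:
            raise KeyError(f"{qualified} is already defined")
        self._routines[qualified] = fn
        if weekday is not None:                      # 0 = Monday ... 6 = Sunday
            self._schedule[weekday].append(qualified)
        return qualified

    def due(self, day: date) -> List[str]:
        """Routines scheduled for this calendar day's weekday."""
        return list(self._schedule.get(day.weekday(), []))

    def run(self, qualified: str) -> List[str]:
        return self._routines[qualified]()
```

Registering "living_room.weekly_reset" with weekday=5 makes it due every Saturday; its steps (move the ladder, wipe the fan blades, pick up the dog toys, start the vacuum) stay ordered so the vacuum runs last.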

Once you see the home as a runtime, the critical features change. The important primitives are not only a faster model or a bigger battery. They are the elements that make the runtime safe, learnable, and useful.

- Persistent spatial memory that survives furniture moves and seasonal changes.

- Object embeddings that encode grasp points, surfaces to avoid, and likely locations.

- Skill libraries that store reusable behaviors like “load a dishwasher” or “fold a towel,” parameterized to your appliances and linens.

- Human‑in‑the‑loop editing so you can record, inspect, and fix a behavior step by step.

A home runtime demands interfaces that ordinary people can shape. You should be able to show the robot how to clear the breakfast table once, then name it and schedule it, with the robot previewing its plan and the risks before it starts. This is a natural extension of the conversational OS moment, where language becomes the fabric for organizing intent and permissions.
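The preview-and-consent part of that workflow can be sketched as a recorded routine whose steps carry a risk label. The three-level Risk scale, the rule that only HIGH steps pause for consent, and all class names are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class Risk(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2


@dataclass(frozen=True)
class Step:
    action: str
    risk: Risk = Risk.LOW
    note: str = ""


@dataclass
class RecordedRoutine:
    """A demonstration captured once, then named, previewed, and edited."""
    name: str
    steps: List[Step]

    def preview(self) -> List[str]:
        """Plain-language plan with risky steps flagged before anything moves."""
        lines = []
        for i, s in enumerate(self.steps, start=1):
            detail = f" ({s.note})" if s.note else ""
            flag = " [needs consent]" if s.risk >= Risk.HIGH else ""
            lines.append(f"{i}. {s.action}{detail}{flag}")
        return lines

    def consent_points(self) -> List[int]:
        """1-based indices where the robot must pause and ask before acting."""
        return [i for i, s in enumerate(self.steps, start=1) if s.risk >= Risk.HIGH]

    def edit_step(self, index: int, step: Step) -> None:
        """Human-in-the-loop fix for a single step (1-based index)."""
        self.steps[index - 1] = step
```

Editing a single flagged step, for example moving the kettle before wiping beside it, removes the consent pause without re-recording the whole routine.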

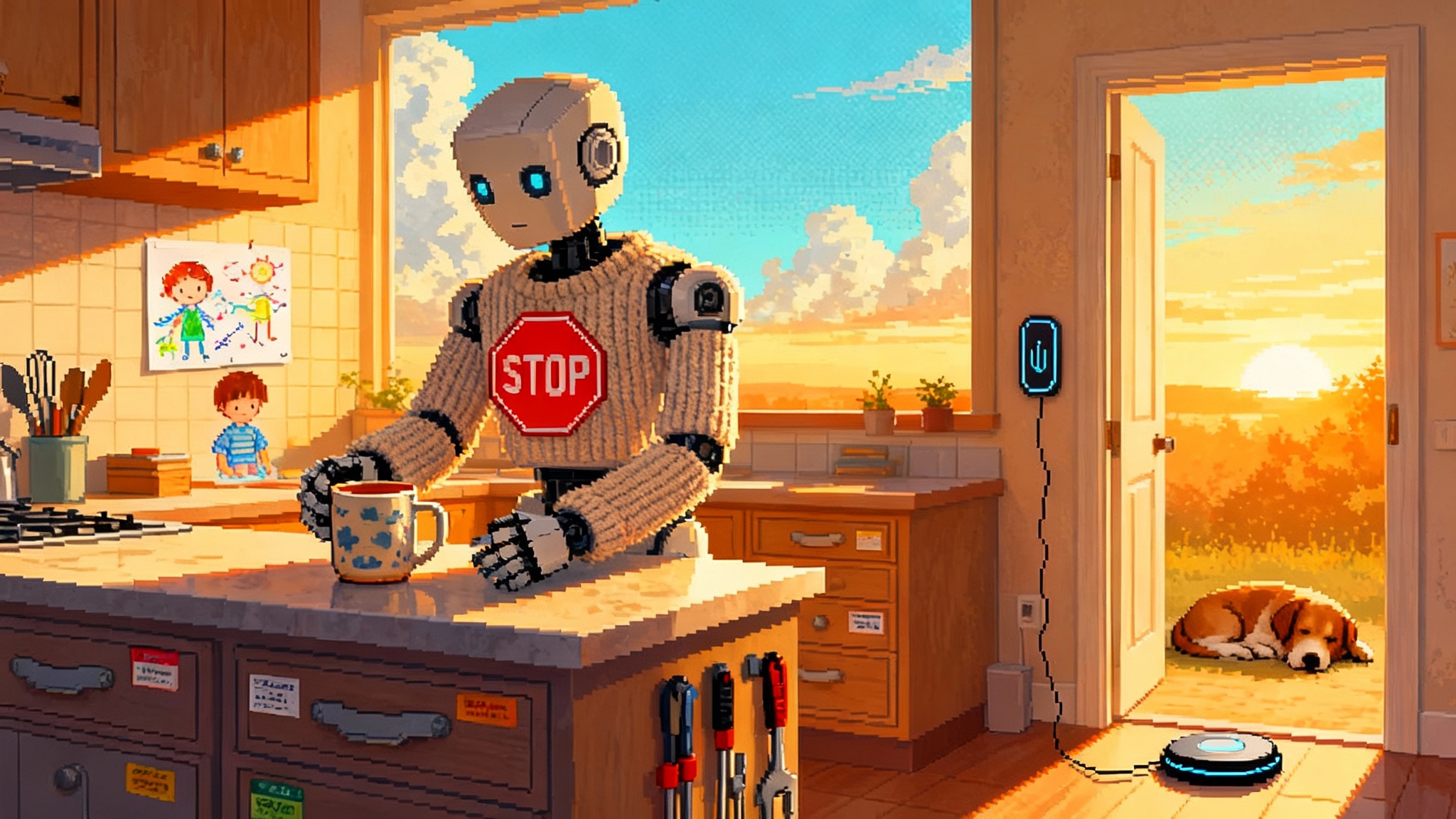

Physical affordances become part of alignment

Alignment used to mean avoiding harmful text. In the home, alignment means not crushing a mug, not opening a hot oven over a toddler’s fingers, and not walking while the cat darts underfoot. Safety becomes a property of plans, contact forces, and recovery behaviors.

Think about alignment in three layers.

- Task alignment: Is the goal allowed and helpful in context? “Make toast while the infant sleeps” might be allowed. “Chop vegetables while a preschooler plays within reach” might be blocked.

- Tool alignment: Is the tool choice safe for the material and the skill? If the onion is small, the robot chooses a smaller knife and a slower stroke, and may ask for supervision.

- Trajectory alignment: Are forces, speeds, and contact points safe? The robot throttles speed near soft tissue, avoids pinch points, and yields to contact when reaching into a drawer.
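The three layers can be written as three separate checks that a plan passes in order. This is a minimal sketch with hypothetical thresholds: the 6 cm cutoff for a small item, the stroke speeds, and the 0.2 m stop and 1 m slowdown distances are placeholders, not published safety values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    child_within_reach: bool


@dataclass(frozen=True)
class ToolChoice:
    tool: str
    stroke_speed_mps: float
    ask_supervision: bool


SHARP_TASKS = {"chop vegetables"}  # hypothetical task taxonomy


def task_allowed(task: str, ctx: Context) -> bool:
    """Task layer: block sharp-tool tasks while a child is within reach."""
    return not (task in SHARP_TASKS and ctx.child_within_reach)


def choose_tool(object_width_cm: float) -> ToolChoice:
    """Tool layer: small items get a smaller knife, a slower stroke, and a check-in."""
    if object_width_cm < 6.0:
        return ToolChoice("paring knife", 0.05, ask_supervision=True)
    return ToolChoice("chef's knife", 0.15, ask_supervision=False)


def speed_limit(distance_to_person_m: float, nominal_mps: float = 0.5) -> float:
    """Trajectory layer: full speed beyond 1 m, linear slowdown, stop inside 0.2 m."""
    stop_m, slow_m = 0.2, 1.0
    if distance_to_person_m <= stop_m:
        return 0.0
    if distance_to_person_m >= slow_m:
        return nominal_mps
    return nominal_mps * (distance_to_person_m - stop_m) / (slow_m - stop_m)


def plan_check(task: str, ctx: Context, object_width_cm: float, distance_to_person_m: float):
    """Run the layers in order; a blocked task never reaches tool or trajectory planning."""
    if not task_allowed(task, ctx):
        return "blocked", None, 0.0
    return "allowed", choose_tool(object_width_cm), speed_limit(distance_to_person_m)
```

Ordering matters in this design: the cheapest, most conservative check (is the task allowed at all?) runs first, so tool and trajectory planning only happen for permitted goals.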

Physical affordances give useful constraints. Detents tell the system where the end is. Self-closing hinges push trajectories toward safe states. Textures and materials give tactile signals that the grasp is stable. Product teams can lean into this. Build ovens with handles that communicate where fingers and sensors should go. Use visible patterns on the safe sides of tools. Add compliant skins on edges that trade a tiny hit to efficiency for a big win on safe contact.

The key point is pragmatic. Alignment is no longer only about what the model says. It is about what the body does, with sensors and materials that turn risky moments into manageable events.

Teleoperation data pipelines are the new moats

Confident household skills do not come from synthetic prompts. They come from demonstrations and corrections at scale. The winners will capture and compress millions of minutes of human teleoperation and robot-assisted practice, then turn that corpus into robust skills.

A modern teleop pipeline looks like this.

- A pilot teaches a skill in first person, with haptic gloves, joysticks, or hand-over-hand guidance, while cameras and tactile sensors record multi-view video and contact traces.

- The system segments the session into reusable chunks (grasp, lift, rotate, align, insert, verify) and tags the context (the dishwasher is a Bosch, the cup is ceramic).

- A training job turns that into a policy, then an evaluator stress-tests it in simulation and again on hardware with counterfactuals: the cup on the upper rack instead of the lower, a drawer misaligned by five millimeters, a slippery surface.

- The skill ships to a beta library with a watchdog that asks for help when confidence drops, and the teleop system captures those rare failures to refine the policy.
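Three pieces of that pipeline are easy to sketch: segmenting a session into tagged chunks, enumerating counterfactual test conditions, and a watchdog that asks for help below a confidence threshold. The sketch assumes each frame already carries a primitive label (a real system would have to infer it), and the 0.8 threshold and all names are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import groupby, product
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Frame:
    t: float
    primitive: str  # assumed per-frame label: "grasp", "lift", "insert", ...


@dataclass(frozen=True)
class Chunk:
    primitive: str
    start: float
    end: float
    context: Tuple[Tuple[str, str], ...]  # e.g. (("cup", "ceramic"), ("dishwasher", "Bosch"))


def segment(frames: List[Frame], context: Dict[str, str]) -> List[Chunk]:
    """Collapse runs of identically labeled frames into reusable, tagged chunks."""
    tags = tuple(sorted(context.items()))
    chunks = []
    for prim, group in groupby(frames, key=lambda f: f.primitive):
        items = list(group)
        chunks.append(Chunk(prim, items[0].t, items[-1].t, tags))
    return chunks


def counterfactuals() -> List[Dict[str, object]]:
    """Every combination of the perturbations named in the text."""
    return [
        {"rack": rack, "drawer_offset_mm": off, "surface": surf}
        for rack, off, surf in product(["lower", "upper"], [0.0, 5.0], ["dry", "slippery"])
    ]


@dataclass
class Watchdog:
    threshold: float = 0.8  # hypothetical confidence cutoff
    failures: List[Tuple[str, float, str]] = field(default_factory=list)

    def check(self, skill: str, confidence: float, note: str) -> str:
        if confidence < self.threshold:
            self.failures.append((skill, confidence, note))  # queued for teleop review
            return "ask_for_help"
        return "continue"
```

The watchdog’s failure list is the raw material the text describes: each low-confidence moment becomes a candidate for a teleop correction and, later, a training example.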

Two moats form quickly. The first is distribution: place more robots, collect more messy data. The second is failure density: if your robots attempt harder tasks more often, you see the most valuable errors first. The team that turns errors into training accelerates away. This is where long-horizon agents win, because compounded learning over many cycles beats isolated demos.

Failure sensitive UX beats perfect demos

In the home, graceful failure is a feature. The robot should narrate its plan in plain language, highlight risky steps, and pause for consent when stakes rise. It should show a live mini map of hands and objects so you can see and veto interactions. Controls should be tactile and simple. A physical stop paddle on the chest. A one tap rewind to the last safe state. A review pane that surfaces short clips of near misses so you can label them and get a better version tomorrow.

Interfaces also need recovery, not only prevention. If the robot drops a mug, it should apologize, sweep the shards, and notify you that it will avoid ceramics until you approve a retrain. If it cannot reach the top shelf, it should fetch a step stool from the closet and try again only if you have whitelisted that behavior. These are product choices that turn fear into trust.
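The recovery behaviors in the last two paragraphs reduce to a little bookkeeping: a stack of verified safe states for the one-tap rewind, a set of materials banned after an accident until the owner approves a retrain, and an owner-maintained whitelist of fallback behaviors. RecoveryManager and its methods are hypothetical names, and the sketch covers only the policy state, not motion.

```python
from typing import Any, Dict, Iterable, List, Set


class RecoveryManager:
    """Tracks safe checkpoints, material bans after accidents, and approved fallbacks."""

    def __init__(self, whitelisted_fallbacks: Iterable[str]) -> None:
        self._checkpoints: List[Dict[str, Any]] = []
        self._whitelist: Set[str] = set(whitelisted_fallbacks)
        self._blocked_materials: Set[str] = set()
        self.notifications: List[str] = []

    def checkpoint(self, state: Dict[str, Any]) -> None:
        """Record a state the robot has verified as safe."""
        self._checkpoints.append(dict(state))

    def rewind(self) -> Dict[str, Any]:
        """One-tap rewind target: the most recent safe state."""
        if not self._checkpoints:
            raise RuntimeError("no safe state recorded yet")
        return dict(self._checkpoints[-1])

    def on_drop(self, material: str) -> None:
        """After an accident, ban the material and tell the owner why."""
        self._blocked_materials.add(material)
        self.notifications.append(
            f"Sorry, I dropped a {material} item. I will avoid {material} until you approve a retrain.")

    def approve_retrain(self, material: str) -> None:
        self._blocked_materials.discard(material)

    def may_handle(self, material: str) -> bool:
        return material not in self._blocked_materials

    def may_use_fallback(self, behavior: str) -> bool:
        """E.g. fetching a step stool is allowed only if the owner whitelisted it."""
        return behavior in self._whitelist
```

Keeping bans and whitelists as explicit, owner-editable state is what lets the robot explain itself: every refusal traces back to a specific incident or a missing approval.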

What household safe should mean

Household safe needs a crisp, testable definition that spans labor, liability, privacy, and design. Here is a concrete proposal.

- Labor: Maintain a capability register that lists which paid tasks a robot can perform in the home and with what reliability. If a home care agency deploys robots, publish displacement and augmentation metrics by job category. Encourage workforce boards to trigger wage insurance or training funds when robot hours cross thresholds.

- Liability: Treat a home robot like a licensed driver. Require a per-robot safety log with immutable records of plans, contacts, and operator interventions. Tie insurance rates to actual incident history and to verified skills, not just a brand name. If a policy is canceled after a safety incident, force a cool-off retraining period with proof of improvement.

- Privacy: Default to on-device retention for first-person video. If cloud learning is enabled, store de-identified motion traces and tactile signals by default, and store short video clips only for rare errors, with explicit per-event consent. Add a data light switch for each room, like a camera shutter you can hear and see.

- Design: Adopt visible affordance tags for common home fixtures. A blue ring means grasp safe. A red chevron means pinch risk. A dotted line printed along a drawer’s side gives a visual rail for palm cameras to track. Publish printable tags and low cost kits so renters can retrofit without tools.
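The liability and privacy proposals can be made concrete in code. Below, “immutable records” is approximated as a tamper-evident, hash-chained log in which each entry commits to the SHA-256 hash of the previous one, and the privacy defaults become a filter that decides what may leave the device. The record fields, the 10 second cap on a “short” clip, and the rule that unlisted rooms default to off are assumptions, not part of the proposal.

```python
import hashlib
import json
from typing import Any, Dict, List, Set

GENESIS = "0" * 64


def _digest(body: Dict[str, Any]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class SafetyLog:
    """Append-only, tamper-evident log: each entry commits to the previous hash."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def append(self, kind: str, payload: Dict[str, Any]) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        # Deep-copy the payload so later edits by the caller cannot alter the log.
        body = {"kind": kind, "payload": json.loads(json.dumps(payload)), "prev": prev}
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for e in self._entries:
            body = {"kind": e["kind"], "payload": e["payload"], "prev": e["prev"]}
            if e["prev"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True


MAX_CLIP_S = 10.0  # hypothetical cap on what counts as a "short" clip


def upload_allowed(record: Dict[str, Any], cloud_learning: bool,
                   room_switch_on: Dict[str, bool], consented_events: Set[str]) -> bool:
    """Decide whether a record may leave the device under the proposed defaults."""
    if not cloud_learning or not room_switch_on.get(record["room"], False):
        return False                                  # stays on device
    if record["type"] in {"motion_trace", "tactile"}:
        return True                                   # de-identified signals by default
    if record["type"] == "video_clip":
        return bool(record.get("is_error", False)
                    and record.get("duration_s", float("inf")) <= MAX_CLIP_S
                    and record.get("event_id") in consented_events)
    return False                                      # everything else, including raw video
```

A hash chain only makes edits detectable. For an insurer to rely on it, the latest hash would also need to be anchored somewhere neither the owner nor the manufacturer can rewrite.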

This is how we translate abstract safety into everyday practice. It also points toward more auditable operations. If robots become household labor, then some version of auditable model 10-Ks will follow them into kitchens and garages.

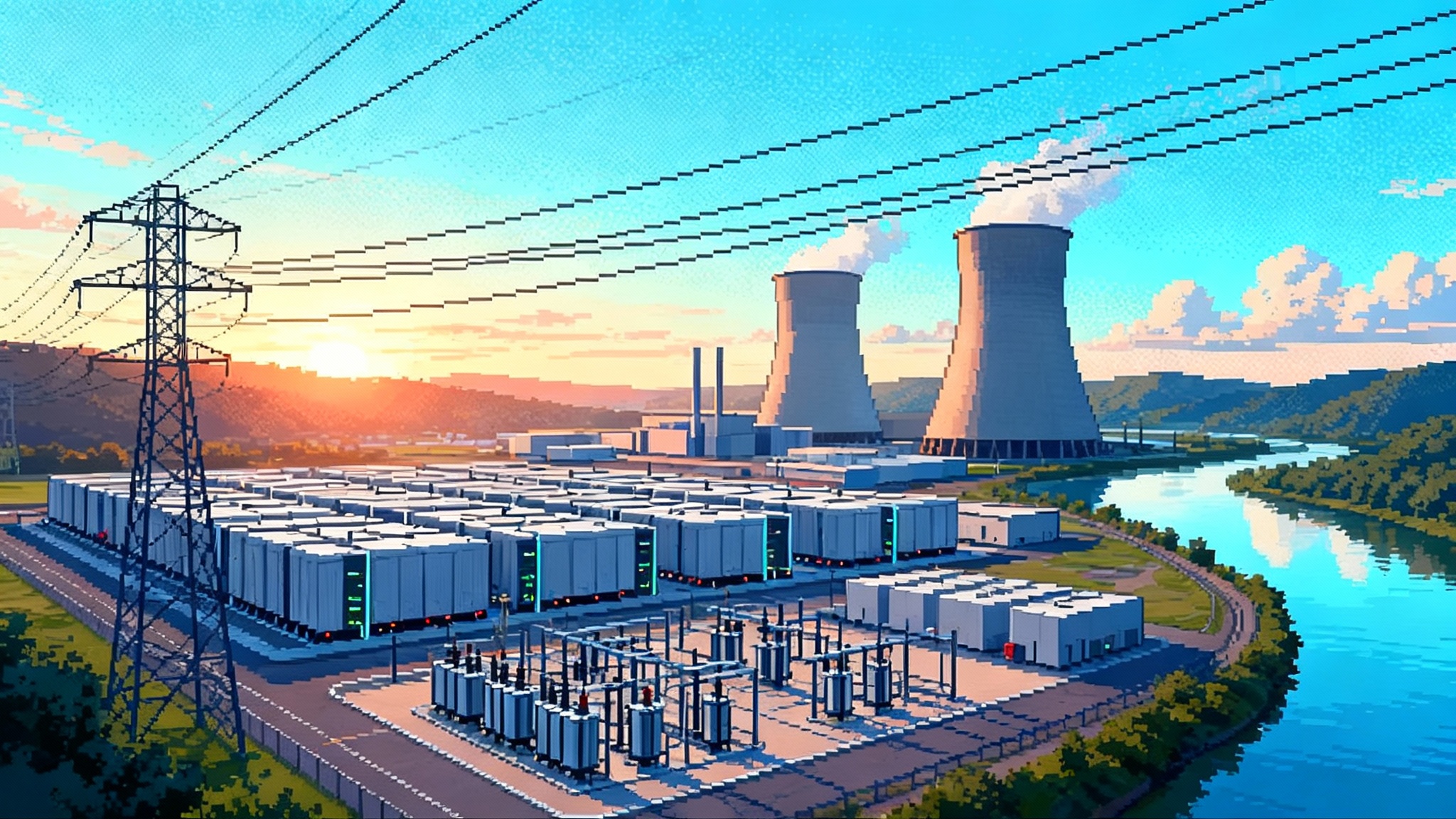

Why now favors accelerationists

Regulatory norms and data rights will harden. The companies that embrace embodiment now will shape those norms and secure the right to learn from the homes that choose to host them. Waiting means you train on synthetic kitchens while a competitor trains on real ones.

The acceleration playbook is specific.

- Run instrumented home pilots with clear danger budgets and hands-on oversight. Start with tasks that have obvious value, like laundry, dish loading, and pantry organization. Keep interventions cheap: target under 15 seconds per incident.

- Invest in failure capture. Every hesitation, regrasp, and near miss is a labeled example tomorrow. Build tools that turn those moments into updates within a week.

- Treat teleop minutes like fuel. Recruit and train operators who can teach calmly, and pay for throughput, not hours. Build scheduling and routing to swarm hard homes for fast learning bursts.

- Create a household skills lab. Buy ten brands of dishwasher and twenty types of mug. Measure success not in average time but in worst-case retries and cleanup cost.

- Partner with insurers. Offer a telemetry profile that maps to premiums. Safer robots earn cheaper policies, which lowers adoption friction.

- Ship visible safety design. Soft skins, rounded edges, and safety-validated batteries communicate respect for the context.
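Two of those playbook metrics, the 15 second intervention budget and worst-case scoring in the skills lab, fit in a short script. The sketch assumes each trial records duration, retries, cleanup time, and human intervention time. The field names and the ranking rule (worst retries, then worst cleanup, then mean time as a tiebreaker) are illustrative choices.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

INTERVENTION_BUDGET_S = 15.0  # target from the playbook: under 15 seconds per incident


@dataclass(frozen=True)
class Trial:
    fixture: str               # e.g. a dishwasher brand or mug type
    duration_s: float
    retries: int
    cleanup_min: float         # human cleanup time; the unit is an assumption
    intervention_s: float = 0.0


def over_budget(trials: List[Trial]) -> List[Trial]:
    """Trials whose human intervention exceeded the per-incident budget."""
    return [t for t in trials if t.intervention_s > INTERVENTION_BUDGET_S]


def scorecard(trials: List[Trial]) -> Dict[str, Dict[str, float]]:
    """Per-fixture worst cases, ranked worst-case first, mean time only as a tiebreaker."""
    groups: Dict[str, List[Trial]] = {}
    for t in trials:
        groups.setdefault(t.fixture, []).append(t)
    report = {
        name: {
            "worst_retries": max(t.retries for t in ts),
            "worst_cleanup_min": max(t.cleanup_min for t in ts),
            "mean_time_s": mean(t.duration_s for t in ts),
        }
        for name, ts in groups.items()
    }
    ranked = sorted(report.items(), key=lambda kv: (
        kv[1]["worst_retries"], kv[1]["worst_cleanup_min"], kv[1]["mean_time_s"]))
    return dict(ranked)
```

Under this ranking, a fixture that is slower on average but never needs more than one retry outranks a faster one that occasionally needs four, which is the trade the skills lab is meant to surface.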

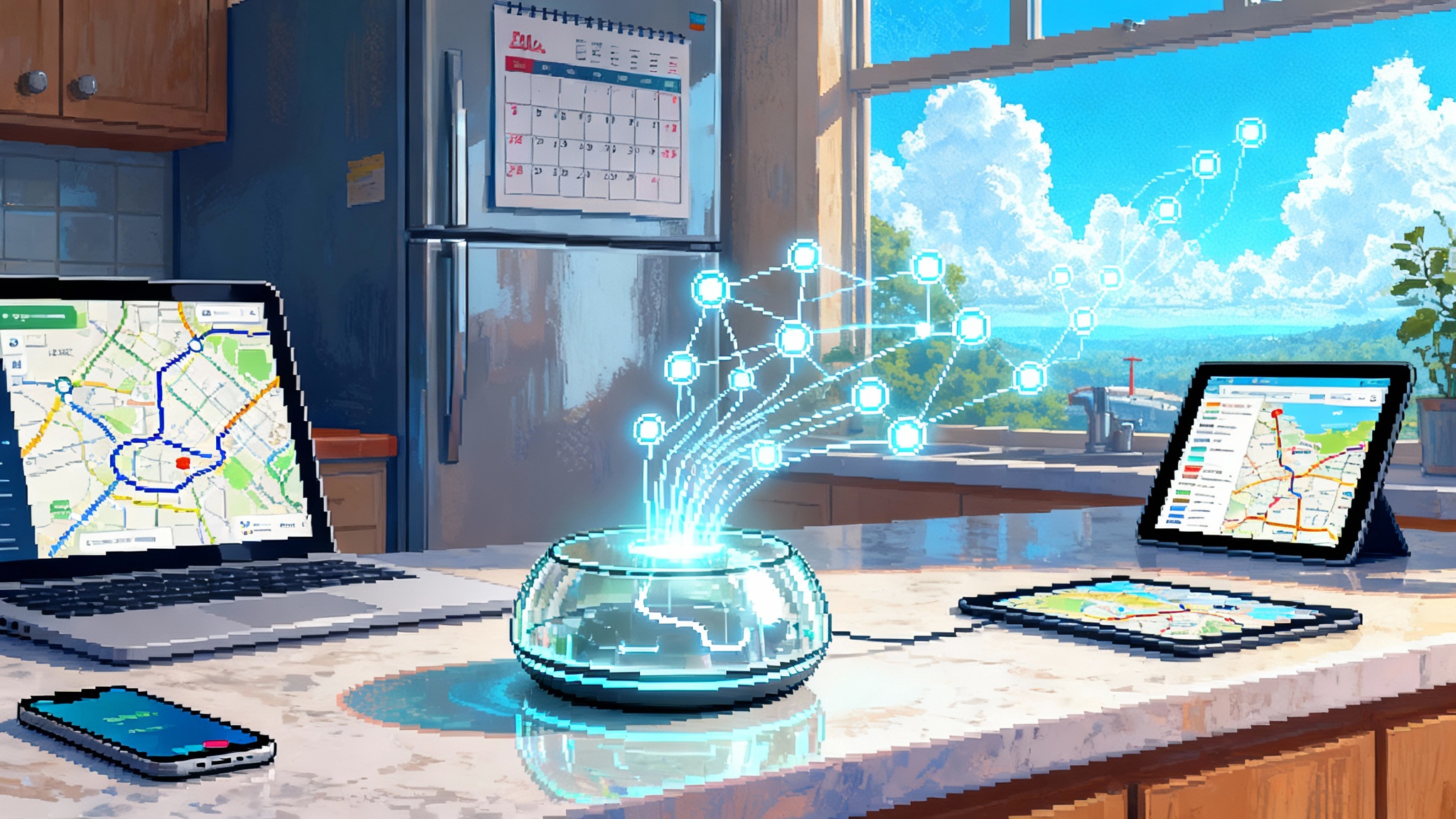

The quiet platform shift inside the house

Look at the components that will feel ordinary soon. Wireless inductive charging in the feet and a mat near the door. A wardrobe of washable soft covers that match your decor. A shelf with tools the robot can use, labeled with affordance tags. A skill editor on your phone that lets you rename and schedule a routine. A privacy light on the forehead that tells you when training is active.

This is what a programmable home looks like. The robot does not replace your choices. It compiles them into action and handles the friction that stops most routines from sticking. It is a compiler for daily life, and it will sit alongside the gravitational pull described in platform gravity, where assistants become the front door to digital experiences.

What this means for builders, policymakers, and households

- Builders: Shift from prompt engineering to behavior engineering. Hire controls experts who can teach a model to negotiate friction, backlash, and compliance. Build a library of failure-first benchmarks that capture the worst cases your users dread.

- Policymakers: Write rules that are measurable in kitchens, not only in labs. Define incident reporting standards that include contact forces and human proximity, not just vague severity scores. Offer fast track approvals for teams that publish their safety logs and verify their skill libraries.

- Households: Start with a single high value routine. Ask your robot to do it twice a day for a week and keep score. Require narration and explicit consent before risky steps. If it feels opaque, return it. If it improves every day, keep teaching it.

The second link between worlds

A model that can read a manual and then plug in a power strip without a brittle script is not a parlor trick. It is the beginning of everyday embodiment. DeepMind named the pattern. Vision language action is a model family with motor control as a native output, and that is why it generalizes across kitchens and makes new skills feel like software updates rather than bespoke rewrites.

The difference this week is that we saw a machine shaped for homes, with product decisions that acknowledge dogs, toddlers, and tired adults. You could dismiss fabric covers as cosmetics. You would miss the point. Materials and motion are part of alignment now.

Conclusion: the day homes started to compile

We will remember this fall as the moment the interface shifted from screens to surfaces. A robot that can see, remember, and manipulate objects turns a house into a runtime where chores become code. The next decade belongs to teams that treat teleoperation data as strategy, that design for graceful failure, and that prove what household safe really means. The path is clear. Give language hands, then teach those hands good habits. The winners will start today, while the norms are still wet ink.