After Watermarks: Likeness Rights and Active Provenance

Watermarks and disclosure labels are blinking. California set new chatbot rules, YouTube is adding likeness detection, and research shows robust watermarks can be erased. Here is a person-centric plan for real trust online.

The week trust labels blinked

Three announcements in six days told a clear story. On October 13, 2025, California signed a law that requires companion chatbots to disclose that conversations are artificially generated, adds break reminders for minors, and compels platforms to publish protocols for crisis response and basic transparency statistics. If you want the primary source, the governor’s office posted a readable summary under the heading Governor Newsom signs child online safety and chatbot safeguards.

Five days earlier, on October 8, YouTube said it will expand likeness detection in Studio so creators can find and request removal of videos that use their faces without permission. This extends a pilot that started with a handful of top creators and moves toward open beta for Partner Program channels.

One day before that, on October 7, a new paper reported that diffusion-based image editing can regenerate pictures in a way that wipes out robust watermarks while preserving what the human eye cares about. The gist is simple. If you think of a watermark as a faint signature in the pixels, a generative model can repaint the same scene from a text prompt and the signature disappears. The authors titled it Diffusion based editing breaks robust watermarks.

Taken together, the message is stark. Labels and watermarks help, but they will not carry trust on their own. We need a layer that treats people as first-class actors, not only content as a container.

Why watermarks and labels cannot do the job alone

Watermarks are like ultraviolet ink on currency. They work until the counterfeiter learns the trick. In the synthetic media era, the trick is regeneration. A diffusion system can push an image into noise and pull it back again, keeping composition and style while resampling every pixel. The model does not need to detect the watermark to erase it. It only needs to reproduce what the viewer notices and discard the rest.

Researchers are now demonstrating attacks that explicitly target the watermark signal as part of that loop. Detectors that once survived cropping or compression now fail after a few guided diffusion steps. The effect is not a bug in one watermarking scheme. It is an asymmetry. A watermark is a fragile secret hidden in one artifact. A model is a general machine that can remake the artifact from a short description. As the ecosystem moves toward regeneration and editing, a static signal can promise less.

Labels face a different failure mode. A label tells you a process was used. It does not tell you who consented, who gets paid, or whether a person’s identity was copied or shielded. A video can carry an authenticity label and still feature a voice the speaker never approved. A platform can comply with a disclosure rule and still make it hard for a singer to block an unauthorized clone.

The week’s headlines made that gap visible. California elevated disclosure and crisis protocols. YouTube promised detection and takedowns for faces. The watermark research showed that technical labels alone cannot defend consent. The next step is overdue. We need person-centric trust primitives.

From passive to active provenance

Think of provenance in two tiers.

- Passive provenance answers what and how. What tool produced this, what camera, what model, how it was edited, when it changed, who signed the file. That is helpful for investigation and education.

- Active provenance answers who and whether. Who has the right to use this voice, whether the person has consented, whether the license allows synthetic derivatives, whether the person has blocked this model family, whether the consent has expired. That is the layer that makes identity programmable.

Passive provenance rides on watermarks, signatures, and standards that bind edit histories to files. Active provenance adds people, policies, and enforcement paths that operate across models and platforms.

If you have been following our work on governance, you will hear echoes of the consent-forward pattern we explored in From Prompts to Permissions: The Constitution of Agentic AI. The arc is the same. Durable consent must be machine-readable, portable, and auditable, or it will be ignored at the speed of production.

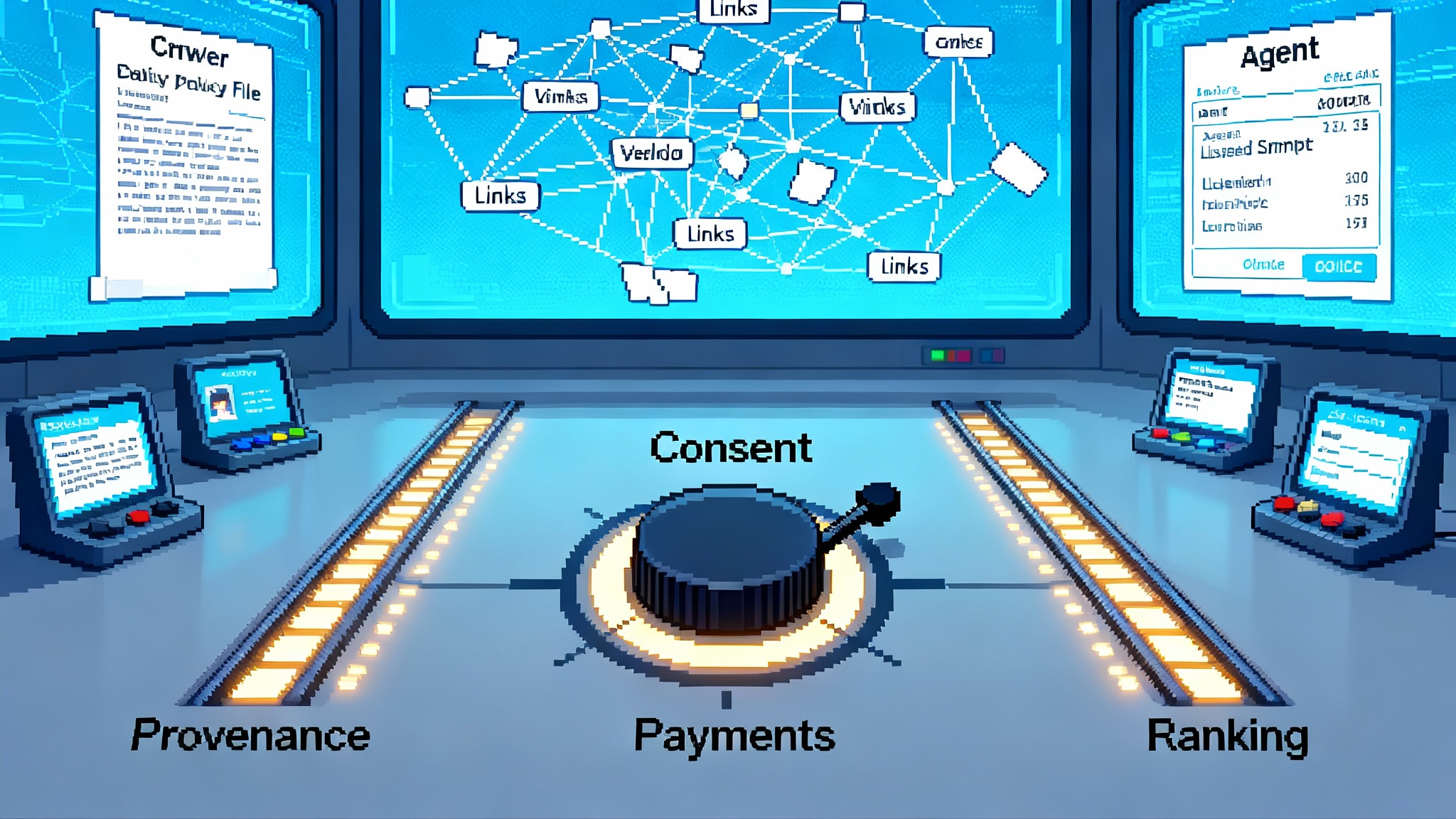

Three person-centric building blocks

Here are three building blocks that turn identity into a programmable surface while keeping builder velocity high.

1) Cryptographic identity that a phone can prove

A person should be able to assert this is me, and I approve this specific use, without exposing private data. The pragmatic path uses credentials rooted in hardware on devices people already own. Modern phones and laptops ship with secure elements that can hold keys and sign statements. Passkeys and device-bound credentials can sign a short consent message that says, in effect, Alice authorizes voice clone training with model X for advertiser Y for one year. The device never reveals the seed or a government identifier. It emits a signed attestation tied to a public key.

Model providers and platforms do not need to become identity companies. They only need to verify a signature against a public key and check a revocation list. The resolution of public keys to real people can be delegated to trusted registrars, creator agencies, or unions. The point is that a person can carry a portable identifier that speaks for them, not a fresh account per platform.
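As a rough illustration of "verify a signature against a public key and check a revocation list", here is a minimal sketch using only the Python standard library. It uses a toy Lamport one-time signature purely as a stand-in for whatever scheme a device's secure element actually provides; the function names, the `key_id` derivation, and the in-memory revocation set are all assumptions made for this example, not part of any real platform API.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(message: bytes) -> list:
    """Return the 256 bits of SHA-256(message), most significant bit first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def generate_keypair():
    """Toy Lamport keypair: 256 pairs of secrets, public key is their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public


def sign(private, message: bytes) -> list:
    """Reveal one secret per message-digest bit. A Lamport key must sign only once."""
    return [private[i][bit] for i, bit in enumerate(_bits(message))]


def verify(public, message: bytes, signature) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    if len(signature) != 256:
        return False
    return all(
        _h(part) == public[i][bit]
        for i, (part, bit) in enumerate(zip(signature, _bits(message)))
    )


def key_id(public) -> str:
    """Hypothetical short identifier: a truncated hash of the public key."""
    return _h(b"".join(a + b for a, b in public)).hex()[:16]


def accept_attestation(public, message: bytes, signature, revoked_key_ids) -> bool:
    """The platform's whole job: revocation check first, then signature check."""
    if key_id(public) in revoked_key_ids:
        return False
    return verify(public, message, signature)


# Example: the device signs a consent statement, the platform verifies it.
private, public = generate_keypair()
message = b"Alice authorizes voice clone training with model X for advertiser Y for one year"
signature = sign(private, message)
accepted = accept_attestation(public, message, signature, revoked_key_ids=set())
```

The platform side never touches the private key or any personal data. It holds a public key, a key identifier, and a revocation list, which is the narrow role the paragraph above describes.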

2) Machine-readable consent receipts

Consent needs memory. Screenshots and email threads do not scale. A consent receipt is a small, structured document that travels with the asset and can be checked by a model or an upload pipeline. It answers who, what, where, when, and limits.

- Who: the subject’s public key and an optional human-readable label

- What: the attribute covered, like face, voice, body likeness, name, or signature style

- Where: the platforms and model families allowed, or a restriction to named registries

- When: start date, expiration date, and whether renewal requires a fresh prompt

- Limits: whether derivative training is allowed, whether the likeness can be used in ads, whether satire is permitted, whether resale is allowed

A consent receipt should be signed by the subject’s key and, if applicable, countersigned by an agent or attorney. Receipts should include a revocation endpoint and a one-click pause switch that flips status from green to yellow during disputes. Pipelines can ingest the receipt and enforce it in real time.
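To make the who, what, where, when, and limits fields concrete, here is a minimal sketch of a receipt as a Python dataclass, with a canonical JSON encoding that can be hashed and a simple scope check. The field names, the example values, and the rule that unlisted uses are denied by default are assumptions for illustration, not a published schema. Signing is left out here; a signature would cover the bytes returned by `canonical_bytes`.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentReceipt:
    subject_key: str            # who: the subject's public key (hypothetical encoding)
    attribute: str              # what: "face", "voice", "name", ...
    platforms: tuple            # where: allowed platforms
    model_families: tuple       # where: allowed model families
    starts: str                 # when: ISO 8601 timestamp with UTC offset
    expires: str                # when: ISO 8601 timestamp with UTC offset
    limits: dict = field(default_factory=dict)  # use name -> allowed?
    label: str = ""             # optional human-readable label
    revocation_url: str = ""    # where verifiers check for revocation


def canonical_bytes(receipt: ConsentReceipt) -> bytes:
    """Stable encoding: sorted keys and fixed separators, so hashes are reproducible."""
    return json.dumps(asdict(receipt), sort_keys=True, separators=(",", ":")).encode("utf-8")


def receipt_hash(receipt: ConsentReceipt) -> str:
    return hashlib.sha256(canonical_bytes(receipt)).hexdigest()


def permits(receipt, *, attribute, platform, model_family, use, at) -> bool:
    """True only if every dimension of the request falls inside the receipt's scope."""
    start = datetime.fromisoformat(receipt.starts)
    end = datetime.fromisoformat(receipt.expires)
    return (
        start <= at < end
        and attribute == receipt.attribute
        and platform in receipt.platforms
        and model_family in receipt.model_families
        and receipt.limits.get(use, False)  # assumption: unlisted uses are denied
    )


receipt = ConsentReceipt(
    subject_key="pk-alice",
    attribute="voice",
    platforms=("example-platform",),
    model_families=("model-x",),
    starts="2025-10-13T00:00:00+00:00",
    expires="2026-10-13T00:00:00+00:00",
    limits={"satire": True, "ads": False, "derivative_training": False},
    label="Alice",
)
now = datetime(2026, 1, 1, tzinfo=timezone.utc)
satire_ok = permits(receipt, attribute="voice", platform="example-platform",
                    model_family="model-x", use="satire", at=now)
ads_ok = permits(receipt, attribute="voice", platform="example-platform",
                 model_family="model-x", use="ads", at=now)
renewed = replace(receipt, expires="2027-10-13T00:00:00+00:00")
```

Because the hash is computed over a canonical encoding, a renewed or edited receipt gets a different hash, so a log entry that records the hash pins down exactly which terms were in force.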

3) A likeness wallet that works across model ecosystems

People manage money with wallets. They should manage their digital self with a likeness wallet. The wallet holds keys, consent receipts, and simple toggles. It lets a person do the following.

- License their voice or face for a production, with rate cards and scope

- Escrow a likeness for safekeeping during legal negotiations, with releases that unlock upon payment

- Block model families or use cases outright, such as political ads or adult content

- Review a ledger of where and how their likeness was used, by whom, and under which receipt

- Flip a global do-not-clone flag that model registries can cache and enforce at inference time

The wallet does not need to be a new social network. It can be an application that speaks open formats and exposes simple application programming interfaces. Platforms and models can implement a read-only check that returns a green, yellow, or red status along with a signed receipt hash. Auditors and courts can verify the logs independently. Creators and celebrities can delegate wallet management to agents. Everyday users can rely on default settings that are conservative and simple.
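The read-only status check could look something like the following sketch. The `Wallet` class, its fields, and the precedence rules (blocks win over receipts, a paused receipt reads as yellow, no matching receipt reads as red) are assumptions chosen for the example. A real wallet would also sign the returned receipt hash, which is omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class Wallet:
    do_not_clone: bool = False                          # global flag
    blocked_families: set = field(default_factory=set)  # e.g. {"model-z"}
    blocked_uses: set = field(default_factory=set)      # e.g. {"political_ads"}
    # receipt_hash -> {"model_family": str, "use": str, "paused": bool}
    receipts: dict = field(default_factory=dict)

    def status(self, model_family: str, use: str):
        """Return (color, receipt_hash). Read-only: never mutates wallet state."""
        if self.do_not_clone:
            return "red", None
        if model_family in self.blocked_families or use in self.blocked_uses:
            return "red", None
        for receipt_hash, receipt in self.receipts.items():
            if receipt["model_family"] == model_family and receipt["use"] == use:
                color = "yellow" if receipt.get("paused") else "green"
                return color, receipt_hash
        return "red", None  # assumption: no matching receipt means no consent


wallet = Wallet(
    blocked_uses={"political_ads"},
    receipts={
        "hash-parody": {"model_family": "model-x", "use": "parody", "paused": False},
        "hash-audiobook": {"model_family": "model-x", "use": "audiobook", "paused": True},
    },
)
parody_status = wallet.status("model-x", "parody")
disputed_status = wallet.status("model-x", "audiobook")
political_status = wallet.status("model-x", "political_ads")
```

Keeping the check side-effect free is what lets registries cache the answer safely. Only the wallet owner, or a delegate, changes the underlying flags and receipts.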

How this meets the moment

YouTube’s plan to expand likeness detection is a promising way to find unauthorized videos and remove them. It will work better if a creator can supply a wallet-backed receipt that says allow parodies, block ads, with a signature that Studio can verify. Detection triggers a decision. The receipt makes the decision consistent and legally legible across borders.

California’s chatbot disclosure law creates a new baseline signal to users. It becomes more than a warning label when paired with identity primitives. A teen using a companion chatbot could receive an affirmation that the bot will not impersonate a friend, that the system enforces a do-not-clone list for known minors, and that any synthetic media involving a real person must check for a receipt. Agencies charged with oversight can request anonymized logs that prove the checks happened. This shifts the law from telling toward respecting.

Finally, the new research on watermark erasure underscores the point. If a model can rewrite the answer key, the grade must depend on something the model cannot counterfeit easily. That is a person’s cryptographic consent and the attestations from their device.

A lightweight architecture teams can ship this quarter

Builders do not have to wait for a grand coalition. Here is a practical blueprint that ships in quarters, not years.

- Start with verification. Add a step in onboarding that lets a creator bind a public key to their account using a device-based challenge. Store only the public key and a key identifier.

- Accept receipts. Support a simple receipt format in JSON with fields for subject, scope, model families, expiration, and limits. Require a signature from the bound key. Cache active receipts in a fast store.

- Check at the edge. At upload and at inference, call a consent check service with a claimed subject and use case. The service returns allow, require notice, or block. Fail closed for unknown subjects when content claims to depict a real person.

- Log for audit. Write append-only logs that include receipt hashes, decision results, and timestamps. Expose a privacy-preserving transparency report that aggregates checks by category and outcome.

- Add an appeal lane. When detection flags a possible likeness without a receipt, give the uploader a way to assert parody, newsworthiness, or public interest and route to human review. Keep friction low but capture the claim in a durable record.

- Integrate with model registries. Models that support likeness checks at inference can call the same service before producing a voice or face. If status is red or expired, the call returns a block reason and a short record for auditing.

- Build a wallet companion app. This can be minimal. Keys, receipts, toggles, and a log viewer. Support delegation for agencies and families.
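The check and log steps in the blueprint above can be sketched together in a few dozen lines. This is a minimal illustration under stated assumptions: the receipt store is an in-memory dict keyed by subject and use case, the field names are hypothetical, and the hash chain is one simple way to make an append-only log tamper-evident, not something the blueprint prescribes.

```python
import hashlib
import json
from collections import Counter

ALLOW, REQUIRE_NOTICE, BLOCK = "allow", "require_notice", "block"
GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def decide(receipts, subject, use_case, now, claims_real_person=True):
    """Return (decision, receipt_hash). Fails closed for unknown subjects."""
    receipt = receipts.get((subject, use_case))
    if receipt is None:
        return (BLOCK if claims_real_person else ALLOW), None
    if receipt["revoked"] or now >= receipt["expires"]:
        return BLOCK, receipt["hash"]
    if receipt["notice_required"]:
        return REQUIRE_NOTICE, receipt["hash"]
    return ALLOW, receipt["hash"]


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self):
        self._entries = []

    def append(self, receipt_hash, decision, timestamp):
        prev = self._entries[-1]["entry_hash"] if self._entries else GENESIS
        body = {"receipt_hash": receipt_hash, "decision": decision,
                "timestamp": timestamp, "prev": prev}
        self._entries.append({**body, "entry_hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev"] != prev or _digest(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True

    def summary(self) -> dict:
        """Aggregate counts by outcome, with no subject identifiers exposed."""
        return dict(Counter(entry["decision"] for entry in self._entries))


receipts = {
    ("pk-alice", "parody"): {"hash": "ab12", "expires": 2_000_000_000,
                             "revoked": False, "notice_required": True},
}
log = AuditLog()
for subject, use_case in [("pk-alice", "parody"), ("pk-unknown", "ad")]:
    decision, receipt_hash = decide(receipts, subject, use_case, now=1_760_000_000)
    log.append(receipt_hash, decision, timestamp=1_760_000_000)
```

The `summary` method is the seed of a transparency report: it counts outcomes without revealing who was checked, while `verify` gives an auditor a way to confirm that no entry was altered after the fact.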

If you want to go deeper on audit and reasoning, pair this with the logging approach we described in The Causality Ledger: Post-Incident Logs Teach AI Why. Causal logs turn an opaque decision into a trace that an auditor can replay. If you want to explore how consent turns into an economic posture, see After SMS, AI Search Flips From Scrape to Consent Economy. Both pieces complement the architecture here.

Open authenticity protocols, upgraded

Standards that bind provenance to files are improving, and they should continue. The most useful move now is to carry a person’s receipt alongside those signatures and to define a short rule set models can evaluate quickly. The rule set should be public, testable, and open source. Platforms will still compete on user experience and policy nuance, but the core schema for a consent receipt and the interface for a check should not be proprietary. This keeps costs down for small developers, encourages third-party audits, and makes it easier for creators to move between services without losing protection.

The practical trick is to avoid baking brittle assumptions into the standard. Focus on the primitives. Keys that can sign and be rotated. Receipts that declare scope and time. Logs that can be verified and redacted for privacy. A network of registries that cache global do-not-clone flags. Everything else is an implementation detail.

On-device attesters make this fast

One fear is that verification will slow down the creative loop. It does not have to. Modern devices can sign consent receipts locally and answer proof-of-possession challenges instantly. This is not a new identity regime. It is a signature that says the same device that enrolled this key approved this action. Builders can cache checks, batch logs, and preflight verification in the background while a user edits.

Attesters also help with fraud. A person can require that a new receipt be bound to a recent on-device challenge, not just a stored key. An agent can require proximity proof at signing, so a producer cannot activate consent with a stale copy. The cost is small because the operating system handles the cryptography and the application sees only a success or failure.
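The "recent challenge" requirement reduces to a small piece of server-side bookkeeping. The sketch below shows only that part: a verifier issues a random nonce, the device would include it in the signed receipt, and the verifier accepts it once and only within a time window. The class name, the 120-second window, and the injectable clock are assumptions for this example; the device-side signing is handled by the operating system and is not shown.

```python
import secrets
import time


class ChallengeIssuer:
    """Issues single-use nonces and accepts each one only while it is fresh."""

    def __init__(self, max_age_seconds: float = 120, clock=time.time):
        self._max_age = max_age_seconds
        self._clock = clock          # injectable so tests can control time
        self._issued = {}            # nonce -> time it was issued

    def issue(self) -> str:
        nonce = secrets.token_hex(16)
        self._issued[nonce] = self._clock()
        return nonce

    def redeem(self, nonce: str) -> bool:
        """True only for a known nonce, used once, within the freshness window."""
        issued_at = self._issued.pop(nonce, None)  # pop makes replay impossible
        if issued_at is None:
            return False
        return self._clock() - issued_at <= self._max_age


# Example with a controllable clock standing in for wall time.
fake_now = [1000.0]
issuer = ChallengeIssuer(max_age_seconds=120, clock=lambda: fake_now[0])

fresh = issuer.issue()
fresh_ok = issuer.redeem(fresh)      # signed right away: accepted
replay_ok = issuer.redeem(fresh)     # same nonce again: rejected

stale = issuer.issue()
fake_now[0] += 121                   # the signer waited too long
stale_ok = issuer.redeem(stale)
```

A stale copy of a signed receipt fails this check because its nonce has either been used already or has aged out, which is the property the paragraph above asks for.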

Policy that invites innovation instead of freezing it

California’s new law sets disclosure and safety duties for chatbots that target minors or can be mistaken for humans. Legislators and regulators can now create safe harbors that encourage consent checks without forcing one workflow.

- Safe harbor 1: if a platform checks for signed consent receipts when content claims to depict a real person, and logs the result for audit, then liability is limited for uploads that slip through.

- Safe harbor 2: if a model provider enforces do-not-clone lists at inference, then the provider receives protection from claims aimed only at general-purpose capability.

- Safe harbor 3: if a wallet vendor offers clear export and revocation, then platforms that honor those receipts can rely on the vendor’s signatures.

This approach invites interoperability and responsibility without freezing one standard in statute. It encourages experimentation, measurable results, and rapid upgrades. It also aligns incentives. Platforms want fewer disputes. Creators want predictable controls. Model providers want clear boundaries. Users want the truth about who is in the frame and whether they agreed to be there.

Concrete next steps for the four groups that matter

- Platforms: integrate likeness detection with receipt checks, and publish a quarterly log of how many checks ran, how many resulted in blocks, and how many appeals succeeded. Add a visible opt-in that lets creators bind a key today.

- Model providers: expose a single endpoint that accepts a consent receipt hash and returns allow or block for your model family. Cache do-not-clone lists locally and update frequently.

- Creator agencies and labels: issue wallets to clients, manage rate cards and scopes, and publish public keys for your roster in a directory so platforms can verify signatures immediately.

- Policymakers: define disclosure and crisis protocols as California did, then add safe harbors tied to receipt checks and on-device attestations. Fund open-source implementations of the receipt schema and the reference verifier.

The human frame around technical trust

It is tempting to treat provenance as a property of files and tools. That frame is incomplete. The internet is full of people, not only content. The question that matters to most viewers is not which model rendered a scene. It is whether the person depicted is a participant or a victim. That question can be answered in a format that machines and courts understand. It can be answered in milliseconds.

A portable key lets a person speak for themselves. A receipt encodes scope and time. A wallet publishes a simple green, yellow, or red status that a model or platform can check at the edge. The infrastructure to do this already exists inside devices and developer toolchains. The missing piece is the decision to make person-centric trust the default.

The big picture

Watermarks and provenance labels remain useful. They help honest people stay honest and give investigators a map of what happened to a file. The past week showed their limits. Diffusion models can regenerate media in ways that wash away hidden tags. Labels tell us that a tool was used, not whether the person in the frame agreed.

The next layer is active provenance backed by person-centric primitives. A cryptographic identity anchored in the devices people already carry. A machine-readable consent receipt that captures scope and limits. A likeness wallet that lets people license, escrow, or block their face and voice across model ecosystems. Paired with open authenticity protocols and on-device attesters, this turns identity into a programmable resource that builders can check in milliseconds.

Trust on the internet moved once already, from passwords to passkeys. It can move again, from watermarks to wallets. The sooner we make that leap, the sooner creative tools and personal rights can grow together, with speed where builders need it and control where people deserve it.