Cursor 2 and Composer bring parallel agents to the IDE

Cursor 2 introduces a multi-agent IDE and a fast in-editor model called Composer. Teams can plan, test and propose commits in parallel from isolated worktrees, turning code review into the primary loop.

The day coding turned parallel

On October 29, 2025, Cursor shipped a release that feels like a hinge in developer tools. The company introduced a redesigned multi-agent editor and its first in-house coding model, Composer, built to live inside the IDE rather than in a remote chat box. Cursor framed the shift plainly: agents first, files second. The announcement landed where developers actually work, in the editor, not in a lab demo or a benchmark thread. For details, see the launch post, “Introducing Cursor 2.0 and Composer.”

If you have tried agentic coding before, you probably used a single assistant that edits a file, asks for feedback, and repeats. Cursor 2 changes the unit of work. Instead of a solitary helper, you spin up a small team. Each agent plans, edits, tests, and proposes a diff. The editor coordinates, compares, and helps you merge what wins.

From single prompts to agent teams

The leap is not just more model calls. It is a workflow change. Multi-agent coding breaks a task into lanes and runs them concurrently:

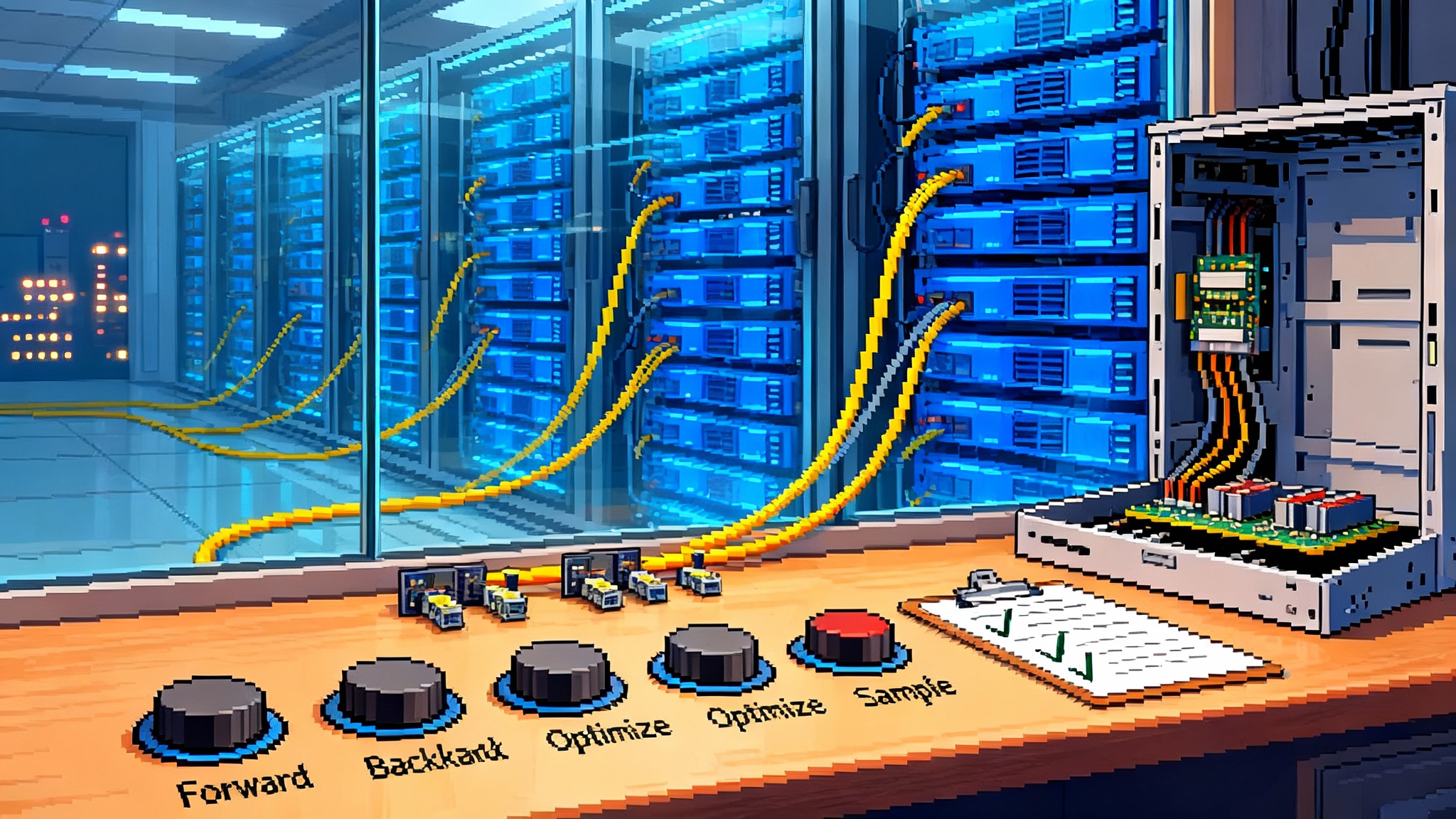

- A planner agent proposes a sequence of steps and allocates subtasks.

- A builder agent edits code and writes new modules.

- A tester agent generates tests and runs them.

- A verifier agent checks logs, traces, and diffs for regressions.

- A reviewer agent summarizes trade-offs and prepares a commit message.

This is the same division of labor teams do by hand, but now it happens inside the editor and it happens in parallel. The effect is cycle time compression. Instead of one assistant trying an approach, waiting on tests, then asking what to do next, you get several plausible approaches in the same time window and a short list of the best diffs to review.
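The fan-out described above can be sketched in a few lines. This is a minimal illustration, not Cursor's implementation: the `plan`, `run_lane`, and `run_team` names are hypothetical, and the lane body is a placeholder for real model turns and tool calls.

```python
import asyncio

# Lane names mirror the roles listed above; everything else is illustrative.
LANES = ["builder", "tester", "verifier", "reviewer"]


def plan(goal: str) -> dict[str, str]:
    """Hypothetical planner: allocate one subtask per lane."""
    return {role: f"{role} work for: {goal}" for role in LANES}


async def run_lane(role: str, subtask: str) -> dict[str, str]:
    """Stand-in for one agent; a real lane would call a model and tools."""
    await asyncio.sleep(0)  # yield control, as real I/O-bound work would
    return {"role": role, "subtask": subtask, "status": "proposed"}


async def run_team(goal: str) -> list[dict[str, str]]:
    """Run every lane concurrently and return results in lane order."""
    subtasks = plan(goal)
    return await asyncio.gather(
        *(run_lane(role, subtask) for role, subtask in subtasks.items())
    )


results = asyncio.run(run_team("gate the new checkout flow behind a flag"))
```

`asyncio.gather` returns results in the order the lanes were submitted, so the review step can rely on a stable ordering even though the lanes finish at different times.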

Think of it like a pit crew. In a one-assistant world, the car enters the pit and one mechanic changes the tires, then refuels, then wipes the windshield. In a multi-agent world, five crew members do their roles at once while the driver watches the timer. You still approve the plan, but you do not wait in a serial line for every step.

Isolated worktrees make agents safer teammates

Concurrency only helps if work does not clash. Cursor’s design isolates each agent’s changes in its own copy of the codebase, most often through git worktrees or remote sandboxes. The practical result is simple. Agents cannot step on each other’s edits. You get parallel exploration without merge chaos.

If you have used feature branches, this will feel familiar. Each agent’s environment is a disposable branch, created on demand with a clean working tree and the tools it needs. The editor corrals the outcomes back into a human-readable review. You see a roll-up of diffs across files, a plan summary, and a proposed commit title that captures intent. Approve, and the changes land. Reject, and the agent’s worktree is tossed. No hunting through temp directories, no guessing which file changed when.
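For readers who want to picture the mechanics, here is a rough sketch of the git commands such a lifecycle might map to. The branch naming scheme, the sibling-directory layout, and the helper names are assumptions made for illustration; the source does not describe how Cursor manages worktrees internally. The functions only build argument lists, so nothing touches a repository until you pass them to a process runner.

```python
from pathlib import PurePosixPath


def worktree_path(repo: PurePosixPath, agent_id: str) -> PurePosixPath:
    """Hypothetical layout: one sibling directory per agent."""
    return repo.parent / f"{repo.name}-{agent_id}"


def worktree_add_cmd(repo: PurePosixPath, agent_id: str, base: str = "main") -> list[str]:
    """Create a new branch and a clean working tree for one agent."""
    return [
        "git", "-C", str(repo), "worktree", "add",
        "-b", f"agent/{agent_id}",          # assumed branch naming scheme
        str(worktree_path(repo, agent_id)),
        base,
    ]


def worktree_remove_cmd(repo: PurePosixPath, agent_id: str) -> list[str]:
    """Discard a rejected agent's working tree, even if it has local changes."""
    return [
        "git", "-C", str(repo), "worktree", "remove", "--force",
        str(worktree_path(repo, agent_id)),
    ]


add_cmd = worktree_add_cmd(PurePosixPath("/src/shop"), "a1")
remove_cmd = worktree_remove_cmd(PurePosixPath("/src/shop"), "a1")
```

Removing a worktree leaves its branch behind, so a real cleanup step would probably also delete the `agent/...` branch once the run is rejected.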

The payoff is not only safety. Isolation encourages bolder exploration. An agent can rewrite a module or try a different library without risking the main branch. That opens space for higher-variance experiments that often produce better results on hard problems.

Why an in-editor frontier model matters

Agents are as fast as their slowest turn. Composer exists to keep turns short while holding enough context to work across large repositories. Latency matters especially when you fan out to multiple agents, because each agent takes many model turns and the run finishes only when the slowest agent does. A slow model makes parallelism feel like traffic, not progress. A fast model turns parallelism into throughput.
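A back-of-the-envelope model makes the latency point concrete. It assumes every turn costs the same and ignores tool and test time, and the turn counts and per-turn latencies are made-up numbers, not measurements of Composer or any other model.

```python
def serial_seconds(turns_per_agent: list[int], seconds_per_turn: float) -> float:
    """One assistant working through every approach back to back."""
    return sum(turns_per_agent) * seconds_per_turn


def parallel_seconds(turns_per_agent: list[int], seconds_per_turn: float) -> float:
    """Agents run side by side, so the longest agent sets the wall-clock time."""
    return max(turns_per_agent) * seconds_per_turn


turns = [12, 20, 15]  # illustrative turn counts for three agents

fast_parallel = parallel_seconds(turns, 2.0)   # 20 turns * 2 s
slow_parallel = parallel_seconds(turns, 10.0)  # 20 turns * 10 s
fast_serial = serial_seconds(turns, 2.0)       # 47 turns * 2 s
```

Under these assumptions the slow model's parallel run (200 seconds) takes longer than the fast model's fully serial run (94 seconds), which is the sense in which per-turn latency can erase the benefit of fanning out.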

Co-locating the model inside the editor reduces cognitive and operational overhead. Context is fresher. Tools like semantic search, grep, the terminal, and an embedded browser feed the model directly, so it does not waste tokens rediscovering the repo structure. In plain terms, Composer can look around the project like a teammate who already cloned the repo and ran setup.

Another gain is review speed. With agents proposing diffs instead of paragraphs, the human task becomes code review, not prompt engineering. You do not need to reconstruct how the assistant got here. You see what changed, why it changed, and how it tested that change.

The agent console is the inflection point

The most important visual in Cursor 2 is the agent console. It is a sidebar that shows active agents, their plans, and their status. You can start several agents on the same goal, let them run to completion, and compare their diffs side by side. The console is not chatter. It is an operations view for code changes.

Two design details make it practical:

- A diff-centric review panel aggregates edits across files. You can approve or request changes without tab-hopping.

- A native browser tool lets agents test user flows in the editor. For web work this removes a whole layer of alt-tab and copy-paste.

When everything sits in one place, agentic coding feels less like talking to a bot and more like managing a team. That framing matters because it clarifies your role. You direct, you approve, and you hold the bar. The agents do the busywork and the first pass.

What parallel agents change in practice

Consider a concrete scenario. Your product manager asks for a feature flag that gates a new checkout flow behind an environment variable with a fallback to existing behavior. In a single-assistant world you might prompt back and forth for an hour, fix a test, re-prompt after a linter failure, and eventually block on a flaky integration test.

With Cursor 2, you can brief a goal and launch three agents:

- Agent A implements the flag and wiring in the backend service.

- Agent B updates the frontend route guard and adds telemetry.

- Agent C writes unit tests and a stub integration test that hits the route and verifies the old path still works when the flag is off.

All three run in isolated worktrees. Agent C fails a test because the telemetry event name changed. No problem. It updates the test, re-runs, and posts a green result. You now have three diffs and a one-page summary. You pick Agent A’s backend diff and Agent C’s test diff, ask for a combined proposal, and merge. That hour of serial trial and error collapses into one review session.
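To make the scenario tangible, here is a minimal sketch of the kind of backend change Agent A might propose. The variable name `NEW_CHECKOUT_ENABLED`, the accepted truthy values, and the route names are hypothetical; the only behavior taken from the scenario is that the flag is read from the environment and anything else falls back to the existing path.

```python
import os
from typing import Mapping, Optional

FLAG_ENV_VAR = "NEW_CHECKOUT_ENABLED"  # hypothetical variable name
_TRUTHY = {"1", "true", "yes", "on"}   # assumed set of accepted values


def new_checkout_enabled(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True only when the flag is explicitly switched on."""
    env = os.environ if env is None else env
    return env.get(FLAG_ENV_VAR, "").strip().lower() in _TRUTHY


def checkout_route(env: Optional[Mapping[str, str]] = None) -> str:
    """Pick the checkout flow, defaulting to the existing behavior."""
    return "new_checkout" if new_checkout_enabled(env) else "legacy_checkout"
```

Passing the environment as a parameter keeps the function easy to test, which is the property Agent C's tests depend on: an unset or unrecognized value should always resolve to the old path.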

The same pattern works for refactors and bug hunts. Launch two or three hypotheses, let each agent chase its approach, and take the best outcome. You spend your time on the review where judgment is the scarcest resource.

Guardrails teams need right now

Parallel agents are power tools. They need guardrails. Here are the controls that enterprises should put in place before scaling usage beyond a pilot:

- Security and access

  - Issue least-privilege credentials to the editor and to agent sandboxes. Give agents the rights they need for the task and no more.

  - Prefer ephemeral tokens with time-boxed scopes over static keys. Rotate by default and expire aggressively in non-production environments.

  - Use network egress policies for agent sandboxes. If a tool does not need the public internet, do not let it out.

  - Scan dependencies and lockfiles that agents introduce. Require policy approval before adding a new package or permission.

  - Sign commits from agent runs with a dedicated identity that cannot sign releases. Keep a clean audit trail without expanding blast radius.

- Provenance and accountability

  - Record who launched the run, which model and tools were used, the prompts and files touched, and the test artifacts produced.

  - Attach that record to the pull request so reviewers see evidence without switching systems.

  - Adopt a software supply chain posture that treats agent output as generated artifacts. Use attestation and build provenance so downstream systems can verify claims like who authored a change and which tests passed.

- Evaluation and quality gates

  - Gate merges behind unit tests, integration tests, and a linting suite that runs in the agent’s own sandbox. Fail fast inside the agent workflow.

  - Maintain a regression suite of real incidents or hairy tickets. Run it on a schedule to catch drift in model or tool behavior.

  - Measure not only success rate but also recovery time. An agent that fails quickly and chooses a new plan can still save hours compared with one that dithers.
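The provenance record from the list above could look something like the following. The field names and the JSON layout are assumptions chosen for illustration, not a format defined by Cursor or by any attestation standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentRunRecord:
    """One agent run, captured so reviewers can see the evidence in the PR."""
    launched_by: str
    model: str
    tools: list[str]
    prompts: list[str]
    files_touched: list[str]
    test_artifacts: list[str] = field(default_factory=list)

    def to_pr_comment(self) -> str:
        """Serialize deterministically so the record diffs cleanly."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


record = AgentRunRecord(
    launched_by="dev@example.com",           # placeholder identity
    model="composer",
    tools=["terminal", "browser"],
    prompts=["Add a checkout feature flag"],
    files_touched=["checkout/flags.py"],
    test_artifacts=["reports/unit.xml"],
)
comment = record.to_pr_comment()
```

Keeping the record as plain JSON means the same payload can be attached to a pull request and fed to whatever attestation tooling the team adopts later.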

Early speed versus quality trade-offs

Composer is designed to be fast while keeping enough context to work across repositories. In practice, early users will see a familiar pattern with new agent flows. Throughput jumps first, then accuracy climbs as you tune prompts, tools, and tests.

Expect missteps on unfamiliar stacks, flakiness around integration tests, and occasional over-eager refactors. The fix is not blind trust or blanket skepticism. It is a short feedback loop:

- Start with narrow, high-leverage tasks that are easy to check. Examples include test generation, small feature flags, dependency updates with changelog checks, and low-risk migrations.

- Add a rules file with style conventions, preferred libraries, and prohibited patterns. The best improvements come from clarifying intent, not from inventing new prompts every day.

- Keep humans in the loop on risky changes. Attach a checklist to agent-authored pull requests that reviewers must complete. That keeps standards high even as volume climbs.

- Tune parallelism to the task. Launch fewer agents for simple fixes. Save full fan-out for hard or ambiguous work where diverse approaches help.

A simple litmus test is whether review time is shrinking. If your team still spends most of its time reconstructing what happened, fix the plan and the review surface before you scale up.

Where this fits in the broader agent stack

Cursor’s release lands in a stack that is consolidating across three layers: orchestration, tool connectivity, and transactional trust. The patterns echo across industries. We have seen agents move from demos to production in commerce, operations, and QA, and the same playbook applies in the editor.

- Orchestration. Frameworks for graph-based agents are becoming the backbone for describing workflows with branching, retries, and state. As the agent console becomes normal inside the IDE, expect teams to standardize on one orchestration layer for headless runs in continuous integration and another inside the editor that mirrors the same graphs. This mirrors how retail teams rolled out checkout automation, which we covered in “agentic checkout goes live.”

- Tool connectivity. The Model Context Protocol, introduced in 2024 and widely adopted in 2025, is making tools look like servers that any conforming agent can call. That lowers the barrier to adding internal tools like schema dashboards or deploy commands. The more the IDE and the backend share a protocol, the less glue code you maintain. The trend rhymes with the way QA teams embraced agent-to-agent testing to make it both automatable and auditable, as covered in “agent-to-agent QA arrives.”

- Transactional trust. Payments and procurement are entering the agent loop. Google’s announcement of the Agent Payments Protocol provides a missing piece for agents that must purchase services, book resources, or settle usage with receipts. The protocol defines how to authorize and verify agent-led transactions and how to produce a verifiable trail. For teams that plan to let agents acquire cloud credits, test devices, or marketplace add-ons, the standard will matter. Read the overview in Google’s Agent Payments Protocol announcement.

Put together, you can see a 2026 picture forming: editor-resident agents for design and development, headless agents in continuous integration and continuous delivery, a unifying orchestration graph, a shared tool protocol, and a payments protocol for anything that crosses money or resource boundaries. The control plane looks like an agent command center with runs, metrics, budgets, and policies. The data plane is worktrees or sandboxes where code changes happen with strong isolation.

These layers also intersect with memory. Teams that invest in durable context, style guides, and example banks will see compounding returns as agents reuse what worked. This echoes a broader shift we covered in “the memory layer moment,” where shared memory unlocked better performance across tasks.

How to pilot Cursor 2 in your org next quarter

Here is a pragmatic plan that teams can follow without boiling the ocean:

- Choose a repo with good tests and active development. Avoid your thorniest monorepo for the first month.

- Catalog three to five task types to automate. Examples include upgrading a library across services with changelog checks, adding a small feature flag, fixing a flaky test, migrating a logging call, or backporting a bug fix.

- Define policy and telemetry before you begin. Set agent identities, commit signing, and a run ledger that records model, tools, prompts, diffs, and tests.

- Stand up a sandbox runner. Decide whether you will use local worktrees or remote machines for isolation and performance. Pre-load caches for installs and builds.

- Build a slim evaluation harness. For each task type, specify success checks and failure patterns the agents should recognize. Keep it small but real.

- Start with two or three agents per task. Capture outcomes, review time, and escape hatches where humans had to step in. Double the parallelism only when review time is consistently falling.

- Close the loop. Convert recurring review comments into rules. Add positive examples the agents can copy. Update the evaluation harness with new failure cases.

- Publish weekly summaries to the team. Show time saved, defects escaped, and money spent. Sunlight is how you build trust.

By the end of a focused six-week pilot you should know where agent teams pay for themselves and where you need more scaffolding. You will also have logs and artifacts to inform a broader rollout.
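The rule in the plan about doubling parallelism only when review time is consistently falling can be written down as a small policy function. This sketch reads "consistently falling" as a strict week-over-week drop across a fixed window; the window length, the cap, and the function name are all assumptions you would tune for your own team.

```python
def next_parallelism(
    current: int,
    weekly_review_minutes: list[float],
    window: int = 3,  # assumed: require three consecutive weekly drops
    cap: int = 8,     # assumed upper bound on agents per task
) -> int:
    """Double the agent count only after `window` consecutive weekly drops."""
    recent = weekly_review_minutes[-(window + 1):]
    if len(recent) < window + 1:
        return current  # not enough history yet, hold steady
    falling = all(later < earlier for earlier, later in zip(recent, recent[1:]))
    return min(current * 2, cap) if falling else current
```

For example, four weeks of median review time at 50, 45, 40, and 32 minutes would move a team from two agents per task to four, while a single week of regression in that window would keep it at two.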

What to measure to prove value

It is easy to be impressed by a slick demo. Proving value requires numbers that hold up in daylight. Track a small set of leading indicators:

- Median time from intent to merged commit for common task shapes.

- Reviewer time per change and the number of review comments per diff.

- Test coverage of the code touched by agent-authored changes, and the pass rate of those tests.

- Rework rate in the first two weeks after merge for agent-authored changes versus human-authored changes.

- Dollar cost per merged change including model time and sandbox compute.

These metrics tell you whether parallel agents are moving the needle where it matters. If throughput rises but rework also rises, tighten your gates. If reviewer time is flat, invest in clearer plans, better diff summaries, and rules files that remove ambiguity.
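A few of these indicators are simple enough to compute from a list of merged changes. The record shape and the sample numbers below are invented for illustration; in practice the data would come from your version control and review tooling.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class MergedChange:
    agent_authored: bool
    hours_intent_to_merge: float
    reviewer_minutes: float
    reworked_within_14_days: bool
    cost_usd: float  # model time plus sandbox compute


def rework_rate(changes: list[MergedChange]) -> Optional[float]:
    """Share of changes that needed rework in the first two weeks."""
    if not changes:
        return None
    return sum(c.reworked_within_14_days for c in changes) / len(changes)


def summarize(changes: list[MergedChange]) -> dict[str, Optional[float]]:
    agent = [c for c in changes if c.agent_authored]
    human = [c for c in changes if not c.agent_authored]
    return {
        "agent_median_hours_to_merge": median([c.hours_intent_to_merge for c in agent]) if agent else None,
        "agent_median_reviewer_minutes": median([c.reviewer_minutes for c in agent]) if agent else None,
        "agent_rework_rate": rework_rate(agent),
        "human_rework_rate": rework_rate(human),
        "agent_cost_per_merged_change": sum(c.cost_usd for c in agent) / len(agent) if agent else None,
    }


# Made-up sample data: three agent-authored and two human-authored changes.
sample = [
    MergedChange(True, 2.0, 10, False, 1.5),
    MergedChange(True, 4.0, 20, True, 2.5),
    MergedChange(True, 3.0, 15, False, 2.0),
    MergedChange(False, 8.0, 30, False, 0.0),
    MergedChange(False, 6.0, 25, True, 0.0),
]
summary = summarize(sample)
```

Comparing `agent_rework_rate` with `human_rework_rate` side by side is the quickest way to spot the "throughput up, rework up" pattern described above.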

Practical risks and how to manage them

Even with good guardrails, parallel agents introduce new failure modes. Here are three to anticipate early:

- Overlapping migrations. Two agents might independently decide to bump a dependency or rename a symbol. Isolation prevents clobbering, but you still need a policy on which agent is allowed to touch shared interfaces in a given run. The fix is a simple preflight check in the planner: if a change touches a shared interface, lock that lane to a single agent.

- Tricky tests. Agents can generate lots of tests quickly, but the value lies in stable, meaningful checks. Invest in a small library of project-specific helpers and fixtures so generated tests look like your best human tests.

- Prompt drift. As people tweak prompts to get a stuck task moving, definitions can diverge. Store prompts alongside code, treat them like source, and review changes the same way you review code changes. Add a job that flags prompt diffs that skip review.
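The preflight check suggested for overlapping migrations might be as small as the sketch below. The glob patterns, the first-claim-wins policy, and the function names are assumptions for illustration; a real planner would take its list of shared interfaces from the repository's own ownership rules.

```python
from fnmatch import fnmatch

# Hypothetical patterns marking files that count as shared interfaces.
SHARED_INTERFACE_GLOBS = ["package.json", "api/*.proto", "libs/shared/*"]


def touches_shared_interface(path: str) -> bool:
    return any(fnmatch(path, pattern) for pattern in SHARED_INTERFACE_GLOBS)


def assign_lanes(planned: dict[str, list[str]]) -> dict[str, list[str]]:
    """Let only the first agent that claims a shared file edit it.

    `planned` maps agent name to the files it intends to change. Later
    claims on an already-locked shared file are dropped from that agent's lane.
    """
    owner: dict[str, str] = {}
    allowed: dict[str, list[str]] = {}
    for agent, paths in planned.items():
        kept = []
        for path in paths:
            if touches_shared_interface(path) and owner.setdefault(path, agent) != agent:
                continue  # another agent already holds the lock on this file
            kept.append(path)
        allowed[agent] = kept
    return allowed


lanes = assign_lanes({
    "agent_a": ["package.json", "src/flag.py"],
    "agent_b": ["package.json", "web/guard.ts"],
})
```

Here `agent_a` keeps the dependency bump and `agent_b` is limited to its own files, so the two isolated worktrees cannot come back with conflicting versions of the same shared file.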

The editor is now the agent platform

The meaning of Cursor’s October 29 release is not just that one company built a faster coding model. It is that the editor became an operations surface for agent teams. With a frontier model in the loop and an agent console that plans, tests, and proposes commits from isolated worktrees, developers can trade prompt juggling for outcome selection.

The consequences are immediate. Cycle times shrink, quality gates move earlier, and teams spend more time on review and integration than on orchestration. We will still argue about benchmarks, and we should, but the direction of travel is clear. The next year will be about operationalizing agents across the software development life cycle with clear policies, durable protocols, and better reviews.

If you are a developer, your job stays the same at its core. You still design systems, set standards, and make the call. You just do it with more parallelism and better instruments, right where you always have, in the editor.