The Opt-In Memory Divide: Why Assistants Learn to Forget

AI assistants are shifting to consent-first memory and longer continuity. The next moat is selective recall, revocation that works, and portable profiles. This playbook shows how to earn trust and win users.

Breaking news: assistants now ask before they remember

A quiet but profound shift is underway in consumer AI. Several assistant providers are moving to explicit opt-in training and clearer memory controls, while also extending how long chats can be referenced to improve continuity. OpenAI has rolled out memory you can turn on or off, with review tools and the ability to reference past conversations to personalize replies. Users control both saved memories and chat history. See the specifics in OpenAI memory controls for ChatGPT.

At the operating system layer, Microsoft learned the hard way that always-on recall creates a backlash. After researchers raised security risks, the company made its desktop-wide Recall feature optional, tightened encryption, and required authentication to view timelines. Those changes are laid out in Microsoft Recall update details.

Taken together, these moves draw a line in the sand. The industry is standardizing on asking before learning and on giving people ways to see and prune what the assistant keeps. The race is not only about bigger models. It is about smarter memory that is consentful, selective, and accountable.

The new moat is memory, not model size

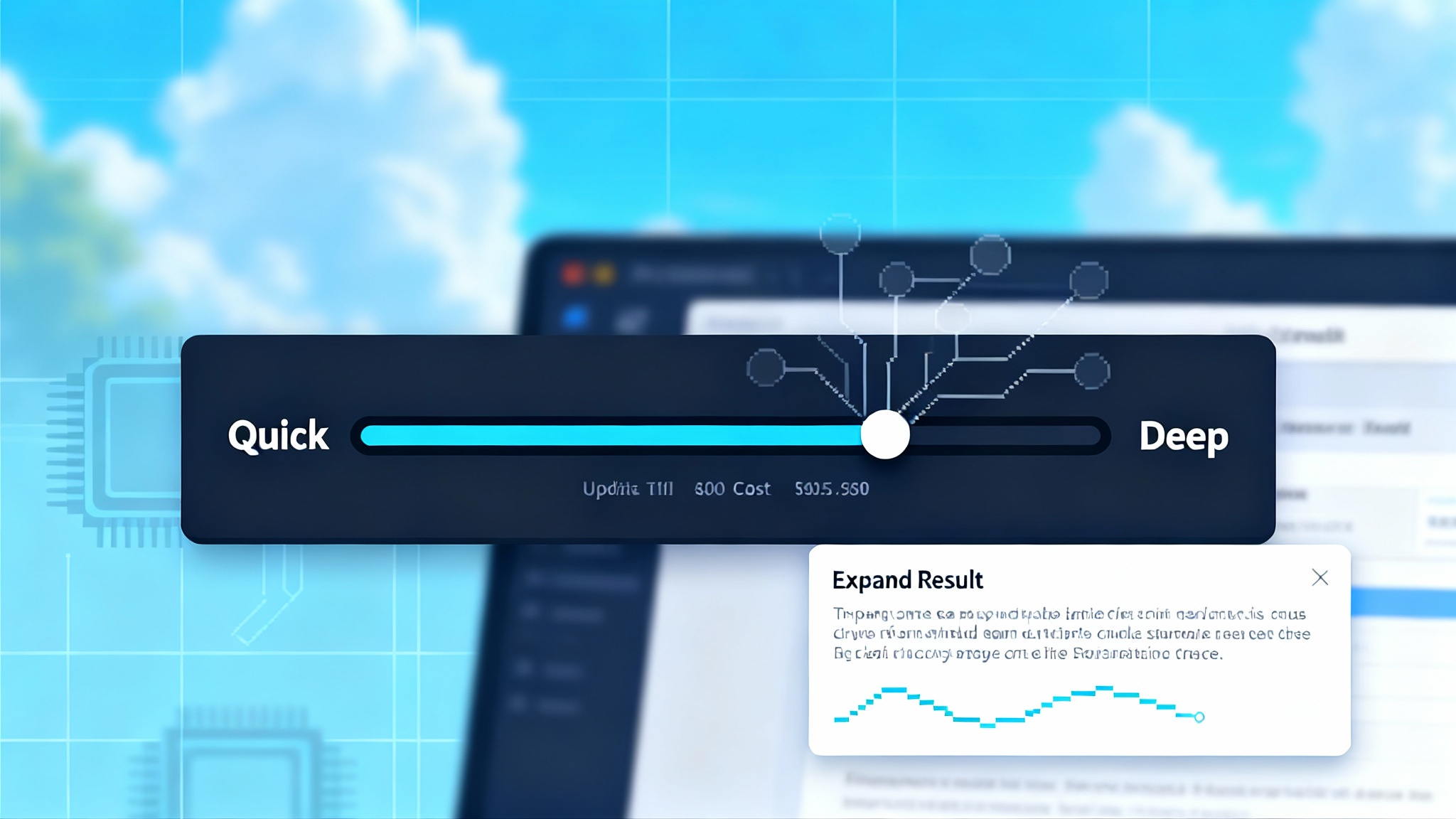

For a year, the scoreboard was parameter counts and benchmark wins. That contest is flattening. Improvements still come, but each point costs more compute and time. Meanwhile, a different curve is steep: how well an assistant remembers what matters to you.

Memory is not just storage. It is preference, routine, and intent. The assistant that remembers you like Chicago-style citations, book night flights, and call your father "Dad" will feel far more capable than a slightly larger model that forgets the basics every session. Think of it as the difference between a giant public library and a well-organized home office. The library holds more knowledge. The office holds your notes, your calendar, and your tools. You get more done.

That is why the next moat is built from three M's: meaning, mechanisms, and maintenance.

- Meaning: the assistant selects only what is useful about you, not every line you ever typed.

- Mechanisms: the assistant stores those facts in ways that are safe to carry, revoke, and decay over time.

- Maintenance: the assistant gives you the steering wheel to audit, edit, and erase.

Companies that excel at those three will unlock trust, switching costs, and day-to-day utility that raw model size cannot buy.

Trustware: design for selective, revocable, time-decayed memory

We need a design language for memory that treats consent and control as first-class features. Call it trustware. Trustware is software that proves it can be trusted because of how it is built, not because a privacy page says so. If you are shaping an assistant, build to these principles.

1) Selection at the source

- Only remember what a user explicitly marks or what a clear rule allows. Examples: "Remember my dietary restriction: gluten free." "Remember my preferred citation style: Chicago." "Never store anything tagged health."

- Before inferring sensitive attributes, show visible prompts and ask permission. No silent scraping of personal details.
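A minimal sketch of a selection gate, assuming each candidate memory arrives with a flag for whether the user explicitly asked to remember it and a set of topic tags produced by some upstream classifier. The names `Candidate`, `SelectionPolicy`, and `should_remember` are hypothetical. The gate returns "store", "ask" (show a visible prompt), or "drop", and it drops by default.

```python
from dataclasses import dataclass, field

@dataclass
class Candidate:
    text: str
    explicit: bool                      # True if the user said "remember ..."
    tags: set[str] = field(default_factory=set)

@dataclass
class SelectionPolicy:
    blocked_tags: set[str]              # user rules such as "never store health"
    sensitive_tags: set[str]            # inferred items here need a visible prompt
    allowed_tags: set[str]              # tags a clear, user-approved rule may auto-save

def should_remember(c: Candidate, policy: SelectionPolicy) -> str:
    """Return 'store', 'ask', or 'drop' for a candidate memory."""
    if c.tags & policy.blocked_tags:
        return "drop"                   # a "never store" rule always wins
    if c.explicit:
        return "store"                  # the user explicitly marked it
    if c.tags & policy.sensitive_tags:
        return "ask"                    # sensitive inference: ask permission first
    if c.tags & policy.allowed_tags:
        return "store"                  # covered by a clear rule
    return "drop"                       # default: no silent remembering
```

Checking blocked tags first means a user's "never" rule overrides everything else, including the assistant's own guesses.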

2) Granular scopes

- Split memory into scopes like personal, professional, and household. A family recipe does not belong in a work scope. A client preference does not belong in personal.

- Let users assign a scope with one tap when saving a memory, and change scope later without retyping.
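A small sketch of scoped storage, assuming a fixed set of three scopes and an in-memory dictionary as the store. `ScopedMemoryStore` and its methods are illustrative names, not an existing API. Re-scoping edits only metadata, so the user never retypes the memory, and retrieval sees only the active scope.

```python
import uuid
from dataclasses import dataclass

SCOPES = {"personal", "professional", "household"}

@dataclass
class Memory:
    id: str
    text: str
    scope: str

class ScopedMemoryStore:
    """Hypothetical store that keeps every memory in exactly one scope."""

    def __init__(self) -> None:
        self._items: dict[str, Memory] = {}

    def save(self, text: str, scope: str) -> Memory:
        if scope not in SCOPES:
            raise ValueError(f"unknown scope: {scope}")
        mem = Memory(id=str(uuid.uuid4()), text=text, scope=scope)
        self._items[mem.id] = mem
        return mem

    def change_scope(self, memory_id: str, new_scope: str) -> None:
        # One-tap re-scope: only the label changes, the text is untouched.
        if new_scope not in SCOPES:
            raise ValueError(f"unknown scope: {new_scope}")
        self._items[memory_id].scope = new_scope

    def in_scope(self, scope: str) -> list[str]:
        # Retrieval is filtered by scope, so work never sees household items.
        return [m.text for m in self._items.values() if m.scope == scope]
```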

3) Revocation that actually works

- Deleting a chat is not enough. Provide a Memory Dashboard that lists atomic memories with their sources and last use. Allow single-click forget for each item. When a memory is deleted, it disappears from all future retrieval and from any fine-tuned adapters tied to it.

- Issue a deletion receipt with a unique identifier and timestamp so users can track what was removed.
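The retrieval side of revocation can be sketched as follows, assuming a dictionary-backed dashboard where each entry is one atomic memory with its source and last use. `MemoryDashboard` and `DeletionReceipt` are hypothetical names, and adapter cleanup is assumed to happen elsewhere. Forgetting removes the entry so no later lookup can return it, then issues a receipt with a unique identifier and a UTC timestamp.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class DeletionReceipt:
    receipt_id: str
    memory_id: str
    deleted_at: str  # ISO 8601 timestamp in UTC

class MemoryDashboard:
    """Hypothetical dashboard backing store: one atomic memory per entry."""

    def __init__(self) -> None:
        self.memories: dict[str, dict] = {}
        self.receipts: list[DeletionReceipt] = []

    def add(self, memory_id: str, text: str, source: str) -> None:
        self.memories[memory_id] = {"text": text, "source": source, "last_used": None}

    def retrieve(self, query: str) -> list[str]:
        # Naive keyword match over live memories; each hit records its last use.
        hits = []
        for mem in self.memories.values():
            if query.lower() in mem["text"].lower():
                mem["last_used"] = datetime.now(timezone.utc).isoformat()
                hits.append(mem["text"])
        return hits

    def forget(self, memory_id: str) -> DeletionReceipt:
        # Single-click forget: drop the entry, then hand the user a receipt.
        del self.memories[memory_id]
        receipt = DeletionReceipt(
            receipt_id=str(uuid.uuid4()),
            memory_id=memory_id,
            deleted_at=datetime.now(timezone.utc).isoformat(),
        )
        self.receipts.append(receipt)
        return receipt
```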

4) Time decay by default

- Set a sensible time-to-live for every memory. Restaurant preferences might decay in 90 days unless refreshed by use. Phone numbers can persist until replaced. If a memory is not used, it fades. Treat it like milk in the fridge, not paper in a filing cabinet.
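One way to express "fades unless used" is a time-to-live measured from the last use rather than from creation. This sketch assumes a `timedelta` TTL per memory, with `None` meaning "persist until replaced"; `DecayingMemory` and `sweep` are illustrative names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class DecayingMemory:
    text: str
    ttl: Optional[timedelta]   # None = keep until replaced (e.g. a phone number)
    last_used: datetime

    def is_alive(self, now: datetime) -> bool:
        return self.ttl is None or now - self.last_used < self.ttl

    def touch(self, now: datetime) -> None:
        # Using a memory refreshes it, restarting its decay clock.
        self.last_used = now

def sweep(memories: list[DecayingMemory], now: datetime) -> list[DecayingMemory]:
    """Keep only memories that have not expired as of `now`."""
    return [m for m in memories if m.is_alive(now)]
```

A restaurant preference saved on day 0 with a 90-day TTL and used again on day 60 survives until day 150; an unused one is swept on day 90.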

5) Sensitive-by-design redactions

- Automatically redact credentials, financial numbers, and precise location unless a user explicitly saves them. Store those items in a separate, highly restricted vault.
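A rough sketch of default redaction, assuming simple regular expressions are an acceptable first pass. The patterns below are illustrative only and far from complete; `SENSITIVE_PATTERNS` and `redact` are hypothetical names. Raw values reach the restricted vault only when the user explicitly saves them; otherwise they are discarded.

```python
import re

# Illustrative patterns only; real coverage needs much more
# (IBANs, API tokens, street addresses, and so on).
SENSITIVE_PATTERNS = {
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "password": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
    "coordinates": re.compile(r"-?\d{1,3}\.\d{4,},\s*-?\d{1,3}\.\d{4,}"),
}

def redact(text: str, vault: list, explicit_save: bool = False) -> str:
    """Replace sensitive spans with placeholders before text enters memory.

    The raw value is copied into the separate vault only when the user
    explicitly asked to save it; otherwise it is simply dropped."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        if explicit_save:
            vault.extend((label, match) for match in pattern.findall(text))
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text
```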

6) Temporary and guest modes

- Provide a one-click temporary chat. Nothing from that chat trains general models or updates user memory. Treat it like a whiteboard that is wiped when the tab closes.

7) Memory budgets with alerts

- Show a meter for each scope that indicates how much memory is active. Let users set a cap. When the budget is full, the assistant asks what to drop to add something new.

8) Explainability in plain language

- Each time a memory is used, highlight it: "I recalled that you prefer Chicago-style citations from a note saved on March 2." Make that highlight clickable to view, edit, or forget.
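A sketch of how a reply might carry its memory highlight, assuming the interface renders a plain-language note plus view, edit, and forget links. The `/memories/...` routes are hypothetical placeholders, not a real API.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class UsedMemory:
    memory_id: str
    summary: str      # phrased for the user, e.g. "you prefer ..."
    saved_on: date

def highlight(mem: UsedMemory) -> dict:
    """Build the plain-language note and the actions attached to it."""
    when = f"{mem.saved_on:%B} {mem.saved_on.day}"   # e.g. "March 2"
    base = f"/memories/{mem.memory_id}"              # hypothetical route
    return {
        "text": f"I recalled that {mem.summary} from a note saved on {when}.",
        "actions": {"view": base, "edit": f"{base}/edit", "forget": f"{base}/forget"},
    }
```

For example, `highlight(UsedMemory("m42", "you prefer Chicago-style citations", date(2024, 3, 2)))["text"]` produces the sentence quoted above (month names assume the default C locale).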

Trustware is not a wishlist. It is a product spec that converts consent and control into the core user experience.

Distill the person, not the logs

The wrong pattern is to hoard raw transcripts. The right pattern is to distill useful signals into small, portable representations and discard the rest.

A practical approach looks like this:

- Session capture: During a chat, candidate signals are flagged, such as "prefers Chicago style," "vegetarian," "calls father Dad," or "avoid meetings before 10 a.m." The assistant proposes these as structured hints, not wall-of-text quotes.

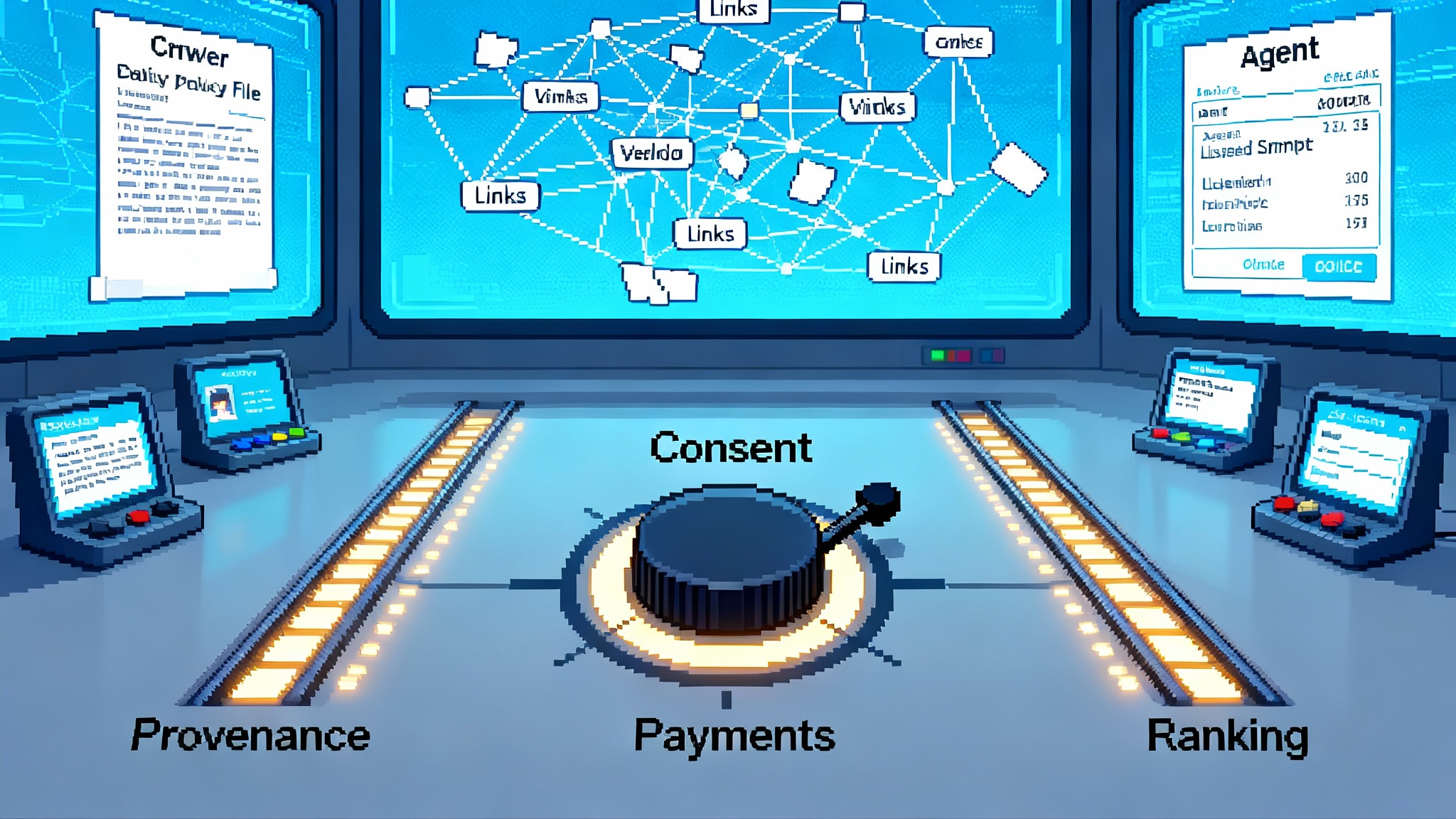

- Consent gate: The assistant proposes a memory with human-readable text, scope, and default time-to-live. The user approves, edits, changes scope, or rejects. This should map cleanly to a permissions model, echoing how the constitution of agentic AI reframes prompts as explicit rights.

- Personal adapter: Approved memories update a small, personal adapter or embedding shard, stored locally or in a personal vault. The base model stays the same. The adapter is loaded at inference to steer behavior, keeping personalization fast and reversible.

- Proof of forgetting: When a user deletes a memory, the adapter is recompiled without that signal. The system generates a receipt that includes hashes of the adapter before and after deletion. That receipt is the breadcrumb a compliance tool can follow.
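The consent-gate, adapter, and proof-of-forgetting steps can be sketched end to end with one simplifying assumption: the "adapter" here is just a canonical JSON serialization of approved memories, standing in for real trained weights such as a LoRA adapter. `PersonalAdapter` and `compile_adapter` are hypothetical names. Because compilation is deterministic, anyone holding the remaining memories can recompute the after-deletion hash.

```python
import hashlib
import json
from datetime import datetime, timezone

def compile_adapter(memories: dict[str, str]) -> bytes:
    """Toy adapter: a deterministic serialization of approved memories.
    A real system would train small weights instead."""
    return json.dumps(memories, sort_keys=True).encode("utf-8")

def adapter_hash(adapter: bytes) -> str:
    return hashlib.sha256(adapter).hexdigest()

class PersonalAdapter:
    def __init__(self) -> None:
        self.memories: dict[str, str] = {}
        self.adapter = compile_adapter(self.memories)

    def approve(self, memory_id: str, text: str) -> None:
        # Only memories that passed the consent gate reach the adapter.
        self.memories[memory_id] = text
        self.adapter = compile_adapter(self.memories)

    def forget(self, memory_id: str) -> dict:
        before = adapter_hash(self.adapter)
        del self.memories[memory_id]
        self.adapter = compile_adapter(self.memories)  # recompile without the signal
        return {
            "memory_id": memory_id,
            "deleted_at": datetime.now(timezone.utc).isoformat(),
            "adapter_hash_before": before,
            "adapter_hash_after": adapter_hash(self.adapter),
        }
```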

This delivers personalization without keeping a pile of raw logs. It also makes audits cheaper. You can answer a regulator with a chart of memory counts by scope and the last compile time of the adapter, rather than digging through transcripts.

The portability moment is coming

If memory becomes the moat, users will demand the right to move it. Portability is the pressure valve for platform power.

Imagine a Memory Cartridge file. One export and you have:

- Canonical memories in plain text with scopes, timestamps, and expiration dates.

- A signed adapter file that any compliant assistant can load.

- A deletion manifest listing the memories you have removed in the past, so a new assistant does not re-suggest them.
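A Memory Cartridge export could be as simple as one JSON document with those three parts. This sketch assumes a hypothetical format tag and uses HMAC-SHA256 as a stand-in for signing; a cross-vendor format would more likely need a public-key signature (for example Ed25519) so importers can verify without sharing a secret. `export_cartridge` is an illustrative name.

```python
import hashlib
import hmac
import json

def export_cartridge(memories: list[dict], adapter: bytes,
                     deletion_manifest: list[str], signing_key: bytes) -> str:
    """Bundle memories, a signed adapter, and a deletion manifest."""
    return json.dumps({
        "format": "memory-cartridge/0.1",     # hypothetical format tag
        "memories": memories,                 # each: text, scope, created, expires
        "adapter": {
            "data": adapter.hex(),
            # HMAC stands in for a real public-key signature here.
            "signature": hmac.new(signing_key, adapter, hashlib.sha256).hexdigest(),
        },
        "deletion_manifest": sorted(deletion_manifest),
    }, indent=2)
```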

The import flow would ask which scopes to accept, how to map them, and whether to reset expiration dates. Switching providers would feel like bringing your contacts list and keyboard dictionary to a new phone. The broader shift toward a consent economy, described in AI search flips to consent, will push assistants in the same direction.

Two things make this feasible now:

- The personal adapter can be small, so it moves quickly and can run on-device when possible.

- The industry already accepts export and deletion for photos, contacts, and files. Adding a memory export feels natural to users.

Vendors that embrace portability will court power users and businesses that fear lock-in. Vendors that resist will watch rivals market "bring your memory" onboarding that gets people to switch in an afternoon.

Forgetfulness guarantees will become table stakes

Privacy policies say you can delete your data. A forgetfulness guarantee proves it. Three building blocks can turn forgetting from a promise into a product feature.

1) Cryptographic receipts

- Each memory gets a unique identifier when created. When deleted, the system issues a signed receipt that records the identifier, deletion time, and the resulting adapter hash. Users can store receipts or share them with compliance tools.
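A sketch of issuing and checking a signed deletion receipt, assuming a server-held secret and HMAC-SHA256. With HMAC only key holders can verify, so a compliance tool that must check receipts independently would need a public-key signature instead. `SERVER_KEY`, `issue_receipt`, and `verify_receipt` are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

SERVER_KEY = b"demo-only-secret"  # hypothetical; use a managed key in practice

def _sign(body: dict, key: bytes) -> str:
    payload = json.dumps(body, sort_keys=True).encode()  # canonical form
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def issue_receipt(memory_id: str, adapter_hash_after: str, key: bytes = SERVER_KEY) -> dict:
    body = {
        "memory_id": memory_id,
        "deleted_at": datetime.now(timezone.utc).isoformat(),
        "adapter_hash": adapter_hash_after,
    }
    return {**body, "signature": _sign(body, key)}

def verify_receipt(receipt: dict, key: bytes = SERVER_KEY) -> bool:
    body = {k: v for k, v in receipt.items() if k != "signature"}
    return hmac.compare_digest(_sign(body, key), receipt["signature"])
```

Any edit to a stored receipt, such as swapping the memory identifier, makes verification fail.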

2) Machine unlearning

- For memories that have already influenced a personal adapter, a targeted unlearning pass removes their gradient contribution. You do not need to retrain the base model. You recompile or fine-tune the small personal adapter. For shared safety classifiers, batch unlearning runs on a schedule to remove accumulated personal traces.
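Gradient-based unlearning is hard to show briefly, so this sketch swaps in the simplest case where removal is exact: a toy adapter that is the mean of per-memory embedding vectors. Because the aggregate is linear, subtracting one memory's stored contribution gives the same result as recompiling without it. `AdditiveAdapter` is a hypothetical name; trained adapters generally need approximate unlearning or a full recompile.

```python
class AdditiveAdapter:
    """Toy personal adapter: the mean of per-memory embedding vectors."""

    def __init__(self, dim: int):
        self.dim = dim
        self.total = [0.0] * dim
        self.contrib: dict[str, list[float]] = {}  # kept so removal is possible

    def add(self, memory_id: str, vector: list[float]) -> None:
        self.contrib[memory_id] = list(vector)
        self.total = [t + v for t, v in zip(self.total, vector)]

    def unlearn(self, memory_id: str) -> None:
        # Subtract exactly what this memory added; no other memory is touched.
        vector = self.contrib.pop(memory_id)
        self.total = [t - v for t, v in zip(self.total, vector)]

    def steering_vector(self) -> list[float]:
        n = len(self.contrib)
        return [t / n for t in self.total] if n else [0.0] * self.dim
```

With arbitrary floats, repeated adds and subtractions can drift slightly, so a periodic full recompile is a sensible backstop.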

3) Verifiable decay

- The system publishes a public policy for time-to-live and decay curves by memory type, and exposes telemetry in the Memory Dashboard. Users can see what will expire next week and choose to refresh or let it lapse.

Put together, this is a user experience, not just a backend change. You can point to a place in the interface and say: this is how forgetting works here. The idea pairs naturally with audit trails explored in post-incident logs teach AI why.

Alignment becomes a social contract

Traditional alignment treats safety as a static checklist. Memory turns alignment into a relationship. The assistant negotiates what to keep, when to forget, and how to explain its use of that knowledge.

Social contracts have rituals. Assistants need them too:

- Ask before learning. Propose memories in plain language with a clear purpose.

- Remind and refresh. Nudge users when important memories are near expiration.

- Apologize and repair. If a memory causes harm or annoyance, acknowledge it, forget it, and prevent similar inferences.

- Stay in character. If you save a preference, act on it consistently and explain when you cannot.

This is alignment you can feel. It reduces friction, builds trust, and sets a higher bar than a static safety spec on a website.

What longer retention should mean

Some providers are extending how long chats can be referenced. That can be useful if the references are distilled and accountable. It becomes risky if retention is raw, silent, or hard to unwind.

A trustworthy approach to longer retention follows four rules:

- Preference over provenance: keep the preference, drop the transcript.

- Renewal beats hoarding: if a memory matters, a brief refresher is a better signal than an old chat log.

- Scoped search: let users search their own memories and chat history with the same controls you use, including filters for scope and date.

- Visible lineage: when the assistant references something from a prior chat, show the source snippet with a link to view or delete.

Handle retention this way and it becomes a feature, not a fear.

Product checklist: ship trustware next quarter

If you are building an assistant, here is a practical checklist with clear actions:

- Ship a Memory Dashboard with create, edit, scope, expire, export, and delete.

- Add a one-click temporary chat. No memories. No training. No history.

- Require explicit approval for first-party inferences about health, finance, or family.

- Set default time-to-live by category. Start with 90 days for general preferences.

- Compile a personal adapter on-device or in a personal vault. Never store raw logs longer than needed for support.

- Provide deletion receipts and show the before and after adapter hash.

- Build a Memory Cartridge export that other assistants can import.

- Instrument memory usage highlights in replies, with quick links to edit or forget.

- Publish a decay policy and display what will expire soon.

- Run a quarterly trust drill. Randomly sample memories and trace their creation, use, and deletion to verify the system.
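The trust drill can start as a script over an event log. This sketch assumes each event is a `(memory_id, kind, timestamp)` tuple with kind in created, used, deleted, or receipt; the log shape and the `trust_drill` name are hypothetical. It samples memories and flags lifecycles that break the rules above.

```python
import random

def trust_drill(events, sample_size: int, seed=None) -> dict[str, list[str]]:
    """Sample memories and report lifecycle violations per memory ID."""
    by_id: dict[str, list] = {}
    for memory_id, kind, ts in events:
        by_id.setdefault(memory_id, []).append((ts, kind))
    rng = random.Random(seed)
    sample = rng.sample(sorted(by_id), min(sample_size, len(by_id)))
    problems = {}
    for mid in sample:
        kinds = [kind for _, kind in sorted(by_id[mid])]  # chronological order
        issues = []
        if kinds[0] != "created":
            issues.append("no creation record before first event")
        if "deleted" in kinds:
            after = kinds[kinds.index("deleted") + 1:]
            if "used" in after:
                issues.append("used after deletion")
            if "receipt" not in after:
                issues.append("deleted without receipt")
        if issues:
            problems[mid] = issues
    return problems
```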

These are not only good ethics. They are competitive advantages that users can see in screenshots and feel in daily use.

Regulation will set the floor, not the ceiling

Regulators already expect data minimization, deletion, and portability in many jurisdictions. Memory turns those principles into specific product features. Expect three baselines to spread:

- Consent-first training for consumer assistants. Default off for using chats to improve general models, with clear opt-in and an easy path to change your mind.

- Auditable forgetting. Interfaces that make deletion observable by the user, with receipts for compliance.

- Portable personalization. A standard export that allows moving assistants without starting from scratch.

Teams that move first will shape how those baselines are written and will be better prepared when they arrive.

Strategy: measure what matters

To manage what you build, measure it. Replace vanity metrics with trust metrics:

- Memory approval rate: share of proposed memories that users accept. If it is low, your suggestions are off.

- Decay refresh rate: share of expiring memories that users renew. If it is low, your defaults are too long.

- Deletion latency: average time from delete click to receipt issuance. If it is high, your pipeline is brittle.

- Personal adapter size: target a small footprint. If it grows, you are storing noise.

- Highlight coverage: how often replies show which memory was used. If it is rare, your assistant is opaque.
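These metrics reduce to a few ratios over counters most teams already log. This sketch assumes highlight coverage is measured over replies that actually used a memory; `TrustCounts` and `trust_report` are hypothetical names.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class TrustCounts:
    proposed: int                       # memories the assistant proposed
    accepted: int                       # ...that users approved
    expiring: int                       # memories that reached expiry
    renewed: int                        # ...that users refreshed
    deletion_latencies_s: list[float]   # delete click to receipt, in seconds
    replies_using_memory: int
    replies_with_highlight: int
    adapter_bytes: int

def trust_report(c: TrustCounts) -> dict[str, float]:
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0
    return {
        "memory_approval_rate": ratio(c.accepted, c.proposed),
        "decay_refresh_rate": ratio(c.renewed, c.expiring),
        "deletion_latency_s": mean(c.deletion_latencies_s) if c.deletion_latencies_s else 0.0,
        "adapter_size_kb": c.adapter_bytes / 1024,
        "highlight_coverage": ratio(c.replies_with_highlight, c.replies_using_memory),
    }
```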

These numbers are better predictors of retention and word of mouth than model size alone.

The next 12 months: the Memory Wars

Here is what to expect in the year ahead:

- Memory as a feature tier. Free plans get saved memories with short time-to-live. Paid plans unlock longer horizons and more scopes.

- Bring-your-memory onboarding. Switching assistants prompts you to import a Memory Cartridge. Setup time drops from hours to minutes.

- Forgetfulness guarantees in marketing. Vendors advertise deletion receipts, time-decay, and unlearning, much like browsers once advertised private modes.

- Trustware badges. Independent labs certify that a product meets selection, revocation, and decay criteria. App stores display the badge.

- Personal adapters as plugins. You can load your adapter into specialized tools like code assistants or travel bots to get your preferences without sharing transcripts.

When the dust settles, the winners will be the teams that turned memory into a capability users can shape, carry, and erase.

Conclusion: teach assistants to forget on purpose

The news hook is clear. Providers are shifting to explicit opt-in training and stronger memory controls, while stretching continuity across chats. That is not just a privacy story. It is a product strategy. Memory is the next moat. The path forward is trustware: selective capture, revocation that works, time-decay by default, and personal distillation that keeps utility while shedding raw logs. Package it so people can carry their preferences to any assistant, then back it with forgetfulness guarantees you can prove.

If you build this way, you do more than check a compliance box. You make a promise that your product can keep. In a market where everyone sounds smart, the assistant that can remember the right things and forget the rest will feel human in the ways that count.