Co-Parenting With AI: The First Synthetic Mentors Arrive

California's October veto of a broad chatbot ban for minors signals a shift from shield-only rules to shared responsibility. This blueprint shows how to co-parent with AI using safeguards, audits, and school-safe defaults.

Breaking moment: from protect to co-parent

On October 13, 2025, California’s governor vetoed a bill that would have broadly restricted minors’ access to chatbots. In his veto message, he acknowledged the need for youth safeguards while warning that the proposal risked banning helpful tools for students and families. Within hours, the state also moved ahead with targeted requirements that label bots and set protocols for mental health risk. The policy signal is clear. Instead of trying to fence children off from artificial intelligence entirely, the new posture invites us to build trustworthy ways for families and schools to co-parent with it. That shift starts now, and it is more constructive than it sounds. See reporting that captured the inflection point in Sacramento when the governor vetoed a chatbot restriction bill.

Parents are not choosing between a world with or without AI companions. They are choosing between supervised, accountable mentorship and a shadow ecosystem of unsupervised apps that thrive on secrecy. The next generation will learn to read, practice languages, rehearse social scripts, and build hobbies with synthetic mentors. The question is not whether that happens. The question is whether we make it safe, measurable, and equity minded.

A developmental alignment framework

Children are not small adults. Their needs change quickly by age and context, and the tools around them should adapt just as fast. A single blanket rule produces either overreach or underprotection. We need developmental alignment, which means systems that adjust capabilities, tone, and memory based on age and setting, and that are audited like any product that touches child health.

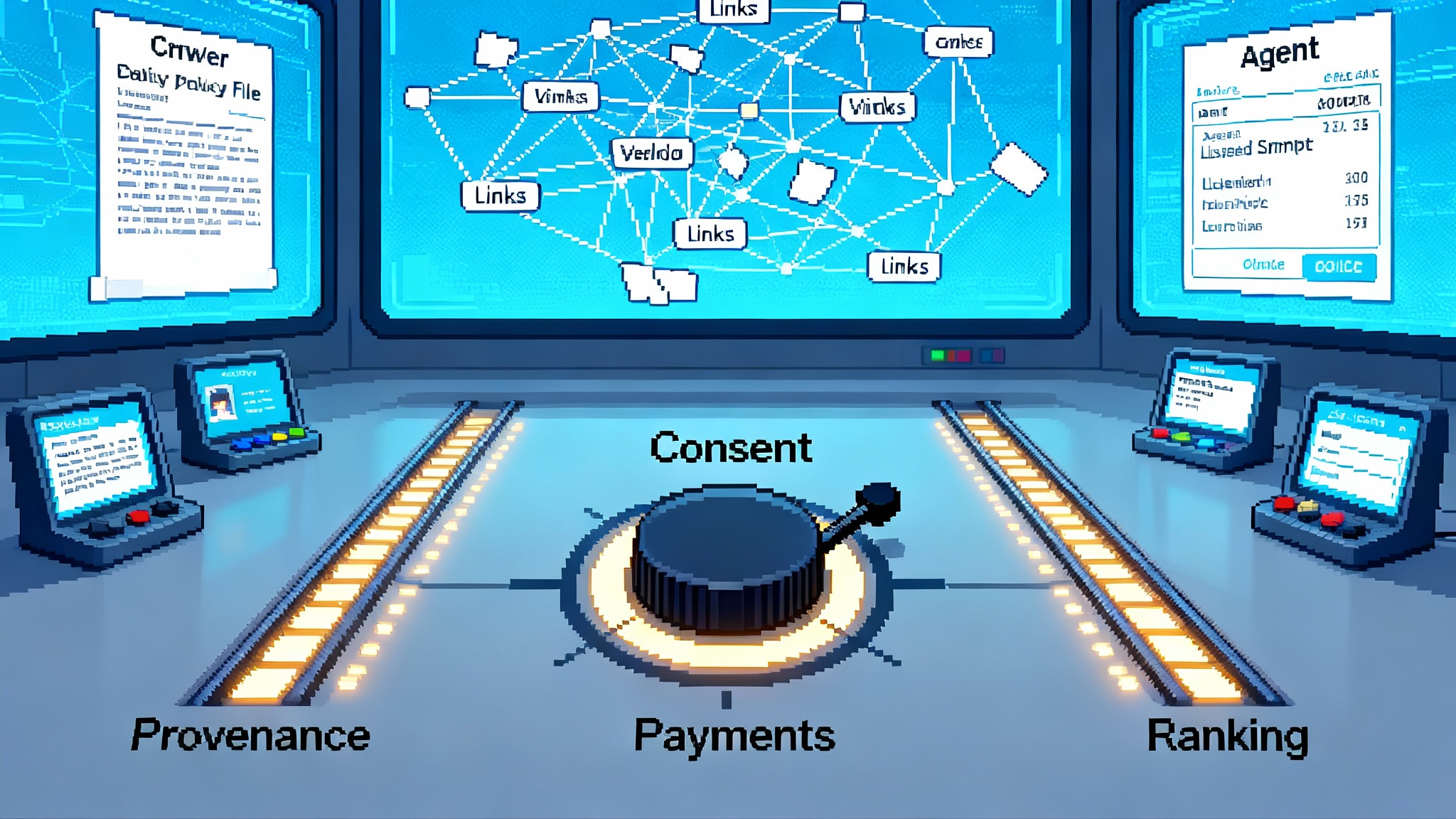

This is the framework to build toward. It leans on consent, transparency, and measurable outcomes, and it complements the case for designed forgetting outlined in our take on the opt-in memory divide.

1) Age-banded guardrails

Age bands should map to stages of cognition and social development, not just birthdays. Think in five rings: 5 and under, 6 to 8, 9 to 12, 13 to 15, and 16 to 17. In each band, define what the system can remember, what it can suggest, and how it speaks.

- For 5 and under: No persistent memory. No anthropomorphic claims. Dramatized play is fine, but with frequent transparent reminders that the voice is a tool. No bedtime conversations that extend beyond five minutes. Visual aids are allowed but without personalized recommendations.

- For 6 to 8: One day of memory limited to learning progress. No romantic or suggestive talk. The system must reject personal secrets and redirect children to trusted adults. Jokes and stories are allowed with built-in comprehension checks.

- For 9 to 12: Weekly memory tied only to academic goals and hobbies, and only with a parent co-sign. The system can simulate role-play for social practice, yet it must label role-play as practice. No late-night sessions after a device-level curfew without parent approval.

- For 13 to 15: Project planning, study strategies, and mental health hygiene prompts are allowed. The system can provide evidence-based coping skills and crisis resources while avoiding therapy claims. Memory can extend to one month for learning plans if a parent or school co-sign is present.

- For 16 to 17: College prep, career exploration, and independent scheduling are allowed. The system can discuss sensitive topics in an educational tone, with references and guardrails. It must offer privacy dashboards and encourage teens to review and edit stored memories weekly.

Every band needs a written capability profile that developers publish and update quarterly. If a model violates the profile, the incident should be reportable and visible on a public dashboard.

2) Pediatric model evaluations

Treat youth-facing chatbots like medical devices that influence behavior. Evaluate them the way we evaluate car seats or nutritional claims, with pediatricians and adolescent psychologists in the loop.

A proper pediatric evaluation has three ingredients.

- Scenario suites: Thousands of scripted dialogues emulate age-specific situations, from playground conflicts to late-night anxiety spirals. The model is graded on refusals, redirections, resource surfacing, and the consistency of educational tone.

- Benchmarks beyond toxicity: Most safety tests look for banned words. Pediatric evaluations measure developmental fit. Does the bot avoid authoritative overreach with young children. Does it avoid romantic scripts with teens. Does it redirect self-harm in fewer than five turns. Does it resist parasocial bonding by keeping the frame of mentor rather than friend.

- Human review cycles: Clinical reviewers label edge cases and adversarial prompts. Scores become a public label that looks more like a car’s crash rating than a marketing badge. Families should see a clear grade by age band, not an opaque promise of safety.

The technology sector already runs model cards and safety evaluations for adult use. Pediatric evaluations add a specialized layer and a duty to publish the results in a way parents, teachers, and regulators can read in minutes.

3) Parent and teen co-signs

Capability should unlock with consent, not simply because a birthday happens. Co-signs turn parents and teens into circuit breakers for powers that carry extra risk. If you want a blueprint for structuring permissions, we outline a rights-based approach in the prompts to permissions model.

- Memory co-sign: The assistant asks for permission to remember learning goals, health routines, or social practice notes for more than a day. Parents and teens can grant time-limited windows, like thirty days, with a visible countdown.

- Off-platform co-sign: If the assistant wants to send an email, join a video call, or browse the web, it requests permission through the phone’s family settings. Denials are educational, not scolding, with suggestions for safer alternatives.

- Sensitive-topic co-sign: For sexual health, mental health, or substance use, the system offers high-quality educational content in a neutral tone. If the teen opts for private mode, the assistant explains what it will and will not store and how crisis escalation works. Families can choose a default policy in advance.

Design matters. Requests should be rare, clear, and easy to understand. One-tap approvals with a short rationale beat long settings pages that no one reads.

4) School-safe defaults

Schools cannot manage a dozen vendor policies per classroom. They need a standard profile that vendors must honor during school hours and on school-managed devices.

- No memory outside coursework. A bot can remember your history essay outline until submission, then forget it.

- Reading-level alignment. The assistant adapts explanations to individual reading levels, with teachers able to lock a target range.

- No simulated friendships. The school profile bans companion personas, flirtation scripts, or role-play that frames the bot as a peer.

- No ads. No data sharing beyond what is required for service delivery and safety.

Procurement can enforce this. Districts can ask for a signed declaration that a school profile exists and passes pediatric evaluation grades for the relevant age bands.

Measurable safeguards, not vibes

Safeguards must be testable. The following tools keep everyone honest and create a shared language across vendors, parents, and regulators. For a deeper look at how post-incident evidence raises system quality, see our argument for post-incident logs teach AI.

- Incident taxonomies: Vendors classify events by severity. S1 means risk of immediate self-harm, S2 is coercion or explicit content, S3 is privacy leakage, S4 is policy drift. Timelines for response are set in hours for S1 and S2, days for S3, weeks for S4. Families should be able to file an incident from the chat screen, and schools should have their own channel.

- Open auditing windows: Twice a year, vendors open supervised test access so independent research teams can run adversarial prompts. Findings are published in a fixed template and include model version numbers, prompt strategies, and reproducible scripts. Companies can blur proprietary weights, but not the outcomes.

- Telemetry you control: Families opt into anonymous telemetry that measures refusal rates, escalation behavior, and age-band compliance. Opt out must be just as easy as opt in. Without this, we cannot tell whether policy changes improve safety or just change tone.

- Red team bounties for youth harm: Rewards focus on developmental failures, not only on generic jailbreaks. A report that shows parasocial boundary violations for 12-year-olds should be as valuable as a classic prompt leak.

These practices make safety a living process. They also create a common checklist for policymakers who want to set targets without dictating code.

What the California pivot actually means

California’s veto did not bless a free for all. It acknowledged that a total lockout would sweep up tutors, language partners, and special education supports along with risky companion bots. At the same time, the state adopted rules that put guardrails on companionship and mental health touchpoints. The administration’s summary described labeling requirements, crisis protocols, and disclosure standards for companion chatbots. Read the official statement on the day’s signings in the governor’s office release on new safeguards for companion chatbots.

Policy debates will continue. Advocacy groups argued that anything short of a ban leaves children exposed. Industry groups argued that any guardrail could throttle innovation. A developmental lens cuts through that stalemate. We should not blindfold teenagers to college prep tools because a different product mishandles parasocial intimacy with 12-year-olds. We should not allow a companion persona to claim therapeutic authority because it is good at empathy. Clear roles, tested by age, beat blanket rules.

Design patterns that make or break trust

Families and schools can ask for these concrete patterns now. Vendors can ship them in months, not years.

- Mentor voice, not friend voice: The assistant uses professional warmth, not intimacy. It compliments effort, not appearance. It declines pet names. It never says it loves the user. If the child asks for friendship, it explains what it is and what it is not.

- Refusal choreography: When a bot refuses a request, it must offer two safe alternatives and a brief why. Example: I cannot role-play a romantic scene, but we can analyze character motivations in the text, or we can write parallel scenes about communication.

- Constrained creativity: For younger bands, creative tasks use scaffolds that prevent the system from producing violent or sexual content even if the child copy-pastes something risky.

- Crisis escalation grammar: The assistant recognizes ideation patterns and moves fast. It surfaces human help, provides scripted grounding exercises, and encourages contacting a trusted adult. It avoids pathologizing language and never claims to diagnose.

These are not mere style choices. They reduce exposure, limit compulsive engagement, and encourage healthy boundaries. They also make audits easier because reviewers can test for specific behaviors.

What companies should commit to in the next six months

- Publish age-banded capability profiles with version numbers and model identifiers. Include a change log and a template for incident filing.

- Release pediatric evaluation results with scenario lists and scores. If a category scores poorly, state a fix timeline.

- Ship co-sign mechanics in software development kits so third-party app makers can respect family controls without reinventing them.

- Offer a school-safe profile as a single toggle during setup. Support classroom identities that avoid persistent personal memory.

- Stand up a public incident dashboard that shows counts by severity, mean time to response, and fixes shipped.

None of these require a breakthrough in machine learning. They are product and policy choices. Companies that move first will earn trust and set the baseline.

What policymakers can do without breaking innovation

- Require public pediatric evaluation labels for youth-accessible chatbots and define a minimum acceptable grade by age band for use in schools.

- Mandate incident taxonomies and timelines for youth harm, with civil penalties when companies fail to disclose material issues.

- Fund independent auditing windows that small companies can opt into without exhausting their budgets. Pair funding with safe harbor protections when companies report issues promptly and fix them.

- Standardize family control APIs across operating systems so parents do not chase settings across ten apps.

These steps focus on outcomes and transparency rather than prescriptive code. They give smaller developers a path to compliance and give parents something to read besides glossy marketing claims.

Avoiding the shadow system

When rules are too blunt, families route around them. We have seen this with encrypted messaging, device side-loading, and age checks that collapse into checkbox rituals. If an official ecosystem makes all AI companionship off limits, kids will find imitation bots on unlocked web portals and in offshore apps. Those systems will learn the worst habits because their business model rewards fixation and secrecy.

Supervised companionship can crowd out that market. If a tenth-grade student can get a school-safe mentor that helps with calculus, interview practice, and social skills, she is less likely to hunt for a flirt bot after midnight. If a parent can see a weekly summary of topics and memory holdings, trust rises while fear cools.

A practical checklist for parents and schools this month

- Choose a bot with age-banded profiles and a visible incident history. If the vendor will not show you either, keep looking.

- Enable family pairing. Approve only the specific capabilities you want, like memory or web browsing, and set time windows for those approvals.

- Turn on weekly summaries. Talk through highlights with your child and ask the bot to show what it forgot after the session ended.

- For schools, require the school-safe profile in procurement. Insist on a live demo of refusal choreography and crisis escalation before signing.

Progress here looks like fewer surprises and fewer secret conversations, not zero risk. Aim for informed, fast feedback loops.

Measuring the public good

The case for supervised AI companionship is not philosophical. It is measurable.

- Learning lift: Track reading levels, math fluency, and language gains by age band and compare class cohorts with and without school-safe mentors.

- Crisis diversion: Count escalations to human help and follow-up outcomes while protecting privacy. If a system reliably surfaces crisis resources earlier and more effectively, that is a public health win.

- Equity: Measure access by device type and bandwidth. Offer offline or low-data modes so rural and low-income students benefit.

- Time discipline: Monitor daily session length and time of day. A well-designed mentor should produce shorter, higher-value sessions and reduce late-night use.

If those numbers move in the right direction and incidents fall, co-parenting with AI becomes more than a slogan. It becomes an evidence-backed addition to family life and classroom practice.

The first generation raised by synthetic mentors

The idea of a child confiding in a synthetic mentor can feel uncanny. Yet children already confide in tools. They talk to stuffed animals, practice speeches in mirrors, and rehearse conversations in notebooks. A good mentor bot is a better mirror. It reflects effort, not ego. It helps a child slow down and try again. It remembers the hard parts, with permission, and forgets what it should.

California’s moment in October did not settle the argument, but it gave us a blueprint. Bans flatten nuance. Building developmental alignment, co-signs, and school-safe defaults creates real agency for parents and students. Regulators can demand incident reporting and open audits that let the public see whether we are keeping promises.

The future is already in our homes. The choice is whether to parent it, or to let it raise our kids in secret. If we act with specificity and a bias for measurable safeguards, the first generation raised alongside synthetic mentors can be the most supported, not the most experimented on. That is the work in front of us, and it begins with the settings we ship and the transparency we require.