Zendesk’s Resolution Platform Turns Bots Into Operators

Zendesk’s Resolution Platform reframes support around outcomes with knowledge graph grounding, guardrails, and safe tool access. Learn how it works and use a 30-60-90 plan to launch fast without losing trust.

Support Moves From Replies To Real Resolutions

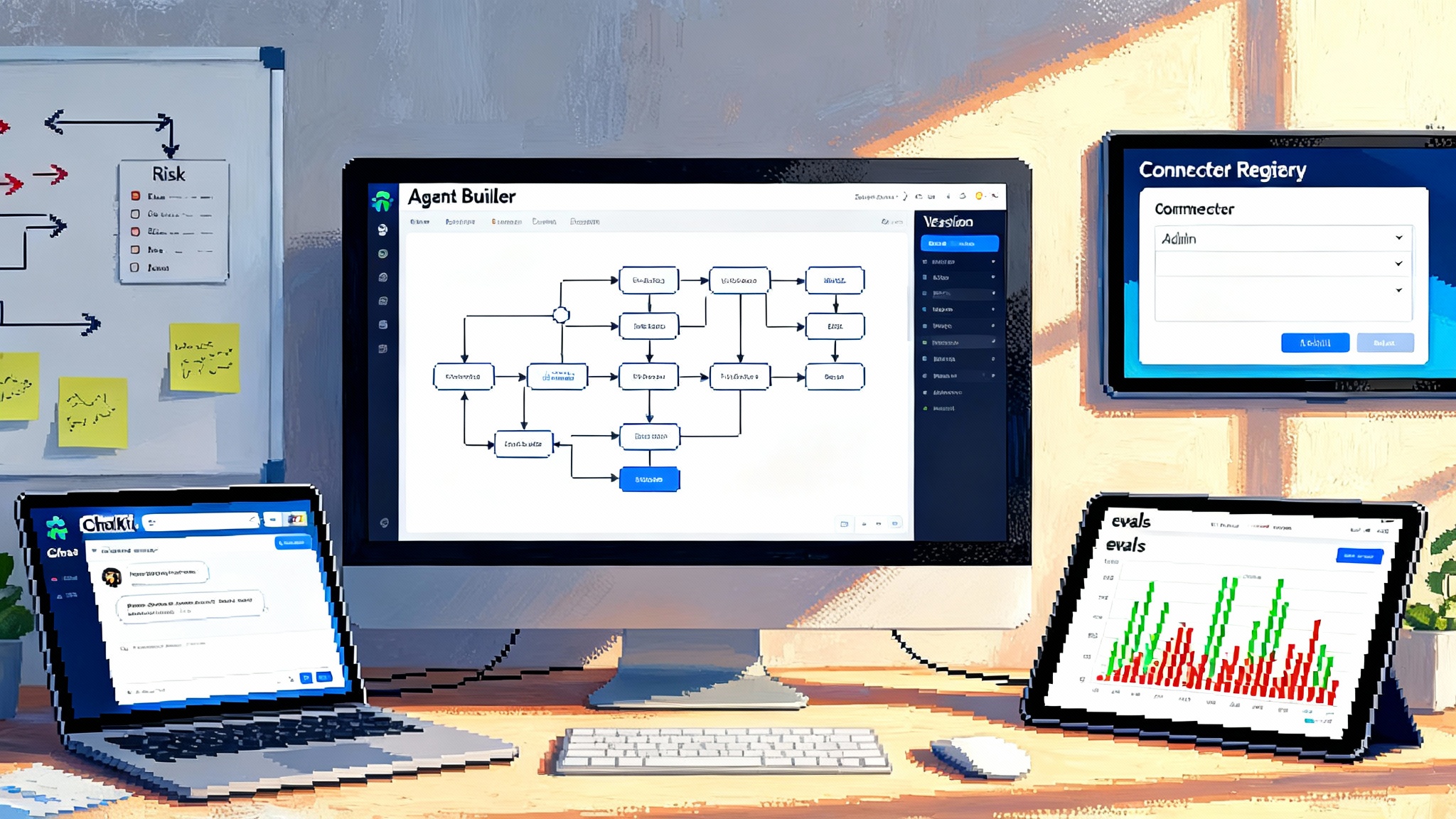

Zendesk just reframed what a support bot can be. At Relate in March 2025, the company introduced the Resolution Platform, a service stack built around one metric that matters to customers and operators alike: did the problem get fixed? The platform includes agent builders, a service knowledge graph, a catalog of actions and integrations, and governance with measurement. Zendesk lays out the scope and ambition in its official Resolution Platform launch announcement.

Why this matters: the past decade gave us scripted chatbots and narrow flows. They answered questions, but they rarely closed the loop. The new wave is resolution-first. Instead of asking for a ticket number and routing you to a queue, these agents fetch the order, verify eligibility, trigger a refund, confirm by email, and post a note to the account. They behave less like a help center search box and more like a junior operator with a checklist, a radio, and the keys to a few doors.

For teams already building production agents, this shift will feel familiar. We have seen it in platforms designed to ship real outcomes, such as the approach outlined in AgentKit turns demo bots into shippable agents, and in runtime battles like AWS AgentCore runtime wars. Zendesk’s move brings that pattern to a customer support giant.

Case Study: From Scripts To Shippable Operators

Think of a common retail scenario. A customer writes, “My shoes arrived damaged. I want an exchange.” A scripted bot would offer a link to return instructions, maybe ask for a photo, then escalate. A resolution-first agent does more:

- It grounds itself in the service knowledge graph. It knows what “damaged on arrival” means in policy, the exchange window, what counts as proof, and which shipping codes apply.
- It runs through guardrails. The agent checks it has the right customer, verifies order and payment, and confirms that exchanges are allowed for this SKU and region. If any precondition fails, it explains the issue and escalates with context.
- It uses tool access. The agent calls Create Return Label, Issue Exchange Order, and Send Confirmation. Each tool has scoped permissions, inputs, and expected outputs. The agent waits for success or a structured error, then adapts.
- It closes the loop across channels. If the conversation started in chat, the agent might finish by sending an email with the label and a text message reminder with the drop-off deadline.

That end-to-end pattern is the difference between a bot that chats and an operator that ships.

What Changed Under The Hood

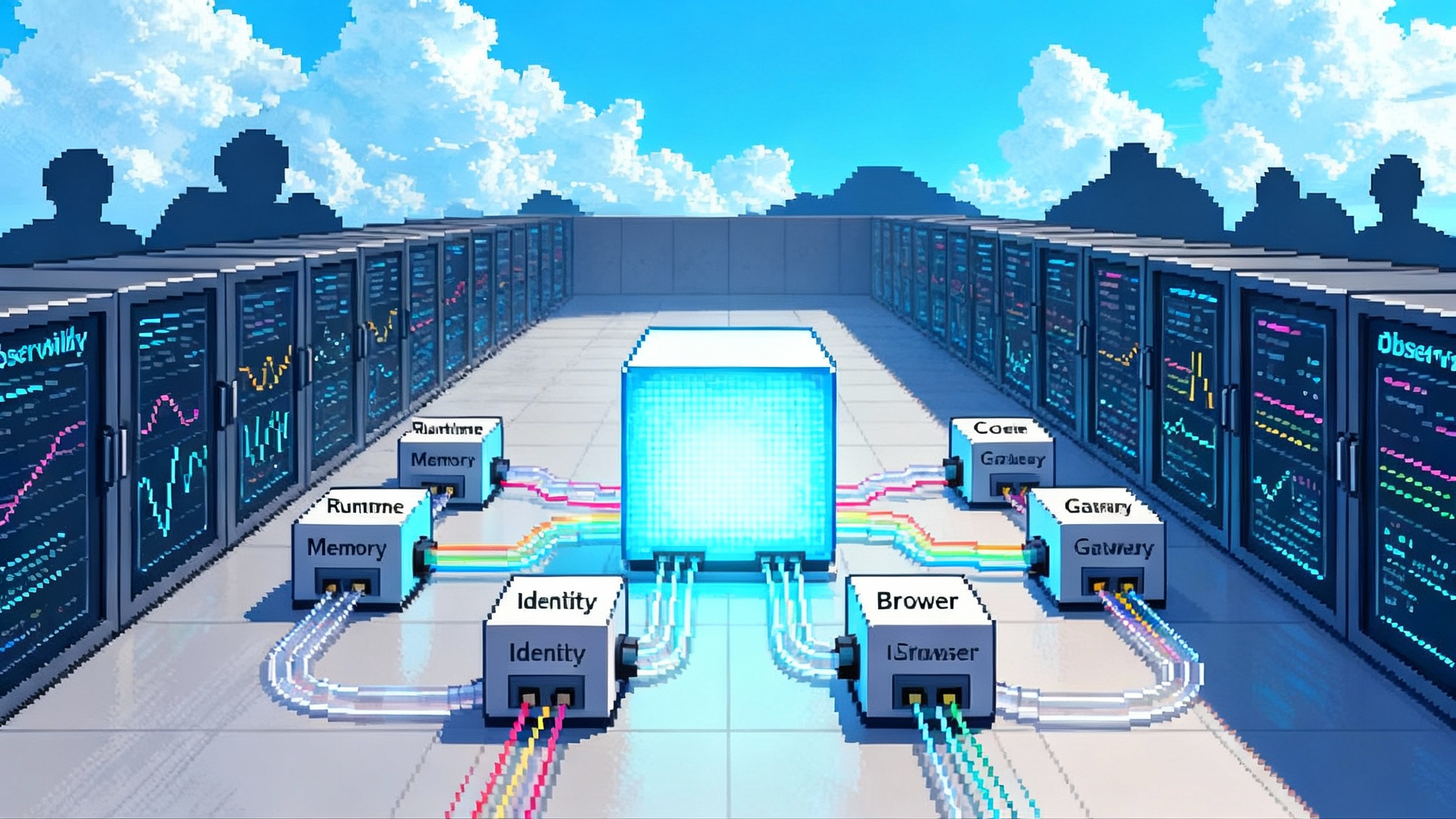

Resolution-first agents are not a single model. They are a stack with four critical layers.

-

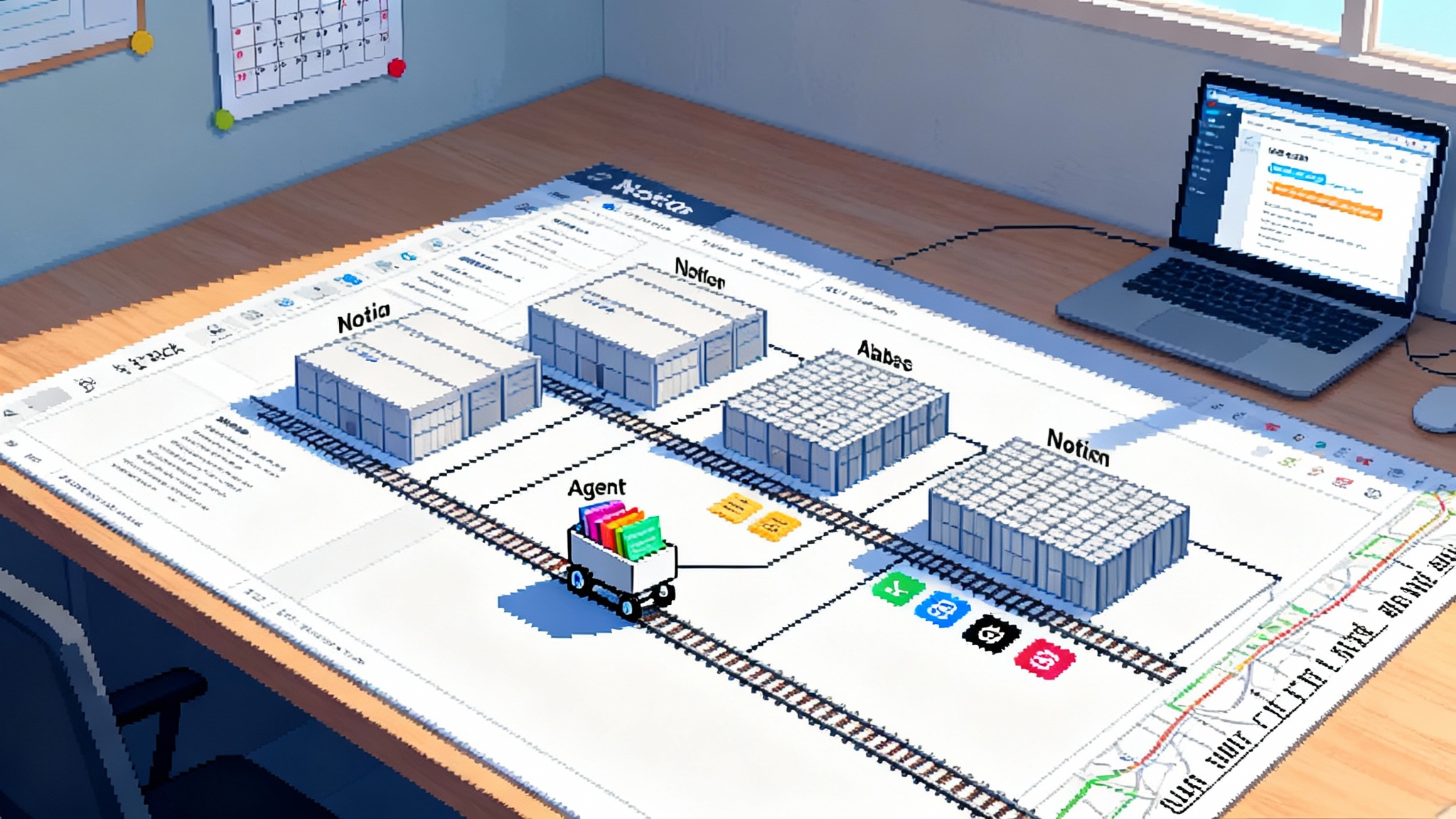

Knowledge graph grounding. A unified map of your service world. Policies, product data, account status, and past tickets are connected into a graph so the agent can reason with context instead of hallucinating. In practice, this looks like connecting help center articles, order systems, and policy tables with consistent identifiers and freshness guarantees.

-

Guardrails and governance. A policy engine that enforces what the agent may do, when, and for whom. Guardrails include identity checks, rate limits, eligibility rules, and safety filters. Governance adds approval steps, audit trails, and rollbacks so risky actions are gated and explainable.

-

Tool access and actions. A catalog of callable procedures with clear contracts. Tools can be simple functions like Reset Password or composed workflows like Rebook Flight and Issue Voucher. The platform tracks success and error rates per tool, so failing actions are pulled from the agent’s repertoire until fixed.

-

Multi channel orchestration. The same agent brain should handle email, chat, web, and voice. Voice, in particular, benefits from a real time loop that blends speech recognition, dialog planning, and action execution.
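The idea of pulling failing actions from the agent's repertoire can be sketched in a few lines of Python. This is an illustration only, not Zendesk's implementation: the `ToolCatalog` class, the tool names, and both thresholds are assumptions made for the example.

```python
from collections import defaultdict


class ToolCatalog:
    """Tracks per-tool outcomes and hides tools whose success rate drops too low."""

    def __init__(self, min_success_rate: float = 0.9, min_calls: int = 20) -> None:
        # Both thresholds are illustrative assumptions, not platform defaults.
        self.min_success_rate = min_success_rate
        self.min_calls = min_calls
        self._calls = defaultdict(int)
        self._successes = defaultdict(int)

    def record(self, tool: str, ok: bool) -> None:
        self._calls[tool] += 1
        if ok:
            self._successes[tool] += 1

    def success_rate(self, tool: str) -> float:
        calls = self._calls[tool]
        return self._successes[tool] / calls if calls else 1.0

    def quarantined(self, tool: str) -> bool:
        # Require a minimum sample so one early failure does not pull a tool.
        if self._calls[tool] < self.min_calls:
            return False
        return self.success_rate(tool) < self.min_success_rate

    def available(self, tools: list[str]) -> list[str]:
        return [t for t in tools if not self.quarantined(t)]


# Hypothetical tool names standing in for real integrations.
catalog = ToolCatalog()
for i in range(20):
    catalog.record("reset_password", ok=True)
    catalog.record("issue_voucher", ok=(i % 4 != 0))  # fails 5 of 20 calls

print(catalog.available(["reset_password", "issue_voucher"]))
```

In this run `issue_voucher` succeeds on 15 of 20 calls, falls below the assumed 90 percent bar, and drops out of the available list until someone fixes it and resets its counters.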

Zendesk’s public statements add color. In October 2025, coverage highlighted a new autonomous agent tier and a claim that it could resolve a large share of issues on its own. See TechCrunch on the 80 percent claim. Treat that number as directional rather than guaranteed, but the signal is clear: the ceiling for automated resolution is moving much higher.

The Anatomy Of A Resolution First Interaction

To make this concrete, here is what a real interaction looks like when all four layers work together.

- Intent and constraint discovery. The agent extracts the user’s goal and any limitations. Here the goal is to exchange damaged shoes, and the constraints include size availability and the return window.
- Eligibility check. A guardrail query confirms purchase date, shipping address, and return policy applicability.
- Plan with tools. The agent constructs a plan such as: request photo, create return label, create replacement order, confirm address, send confirmation.
- Execute with feedback. It calls tools in order, watching for structured errors like “SKU out of stock in size 9.” If stock is missing, it offers alternatives like a refund or an alternate color.
- Close the loop. It posts a transcript and events to the customer record, sends confirmation, and schedules a follow-up if the return is not scanned within 7 days.
- Measure. The interaction is labeled as Auto Resolved, Assisted, or Escalated, with reasons captured for the next model update or policy tweak.

The key is that the agent does not wing it. It stays grounded in company policy, executes only allowed actions, and records what happened.
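The steps above can be sketched as a small plan-and-execute loop. This is a minimal Python illustration under stated assumptions, not the platform's API: `ToolResult`, `Interaction`, `run_plan`, the tool functions, and the `OUT_OF_STOCK` error code are all hypothetical. For brevity the sketch escalates on the first structured error, where a fuller agent would first offer alternatives such as a refund.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ToolResult:
    ok: bool
    error_code: Optional[str] = None  # structured error, e.g. "OUT_OF_STOCK"


@dataclass
class Interaction:
    intent: str
    events: list = field(default_factory=list)  # audit trail for the customer record
    outcome: str = "Escalated"                  # or "Auto Resolved"
    reason: Optional[str] = None


def run_plan(intent: str, eligible: bool,
             plan: list[tuple[str, Callable[[], ToolResult]]]) -> Interaction:
    """Run tools in order; stop and escalate on the first structured error."""
    record = Interaction(intent=intent)
    if not eligible:  # the eligibility guardrail runs before any tool call
        record.reason = "NOT_ELIGIBLE"
        return record
    for name, tool in plan:
        result = tool()
        record.events.append((name, result.ok, result.error_code))
        if not result.ok:
            record.reason = result.error_code
            return record
    record.outcome = "Auto Resolved"
    return record


# Hypothetical tools standing in for real integrations.
def create_return_label() -> ToolResult:
    return ToolResult(ok=True)


def create_replacement_order() -> ToolResult:
    return ToolResult(ok=False, error_code="OUT_OF_STOCK")


demo = run_plan(
    "exchange_damaged_item",
    eligible=True,
    plan=[("create_return_label", create_return_label),
          ("create_replacement_order", create_replacement_order)],
)
print(demo.outcome, demo.reason)
```

Every tool call lands in `events` whether it succeeds or fails, which is what makes the final label and its reason auditable later.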

Why Knowledge Graphs Beat Static Knowledge Bases

A classic knowledge base is unstructured or loosely structured text. Useful for humans, brittle for machines. A service knowledge graph adds structure and relationships. Practical advantages include:

- Real-time eligibility. You can answer “Can this user get a same-day exchange?” by joining policy nodes to order and inventory nodes.
- Versioned policy. The graph can represent policy changes over time, so the agent applies the correct rules based on purchase date and region.
- Unified identity. Tickets, chats, calls, and emails tie back to the same person entity. That keeps the agent from requesting information it already has.
- Safer tool calls. Precondition nodes encode what must be true before a tool runs. The agent checks them before acting.

If you do one thing in data prep, make it this: define the small set of entities and relationships the agent needs for your top 10 intents. Keep it tight, accurate, and fresh.
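Versioned policy and precondition checks are easier to picture with a small example. The sketch below assumes a flat list of policy versions rather than a real graph store. The `PolicyVersion` fields, the regions, and the window lengths are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class PolicyVersion:
    region: str
    effective_from: date
    exchange_window_days: int


# Hypothetical policy history; real values would come from the policy source.
POLICIES = [
    PolicyVersion("US", date(2024, 1, 1), 30),
    PolicyVersion("US", date(2025, 1, 1), 45),
    PolicyVersion("EU", date(2024, 1, 1), 14),
]


def policy_for(region: str, purchase_date: date) -> Optional[PolicyVersion]:
    """Return the latest policy version in effect on the purchase date."""
    candidates = [p for p in POLICIES
                  if p.region == region and p.effective_from <= purchase_date]
    return max(candidates, key=lambda p: p.effective_from, default=None)


def exchange_eligible(region: str, purchase_date: date, today: date) -> bool:
    """Precondition check: is the order still inside its exchange window?"""
    policy = policy_for(region, purchase_date)
    if policy is None:
        return False  # no applicable policy: fail closed
    return (today - purchase_date).days <= policy.exchange_window_days


# An order from December 2024 is judged by the 30-day rule, not the later 45-day one.
print(exchange_eligible("US", date(2024, 12, 20), date(2025, 1, 25)))
```

The lookup keys on the purchase date rather than today's date, so a policy change never retroactively alters what an earlier customer was promised.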

Guardrails That Matter In Production

Guardrails are not one big filter. They are layered checks that keep the agent safe and on brand without turning it into a brick.

- Scope guardrails. The agent cannot issue refunds over a dollar threshold or change shipping addresses after fulfillment without approval.
- Identity guardrails. The agent must verify the user with a one-time code or device fingerprint before disclosing personal data.
- Policy guardrails. The agent can only apply price adjustments if the policy for that region and channel allows it.
- Language guardrails. The agent must use approved phrases for regulatory disclosures and store consent events.
- Escalation guardrails. If the plan involves two or more high-risk actions, the agent moves into assisted mode and calls a human.
- Change guardrails. After a major policy update or a new tool release, the agent runs in shadow mode with extra monitoring for a set period before full rollout.

Well-designed guardrails feel like lane-keeping assist, not a brick wall. They keep you on the road and nudge you back when needed.
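One way to picture layered checks is as an ordered list of small functions, each of which can pass, block, or request approval. The sketch below is a hypothetical Python illustration covering three of the guardrail types above. The `ActionRequest` fields, the verdict strings, and the 100 dollar limit are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ActionRequest:
    action: str
    amount: float
    identity_verified: bool
    high_risk_actions_in_plan: int


# A guardrail returns None to pass, or a verdict string to stop the action.
Guardrail = Callable[[ActionRequest], Optional[str]]

REFUND_LIMIT = 100.0  # hypothetical dollar threshold


def identity_guardrail(req: ActionRequest) -> Optional[str]:
    return None if req.identity_verified else "deny:identity_not_verified"


def scope_guardrail(req: ActionRequest) -> Optional[str]:
    if req.action == "issue_refund" and req.amount > REFUND_LIMIT:
        return "needs_approval:refund_over_limit"
    return None


def escalation_guardrail(req: ActionRequest) -> Optional[str]:
    if req.high_risk_actions_in_plan >= 2:
        return "needs_approval:multiple_high_risk_actions"
    return None


# Order matters: identity is checked before anything else.
GUARDRAILS: list[Guardrail] = [identity_guardrail, scope_guardrail, escalation_guardrail]


def evaluate(req: ActionRequest) -> str:
    """Return the first non-passing verdict, or "allow" if every layer passes."""
    for check in GUARDRAILS:
        verdict = check(req)
        if verdict is not None:
            return verdict
    return "allow"


print(evaluate(ActionRequest("issue_refund", 250.0, True, 1)))
```

Because each layer is a separate function, a new rule can be added or tested in shadow mode without touching the others.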

Tool Access That Unlocks Outcomes

Actions and integrations are where chat turns into shipping labels, vouchers, resets, and rebookings. Four design choices help:

- Small, composable tools. Prefer Issue Refund and Create Return Label over a single Handle Return mega tool. Composability improves reuse and observability.
- Contracts with strong typing. Every tool gets a schema for inputs, outputs, and error codes. The agent learns predictable patterns and recovers better when something fails.
- Idempotency and retries. Make actions safe to retry. If a call times out, a second attempt should not issue a duplicate refund.
- Telemetry by default. Log latency, success rate, and rollback events per tool and version. Use this to keep a high-quality toolbox and quarantine flaky tools.

The aim is not to give the agent every power. It is to give it just enough safe, reliable powers to finish common jobs without help.
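A typed contract and an idempotency key together look roughly like the sketch below. It uses an in-memory stand-in for a refund service. `RefundTool`, the request and result fields, and the key format are assumptions for illustration, not a real payment API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefundRequest:
    order_id: str
    amount_cents: int
    idempotency_key: str  # same key means the same logical request


@dataclass(frozen=True)
class RefundResult:
    refund_id: str
    amount_cents: int
    replayed: bool  # True when a stored result was returned


class RefundTool:
    """In-memory stand-in for a refund service (hypothetical)."""

    def __init__(self) -> None:
        self._results: dict[str, RefundResult] = {}
        self.refunds_issued = 0

    def issue_refund(self, req: RefundRequest) -> RefundResult:
        if req.amount_cents <= 0:
            raise ValueError("INVALID_AMOUNT")  # structured error code
        previous = self._results.get(req.idempotency_key)
        if previous is not None:
            # Retry of a request already processed: do not refund again.
            return RefundResult(previous.refund_id, previous.amount_cents, replayed=True)
        self.refunds_issued += 1
        result = RefundResult(f"rf_{self.refunds_issued}", req.amount_cents, replayed=False)
        self._results[req.idempotency_key] = result
        return result


tool = RefundTool()
request = RefundRequest("order-123", 2500, idempotency_key="order-123:refund:1")
first = tool.issue_refund(request)
retry = tool.issue_refund(request)  # e.g. after a client-side timeout
print(first.refund_id == retry.refund_id, tool.refunds_issued)
```

The retry returns the original refund rather than creating a second one, so the agent can safely repeat a call whose response it never received.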

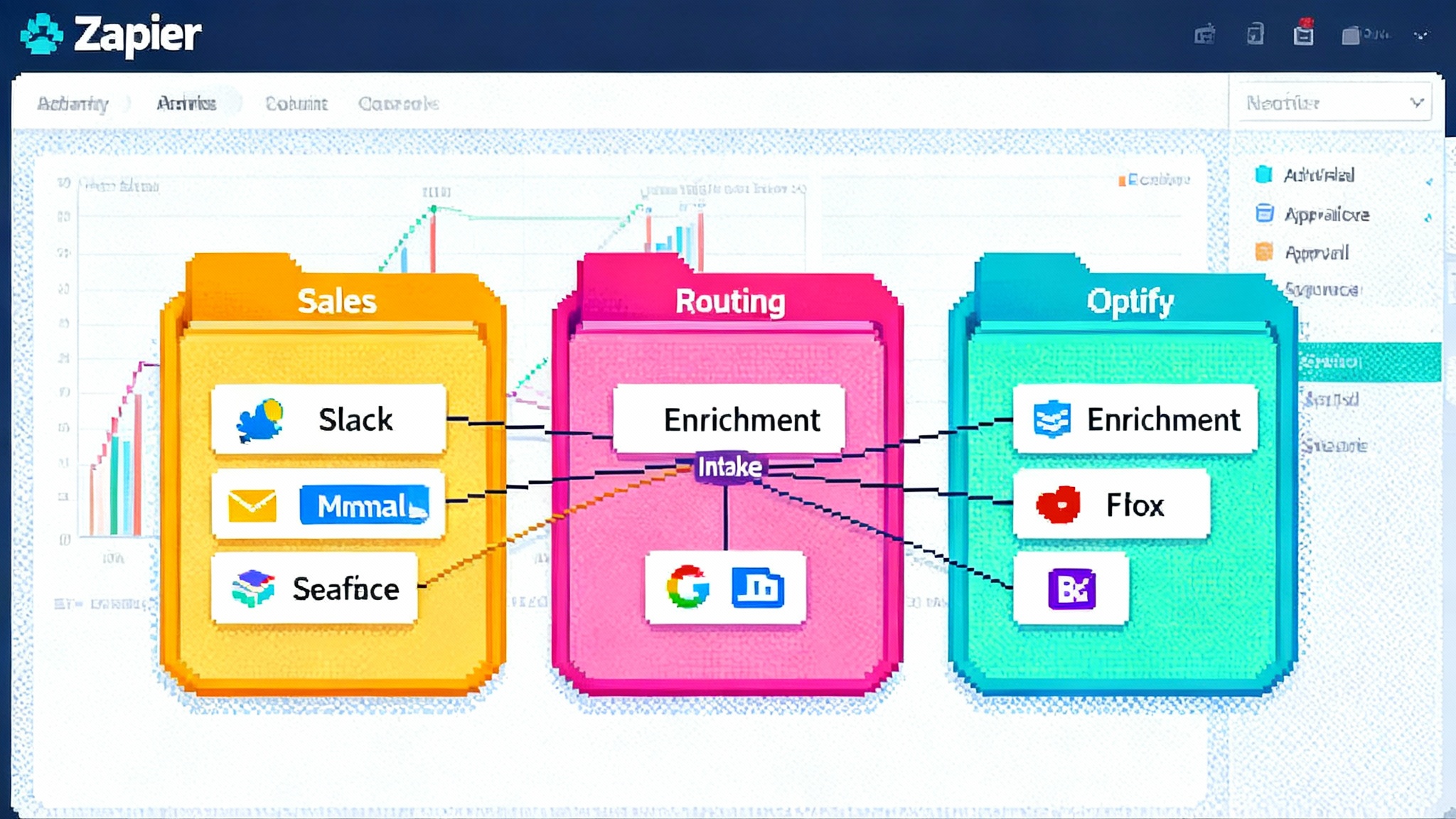

Multi Channel Without Silos

Customers bounce between channels: email for a long explanation, chat for a quick follow-up, voice when it gets urgent. A resolution-first agent should carry the same plan across channels and keep state synchronized.

- Email. The agent writes long-form replies, cites policy, and proposes options. It should insert structured confirm buttons or links that translate back into tool calls.
- Chat and web. The agent keeps a short loop and uses snippets for disclosures and confirmations. It moves fast and references the same case state.
- Voice. Real-time constraints matter. Keep utterances brief, confirm critical actions verbally, and send a summary by text or email immediately after the call.

Voice is improving quickly across the industry. For a deeper playbook on voice-centric rollouts, see the Salesforce voice native playbook.

Getting multi-channel right is a trust multiplier. It makes automated help feel coordinated rather than fragmented.

A Pragmatic 30 60 90 Day Rollout Plan

Here is a rollout plan teams can use to ship fast without breaking trust.

Day 0 To 30: Pick The First Five Resolutions

- Select five high-volume, low-to-medium-risk intents. Use a simple score: volume times financial impact divided by policy complexity.
- Draft the mini knowledge graph. Define entities like Order, Product, Customer, Policy, and Inventory. Map relationships and data freshness rules.
- Inventory required tools. For each intent, list the smallest set of actions needed. Document preconditions, inputs, outputs, and error codes.
- Write guardrails. Codify dollar limits, verification methods, and channel-specific rules for these intents.
- Prepare human review flows. Decide when the agent must ask a person to approve or take over. Create one-click approval tasks with all context prefilled.
- Define your three north star metrics. See the KPI section below and pick the measures that matter for your business model.
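The intent score from the first step, volume times financial impact divided by policy complexity, takes only a few lines to compute. In the sketch below the intent names, the numbers, and the 1 to 5 complexity scale are made up for illustration. Pick whatever units your own data supports, as long as they are consistent across intents.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    name: str
    monthly_volume: int
    financial_impact: float  # e.g. average handling cost per ticket, in dollars
    policy_complexity: int   # assumed scale: 1 (simple) to 5 (many exceptions)


def priority_score(intent: Intent) -> float:
    """Volume times financial impact, divided by policy complexity."""
    if intent.policy_complexity < 1:
        raise ValueError("policy_complexity must be at least 1")
    return intent.monthly_volume * intent.financial_impact / intent.policy_complexity


# Hypothetical candidates; replace with numbers from your own ticket data.
candidates = [
    Intent("billing_dispute", 600, 25.0, 5),
    Intent("order_status", 4000, 3.0, 1),
    Intent("damaged_item_exchange", 1200, 12.0, 3),
]

ranked = sorted(candidates, key=priority_score, reverse=True)
for intent in ranked:
    print(f"{intent.name}: {priority_score(intent):.0f}")
```

With these sample numbers, the simple but frequent `order_status` intent outranks the costlier `billing_dispute`, because complexity divides the score.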

Day 31 To 60: Ship To A Small Cohort With A Human In The Loop

- Pilot in one region and one language. Limit to a known set of customers or a single product line.
- Run the agent in assisted mode. The agent proposes plans and actions. Humans review and approve in one click. Track what you override.
- Build an error taxonomy. For every failed resolution, label the reason: missing tool, policy mismatch, poor grounding, external outage, or customer withdrawal. Use this to prioritize fixes.
- Close the content gaps. Use past tickets to create or update policy articles and step-by-step guides. Remove obsolete content that creates conflicting signals.
- Red team weekly. Have a separate crew try to trick the agent into breaking policy. Log every attempted exploit and the outcome.
- Tune thresholds. Set stricter confirmation prompts for high-risk steps and simpler ones for low-risk actions.

Day 61 To 90: Graduate To Partial Autonomy And Add One New Channel

- Turn on full autonomy for the top two intents if assisted mode approval rates are consistently above 95 percent with low error rates.
- Add a second channel. If you started in email, add web chat. If you started in chat, add voice for the same two intents.
- Instrument everything. For each autonomous run, record tools used, time to outcome, and whether a human was needed.
- Publish your safety rules. Tell customers what the agent can and cannot do, how identity is verified, and how to reach a person immediately.
- Review business impact. Compare handle time, cost per resolution, and satisfaction before and after launch. Decide what to add next based on measured lift.
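The autonomy gate in the first step can be written down as an explicit check so the decision is repeatable. The sketch below treats "consistently" as every one of the last three weeks and "low error rates" as at most 2 percent. Both are assumptions, as are the `WeekStats` fields. Only the 95 percent approval bar comes from the plan itself.

```python
from dataclasses import dataclass


@dataclass
class WeekStats:
    proposed: int  # plans the agent proposed in assisted mode
    approved: int  # plans a human approved without changes
    errors: int    # approved plans that later failed or were rolled back


def ready_for_autonomy(weeks: list[WeekStats],
                       min_approval: float = 0.95,
                       max_error: float = 0.02,  # assumed error ceiling
                       min_weeks: int = 3) -> bool:
    """True only if each of the most recent weeks clears both thresholds."""
    if len(weeks) < min_weeks:
        return False
    for week in weeks[-min_weeks:]:
        if week.proposed == 0 or week.approved == 0:
            return False  # not enough evidence to judge
        if week.approved / week.proposed <= min_approval:
            return False  # approval must be strictly above the bar
        if week.errors / week.approved > max_error:
            return False
    return True


history = [
    WeekStats(proposed=200, approved=194, errors=2),
    WeekStats(proposed=220, approved=215, errors=1),
    WeekStats(proposed=210, approved=204, errors=3),
]
print(ready_for_autonomy(history))
```

Requiring several consecutive weeks guards against flipping an intent to full autonomy on the strength of one unusually good week.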

For teams building their own stacks, the patterns above align well with builder-oriented approaches like those in AgentKit turns demo bots into shippable agents. If you are choosing a runtime, the competitive dynamics covered in AWS AgentCore runtime wars will influence vendor choices and integration tactics.

KPIs That Predict Trust And Throughput

Pick a small set, measure them precisely, and tie incentives to them.

- Resolution rate by mode. Percent of conversations that end in a resolved outcome, split into Auto, Assisted, and Human only. Trend this weekly.
- Time to resolution. Median and 90th percentile time from first customer message to resolution. Include waits for approvals and external systems.
- Containment with satisfaction. Share of interactions that did not need a human and also scored positive satisfaction. Both must be true.
- Action success rate. For each tool, measure success and rollback rates. Retire or fix tools that fall below targets.
- Safety incidents per 10,000 actions. Count policy violations, identity failures, or unapproved actions. Treat any increase as a severity one alert.
- Escalation quality. When the agent hands off, does the human have the full plan, context, and artifacts? Measure average time for the human to close.
- Automation debt. The ratio of issues the agent flags as blocked by missing tool or content to total opportunities. Use this to fund platform work.
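Three of these KPIs can be computed directly from labeled conversations, as in the sketch below. The `Conversation` record and its fields are assumptions. The sketch also assumes one reading of "resolution rate by mode": each mode's resolved conversations as a share of all conversations, so the three values add up to the overall resolution rate.

```python
from dataclasses import dataclass

MODES = ("auto", "assisted", "human")


@dataclass
class Conversation:
    mode: str        # one of MODES
    resolved: bool
    satisfied: bool  # positive satisfaction score


def resolution_rate_by_mode(convs: list[Conversation]) -> dict[str, float]:
    """Share of all conversations that ended resolved, split by mode."""
    total = len(convs)
    if total == 0:
        return {mode: 0.0 for mode in MODES}
    return {
        mode: sum(1 for c in convs if c.mode == mode and c.resolved) / total
        for mode in MODES
    }


def containment_with_satisfaction(convs: list[Conversation]) -> float:
    """Share of conversations that needed no human AND scored positive."""
    if not convs:
        return 0.0
    hits = sum(1 for c in convs if c.mode == "auto" and c.satisfied)
    return hits / len(convs)


def safety_incidents_per_10k(incidents: int, actions: int) -> float:
    return 10_000 * incidents / actions if actions else 0.0


# Hypothetical sample of labeled conversations.
sample = [
    Conversation("auto", True, True),
    Conversation("auto", True, False),
    Conversation("auto", False, False),
    Conversation("assisted", True, True),
    Conversation("human", True, True),
]
print(resolution_rate_by_mode(sample))
print(containment_with_satisfaction(sample))
print(safety_incidents_per_10k(incidents=3, actions=60_000))
```

In the sample, three of five conversations stayed with the agent but only one of those also scored positive, which is why containment with satisfaction is the stricter number to watch.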

Team Structure And Playbooks

Small, cross-functional crews beat large committees in the first 90 days.

- Agent lead. Owns the intents and quality bar. Writes the agent’s instructions and reviews transcripts.
- Data mapper. Builds the mini knowledge graph and keeps entity definitions clean.
- Toolsmith. Designs and maintains the action catalog with strong contracts and telemetry.
- Safety owner. Designs guardrails, runs red teaming, and handles incident response.
- Channel lead. Adapts prompts and interaction patterns for email, chat, and voice.

Create two playbooks from day one: a rollback playbook that explains how to disable a tool or revert autonomy in minutes, and a communications playbook that says what to tell customers if something goes wrong.

Common Failure Modes And How To Avoid Them

- Conflicting policies. Two articles say different things. Fix by making policy sources canonical and versioned.
- Overbroad tools. A single tool does ten things with many hidden branches. Split it into smaller tools with clear guards.
- Latency spikes. Tool calls time out and the agent rambles. Add timeouts, retries, and short fallback messages that keep the user informed.
- Ghost escalations. The agent quietly gives up and hands off with little context. Force every escalation to include intent, plan, and evidence.
- Silent drift. A model update subtly changes behavior. Use canary traffic and behavior diffing to catch regressions early.
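The latency-spike fix above, retries plus a short fallback message, can be sketched as a small wrapper. The function name, the fallback wording, and the backoff values below are assumptions. The wrapper also assumes the tool is idempotent and signals a timeout by raising Python's built-in `TimeoutError`.

```python
import time
from typing import Callable, Tuple

FALLBACK_MESSAGE = "This is taking longer than expected. I will follow up by email."


def call_with_retries(tool: Callable[[], str], attempts: int = 3,
                      base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> Tuple[bool, str]:
    """Call a tool, retrying on TimeoutError with exponential backoff.

    Returns (True, result) on success, or (False, fallback message) once
    every attempt has timed out. The tool must be safe to retry.
    """
    for attempt in range(attempts):
        try:
            return True, tool()
        except TimeoutError:
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return False, FALLBACK_MESSAGE


# Hypothetical flaky tool: times out twice, then succeeds.
calls = {"count": 0}


def create_label() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("label service timed out")
    return "label_created"


delays: list[float] = []
ok, message = call_with_retries(create_label, sleep=delays.append)  # record waits instead of sleeping
print(ok, message, delays)
```

Passing `sleep` as a parameter keeps the example fast and testable. In production the default `time.sleep` applies, and the fallback message gives the customer a clear next step instead of a stalled conversation.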

How To Explain Automation Without Losing Trust

Transparency earns patience. Practical steps:

- Label the agent clearly and offer a one-click way to reach a person at any time.
- State capabilities and limits in plain language. For example: “I can exchange items and issue refunds up to 100 dollars. For larger refunds I will bring in a person.”
- Confirm key actions explicitly. Before charging a card, changing an address, or canceling a service, ask for an explicit “Yes.”
- Send a post-action receipt. Summarize what happened, who authorized it, and how to undo it.
- Invite feedback. Ask one question after resolution: “Did this solve your problem?” Treat “No” as a safety alert.

The Near Future: Voice Becomes Practical, Email Stays Powerful

Voice agents have crossed the line from demo to production. They now handle identity, interruptions, and tool calls well enough for common tasks. The trick is choosing the right use cases. Delivery rescheduling, password resets, and simple billing questions work well. Complex exceptions still need a human. For implementation patterns and risk controls, the deep dive in Salesforce voice native playbook pairs well with Zendesk’s channel orchestration.

Email remains a quiet powerhouse. Long-form replies are good for policy-heavy answers. With strong templates and grounded retrieval, an email agent can safely resolve a large share of inbound requests without feeling robotic.

The Bottom Line

Zendesk’s Resolution Platform is a clear signal. The era of scripted bots is ending, and the era of shippable, resolution-first operators has begun. The winning pattern combines three ideas: ground the agent in a real service knowledge graph, wrap it in practical guardrails, and give it just enough tool access to finish the job. Ship in 90 days, start with five intents, measure what matters, and expand only when both resolution rate and safety are improving together. Do that, and your support bot will stop sounding helpful and start being helpful in the one way customers recognize immediately: their problem gets fixed.