Gemini Enterprise makes agent stores the new app stores

On October 9, 2025, Google introduced Gemini Enterprise, a governed platform for building, buying, and running workplace AI agents. Here is what changed, why it matters, and a 100 day plan to ship three agents to production.

Breaking: Google puts governed agents at the front door of work

On October 9, 2025, Google announced a new front door for everyday work. The company launched Gemini Enterprise, a platform that elevates approved artificial intelligence agents from chat helpers to first class operators. It gives technology leaders one governed surface to build, buy, run, and monitor agents across the enterprise. This is not another chat window. It is a control plane for automation that learns from your systems, follows your policies, and shows its work. Google’s message with the launch is clear: agent stores are the new app stores.

What exactly launched

Three capabilities define the launch and explain why it is bigger than a feature drop.

- Curated agent marketplace. Think of it as an internal app store for agents that can read documents, call business systems, and take steps on your behalf. You can browse Google built agents, partner built agents, and internal agents in one place. Access is role based, approvals are built in, and every action is auditable.

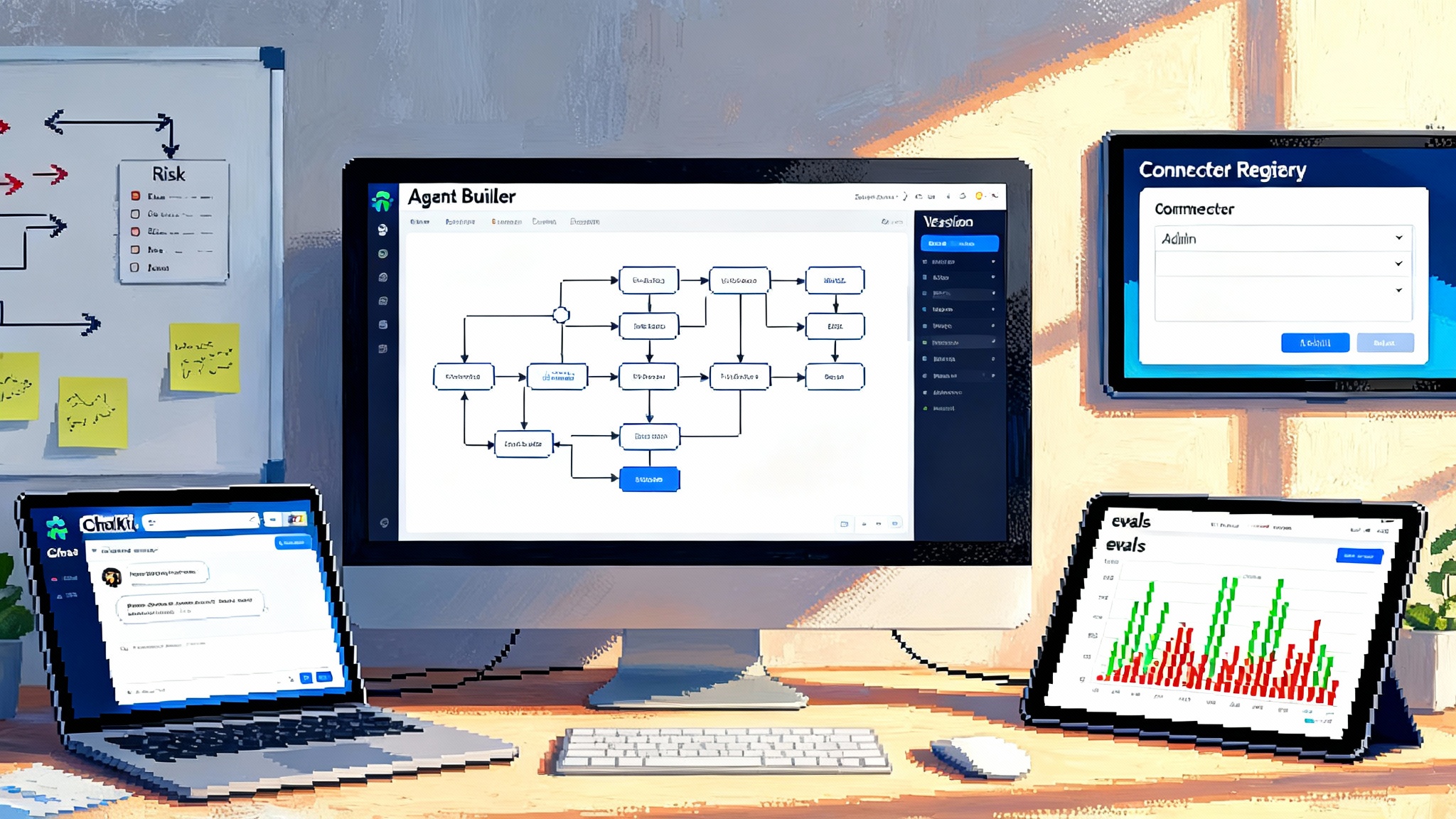

- No code Agent Designer. Business teams can turn repeatable know how into shippable agents without writing code. A product manager can assemble a research agent that reads market notes and competitor filings and ships a weekly brief. A finance analyst can build an invoice triage agent that flags exceptions and drafts emails to vendors. The point is speed with supervision.

- Optional on premises runtime. Some organizations cannot let data leave their walls. Gemini Enterprise accommodates that constraint with Google Distributed Cloud so you can run models and agents in your own data centers and still benefit from a common governance layer.

Google’s own documentation, under the heading AI Agents for Gemini Enterprise, describes the catalog, partner validation, and Agent Designer as parts of one governed surface. Taken together, these pieces turn scattered experiments into a standard way to put agents into production.

Why this is a strategic shift, not just a shiny UI

For two years most enterprise AI lived in chat. That was useful for drafts, summaries, and coding help, but it created a pattern of fragmented tools and unmanaged connectors. Gemini Enterprise formalizes an alternative. Agents are first class, identity aware, and policy aware. They can be discovered like apps, approved like vendors, and observed like services.

The next wave is not a better prompt. It is a workflow that finishes the job. An agent that can read a purchase order, look up pricing in a planning system, check budget in finance, draft an approval note, and send it for sign off is more valuable than a clever chat assistant. The platform shift is from talk to task.

The architecture in plain language

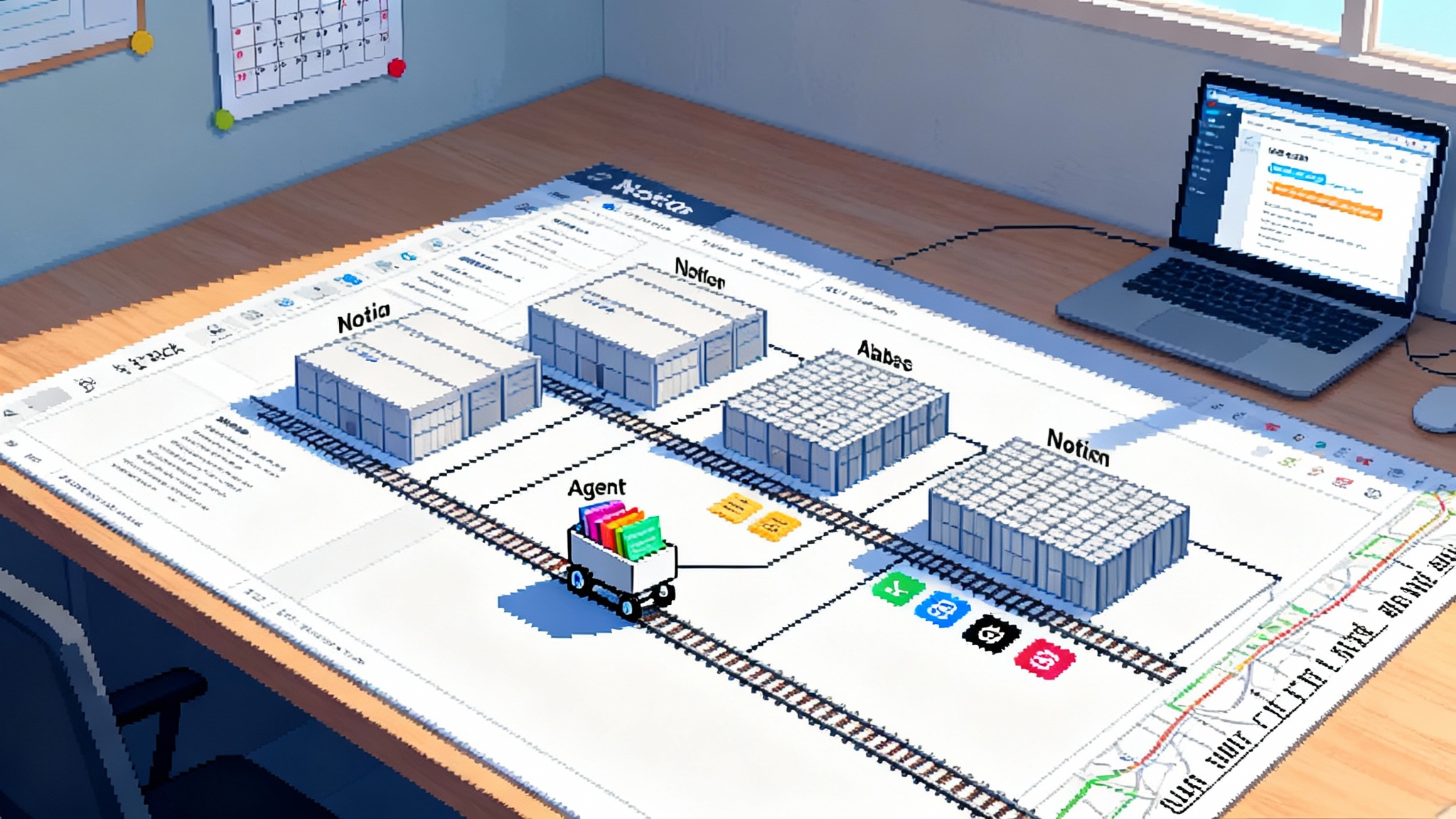

A simple mental model helps separate the marketing from the operating model.

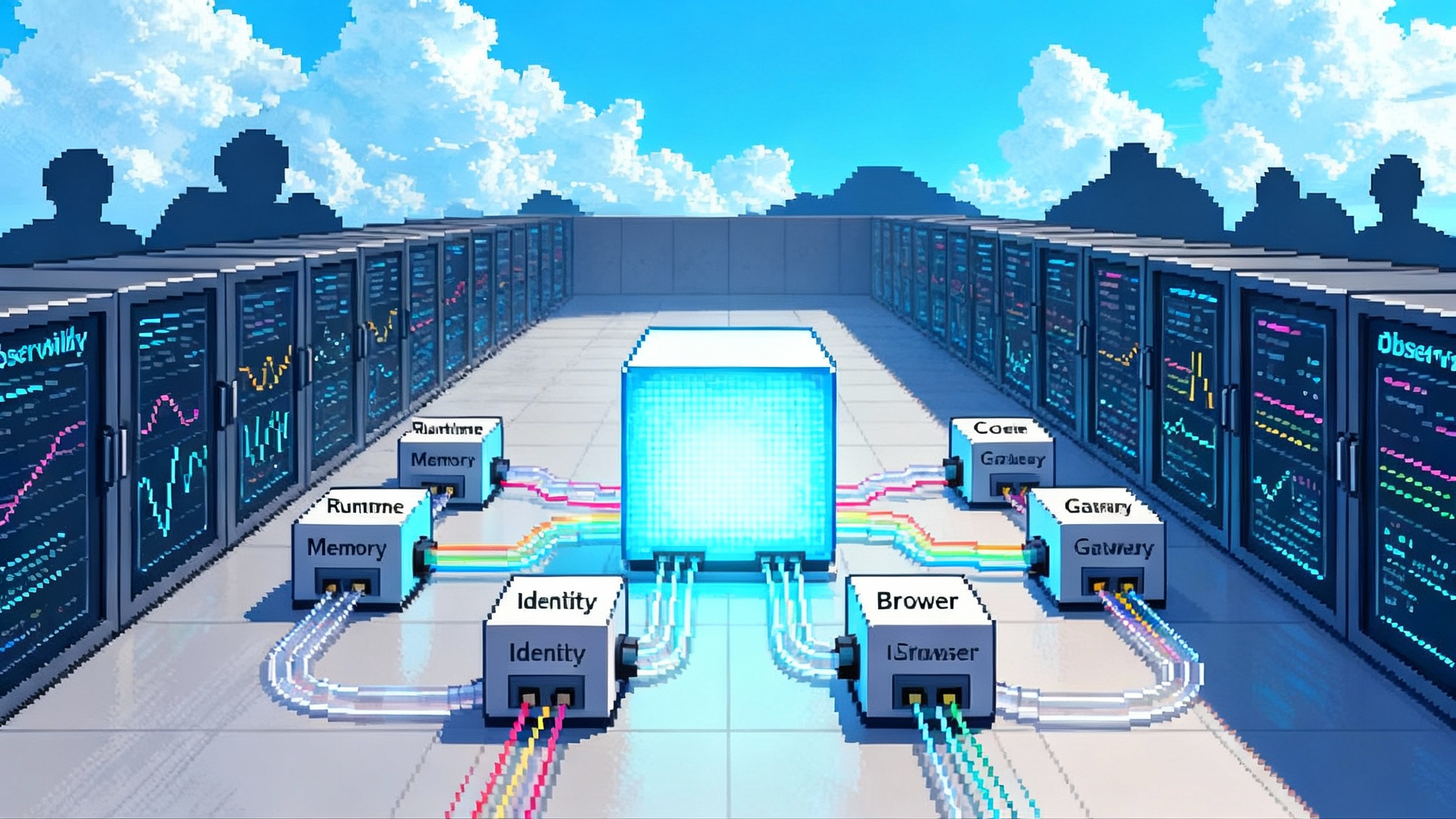

- Control plane for agents. A single inventory of agents with who can use them, what data they can touch, and how they are monitored. Think mobile device management for automation.

- Connectors as plumbing. Agents reach into data lakes, software as a service tools, and on premises applications through managed connectors. Those connectors carry policies, masking rules, and logs.

- Guardrails as traffic rules. Before an agent runs, policy checks apply. During execution, tools limit where it can go. After execution, reports show what happened and where data moved.

- Evaluation as inspections. Every new or updated agent must pass safety and quality tests. You can measure accuracy, latency, cost per task, and failure modes on real samples before rollout.

- Optional on premises runtime. The same agent definition can run in a Google Distributed Cloud cluster for workloads that must stay inside your facility.

This reduces the risk of shadow automation while improving time to value. It gives chief information officers one lever to pull rather than dozens of unmanaged widgets.
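
The control plane and guardrail ideas above can be sketched in a few lines. This is a minimal illustration, not Gemini Enterprise's API: `AgentRecord`, `call_tool`, and the `invoice-triage` entry are hypothetical names, and the rule that one shared group is enough for access is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    """One entry in a hypothetical agent inventory (not a real platform type)."""
    name: str
    allowed_groups: set[str]   # who can use the agent
    allowed_tools: set[str]    # what the agent may call
    audit_log: list[dict] = field(default_factory=list)


def call_tool(agent: AgentRecord, user_groups: set[str], tool: str) -> bool:
    """Check the caller and the tool, then log the outcome either way."""
    # Before the run: the caller must share at least one group with the agent.
    # During the run: the tool must be on the agent's allow list.
    permitted = bool(agent.allowed_groups & user_groups) and tool in agent.allowed_tools
    # After the run (or refusal): the log records what happened.
    agent.audit_log.append({"agent": agent.name, "tool": tool, "permitted": permitted})
    return permitted


invoice_agent = AgentRecord(
    name="invoice-triage",
    allowed_groups={"finance"},
    allowed_tools={"read_invoice", "draft_email"},
)
```

Refused calls are logged as well as permitted ones, because a security team usually cares most about the attempts that were blocked.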

How the enterprise stack changes

- Identity and access become primary controls. Agents inherit user identity and role or operate as service identities with narrow scopes. Single sign on and step up authentication apply to high risk actions.

- Observability shifts left. Every agent produces structured traces, inputs, outputs, and tool call metadata. Security teams get hooks for detection and response. Product teams get dashboards for quality and cost.

- Data governance moves into design. Retrieval rules, redaction policies, and data use declarations are specified when the agent is defined. You stop arguing about access in a ticket queue and start encoding the decision in templates.

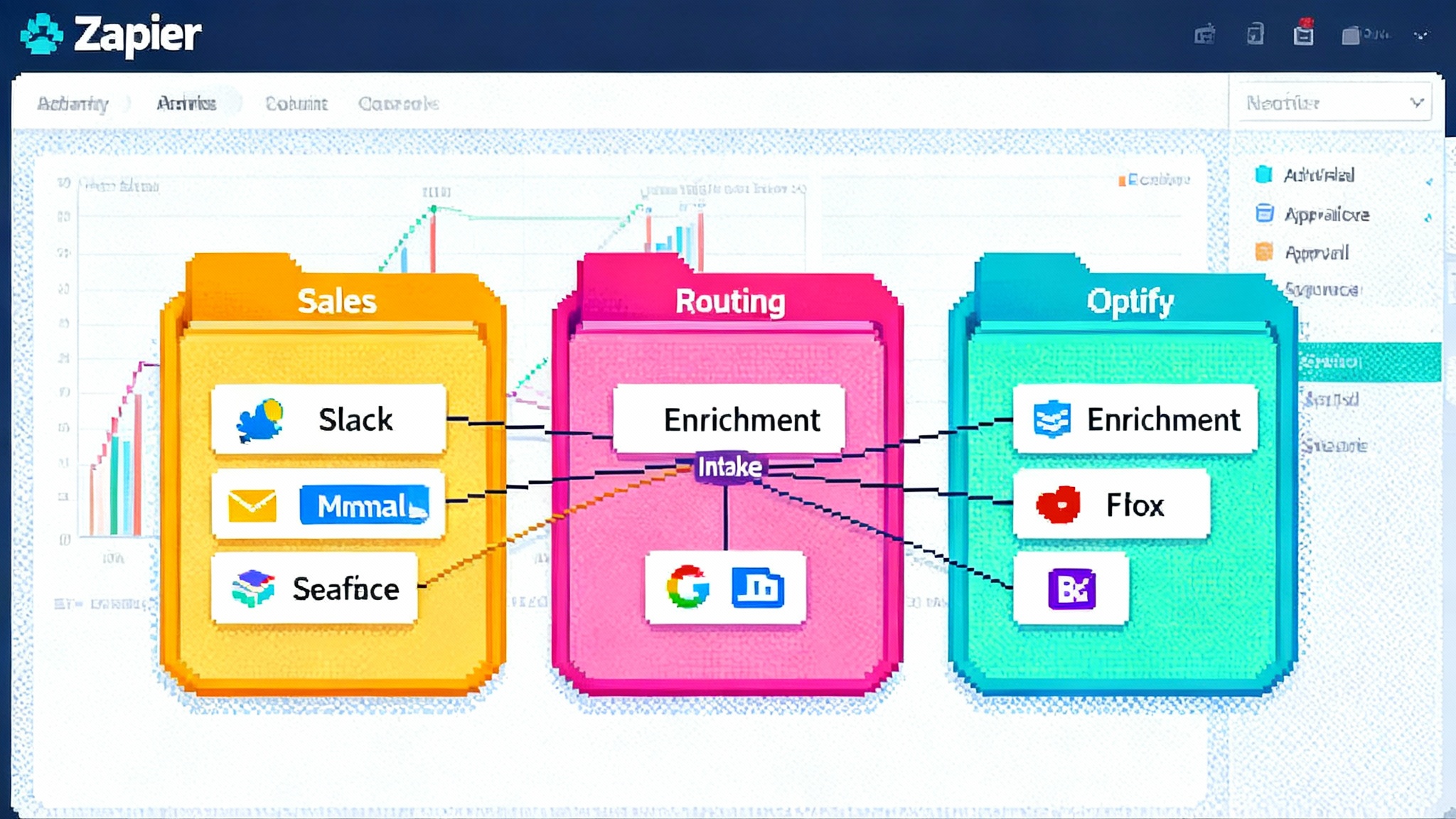

- Procurement looks like marketplace operations. Instead of signing ten pilots, buyers subscribe to validated partner agents that meet enterprise criteria, then measure adoption and outcomes like any other software as a service product.

If you want a deeper backdrop on why agent platforms are converging, see our analysis of the start of agent runtime wars and how AgentKit turns demo bots into shippable agents.

The three immediate plays for CIOs

1) Consolidate shadow bots into governed agents

- What to do: Inventory every chatbot and scripting tool that touches sensitive data. Prioritize agents with strong adoption and clear owners such as sales briefing assistants, support summarizers, and code copilots.

- Why: Unmanaged chatbots leak data, duplicate costs, and give inconsistent answers. Centralizing them into a platform with identity, logging, and policy reduces risk and spend.

- How: Migrate the top five by usage into Gemini Enterprise. Recreate them in Agent Designer or import them as custom agents where feasible. Replace one off connectors with managed ones. Enforce retention policies and restrict model access by group.

2) Ship domain specific agents fast

- What to do: Choose three bread and butter workflows where an agent can finish a task, not just summarize it. Ideal candidates are invoice exception handling, contract clause extraction and routing, and first line service desk triage.

- Why: Business value comes from cycle time and accuracy gains on routine work. These workflows are repetitive, measurable, and easy to review.

- How: Use Agent Designer to assemble each agent from a repeatable template. Add retrieval from approved sources, define the allowed tools, and set thresholds for confidence. Wire approvals into email or ticketing systems. Require human in the loop for the first two releases, then dial up autonomy as metrics allow.

3) Plug into the partner ecosystem

- What to do: Identify two partner built agents that map to existing platforms, such as an automation agent that triggers robotic process automation or a data insights agent that reads your warehouse and drafts executive summaries.

- Why: You get a head start without building from scratch and you keep vendor count low by using validated agents that meet Google’s governance checks.

- How: Source them through the curated catalog. Review the validation badges. Run your standard security questionnaire once and apply the decision across the enterprise with the same policy package.

For a customer service angle on governance in action, see how Zendesk turns bots into operators.

A pragmatic 100 day plan to put three agents into production

You can prove value fast with a staged plan that is tight on scope and heavy on measurement.

Days 0 to 15: set the foundation

- Name an executive sponsor and a product owner. The sponsor unblocks decisions. The product owner writes the backlog and owns outcomes.

- Stand up a sandbox environment. Enable single sign on, configure logging to a security information and event management tool, and define data regions.

- Pick three target workflows with clear owners and data access. Define success as numbers, not adjectives, such as 30 percent faster invoice cycle time or 20 percent fewer tier one tickets.
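
Targets like these reduce to a percent change against a baseline. Here is a small sketch of that arithmetic. The function names and sample figures are illustrative assumptions, and the metric is assumed to be one where lower is better, such as cycle time or ticket count.

```python
def improvement_pct(baseline: float, observed: float) -> float:
    """Percent reduction relative to the baseline (positive means lower or faster)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - observed) * 100.0 / baseline


def meets_target(baseline: float, observed: float, target_pct: float) -> bool:
    """True when the observed value improves on the baseline by at least target_pct."""
    return improvement_pct(baseline, observed) >= target_pct


# Hypothetical figures: invoice cycle time drops from 10 days to 7 days.
invoice_ok = meets_target(baseline=10.0, observed=7.0, target_pct=30.0)
# Hypothetical figures: tier one tickets drop from 500 to 400 per week.
tickets_ok = meets_target(baseline=500.0, observed=400.0, target_pct=20.0)
```

Writing the target as a function before the pilot starts makes it harder to move the goalposts afterward.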

Days 16 to 30: governance and design

- Draft a lightweight agent policy. Specify data sources allowed, redaction rules by field, retention periods, and an approval process for new tools.

- Create a test harness. Build a small set of real documents and tickets with ground truth answers. You will use this to evaluate quality and cost on every change.

- Sketch the three agents. For each, list the inputs, the steps, the tools called, and the expected outputs. Decide the level of autonomy at launch and where human in the loop applies.
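
A lightweight agent policy can live as data rather than as a document. The sketch below shows one possible shape. `AgentPolicy`, the field names, and the sample values are hypothetical, and a real deployment would express the same rules in whatever policy format the platform provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical policy record: allowed sources, redaction by field, retention."""
    allowed_sources: frozenset
    redacted_fields: frozenset
    retention_days: int


def source_allowed(source: str, policy: AgentPolicy) -> bool:
    """An agent may only retrieve from sources the policy names explicitly."""
    return source in policy.allowed_sources


def redact(record: dict, policy: AgentPolicy) -> dict:
    """Return a copy of the record with policy-listed fields masked."""
    return {
        key: "[REDACTED]" if key in policy.redacted_fields else value
        for key, value in record.items()
    }


invoice_policy = AgentPolicy(
    allowed_sources=frozenset({"erp_invoices", "vendor_master"}),
    redacted_fields=frozenset({"bank_account"}),
    retention_days=90,
)
```

Because the policy is frozen, a change to it has to arrive as a new object, which fits an approval process better than an in-place edit.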

Days 31 to 45: build the first two agents

- Assemble an invoice exception agent. Connect it to your planning system through a managed connector. Configure it to extract exception reasons, draft a resolution email, and route to the right queue.

- Build a service desk triage agent. Allow it to read recent tickets and knowledge base articles. Configure it to suggest a resolution and fill the ticket form. Limit it to tier one issues with clear resolution paths.

- Run both agents against the test harness. Record precision, recall, latency, and cost per item. Tune prompts, tools, and thresholds until you meet your targets.
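
The four numbers above are simple to compute once each harness item carries a ground truth label. The following sketch assumes a yes or no decision per item, for example "is this invoice an exception?". `HarnessResult` and `summarize` are illustrative names, not part of any product.

```python
from dataclasses import dataclass


@dataclass
class HarnessResult:
    expected: bool     # ground truth label for the item
    predicted: bool    # what the agent decided
    latency_s: float   # wall clock time for the item
    cost_usd: float    # model and tool cost for the item


def summarize(results: list[HarnessResult]) -> dict:
    """Compute precision, recall, mean latency, and cost per item."""
    tp = sum(1 for r in results if r.expected and r.predicted)
    fp = sum(1 for r in results if not r.expected and r.predicted)
    fn = sum(1 for r in results if r.expected and not r.predicted)
    n = len(results)
    return {
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "mean_latency_s": sum(r.latency_s for r in results) / n if n else 0.0,
        "cost_per_item_usd": sum(r.cost_usd for r in results) / n if n else 0.0,
    }
```

Precision shows how often a flagged item really was an exception. Recall shows how many real exceptions the agent caught. Tune toward whichever error is more expensive in your workflow.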

Days 46 to 60: build the third agent and wrap operations

- Build a contract clause extraction agent for legal operations. Point it to a curated clause library. Allow it to flag missing or risky terms and route to counsel.

- Wire alerts. Send anomalies and policy violations to the security team’s queue. Turn on daily activity reports and cost dashboards for the product owner.

- Prepare training. Write a one page quick start for each agent with examples, limits, and escalation paths. Record a short video demo for each.

Days 61 to 75: pilot in production

- Roll out each agent to 10 percent of the target user group. Require thumbs up or thumbs down feedback on every action and capture free text feedback.

- Hold office hours twice a week. Review what worked, what broke, and what needs to change. Ship fixes three times a week. Keep the backlog small and focused on real friction.

- Compare outcomes to baselines. Measure cycle time, error rate, and user effort. If the agent is slower than the baseline, pause and fix before growing the pilot.
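
One common way to pick the 10 percent is to hash each user into a stable bucket, so the same people stay in the pilot from day to day and widening the percentage only adds users. This is a sketch of that general technique, not a platform feature. `in_pilot` and the identifiers are hypothetical.

```python
import hashlib


def in_pilot(user_id: str, agent_name: str, percent: int) -> bool:
    """Deterministically place a user in or out of a percentage rollout."""
    # Hash agent and user together so each agent gets an independent cohort.
    digest = hashlib.sha256(f"{agent_name}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable value in 0..99
    return bucket < percent


# Everyone selected at 10 percent is still selected at any larger percentage.
early_access = in_pilot("user-42", "invoice-triage", 10)
```

Because the bucket depends only on the agent and user identifiers, no enrollment table is needed and the cohort survives restarts.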

Days 76 to 90: expand and harden

- Move to 50 percent of the target group if metrics hold. Turn on limited autonomy for the simplest cases. Keep human in the loop for exceptions.

- Add red team tests. Try prompt injection, data exfiltration, and tool misuse. Adjust policies and tools based on findings.

- Lock pricing. Negotiate seat tiers and partner agent subscriptions now that you have real usage and value data.

Days 91 to 100: declare production and scale

- Graduate the three agents. Announce them as supported services with known owners, service level objectives, and a change cadence.

- Publish the playbook. Turn your process into a repeatable template. Name the next five workflows and reuse everything you built.

- Create a small agent review board. Meet every two weeks to approve new agents, review metrics, and stop anything that drifts off policy.

Concrete examples that pay off quickly

- Sales briefing agent. Pulls the day’s meetings, reads recent emails and support tickets, fetches firmographic data, and drafts a two minute brief for each customer. Measure prep time saved and conversion lift.

- Customer support summarizer. Reads transcripts, updates the ticket, proposes a follow up email, and files a knowledge base update. Measure handle time and article reuse.

- Finance close helper. Reconciles small variances, flags policy exceptions, and drafts notes for review. Measure close time and exception rate.

Each of these can start with human in the loop, then move to partial autonomy for narrow, low risk cases.

How to govern without slowing down

- Define tiers of autonomy. Tier 0 is suggestion only. Tier 1 can act on low risk tasks under a dollar or access threshold. Tier 2 can act with post hoc review. Tier 3 acts only after explicit approval. Most agents will live in tier 0 or tier 1 at first.

- Set budget guardrails. Cap tokens or requests per user per day. Alert when a run exceeds a cost threshold. Review long running tasks weekly.

- Keep a golden dataset. Ten to fifty examples per workflow with ground truth answers. Re run them after every change to catch regressions before users do.

- Make rollback easy. Every agent should have a kill switch, a version history, and a way to revert to the last known good release.
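
The autonomy tiers and budget caps above translate naturally into a small decision function. This sketch makes two assumptions the text does not state: a tier 1 agent falls back to a suggestion when the amount reaches the threshold, and the budget cap is counted in requests per user per day. All names are illustrative.

```python
from enum import IntEnum


class Tier(IntEnum):
    SUGGEST_ONLY = 0          # never acts, only proposes
    ACT_UNDER_THRESHOLD = 1   # acts on low risk tasks under a limit
    ACT_WITH_POST_REVIEW = 2  # acts, then a human reviews afterward
    EXPLICIT_APPROVAL = 3     # acts only once a human has approved


def decide(tier: Tier, amount_usd: float, threshold_usd: float,
           approved: bool = False) -> str:
    """Return what the agent may do for one proposed action."""
    if tier == Tier.SUGGEST_ONLY:
        return "suggest"
    if tier == Tier.ACT_UNDER_THRESHOLD:
        # Assumption: at or over the threshold, fall back to a suggestion.
        return "act" if amount_usd < threshold_usd else "suggest"
    if tier == Tier.ACT_WITH_POST_REVIEW:
        return "act_and_queue_review"
    return "act" if approved else "await_approval"


def within_budget(requests_today: int, daily_cap: int) -> bool:
    """Budget guardrail: allow the next request only while under the daily cap."""
    return requests_today < daily_cap
```

Keeping the decision in one function gives the review board a single place to audit and a single place to tighten when an agent drifts off policy.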

Competitive context and why Google looks different

Microsoft, OpenAI, and Anthropic are all moving toward agent platforms. Google’s differentiator is breadth of deployment and a strong partner channel presented inside one governed surface. The curated agent catalog, the no code Agent Designer, and the option to run on Google Distributed Cloud create one standard from the laptop to the data center. That combination reduces integration work for technology teams and shortens the path from idea to impact for business owners.

This move also fits a larger market pattern. Vendors are racing to standardize runtimes, catalogs, and controls for production agents. We have covered the start of agent runtime wars and why horizontal kits like AgentKit turn demo bots into shippable agents. Gemini Enterprise is Google’s answer packaged for enterprise scale.

What to measure to prove value

- Unit cost per completed task. If an agent cannot beat the cost of manual work, change the design or shut it down.

- Cycle time. Track end to end, not just model time. The goal is to finish the job faster.

- Error rate with severity. Not all errors are equal. Track critical, major, and minor errors separately.

- Adoption by active use. Weekly active users and tasks per user tell you whether the agent fits into real work.

- Escalation ratio. Track how often a human reviewer rejected or edited the agent’s output, and record why.
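
Most of these measures can come from one per-task log. The sketch below shows one way to compute them; weekly active users would need user identifiers, which this record leaves out. It assumes unit cost divides all spend, including failed runs, by the number of completed tasks, and that cycle time is averaged over completed tasks only. `TaskRecord` and `scorecard` are hypothetical names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskRecord:
    completed: bool
    cost_usd: float
    cycle_time_s: float                   # end to end, not just model time
    human_changed_output: bool            # reviewer rejected or edited the output
    error_severity: Optional[str] = None  # "critical", "major", "minor", or None


def scorecard(tasks: list[TaskRecord]) -> dict:
    """Summarize cost, speed, escalations, and errors for a batch of tasks."""
    completed = [t for t in tasks if t.completed]
    total_cost = sum(t.cost_usd for t in tasks)  # failed runs still cost money
    return {
        "unit_cost_usd": total_cost / len(completed) if completed else None,
        "mean_cycle_time_s": (
            sum(t.cycle_time_s for t in completed) / len(completed)
            if completed else None
        ),
        "escalation_ratio": (
            sum(1 for t in tasks if t.human_changed_output) / len(tasks)
            if tasks else 0.0
        ),
        "errors_by_severity": dict(
            Counter(t.error_severity for t in tasks if t.error_severity)
        ),
    }
```

Charging failed runs to the completed ones keeps the unit cost honest, since an agent that retries often looks cheap per call and expensive per finished job.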

The takeaway

Agent stores are the new app stores. The October 9 launch turns that idea into a plan you can execute. Gemini Enterprise gives chief information officers a single place to consolidate shadow tools, ship domain specific agents with speed, and plug into a partner ecosystem that comes with governance. The fastest movers will not try to automate everything at once. They will start with three well chosen workflows, measure hard outcomes, and expand by template. The platform is ready. The next hundred days are enough time to prove it.